[toc]

Common loss functions

Here y_i denotes the true value and f_i denotes the predicted value.

0-1 loss function

L(y_i, f_i) = \left\{\begin{matrix} 1, ~y_i \neq f_i\\

0, ~y_i = f_i\end{matrix}\right.

Equivalent form:

L(y_i, f_i) = \frac{1}{2}(1 - sign(y_i\cdot f_i)), ~y_i\in\{\pm1\}
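As a quick sanity check, the sign form can be compared against the direct definition (loss 1 for a misclassification, 0 otherwise) on all four label/prediction pairs. This is a minimal sketch; the helper names `sign`, `zero_one_loss` and `zero_one_loss_sign` are illustrative and not from the original note, and both y_i and f_i are assumed to lie in {-1, +1}.

```python
def sign(v):
    """Return +1, 0 or -1 according to the sign of v."""
    return (v > 0) - (v < 0)


def zero_one_loss(y, f):
    """Direct definition: 1 for a misclassification, 0 otherwise."""
    return 0 if y == f else 1


def zero_one_loss_sign(y, f):
    """Sign form (1 - sign(y * f)) / 2, assuming y and f are in {-1, +1}."""
    return (1 - sign(y * f)) / 2


# Enumerate every (label, prediction) pair and evaluate both forms.
pairs = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
direct = [zero_one_loss(y, f) for y, f in pairs]
via_sign = [zero_one_loss_sign(y, f) for y, f in pairs]

print(direct)
print(via_sign)
```

The two lists agree element by element, which is what the equivalence claims.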

Perceptron loss function (perceptron)

L(y_i, f_i) = \left\{\begin{matrix} 1, ~|y_i - f_i| > t\\

0, ~|y_i - f_i| \leq t\end{matrix}\right.

Equivalent form:

L(y_i, f_i) = max\{0,~-(y_i\cdot f_i)\}, ~y_i\in\{\pm1\}

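Proof

When both y_i and f_i take values in {-1, +1}, |y_i - f_i| can only be 0 or 2. For any threshold 0 \leq t < 2, the first expression is therefore equivalent to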

L(y_i, f_i) = \left\{\begin{matrix} 1, ~|y_i - f_i| = 2 \iff y_i\cdot f_i = -1\\

0, ~|y_i - f_i| = 0 \iff y_i\cdot f_i = 1\end{matrix}\right.

which in turn is equivalent to

L(y_i, f_i) = max\{0,~-(y_i\cdot f_i)\}, ~y_i\in\{\pm1\}
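The argument above can be checked by brute force. The sketch below assumes f_i is restricted to {-1, +1} and picks the threshold t = 1 (any 0 <= t < 2 would behave the same way); the function names are illustrative only.

```python
def threshold_loss(y, f, t=1.0):
    """Thresholded form: 1 if |y - f| > t, else 0 (t = 1 is an assumed value)."""
    return 1 if abs(y - f) > t else 0


def perceptron_loss(y, f):
    """Margin form: max(0, -(y * f)) for y in {-1, +1}."""
    return max(0, -(y * f))


# With f restricted to {-1, +1}, both forms coincide on all four pairs.
pairs = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
thresholded = [threshold_loss(y, f) for y, f in pairs]
margin_form = [perceptron_loss(y, f) for y, f in pairs]
print(thresholded, margin_form)

# With a real-valued score the margin form grows linearly with the error.
real_valued = perceptron_loss(1, -2.5)
print(real_valued)
```

Note that the two forms only coincide for predictions in {-1, +1}; with a real-valued score, the margin form penalizes a misclassification in proportion to how far the score is on the wrong side.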

Hinge loss function (SVM)

L(y_i, f_i) = max\{0,~1 - y_i\cdot f_i\},~ y_i \in \{\pm1\}
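A minimal sketch of the hinge loss follows, assuming f_i is a real-valued score such as the output of a linear model. The function name `hinge_loss` and the sample scores are illustrative.

```python
def hinge_loss(y, f):
    """Hinge loss max(0, 1 - y * f) for a label y in {-1, +1} and a score f."""
    return max(0.0, 1.0 - y * f)


# Correct with margin >= 1: no penalty.
confident = hinge_loss(1, 2.0)
# Correct but inside the margin: small penalty.
inside_margin = hinge_loss(1, 0.3)
# Wrong side of the decision boundary: penalty above 1.
misclassified = hinge_loss(-1, 0.3)

print(confident, inside_margin, misclassified)
```

Unlike the perceptron loss, the hinge loss still penalizes correctly classified points whose margin y_i * f_i is below 1.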

Log loss function (logistic regression)

L(y_i, f_i) = -\left(y_i\log f_i + (1-y_i)\log{(1-f_i)}\right),~y_i\in \{0,1\}

where f is the sigmoid of the linear score:

f(x) = \frac{1}{1 + \exp(-w^T\cdot x)}

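If f_i is instead taken to be the linear score w^T\cdot x_i (rather than the probability), this is equivalent to

L(y_i, f_i) = \log(1 + \exp(-y_i\cdot f_i)),~ y_i \in \{\pm1\}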

Proof

Substituting the sigmoid into the first expression, with y_i \in \{0, 1\}, gives

L(y_i,f_i) = \left\{\begin{matrix} \log(1+\exp(-w^T\cdot x)),~y_i = 1\\

\log(1+\exp(w^T\cdot x)),~y_i=0\end{matrix}\right.

Relabeling the negative class as -1, so that y_i \in \{-1, +1\}, this becomes

L(y_i,f_i) = \left\{\begin{matrix} \log(1+\exp(-w^T\cdot x)),~y_i = 1\\

\log(1+\exp(w^T\cdot x)),~y_i=-1\end{matrix}\right.

which, writing f_i = w^T\cdot x for the linear score, collapses to the single expression

L(y_i, f_i) = \log(1 + \exp(-y_i\cdot f_i)),~ y_i \in \{\pm1\}
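The equivalence can also be checked numerically. The sketch below assumes the margin form is log(1 + exp(-y * s)), where s = w^T x is the linear score and y is in {-1, +1}, and compares it with the cross-entropy form evaluated on the sigmoid of s with y in {0, 1}. The function names and the sample scores are illustrative.

```python
import math


def sigmoid(s):
    """Logistic function 1 / (1 + exp(-s))."""
    return 1.0 / (1.0 + math.exp(-s))


def cross_entropy(y01, s):
    """Cross-entropy form with a label in {0, 1} and probability sigmoid(s)."""
    p = sigmoid(s)
    return -(y01 * math.log(p) + (1 - y01) * math.log(1 - p))


def logistic_margin_loss(ypm, s):
    """Margin form log(1 + exp(-y * s)) with a label in {-1, +1}."""
    return math.log(1.0 + math.exp(-ypm * s))


# Compare both forms over a few scores and both classes.
scores = [-2.0, -0.5, 0.0, 0.5, 2.0]
max_gap = 0.0
for s in scores:
    for y01 in (0, 1):
        ypm = 2 * y01 - 1  # map {0, 1} to {-1, +1}
        gap = abs(cross_entropy(y01, s) - logistic_margin_loss(ypm, s))
        max_gap = max(max_gap, gap)

print(max_gap)
```

The largest gap is at the level of floating-point rounding, so the two forms differ only in how the labels are encoded.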

Exponential loss function (AdaBoost)

L(y_i,f_i)=\exp(-y_i\cdot f_i), y_i\in \{\pm1\}

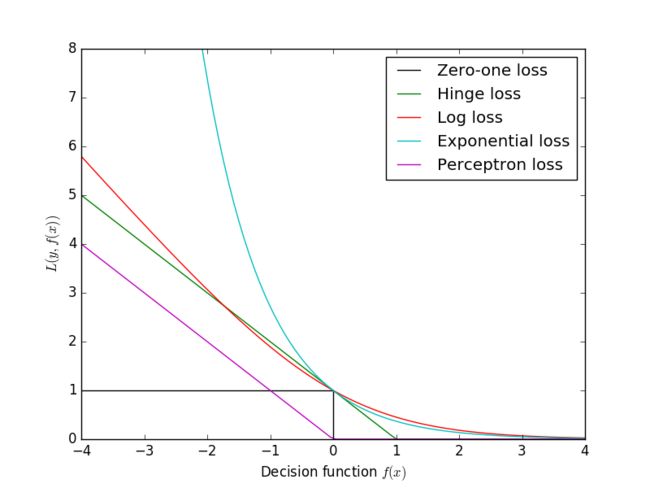

Plots of several loss functions
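To get a feel for how these curves compare without the figure, each classification loss can be tabulated as a function of the margin m = y_i * f_i. This is a rough sketch: the dictionary `LOSSES`, the chosen margins, and the use of the logistic loss in its margin form log(1 + exp(-m)) are assumptions made for illustration.

```python
import math


def sign(v):
    """Return +1, 0 or -1 according to the sign of v."""
    return (v > 0) - (v < 0)


# Each loss written as a function of the margin m = y * f, with y in {-1, +1}.
LOSSES = {
    "zero_one": lambda m: (1 - sign(m)) / 2,
    "perceptron": lambda m: max(0.0, -m),
    "hinge": lambda m: max(0.0, 1.0 - m),
    "logistic": lambda m: math.log(1.0 + math.exp(-m)),
    "exponential": lambda m: math.exp(-m),
}

margins = [-2.0, -1.0, 0.0, 1.0, 2.0]
table = {name: [loss(m) for m in margins] for name, loss in LOSSES.items()}

# Print one row per loss, one column per margin.
for name, values in table.items():
    print(f"{name:12s}", "  ".join(f"{v:6.3f}" for v in values))
```

Every loss in the table is non-increasing in the margin; the exponential loss grows fastest as the margin becomes negative, while the hinge and perceptron losses grow only linearly.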

Regression loss functions

Squared loss function

L(y_i,f_i)=(y_i - f_i)^2

Absolute loss function

L(y_i,f_i)=|y_i-f_i|
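Both regression losses are per-sample quantities; in practice they are usually averaged over a dataset. The sketch below does this on a small made-up set of targets and predictions. The sample values and the names `mse` and `mae` are illustrative, not from the original note.

```python
def squared_loss(y, f):
    """Squared loss (y - f)^2 for a single sample."""
    return (y - f) ** 2


def absolute_loss(y, f):
    """Absolute loss |y - f| for a single sample."""
    return abs(y - f)


# Hypothetical targets and predictions, chosen only for illustration.
y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]

# Average each per-sample loss over the dataset.
mse = sum(squared_loss(y, f) for y, f in zip(y_true, y_pred)) / len(y_true)
mae = sum(absolute_loss(y, f) for y, f in zip(y_true, y_pred)) / len(y_true)

print(mse, mae)
```

Because the squared loss grows quadratically with the residual, it weights large errors more heavily than the absolute loss does, so it is more sensitive to outliers.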

References

- http://blog.csdn.net/google19890102/article/details/50522945

- http://blog.csdn.net/heyongluoyao8/article/details/52462400

- http://www.csuldw.com/2016/03/26/2016-03-26-loss-function/