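I. Overview and Notes

1.1 Installation Overview

This article installs and configures Oracle 11gR2 RAC (11.2.0.3) on OEL 5.8 x86_64. The environment runs on VMware Workstation 8: two virtual machines with OEL 5.8 x86_64 Linux serve as the RAC nodes, and one openfiler virtual machine provides the iSCSI service.

The installation and configuration of the openfiler virtual machine is not covered here.

I have installed Oracle 11gR2 RAC many times before. The main purpose of this article is to test configuring ASM without asmlib and to cut down on the SSH equivalence setup. These two points are also where this article differs from most other Oracle 11g RAC installation write-ups:

1. ASM is configured with udev rather than asmlib.

2. SSH equivalence for the grid and oracle users is configured with the method added in Oracle 11gR2 RAC (11.2.0.3 is used here).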

Source: http://koumm.blog.51cto.com

Reference documents

Why ASMLIB and why not?

http://www.oracledatabase12g.com/archives/why-asmlib-and-why-not.html

Using the udev service to fix ASM storage device names for RAC

http://www.oracledatabase12g.com/archives/utilize-udev-resolve-11gr2-rac-asm-device-name.html

1.2 Software Notes

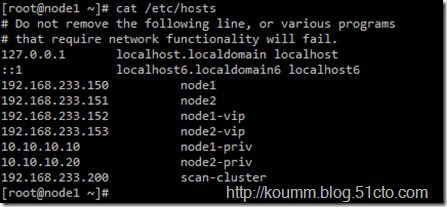

1. IP地址规划

环境说明:

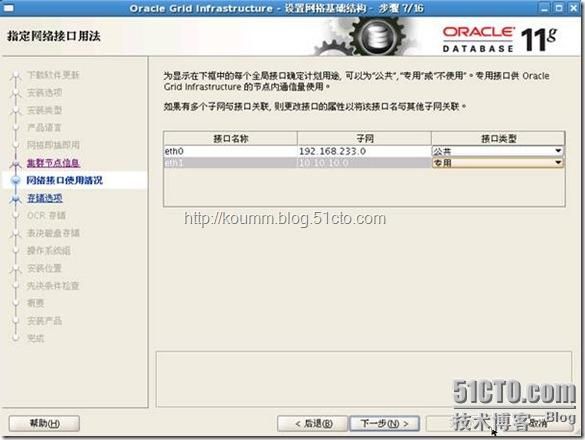

1)192.168.233.x/255.255.255.0 网段是应用,iscsi网段,接第一块网卡。

2)10.10.10.x/255.255.255.0网段是集群内部心跳网段,接第二块网卡。

2. 软件准备

3. 共享磁盘划分

二、安装准备

2.1 配置本地网络

需要在node1,node2节点上分别安装与配置。

1. 配置本地hosts文件

2. 修改hostname名称

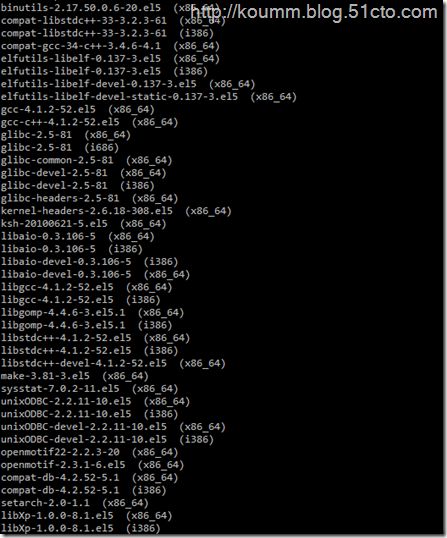
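The hosts entries themselves are not reproduced in the text. As a rough sketch of what they might look like: the two subnets come from section 1.2 and 10.10.10.10 is the address node2 later uses to reach node1, but every other address and the names node1-vip, node2-vip, node1-priv, node2-priv and rac-scan are hypothetical placeholders.

```shell
# Print example /etc/hosts entries for a two-node RAC.
# All addresses except 10.10.10.10 are hypothetical placeholders.
rac_hosts() {
cat <<'EOF'
# public network (first NIC)
192.168.233.11  node1
192.168.233.12  node2
# virtual IPs (same subnet as the public network)
192.168.233.21  node1-vip
192.168.233.22  node2-vip
# private interconnect (second NIC)
10.10.10.10     node1-priv
10.10.10.11     node2-priv
# SCAN address resolved through /etc/hosts
192.168.233.30  rac-scan
EOF
}

rac_hosts   # review the output, then append it to /etc/hosts as root on both nodes
```

Both nodes need identical entries, so it is easiest to generate the text once and copy it over.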

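2.2 Install the required packages

OEL 5.8 x64 is used. During OS installation, the graphical desktop, development tools, development libraries, and legacy software development package groups were selected.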

# rpm -q binutils compat-libstdc++-33 compat-gcc-34-c++ elfutils-libelf elfutils-libelf-devel elfutils-libelf-devel-static gcc gcc-c++ glibc glibc-common glibc-devel glibc-headers kernel-headers ksh libaio libaio-devel libgcc libgomp libstdc++ libstdc++-devel make sysstat unixODBC unixODBC-devel openmotif22 openmotif compat-db setarch libXp
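The query above prints one line per package, which makes the missing ones easy to overlook. A small sketch that prints only the packages that are not installed; the RPM_QUERY variable is a hypothetical hook (not part of rpm) so the loop can be tried on a machine without rpm, and the package list is shortened for illustration.

```shell
# List the packages that `rpm -q` does not find.
# RPM_QUERY is a hypothetical override so the loop can be tried without rpm.
missing_packages() {
    for pkg in "$@"; do
        if ! "${RPM_QUERY:-rpm}" -q "$pkg" >/dev/null 2>&1; then
            echo "$pkg"
        fi
    done
}

missing_packages binutils compat-libstdc++-33 gcc gcc-c++ glibc-devel \
    libaio libaio-devel ksh make sysstat unixODBC unixODBC-devel
```

Empty output means every listed package is present in at least one architecture; it does not confirm that both the i386 and x86_64 builds are installed.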

# Packages needed on OEL 5.8 x64 with the UEK kernel

# mount /dev/cdrom /mnt
# cd /mnt/Server/
rpm -ivh compat-db-4.2.52-5.1.i386.rpm compat-db-4.2.52-5.1.x86_64.rpm
rpm -ivh libaio-devel-0.3.106-5.x86_64.rpm libaio-devel-0.3.106-5.i386.rpm
rpm -ivh sysstat-7.0.2-11.el5.x86_64.rpm numactl-devel-0.9.8-12.0.1.el5_6.x86_64.rpm
rpm -ivh unixODBC-2.2.11-10.el5.x86_64.rpm unixODBC-devel-2.2.11-10.el5.x86_64.rpm unixODBC-libs-2.2.11-10.el5.x86_64.rpm
rpm -ivh unixODBC-2.2.11-10.el5.i386.rpm unixODBC-devel-2.2.11-10.el5.i386.rpm unixODBC-libs-2.2.11-10.el5.i386.rpm
rpm -ivh libXp-1.0.0-8.1.el5.i386.rpm libXp-1.0.0-8.1.el5.x86_64.rpm
rpm -ivh openmotif22-2.2.3-20.x86_64.rpm openmotif-2.3.1-6.el5.x86_64.rpm

2.3 Install rlwrap for use with sqlplus

wget http://utopia.knoware.nl/~hlub/uck/rlwrap/rlwrap-0.37.tar.gz
tar zxvf rlwrap-0.37.tar.gz
cd rlwrap-0.37
./configure
make && make install
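Once rlwrap is built, it is typically hooked in through shell aliases so that sqlplus gains command history and line editing. A minimal sketch, assuming the lines go into ~/.bash_profile of the oracle and grid users; the rman alias is an optional extra that the article does not mention.

```shell
# Wrap sqlplus (and optionally rman) in rlwrap for history and line editing.
# Intended for ~/.bash_profile of the oracle and grid users.
alias sqlplus='rlwrap sqlplus'
alias rman='rlwrap rman'
```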

2.4 Create the Oracle accounts and DBA groups

1. Create the groups and users with the following commands (as root):

/usr/sbin/groupadd -g 501 oinstall
/usr/sbin/groupadd -g 502 dba
/usr/sbin/groupadd -g 503 oper
/usr/sbin/groupadd -g 504 asmadmin
/usr/sbin/groupadd -g 505 asmoper
/usr/sbin/groupadd -g 506 asmdba
/usr/sbin/useradd -u 501 -g oinstall -G dba,asmdba,asmadmin,asmoper grid
/usr/sbin/useradd -u 502 -g oinstall -G dba,oper,asmdba oracle

2. Set the passwords directly

echo "grid" | passwd --stdin grid
echo "oracle" | passwd --stdin oracle

Note: check the IDs and make sure the group IDs and user IDs are identical on both nodes; the -g option of groupadd (and -u of useradd) pins them to fixed values.
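One way to carry out that check is to print the numeric IDs on each node and compare them by eye. A minimal sketch; ids_of is a hypothetical helper name, not a system command.

```shell
# Print "uid:gid" for a user, or fail if the user does not exist.
ids_of() {
    uid=$(id -u "$1" 2>/dev/null) || return 1
    gid=$(id -g "$1" 2>/dev/null) || return 1
    echo "$uid:$gid"
}

# Run on each node; the output should be identical on both
for user in grid oracle; do
    ids_of "$user" || echo "$user: no such user"
done
```

With the groupadd and useradd commands above, grid should report 501:501 and oracle 502:501 on both nodes.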

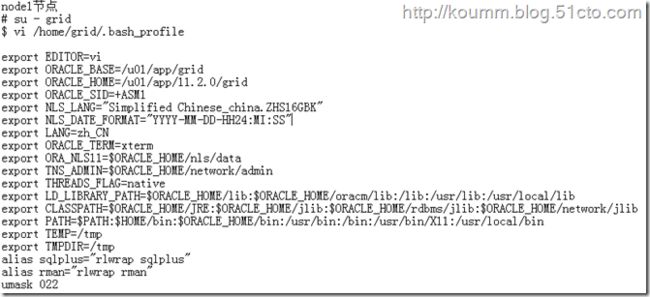

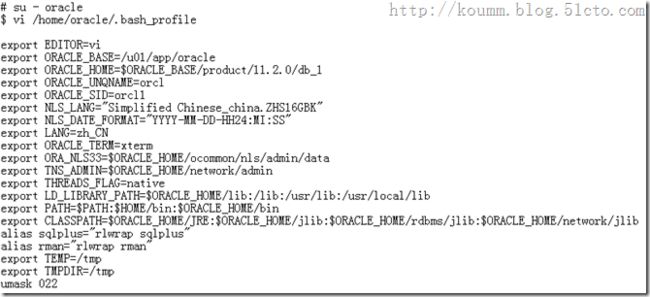

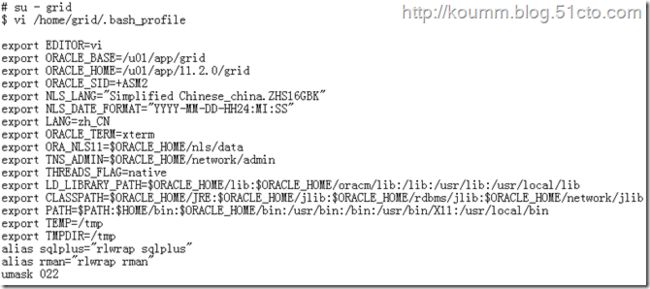

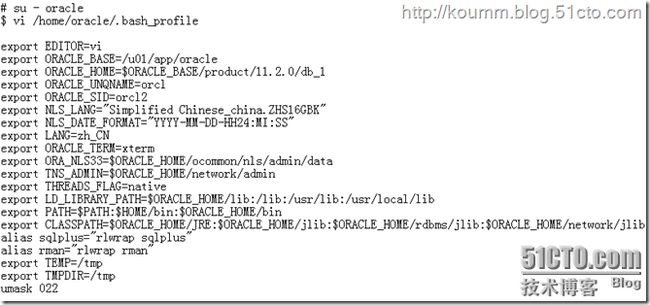

2.5 Set the environment variables for the oracle user

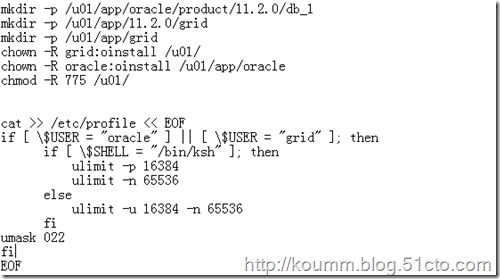

1. Create the installation directories on node1 and node2

2. Create the environment variables for oracle and grid

node1

node2
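The per-node profile contents are not reproduced in the text. The sketch below prints what such ~/.bash_profile lines might look like. The two ORACLE_HOME paths match the directories used later in this article and the instance names follow the database name orcl, but ORACLE_BASE=/u01/app/grid for the grid user is an assumption, as are the helper names grid_profile and oracle_profile.

```shell
# Print ~/.bash_profile lines for the grid user on node N (1 or 2).
# ORACLE_BASE=/u01/app/grid is an assumed value.
grid_profile() {
    cat <<EOF
export ORACLE_BASE=/u01/app/grid
export ORACLE_HOME=/u01/app/11.2.0/grid
export ORACLE_SID=+ASM$1
export PATH=\$ORACLE_HOME/bin:\$PATH
EOF
}

# Same for the oracle user; the instance name follows the database name orcl.
oracle_profile() {
    cat <<EOF
export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME=\$ORACLE_BASE/product/11.2.0/db_1
export ORACLE_SID=orcl$1
export PATH=\$ORACLE_HOME/bin:\$PATH
EOF
}

grid_profile 1      # node1; use 2 on node2
oracle_profile 1
```

The only difference between the nodes is the instance number at the end of ORACLE_SID.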

2.6 Adjust the kernel parameters

On node1 and node2:

# vi /etc/sysctl.conf

fs.aio-max-nr = 1048576
fs.file-max = 6815744
kernel.shmall = 2097152
kernel.shmmax = 2147483648
kernel.shmmni = 4096
kernel.sem = 250 32000 100 128
net.ipv4.ip_local_port_range = 9000 65500
net.core.rmem_default = 262144
net.core.rmem_max = 4194304
net.core.wmem_default = 262144
net.core.wmem_max = 1048576

# Note: the parameters above should be adjusted to the actual environment.

# sysctl -p
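After editing the file it can help to confirm that the single-value settings are at least as large as intended. A minimal sketch that reads a sysctl.conf-style file; sysctl_at_least is a hypothetical helper, and the three keys checked are just a sample from the list above.

```shell
# Succeed if KEY in FILE has a single numeric value >= MIN.
# Multi-value keys such as kernel.sem are not handled by this sketch.
sysctl_at_least() {
    key=$1 min=$2 file=${3:-/etc/sysctl.conf}
    val=$(awk -F'=' -v k="$key" '
        { gsub(/[ \t]/, "", $1) }
        $1 == k { gsub(/[ \t]/, "", $2); v = $2 }
        END { print v }' "$file" 2>/dev/null)
    [ -n "$val" ] && [ "$val" -ge "$min" ] 2>/dev/null
}

for check in fs.aio-max-nr:1048576 fs.file-max:6815744 kernel.shmmni:4096; do
    if sysctl_at_least "${check%%:*}" "${check##*:}"; then
        echo "ok      ${check%%:*}"
    else
        echo "check   ${check%%:*}"
    fi
done
```

This only inspects the file; `sysctl -p` still has to be run for the values to take effect.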

2.7 Adjust the file descriptor limits

On node1 and node2:

cat >> /etc/security/limits.conf << EOF
oracle soft nproc 2047
oracle hard nproc 16384
oracle soft nofile 1024
oracle hard nofile 65536
oracle soft stack 10240
grid soft nproc 2047
grid hard nproc 16384
grid soft nofile 1024
grid hard nofile 65536
grid soft stack 10240
EOF

2.8 Adjust login authentication

On node1 and node2:

# Add the following

cat >> /etc/pam.d/login << EOF
session required /lib64/security/pam_limits.so
EOF
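Appending with `cat >>` adds a duplicate line every time the step is repeated. A minimal sketch of an idempotent alternative; append_once is a hypothetical helper, demonstrated here on a scratch file rather than on /etc/pam.d/login.

```shell
# Append LINE to FILE only if an identical line is not already there.
append_once() {
    file=$1 line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demonstration on a scratch file; as root the target would be /etc/pam.d/login
target=$(mktemp)
append_once "$target" "session required /lib64/security/pam_limits.so"
append_once "$target" "session required /lib64/security/pam_limits.so"
wc -l < "$target"
```

The second call finds the line already present and leaves the file unchanged, so the step can be re-run safely on either node.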

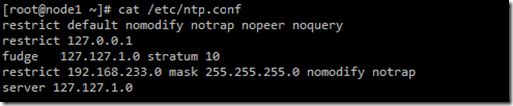

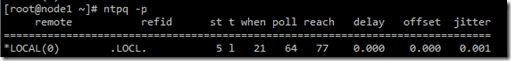

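2.9 Configure time synchronization

Although Oracle does not recommend ntpdate, this example still uses ntpdate for time synchronization; time-stream could be used as well.

1. Use the local time of node1 as the reference.

[root@node1 ~]# service ntpd start

Starting ntpd: [ OK ]

# vi /etc/sysconfig/ntpd

# Change it as follows:

OPTIONS="-x -u ntp:ntp -p /var/run/ntpd.pid"
SYNC_HWCLOCK=no
NTPDATE_OPTIONS=""

# Then restart the ntp service

2. On node2, add a cron job that synchronizes the time with node1 once a minute.

# crontab -l

*/1 * * * * /usr/sbin/ntpdate 10.10.10.10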

2.10 Attach the shared disks and create partitions

1. The shared disks are iSCSI shared disks

node1, node2

# rpm -ivh iscsi-initiator-utils-6.2.0.871-0.16.el5.i386.rpm
chkconfig --level 2345 iscsi on
service iscsi start

2. Set up the iSCSI service

# iscsiadm --mode discovery --type sendtargets --portal 192.168.233.130

192.168.233.130:3260,1 iqn.2006-01.com.openfiler:tsn.713bbba5efdb
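Discovery only records the target; the disks appear in fdisk once the initiator has logged in, a step the article does not show. A minimal sketch that turns the discovery output into the matching login commands; login_commands is a hypothetical helper, and the commands are printed rather than executed so they can be reviewed first.

```shell
# Turn sendtargets discovery output into iscsiadm login commands.
# Each input line looks like "IP:PORT,TPGT IQN".
login_commands() {
    while read -r portal iqn; do
        [ -n "$iqn" ] || continue
        echo "iscsiadm --mode node --targetname $iqn --portal ${portal%%,*} --login"
    done
}

echo "192.168.233.130:3260,1 iqn.2006-01.com.openfiler:tsn.713bbba5efdb" | login_commands
```

On the nodes the discovery command itself can be piped into the function, and the printed command then run as root on node1 and node2.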

[root@node1 ~]# fdisk -l

Disk /dev/sda: 37.5 GB, 37580963840 bytes
255 heads, 63 sectors/track, 4568 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/sda1   *           1          13      104391   83  Linux
/dev/sda2              14        4568    36588037+  8e  Linux LVM

Disk /dev/dm-0: 33.2 GB, 33252442112 bytes
255 heads, 63 sectors/track, 4042 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk /dev/dm-0 doesn't contain a valid partition table

Disk /dev/dm-1: 4194 MB, 4194304000 bytes
255 heads, 63 sectors/track, 509 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk /dev/dm-1 doesn't contain a valid partition table

Disk /dev/sdb: 536 MB, 536870912 bytes
17 heads, 61 sectors/track, 1011 cylinders
Units = cylinders of 1037 * 512 = 530944 bytes
Disk /dev/sdb doesn't contain a valid partition table

Disk /dev/sdc: 536 MB, 536870912 bytes
17 heads, 61 sectors/track, 1011 cylinders
Units = cylinders of 1037 * 512 = 530944 bytes
Disk /dev/sdc doesn't contain a valid partition table

Disk /dev/sdd: 536 MB, 536870912 bytes
17 heads, 61 sectors/track, 1011 cylinders
Units = cylinders of 1037 * 512 = 530944 bytes
Disk /dev/sdd doesn't contain a valid partition table

Disk /dev/sdf: 536 MB, 536870912 bytes
17 heads, 61 sectors/track, 1011 cylinders
Units = cylinders of 1037 * 512 = 530944 bytes
Disk /dev/sdf doesn't contain a valid partition table

Disk /dev/sde: 536 MB, 536870912 bytes
17 heads, 61 sectors/track, 1011 cylinders
Units = cylinders of 1037 * 512 = 530944 bytes
Disk /dev/sde doesn't contain a valid partition table

Disk /dev/sdg: 4294 MB, 4294967296 bytes
133 heads, 62 sectors/track, 1017 cylinders
Units = cylinders of 8246 * 512 = 4221952 bytes
Disk /dev/sdg doesn't contain a valid partition table

Disk /dev/sdh: 4294 MB, 4294967296 bytes
133 heads, 62 sectors/track, 1017 cylinders
Units = cylinders of 8246 * 512 = 4221952 bytes
Disk /dev/sdh doesn't contain a valid partition table

Disk /dev/sdi: 4294 MB, 4294967296 bytes
133 heads, 62 sectors/track, 1017 cylinders
Units = cylinders of 8246 * 512 = 4221952 bytes
Disk /dev/sdi doesn't contain a valid partition table

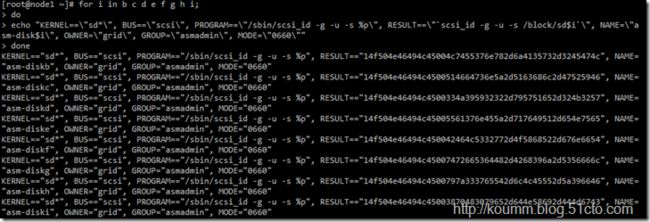

2.11 Configure the ASM disks with udev

1. Create the udev rules on node1

node1, node2

1) Generate the udev rules with a script

for i in b c d e f g h i; do
  echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id -g -u -s %p\", RESULT==\"`scsi_id -g -u -s /block/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\""
done

Original source of the script: http://www.oracledatabase12g.com/archives/utilize-udev-resolve-11gr2-rac-asm-device-name.html
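The heavy quote escaping in that loop is easy to get wrong when retyping it. As an alternative sketch (not the referenced author's script), the same rule text can be produced with printf inside a small function; asm_rule is a hypothetical helper name, and the SCSI ID is passed in as an argument so the formatting can be tried on any machine.

```shell
# Print one udev rule for a disk letter ($1) and its SCSI ID ($2).
asm_rule() {
    printf 'KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %%p", RESULT=="%s", NAME="asm-disk%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$2" "$1"
}

# On the nodes the ID would come from: /sbin/scsi_id -g -u -s /block/sd$i
asm_rule b 14f504e46494c45003374436e755a2d4a4368312d4a32774e
```

The `%%p` in the format string becomes the literal `%p` that udev substitutes with the device path at rule evaluation time.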

2) Create the udev rules file for the ASM disks

# vi /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="14f504e46494c45003374436e755a2d4a4368312d4a32774e", NAME="asm-diskb", OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="14f504e46494c45006332716b68452d624168392d36743666", NAME="asm-diskc", OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="14f504e46494c45003968715271782d734c786b2d576c5133", NAME="asm-diskd", OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="14f504e46494c45006756684c45352d6d585a442d706f5242", NAME="asm-diske", OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="14f504e46494c45006446797661732d375a30702d6c78384e", NAME="asm-diskf", OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="14f504e46494c45003171545656422d525a68732d47555849", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="14f504e46494c4500797a333765542d6c4c45552d5a396646", NAME="asm-diskh", OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="14f504e46494c45003870483079652d644e58692d444d6743", NAME="asm-diski", OWNER="grid", GROUP="asmadmin", MODE="0660"

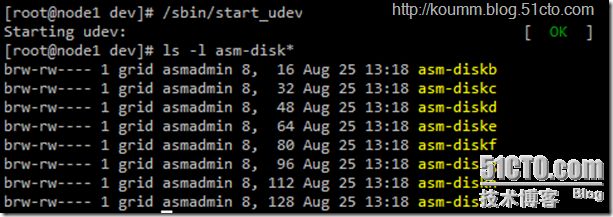

3) Start the udev service

# /sbin/start_udev

Starting udev: [ OK ]

Check that it worked

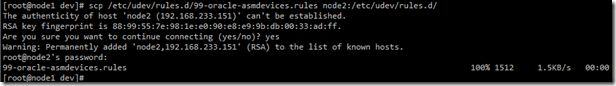

2. Copy the /etc/udev/rules.d/99-oracle-asmdevices.rules file to node2

node1

node2

[root@node2 ~]# /sbin/start_udev

Starting udev: [ OK ]

[root@node2 ~]# ls -l /dev/asm-disk*

brw-rw---- 1 grid asmadmin 8,  16 Aug 25 13:22 /dev/asm-diskb
brw-rw---- 1 grid asmadmin 8,  32 Aug 25 13:22 /dev/asm-diskc
brw-rw---- 1 grid asmadmin 8,  48 Aug 25 13:22 /dev/asm-diskd
brw-rw---- 1 grid asmadmin 8,  64 Aug 25 13:22 /dev/asm-diske
brw-rw---- 1 grid asmadmin 8,  80 Aug 25 13:22 /dev/asm-diskf
brw-rw---- 1 grid asmadmin 8,  96 Aug 25 13:22 /dev/asm-diskg
brw-rw---- 1 grid asmadmin 8, 112 Aug 25 13:22 /dev/asm-diskh
brw-rw---- 1 grid asmadmin 8, 128 Aug 25 13:22 /dev/asm-diski

III. Install Oracle Grid Infrastructure

3.1 Prepare and upload the installation packages

node1:

# su - grid

Upload the following three packages to /u01

p10404530_112030_Linux-x86-64_1of7.zip

p10404530_112030_Linux-x86-64_2of7.zip

p10404530_112030_Linux-x86-64_3of7.zip

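Note: packages 1 and 2 are the Oracle database installation media; package 3 is the Grid Infrastructure installation media.

Note: these are the latest Oracle 11g release at the time of writing, 11.2.0.3.0.

Unpack the three archives with the following commands: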

# unzip p10404530_112030_Linux-x86-64_1of7.zip

# unzip p10404530_112030_Linux-x86-64_2of7.zip

# unzip p10404530_112030_Linux-x86-64_3of7.zip

node2:

Upload the following package to /u01

p10404530_112030_Linux-x86-64_3of7.zip

# unzip p10404530_112030_Linux-x86-64_3of7.zip

3.2 Pre-installation environment checks

1. Install the cvuqdisk RPM

Install it as root on both node1 and node2.

# cd /u01/grid/rpm

# ls

cvuqdisk-1.0.9-1.rpm

[root@node1 rpm]# rpm -vih cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

1:cvuqdisk ########################################### [100%]

[root@node1 rpm]#

2. Run the pre-installation check

On node1 and node2:

# su - grid

$ cd /u01/grid

$ ./runcluvfy.sh stage -pre crsinst -n node1,node2 -fixup -verbose

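The output is omitted. Because SSH equivalence was not set up beforehand, the check does not pass; it is meant for the case where SSH equivalence has been configured in advance.

3.3 Install Grid Infrastructure

On node1; the clusterware only needs to be installed from one node.

Use a Linux graphical session, either directly on the console or through VNC or a similar tool.

# xhost +

# su - grid

$ cd /u01/grid

$ ./runInstaller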

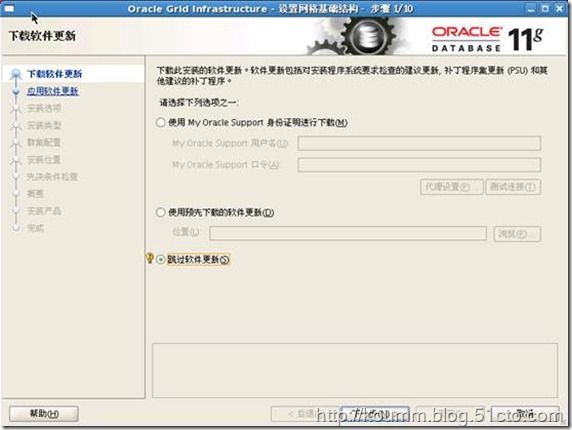

1.跳过软件更新

2.为集群安装与配置grid

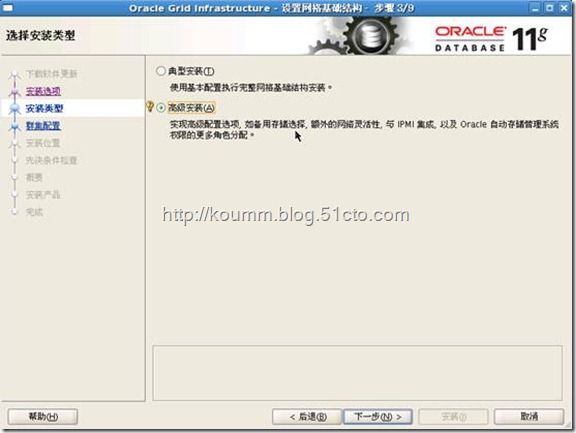

3.高级安装

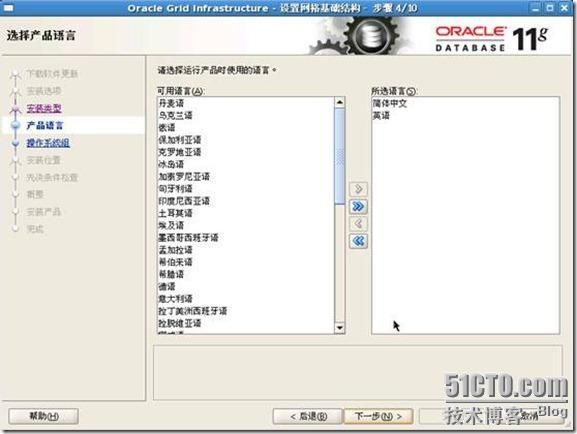

4.选择产品语言

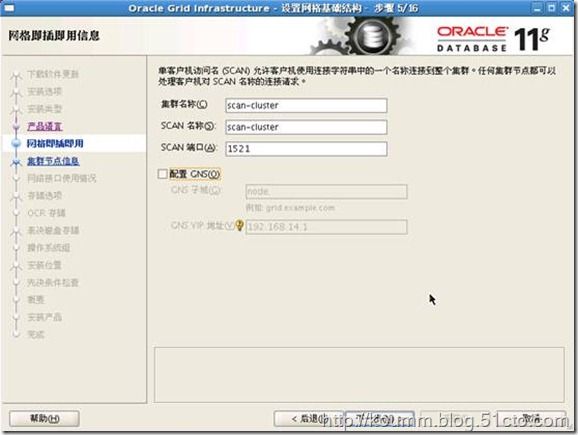

5.配置集群名称

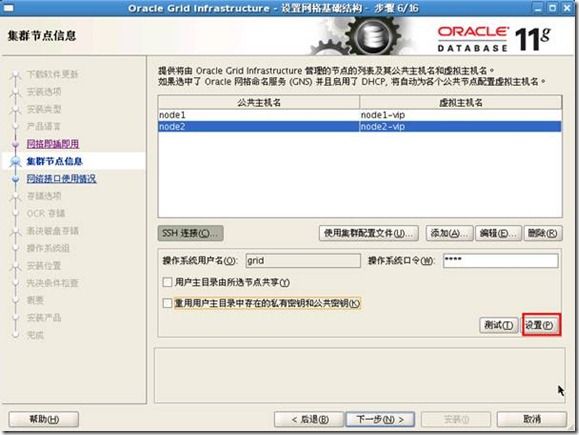

6.添加集群节点

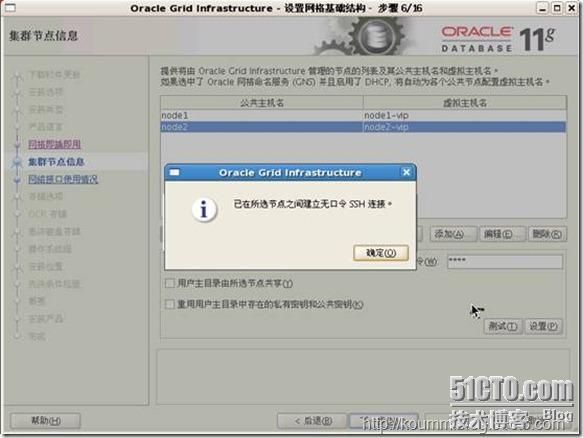

7.验证节点node2

实验内容之一,可以不需要事先做ssh等效。

8.指定网络接口类型

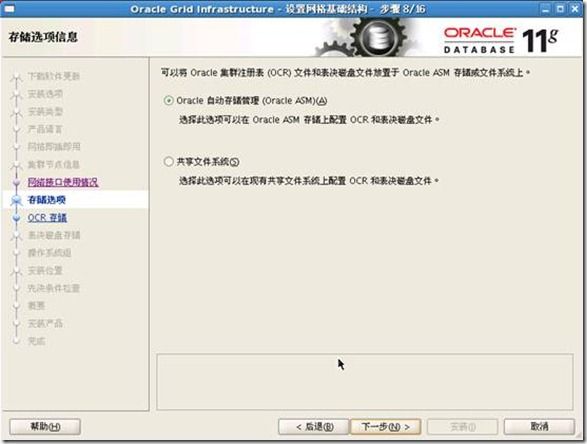

9.配置oracle asm

10.配置CRS ASM磁盘组

1)通过查看搜索路径查看asm磁盘组:/dev/asm*

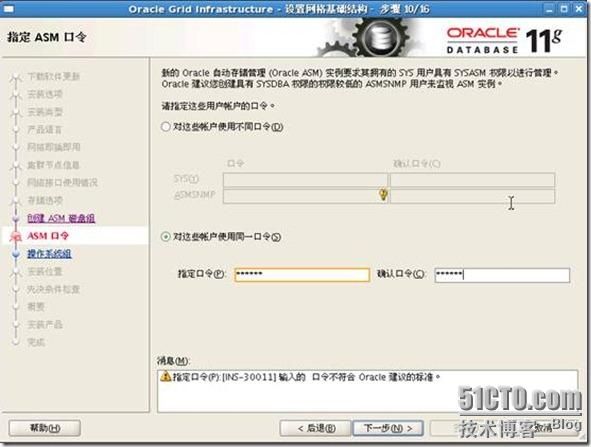

11.设置SYS/ASMSNMP口令(有时无法输入密码,修改DG的名称,再试就OK了)

12.不使用IPMI接口。

13.配置操作系统组

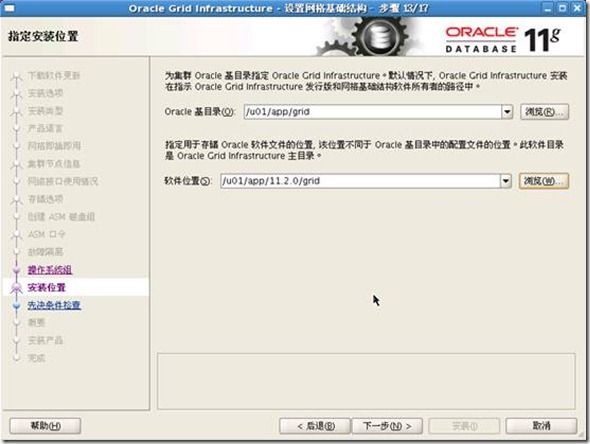

14.指定grid安装位置

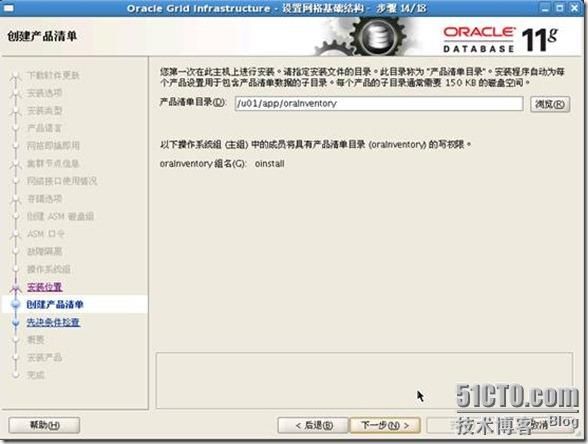

15.指定产口清单目录

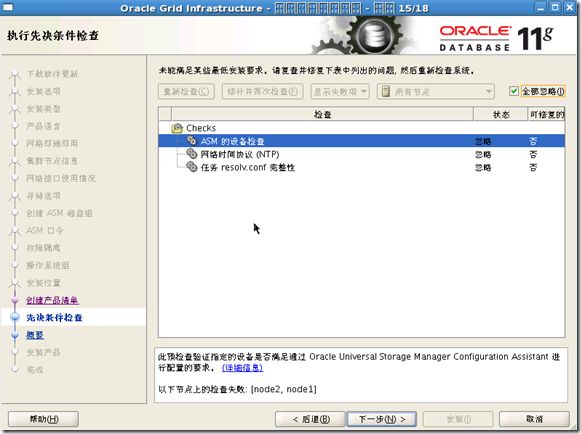

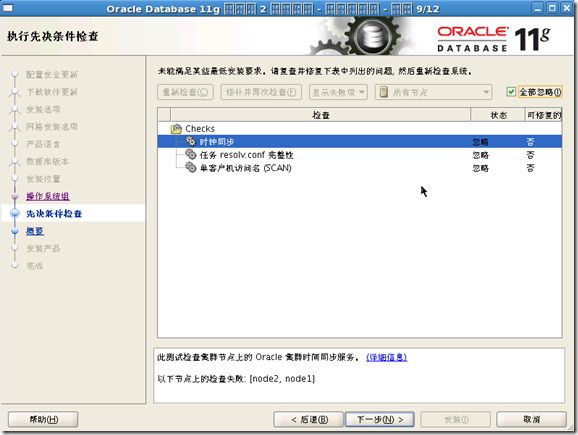

16.检查GRID安装环境

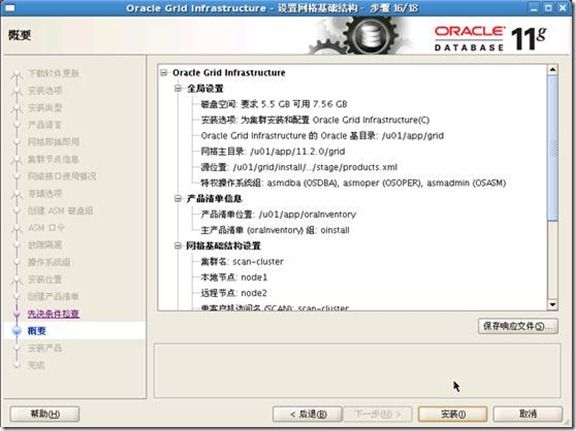

17.安装grid

18.执行配置脚本

在 node1,node2上执行 /u01/app/oraInventory/orainstRoot.sh

在 node1,node2 上执行 /u01/app/11.2.0/grid/root.sh

node1

[root@node1 .vnc]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g
The following environment variables are set as:
ORACLE_OWNER= grid
ORACLE_HOME= /u01/app/11.2.0/grid
Enter the full pathname of the local bin directory: [/usr/local/bin]:
Copying dbhome to /usr/local/bin ...
Copying oraenv to /usr/local/bin ...
Copying coraenv to /usr/local/bin ...
Creating /etc/oratab file...
Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params
Creating trace directory
User ignored Prerequisites during installation
OLR initialization - successful
root wallet
root wallet cert
root cert export
peer wallet
profile reader wallet
pa wallet
peer wallet keys
pa wallet keys
peer cert request
pa cert request
peer cert
pa cert
peer root cert TP
profile reader root cert TP
pa root cert TP
peer pa cert TP
pa peer cert TP
profile reader pa cert TP
profile reader peer cert TP
peer user cert
pa user cert
Adding Clusterware entries to inittab
CRS-2672: Attempting to start 'ora.mdnsd' on 'node1'
CRS-2676: Start of 'ora.mdnsd' on 'node1' succeeded
CRS-2672: Attempting to start 'ora.gpnpd' on 'node1'
CRS-2676: Start of 'ora.gpnpd' on 'node1' succeeded
CRS-2672: Attempting to start 'ora.cssdmonitor' on 'node1'
CRS-2672: Attempting to start 'ora.gipcd' on 'node1'
CRS-2676: Start of 'ora.gipcd' on 'node1' succeeded
CRS-2676: Start of 'ora.cssdmonitor' on 'node1' succeeded
CRS-2672: Attempting to start 'ora.cssd' on 'node1'
CRS-2672: Attempting to start 'ora.diskmon' on 'node1'
CRS-2676: Start of 'ora.diskmon' on 'node1' succeeded
CRS-2676: Start of 'ora.cssd' on 'node1' succeeded
ASM created and started successfully.
Disk Group CRS created successfully.
clscfg: -install mode specified
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
CRS-4256: Updating the profile
Successful addition of voting disk 40826ec832344f68bfc664acef7eea34.
Successful addition of voting disk 6ed43279653f4f3fbfdc447fc6772af1.
Successful addition of voting disk 53128cf1793e4f94bfc112d2ae02e365.
Successfully replaced voting disk group with +CRS.
CRS-4256: Updating the profile
CRS-4266: Voting file(s) successfully replaced
##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------
 1. ONLINE   40826ec832344f68bfc664acef7eea34 (/dev/asm-diskb) [CRS]
 2. ONLINE   6ed43279653f4f3fbfdc447fc6772af1 (/dev/asm-diskc) [CRS]
 3. ONLINE   53128cf1793e4f94bfc112d2ae02e365 (/dev/asm-diskd) [CRS]
Located 3 voting disk(s).
CRS-2672: Attempting to start 'ora.asm' on 'node1'
CRS-2676: Start of 'ora.asm' on 'node1' succeeded
CRS-2672: Attempting to start 'ora.CRS.dg' on 'node1'
CRS-2676: Start of 'ora.CRS.dg' on 'node1' succeeded
Configure Oracle Grid Infrastructure for a Cluster ... succeeded

node2

[root@node2 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g
The following environment variables are set as:
ORACLE_OWNER= grid
ORACLE_HOME= /u01/app/11.2.0/grid
Enter the full pathname of the local bin directory: [/usr/local/bin]:
Copying dbhome to /usr/local/bin ...
Copying oraenv to /usr/local/bin ...
Copying coraenv to /usr/local/bin ...
Creating /etc/oratab file...
Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params
Creating trace directory
User ignored Prerequisites during installation
OLR initialization - successful
Adding Clusterware entries to inittab
CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node node1, number 1, and is terminating
An active cluster was found during exclusive startup, restarting to join the cluster
Configure Oracle Grid Infrastructure for a Cluster ... succeeded

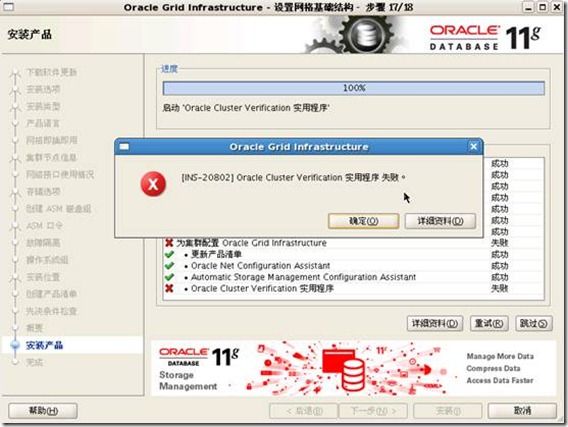

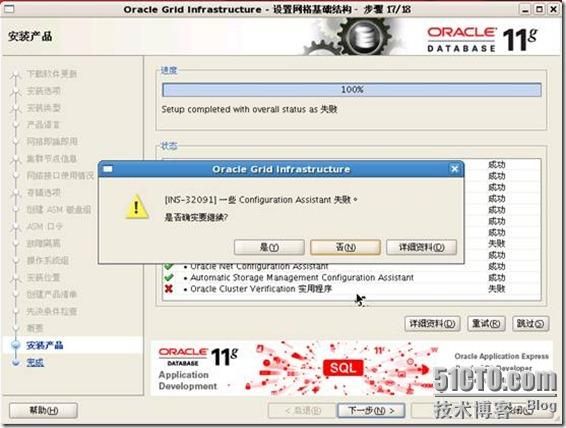

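19. Finish the installation

Ignore the configuration error and continue; it is caused by configuring SCAN through /etc/hosts.

Click Close to complete the installation.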

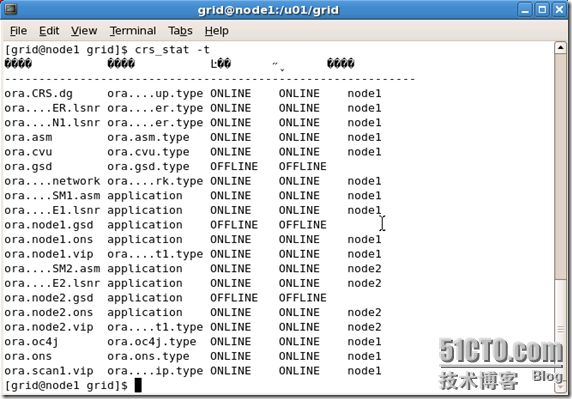

Run a quick test
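The test commands are not reproduced in the text. A minimal sketch of checks that are commonly run at this point as the grid user, using standard Grid Infrastructure utilities; run_if_present is a hypothetical wrapper that skips a command when it is not on PATH, so the snippet is harmless on a machine without the grid home.

```shell
# Run a command only if it is on PATH, so the sketch is harmless elsewhere.
run_if_present() {
    if command -v "$1" >/dev/null 2>&1; then
        "$@"
    else
        echo "skip: $1 not found"
    fi
}

run_if_present crsctl check crs            # state of the CRS, CSS and EVM daemons
run_if_present crsctl stat res -t          # resource table for both nodes
run_if_present olsnodes -n                 # node names and numbers
run_if_present crsctl query css votedisk   # voting disks, as listed by root.sh
```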

IV. Install the Oracle Database

4.1 Create the ASM disk groups for the database

On node1:

# su - grid

$ asmca

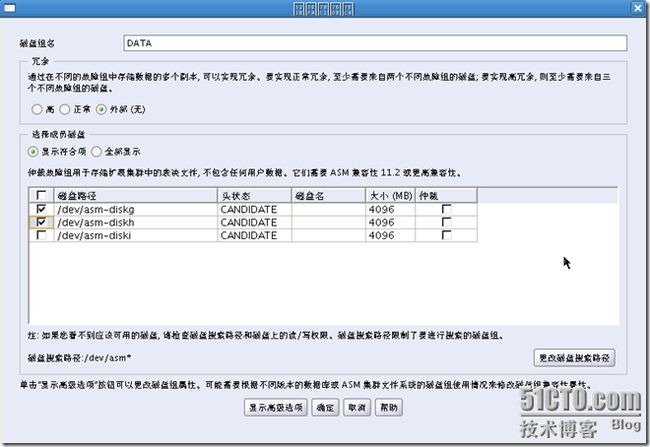

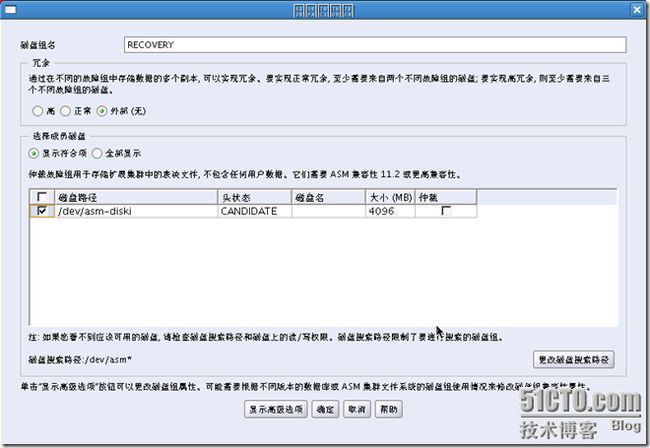

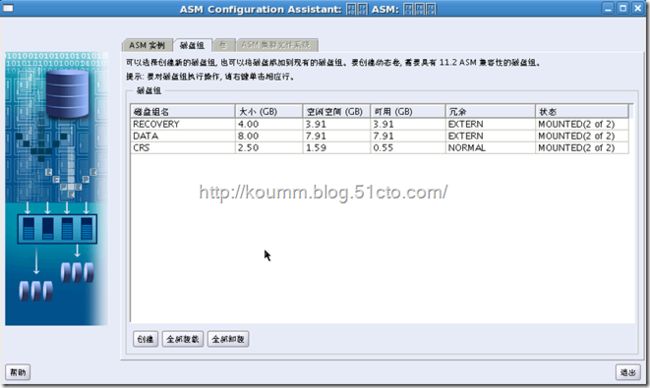

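1. Create a disk group

2. Create the DATA disk group.

3. Create the RECOVERY disk group.

4. Finish creating the disk groups.

Done; exit.

4.2 Install the Oracle software

On node1; the installation is run on one machine only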

# xhost +

# su - oracle

$ cd /u01/database

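$ ./runInstaller

1. Opt out of receiving security updates

2. Skip software updates.

3. Install database software only

4. Select all nodes and choose the Oracle Real Application Clusters database installation.

5. Configure the languages.

6. Choose the Enterprise Edition installation.

7. Specify the Oracle installation directory.

8. Keep the default groups and click Next.

9. Prerequisite checks: ignore all.

10. Start the installation.

11. Run the configuration script

Run the script as root on each of the two nodes

On node1 and node2, run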

/u01/app/oracle/product/11.2.0/db_1/root.sh

12. Finish the installation.

4.3 Create the Oracle database

# su - oracle

Run this on one machine only

$ dbca

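1. Create a RAC database

2. Create the database from a template

3. Choose the General Purpose or Transaction Processing template

4. Enter the global database name orcl and select both nodes.

5. Configure EM, then click Next.

6. Set the user passwords.

7. Set the database storage area to +DATA

8. Enter the ASMSNMP password.

9. Place the fast recovery area on +RECOVERY and enable archiving.

10. Do not select the sample schemas; click Next.

11. Adjust the Oracle parameters

1) Adjust the memory parameters

2) Adjust the number of processes.

3) Adjust the character set

12. Adjust the redo logs, control files, and so on; the defaults are fine. Click Next.

13. Click Finish to start creating the database.

14. When the creation completes, click Exit.

This completes the Oracle 11g RAC installation; the testing process is omitted.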
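Although the testing is omitted, a quick status check with srvctl is a reasonable first step. A minimal sketch, assuming the database name orcl chosen in dbca; run_if_present is a hypothetical wrapper that skips the command when srvctl is not on PATH.

```shell
# Run a command only if it is on PATH, so the sketch is harmless elsewhere.
run_if_present() {
    if command -v "$1" >/dev/null 2>&1; then
        "$@"
    else
        echo "skip: $1 not found"
    fi
}

run_if_present srvctl status database -d orcl   # one instance per node is expected
run_if_present srvctl config database -d orcl   # registered configuration of orcl
run_if_present srvctl status nodeapps           # VIPs, network and ONS on each node
```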

Related: Oracle 10g RAC, restoring an RMAN backup to a single instance on a different host

http://koumm.blog.51cto.com/703525/1252898
