Preface

This article takes a look at how FluxSink works.

FluxSink

reactor-core-3.1.3.RELEASE-sources.jar!/reactor/core/publisher/FluxSink.java

/**

* Wrapper API around a downstream Subscriber for emitting any number of

* next signals followed by zero or one onError/onComplete.

*

* @param <T> the value type

*/

public interface FluxSink<T> {

/**

* @see Subscriber#onComplete()

*/

void complete();

/**

* Return the current subscriber {@link Context}.

*

* {@link Context} can be enriched via {@link Flux#subscriberContext(Function)}

* operator or directly by a child subscriber overriding

* {@link CoreSubscriber#currentContext()}

*

* @return the current subscriber {@link Context}.

*/

Context currentContext();

/**

* @see Subscriber#onError(Throwable)

* @param e the exception to signal, not null

*/

void error(Throwable e);

/**

* Try emitting, might throw an unchecked exception.

* @see Subscriber#onNext(Object)

* @param t the value to emit, not null

*/

FluxSink<T> next(T t);

/**

* The current outstanding request amount.

* @return the current outstanding request amount

*/

long requestedFromDownstream();

/**

* Returns true if the downstream cancelled the sequence.

* @return true if the downstream cancelled the sequence

*/

boolean isCancelled();

/**

* Attaches a {@link LongConsumer} to this {@link FluxSink} that will be notified of

* any request to this sink.

*

* For push/pull sinks created using {@link Flux#create(java.util.function.Consumer)}

* or {@link Flux#create(java.util.function.Consumer, FluxSink.OverflowStrategy)},

* the consumer

* is invoked for every request to enable a hybrid backpressure-enabled push/pull model.

* When bridging with asynchronous listener-based APIs, the {@code onRequest} callback

* may be used to request more data from source if required and to manage backpressure

* by delivering data to sink only when requests are pending.

*

* For push-only sinks created using {@link Flux#push(java.util.function.Consumer)}

* or {@link Flux#push(java.util.function.Consumer, FluxSink.OverflowStrategy)},

* the consumer is invoked with an initial request of {@code Long.MAX_VALUE} when this method

* is invoked.

*

* @param consumer the consumer to invoke on each request

* @return {@link FluxSink} with a consumer that is notified of requests

*/

FluxSink<T> onRequest(LongConsumer consumer);

/**

* Associates a disposable resource with this FluxSink

* that will be disposed in case the downstream cancels the sequence

* via {@link org.reactivestreams.Subscription#cancel()}.

* @param d the disposable callback to use

* @return the {@link FluxSink} with resource to be disposed on cancel signal

*/

FluxSink<T> onCancel(Disposable d);

/**

* Associates a disposable resource with this FluxSink

* that will be disposed on the first terminate signal which may be

* a cancel, complete or error signal.

* @param d the disposable callback to use

* @return the {@link FluxSink} with resource to be disposed on first terminate signal

*/

FluxSink<T> onDispose(Disposable d);

/**

* Enumeration for backpressure handling.

*/

enum OverflowStrategy {

/**

* Completely ignore downstream backpressure requests.

*

* This may yield {@link IllegalStateException} when queues get full downstream.

*/

IGNORE,

/**

* Signal an {@link IllegalStateException} when the downstream can't keep up

*/

ERROR,

/**

* Drop the incoming signal if the downstream is not ready to receive it.

*/

DROP,

/**

* Downstream will get only the latest signals from upstream.

*/

LATEST,

/**

* Buffer all signals if the downstream can't keep up.

*

* Warning! This does unbounded buffering and may lead to {@link OutOfMemoryError}.

*/

BUFFER

}

}

Note that OverflowStrategy.BUFFER uses an unbounded queue, so pay extra attention to the risk of OOM.

Example
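The hybrid push/pull model described in the onRequest Javadoc above can be tried out with a small sketch. It assumes reactor-core is on the classpath. OnRequestSketch, counter and collect are hypothetical names, and BaseSubscriber is used here only as a convenient way to issue a bounded initial request.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

import org.reactivestreams.Subscription;

import reactor.core.publisher.BaseSubscriber;
import reactor.core.publisher.Flux;
import reactor.core.publisher.FluxSink;

class OnRequestSketch {

    // Hypothetical source: emits 0, 1, 2, ... but only as many values as were requested.
    static Flux<Integer> counter() {
        return Flux.create(sink -> {
            AtomicInteger next = new AtomicInteger();
            // Invoked with the demand that is already pending, then again on each request(n).
            // With an unbounded request this loop would run for a very long time.
            sink.onRequest(n -> {
                for (long i = 0; i < n && !sink.isCancelled(); i++) {
                    sink.next(next.getAndIncrement());
                }
            });
        }, FluxSink.OverflowStrategy.BUFFER);
    }

    // Subscribes with a fixed initial request and returns what was delivered.
    static List<Integer> collect(long initialRequest) {
        List<Integer> received = new ArrayList<>();
        counter().subscribe(new BaseSubscriber<Integer>() {
            @Override
            protected void hookOnSubscribe(Subscription subscription) {
                request(initialRequest);
            }

            @Override
            protected void hookOnNext(Integer value) {
                received.add(value);
            }
        });
        return received;
    }

    public static void main(String[] args) {
        System.out.println(collect(3)); // prints [0, 1, 2]
    }
}
```

Because the producer only emits inside the onRequest callback, nothing piles up in the BUFFER queue beyond what the subscriber asked for.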

public static void main(String[] args) throws InterruptedException {

final Flux<Integer> flux = Flux.<Integer>create(fluxSink -> {

//NOTE sink:class reactor.core.publisher.FluxCreate$SerializedSink

LOGGER.info("sink:{}",fluxSink.getClass());

while (true) {

LOGGER.info("sink next");

fluxSink.next(ThreadLocalRandom.current().nextInt());

}

}, FluxSink.OverflowStrategy.BUFFER);

//NOTE flux:class reactor.core.publisher.FluxCreate,prefetch:-1

LOGGER.info("flux:{},prefetch:{}",flux.getClass(),flux.getPrefetch());

flux.subscribe(e -> {

LOGGER.info("subscribe:{}",e);

try {

TimeUnit.SECONDS.sleep(10);

} catch (InterruptedException e1) {

e1.printStackTrace();

}

});

TimeUnit.MINUTES.sleep(20);

}

Here create produces a reactor.core.publisher.FluxCreate, and its sink is a reactor.core.publisher.FluxCreate$SerializedSink.

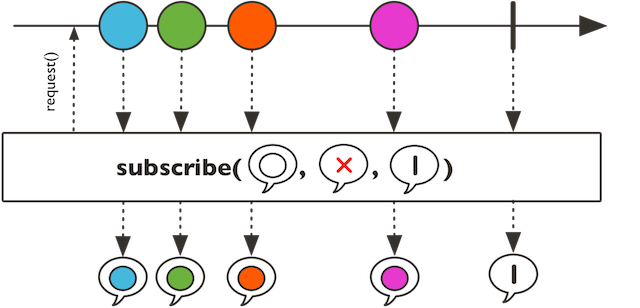

Flux.subscribe

reactor-core-3.1.3.RELEASE-sources.jar!/reactor/core/publisher/Flux.java

/**

* Subscribe {@link Consumer} to this {@link Flux} that will respectively consume all the

* elements in the sequence, handle errors, react to completion, and request upon subscription.

* It will let the provided {@link Subscription subscriptionConsumer}

* request the adequate amount of data, or request unbounded demand

* {@code Long.MAX_VALUE} if no such consumer is provided.

*

* For a passive version that observe and forward incoming data see {@link #doOnNext(java.util.function.Consumer)},

* {@link #doOnError(java.util.function.Consumer)}, {@link #doOnComplete(Runnable)}

* and {@link #doOnSubscribe(Consumer)}.

*

* For a version that gives you more control over backpressure and the request, see

* {@link #subscribe(Subscriber)} with a {@link BaseSubscriber}.

*

* Keep in mind that since the sequence can be asynchronous, this will immediately

* return control to the calling thread. This can give the impression the consumer is

* not invoked when executing in a main thread or a unit test for instance.

*

* @param consumer the consumer to invoke on each value

* @param errorConsumer the consumer to invoke on error signal

* @param completeConsumer the consumer to invoke on complete signal

* @param subscriptionConsumer the consumer to invoke on subscribe signal, to be used

* for the initial {@link Subscription#request(long) request}, or null for max request

*

* @return a new {@link Disposable} that can be used to cancel the underlying {@link Subscription}

*/

public final Disposable subscribe(

@Nullable Consumer<? super T> consumer,

@Nullable Consumer<? super Throwable> errorConsumer,

@Nullable Runnable completeConsumer,

@Nullable Consumer<? super Subscription> subscriptionConsumer) {

return subscribeWith(new LambdaSubscriber<>(consumer, errorConsumer,

completeConsumer,

subscriptionConsumer));

}

@Override

public final void subscribe(Subscriber<? super T> actual) {

onLastAssembly(this).subscribe(Operators.toCoreSubscriber(actual));

}

This creates a LambdaSubscriber, and the call eventually reaches FluxCreate.subscribe.

FluxCreate.subscribe

reactor-core-3.1.3.RELEASE-sources.jar!/reactor/core/publisher/FluxCreate.java

public void subscribe(CoreSubscriber<? super T> actual) {

BaseSink<T> sink = createSink(actual, backpressure);

actual.onSubscribe(sink);

try {

source.accept(

createMode == CreateMode.PUSH_PULL ? new SerializedSink<>(sink) :

sink);

}

catch (Throwable ex) {

Exceptions.throwIfFatal(ex);

sink.error(Operators.onOperatorError(ex, actual.currentContext()));

}

}

static <T> BaseSink<T> createSink(CoreSubscriber<? super T> t,

OverflowStrategy backpressure) {

switch (backpressure) {

case IGNORE: {

return new IgnoreSink<>(t);

}

case ERROR: {

return new ErrorAsyncSink<>(t);

}

case DROP: {

return new DropAsyncSink<>(t);

}

case LATEST: {

return new LatestAsyncSink<>(t);

}

default: {

return new BufferAsyncSink<>(t, Queues.SMALL_BUFFER_SIZE);

}

}

}

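The sink is created first (a BufferAsyncSink in this example), and then LambdaSubscriber.onSubscribe is called.

After that, source.accept is called, which runs the fluxSink lambda and starts producing data in a streaming fashion.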

LambdaSubscriber.onSubscribe

reactor-core-3.1.3.RELEASE-sources.jar!/reactor/core/publisher/LambdaSubscriber.java

public final void onSubscribe(Subscription s) {

if (Operators.validate(subscription, s)) {

this.subscription = s;

if (subscriptionConsumer != null) {

try {

subscriptionConsumer.accept(s);

}

catch (Throwable t) {

Exceptions.throwIfFatal(t);

s.cancel();

onError(t);

}

}

else {

s.request(Long.MAX_VALUE);

}

}

}

This in turn calls request(Long.MAX_VALUE) on the BufferAsyncSink, which is actually BaseSink's request:

public final void request(long n) {

if (Operators.validate(n)) {

Operators.addCap(REQUESTED, this, n);

LongConsumer consumer = requestConsumer;

if (n > 0 && consumer != null && !isCancelled()) {

consumer.accept(n);

}

onRequestedFromDownstream();

}

}
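The demand bookkeeping around REQUESTED can be illustrated with a simplified model. This is a sketch under assumptions, not Reactor's Operators code: DemandCounterSketch is a hypothetical class, it uses an AtomicLong instead of a field updater, and the return values are chosen for readability and may differ from Reactor's helpers.

```java
import java.util.concurrent.atomic.AtomicLong;

class DemandCounterSketch {

    // Adds n to the outstanding demand, saturating at Long.MAX_VALUE ("unbounded").
    static long addCap(AtomicLong requested, long n) {
        for (;;) {
            long current = requested.get();
            if (current == Long.MAX_VALUE) {
                return Long.MAX_VALUE;
            }
            long sum = current + n;
            // A positive overflow wraps to a negative number, so cap it.
            long updated = sum < 0 ? Long.MAX_VALUE : sum;
            if (requested.compareAndSet(current, updated)) {
                return updated;
            }
        }
    }

    // Subtracts the number of delivered items; unbounded demand is left untouched.
    static long produced(AtomicLong requested, long delivered) {
        for (;;) {
            long current = requested.get();
            if (current == Long.MAX_VALUE) {
                return Long.MAX_VALUE;
            }
            long updated = Math.max(0, current - delivered);
            if (requested.compareAndSet(current, updated)) {
                return updated;
            }
        }
    }

    public static void main(String[] args) {
        AtomicLong requested = new AtomicLong();
        System.out.println(addCap(requested, 5));   // prints 5
        System.out.println(produced(requested, 2)); // prints 3
        System.out.println(addCap(requested, Long.MAX_VALUE) == Long.MAX_VALUE); // prints true
    }
}
```

Treating Long.MAX_VALUE as a sticky "unbounded" value is what lets request(Long.MAX_VALUE) from the LambdaSubscriber stay in effect no matter how many items are delivered afterwards.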

The onRequestedFromDownstream called here is BufferAsyncSink's implementation:

@Override

void onRequestedFromDownstream() {

drain();

}

which calls BufferAsyncSink's drain.

BufferAsyncSink.drain

void drain() {

if (WIP.getAndIncrement(this) != 0) {

return;

}

int missed = 1;

final Subscriber<? super T> a = actual;

final Queue<T> q = queue;

for (; ; ) {

long r = requested;

long e = 0L;

while (e != r) {

if (isCancelled()) {

q.clear();

return;

}

boolean d = done;

T o = q.poll();

boolean empty = o == null;

if (d && empty) {

Throwable ex = error;

if (ex != null) {

super.error(ex);

}

else {

super.complete();

}

return;

}

if (empty) {

break;

}

a.onNext(o);

e++;

}

if (e == r) {

if (isCancelled()) {

q.clear();

return;

}

boolean d = done;

boolean empty = q.isEmpty();

if (d && empty) {

Throwable ex = error;

if (ex != null) {

super.error(ex);

}

else {

super.complete();

}

return;

}

}

if (e != 0) {

Operators.produced(REQUESTED, this, e);

}

missed = WIP.addAndGet(this, -missed);

if (missed == 0) {

break;

}

}

}

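The queue is created when the BufferAsyncSink is constructed. Queues.SMALL_BUFFER_SIZE, which is Math.max(16, Integer.parseInt(System.getProperty("reactor.bufferSize.small", "256"))), is passed in as a capacity hint. It is not an upper bound: as noted earlier, the queue itself is unbounded.

The onNext call here synchronously invokes the LambdaSubscriber's consumer.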
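The work-in-progress (WIP) counter pattern used by drain can be shown in isolation. The following is a simplified model and not Reactor's code: DrainLoopSketch is a hypothetical class, it omits cancellation and completion handling, and it does not cap the demand counter.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

class DrainLoopSketch {

    final Queue<Integer> queue = new ConcurrentLinkedQueue<>();
    final AtomicInteger wip = new AtomicInteger();
    final AtomicLong requested = new AtomicLong();
    final List<Integer> delivered = new ArrayList<>();

    // Producer side: enqueue, then try to drain.
    void next(int value) {
        queue.offer(value);
        drain();
    }

    // Consumer side: add demand, then try to drain.
    void request(long n) {
        requested.addAndGet(n);
        drain();
    }

    void drain() {
        // Only the caller that moves wip from 0 to 1 enters the loop.
        // Everyone else just leaves a mark for the current drainer.
        if (wip.getAndIncrement() != 0) {
            return;
        }
        int missed = 1;
        for (;;) {
            long r = requested.get();
            long e = 0L;
            while (e != r) {
                Integer value = queue.poll();
                if (value == null) {
                    break;
                }
                delivered.add(value); // stands in for actual.onNext(value)
                e++;
            }
            if (e != 0) {
                requested.addAndGet(-e);
            }
            // Loop again if other callers arrived while we were draining.
            missed = wip.addAndGet(-missed);
            if (missed == 0) {
                break;
            }
        }
    }

    public static void main(String[] args) {
        DrainLoopSketch sink = new DrainLoopSketch();
        sink.next(1);
        sink.next(2);
        sink.next(3);
        System.out.println(sink.delivered); // prints []
        sink.request(2);
        System.out.println(sink.delivered); // prints [1, 2]
    }
}
```

Values emitted without matching demand simply stay in the queue, which is the part of the design that makes BUFFER both convenient and potentially memory-hungry.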

FluxCreate.subscribe#source.accept

source.accept(

createMode == CreateMode.PUSH_PULL ? new SerializedSink<>(sink) :

sink);

For CreateMode.PUSH_PULL the sink is wrapped in a SerializedSink, and then the custom lambda consumer passed to Flux.create is called:

fluxSink -> {

//NOTE sink:class reactor.core.publisher.FluxCreate$SerializedSink

LOGGER.info("sink:{}",fluxSink.getClass());

while (true) {

LOGGER.info("sink next");

fluxSink.next(ThreadLocalRandom.current().nextInt());

}

}

After that, data starts being pushed.

SerializedSink.next

reactor-core-3.1.3.RELEASE-sources.jar!/reactor/core/publisher/FluxCreate.java#SerializedSink.next

public FluxSink<T> next(T t) {

Objects.requireNonNull(t, "t is null in sink.next(t)");

if (sink.isCancelled() || done) {

Operators.onNextDropped(t, sink.currentContext());

return this;

}

if (WIP.get(this) == 0 && WIP.compareAndSet(this, 0, 1)) {

try {

sink.next(t);

}

catch (Throwable ex) {

Operators.onOperatorError(sink, ex, t, sink.currentContext());

}

if (WIP.decrementAndGet(this) == 0) {

return this;

}

}

else {

Queue<T> q = queue;

synchronized (this) {

q.offer(t);

}

if (WIP.getAndIncrement(this) != 0) {

return this;

}

}

drainLoop();

return this;

}

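On the fast path this calls BufferAsyncSink.next directly. If nothing else was queued in the meantime (WIP drops back to 0), next returns right away; otherwise it runs drainLoop before returning.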
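The fast path versus queued path of a serialized emitter can be modelled in a few lines. This is a simplified sketch and not SerializedSink itself: SerializedEmitSketch and reentrantDemo are hypothetical names, and cancellation, errors and completion are left out. A re-entrant call from inside the downstream consumer is used to trigger the queued path deterministically on a single thread.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntConsumer;

class SerializedEmitSketch {

    final Queue<Integer> queue = new ConcurrentLinkedQueue<>();
    final AtomicInteger wip = new AtomicInteger();
    final List<String> log = new ArrayList<>();
    final IntConsumer downstream;

    SerializedEmitSketch(IntConsumer downstream) {
        this.downstream = downstream;
    }

    void next(int value) {
        if (wip.get() == 0 && wip.compareAndSet(0, 1)) {
            // Fast path: nobody else is emitting, deliver directly.
            log.add("fast:" + value);
            downstream.accept(value);
            if (wip.decrementAndGet() == 0) {
                return; // nothing was queued while we were delivering
            }
        } else {
            // Someone else is emitting: queue the value for them.
            log.add("queued:" + value);
            queue.offer(value);
            if (wip.getAndIncrement() != 0) {
                return; // the current owner will drain it
            }
        }
        drainLoop();
    }

    void drainLoop() {
        do {
            Integer value;
            while ((value = queue.poll()) != null) {
                log.add("drained:" + value);
                downstream.accept(value);
            }
        } while (wip.decrementAndGet() != 0);
    }

    // Emits 1; the downstream consumer re-entrantly emits 2 while 1 is being delivered.
    static List<String> reentrantDemo() {
        SerializedEmitSketch[] holder = new SerializedEmitSketch[1];
        holder[0] = new SerializedEmitSketch(value -> {
            if (value == 1) {
                holder[0].next(2);
            }
        });
        holder[0].next(1);
        return holder[0].log;
    }

    public static void main(String[] args) {
        System.out.println(reentrantDemo()); // prints [fast:1, queued:2, drained:2]
    }
}
```

In the article's example there is only one emitting thread and no re-entrant call, so every next takes the fast path and returns without entering the drain loop.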

BufferAsyncSink.next

public FluxSink<T> next(T t) {

queue.offer(t);

drain();

return this;

}

This puts the value into the queue and then calls drain to take values out, synchronously invoking LambdaSubscriber's onNext.

reactor-core-3.1.3.RELEASE-sources.jar!/reactor/core/publisher/LambdaSubscriber.java

@Override

public final void onNext(T x) {

try {

if (consumer != null) {

consumer.accept(x);

}

}

catch (Throwable t) {

Exceptions.throwIfFatal(t);

this.subscription.cancel();

onError(t);

}

}

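In other words, the consumer passed to subscribe is invoked synchronously. In the example it sleeps in addition to logging, so the call blocks.

Once it finishes, the next call in the fluxSink lambda returns and the loop continues.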

fluxSink -> {

//NOTE sink:class reactor.core.publisher.FluxCreate$SerializedSink

LOGGER.info("sink:{}",fluxSink.getClass());

while (true) {

LOGGER.info("sink next");

fluxSink.next(ThreadLocalRandom.current().nextInt());

}

}
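The blocking hand-off traced above can be observed directly by recording the order of events on a bounded version of the example. This sketch assumes reactor-core is on the classpath. SameThreadSketch and run are hypothetical names, and the loop is finite so that the program terminates.

```java
import java.util.ArrayList;
import java.util.List;

import reactor.core.publisher.Flux;
import reactor.core.publisher.FluxSink;

class SameThreadSketch {

    // Records what happens, in order, when producer and consumer share the calling thread.
    static List<String> run(int count) {
        List<String> events = new ArrayList<>();
        Flux.<Integer>create(sink -> {
            for (int i = 0; i < count; i++) {
                events.add("emit " + i);
                sink.next(i);               // the consumer below runs inside this call
                events.add("returned " + i);
            }
            sink.complete();
        }, FluxSink.OverflowStrategy.BUFFER)
            .subscribe(value -> events.add("consume " + value));
        return events;
    }

    public static void main(String[] args) {
        // prints [emit 0, consume 0, returned 0, emit 1, consume 1, returned 1]
        System.out.println(run(2));
    }
}
```

Each "consume" entry lands between the matching "emit" and "returned" entries, so the producer cannot run ahead of the consumer as long as both stay on one thread.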

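Summary

The fluxSink lambda appears to call next in an infinite loop, but there is no need to worry: when subscribe and the fluxSink lambda run on the same thread (both run on the main thread in this example), the calls are synchronous and blocking.

On subscribe, LambdaSubscriber.onSubscribe is called and issues request(N); then source.accept is called, which runs the fluxSink lambda and starts producing data in a streaming fashion.

The fluxSink.next call blocks inside the subscriber's consumer, and the loop only continues after the consumer returns.

As for how OOM can arise in BUFFER mode, that is left as something to think about.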
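One possible starting point for that question is a subscriber whose demand is smaller than what the producer emits. The sketch below assumes reactor-core is on the classpath. BufferBacklogSketch and run are hypothetical names, and the numbers are kept tiny so that the backlog is harmless. It is only one scenario, not a complete account of how OOM can happen.

```java
import java.util.ArrayList;
import java.util.List;

import org.reactivestreams.Subscription;

import reactor.core.publisher.BaseSubscriber;
import reactor.core.publisher.Flux;
import reactor.core.publisher.FluxSink;

class BufferBacklogSketch {

    // Returns {delivered after the first request, delivered after the second request}.
    static int[] run() {
        List<Integer> received = new ArrayList<>();
        BaseSubscriber<Integer> slow = new BaseSubscriber<Integer>() {
            @Override
            protected void hookOnSubscribe(Subscription subscription) {
                request(2); // bounded demand instead of Long.MAX_VALUE
            }

            @Override
            protected void hookOnNext(Integer value) {
                received.add(value);
            }
        };

        Flux.<Integer>create(sink -> {
            // The producer ignores demand and emits five values right away.
            for (int i = 0; i < 5; i++) {
                sink.next(i);
            }
        }, FluxSink.OverflowStrategy.BUFFER).subscribe(slow);

        int afterFirstRequest = received.size(); // the other three values are buffered
        slow.request(3);                         // the backlog is released on new demand
        return new int[] {afterFirstRequest, received.size()};
    }

    public static void main(String[] args) {
        int[] counts = run();
        System.out.println(counts[0] + " then " + counts[1]); // prints 2 then 5
    }
}
```

With an endless producer and demand that never catches up, the values waiting between the two requests would keep accumulating in the unbounded queue.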