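This is a study note, and also a record of my mixed feelings. I haven't read through the whole project and have already forgotten much of the pipeline, but...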

An extremely simple modification worked.

I used `vgg_a` from `vgg.py` as the feature extractor for the whole model.

The modified `vgg_a` is as follows:

```python
import tensorflow as tf

slim = tf.contrib.slim


def vgg_a(inputs,
          num_classes=1000,
          is_training=True,
          dropout_keep_prob=0.5,
          spatial_squeeze=True,
          scope='vgg_a',
          fc_conv_padding='VALID',
          global_pool=False,
          stride=8):
  # Only `inputs`, `scope` and `stride` are used. The other arguments are kept
  # from the original signature, but the fully connected head is never built.
  with tf.variable_scope(scope, 'vgg_a', [inputs]) as sc:
    end_points_collection = sc.original_name_scope + '_end_points'
    # Collect outputs for conv2d and max_pool2d.
    with slim.arg_scope([slim.conv2d, slim.max_pool2d],
                        outputs_collections=end_points_collection):
      # Called before any layer exists, so pool outputs are added by hand below.
      end_points = slim.utils.convert_collection_to_dict(end_points_collection)
      net = slim.repeat(inputs, 1, slim.conv2d, 64, [3, 3], scope='conv1')
      net = slim.max_pool2d(net, [2, 2], scope='pool1')
      end_points['pool1'] = net
      net = slim.repeat(net, 1, slim.conv2d, 128, [3, 3], scope='conv2')
      net = slim.max_pool2d(net, [2, 2], scope='pool2')
      end_points['pool2'] = net
      net = slim.repeat(net, 2, slim.conv2d, 256, [3, 3], scope='conv3')
      net = slim.max_pool2d(net, [2, 2], scope='pool3')
      end_points['pool3'] = net
      # Total downsampling so far: 8x.
      if stride == 8:
        return net, end_points
      net = slim.repeat(net, 2, slim.conv2d, 512, [3, 3], scope='conv4')
      net = slim.max_pool2d(net, [2, 2], scope='pool4')
      end_points['pool4'] = net
      # Total downsampling so far: 16x.
      if stride == 16:
        return net, end_points
      net = slim.repeat(net, 2, slim.conv2d, 512, [3, 3], scope='conv5')
      net = slim.max_pool2d(net, [2, 2], scope='pool5')
      end_points['pool5'] = net
      # Any other stride: return pool5 (32x); no fully connected layers.
      return net, end_points
```

It returns the output of the last pooling layer that was built, together with the `end_points` dictionary.
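To make the `stride` argument concrete, here is a small pure-Python sketch (no TensorFlow) that walks through the truncated `vgg_a` and reports which pooling layer is returned and how large its output is. It assumes, as `vgg.vgg_arg_scope` sets up, that the 3x3 convolutions use SAME padding (so they keep height and width) and that `slim.max_pool2d` uses its default stride 2 with VALID padding. The helper name `vgg_a_output` is hypothetical.

```python
def vgg_a_output(height, width, stride):
    """Return (end_point_name, out_height, out_width) for the truncated vgg_a.

    Assumption: convs keep the spatial size (SAME padding) and every 2x2,
    stride-2 VALID max pool floor-halves height and width.
    """
    if stride not in (8, 16, 32):
        raise ValueError('stride must be 8, 16 or 32')
    h, w = height, width
    name = None
    for i in range(1, 6):
        # convN: spatial size unchanged; poolN: floor(n / 2).
        h, w = h // 2, w // 2
        name = 'pool%d' % i
        if 2 ** i == stride:
            break
    return name, h, w


print(vgg_a_output(600, 800, 16))  # ('pool4', 37, 50)
print(vgg_a_output(600, 800, 8))   # ('pool3', 75, 100)
```

With `first_stage_features_stride: 16`, the RPN therefore sees the 512-channel pool4 map.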

Modifying the Resnet V1 Faster R-CNN implementation

I didn't dare touch anything else. I only made a simple change based on the test file of the Resnet V1 Faster R-CNN implementation and left everything else alone.

```python
"""Resnet V1 Faster R-CNN implementation.

See "Deep Residual Learning for Image Recognition" by He et al., 2015.
https://arxiv.org/abs/1512.03385

Note: this implementation assumes that the classification checkpoint used
to finetune this model is trained using the same configuration as that of
the MSRA provided checkpoints
(see https://github.com/KaimingHe/deep-residual-networks), e.g., with
same preprocessing, batch norm scaling, etc.
"""
import tensorflow as tf

from object_detection.meta_architectures import faster_rcnn_meta_arch
from nets import resnet_utils
from nets import resnet_v1
from nets import vgg

slim = tf.contrib.slim


class FasterRCNNResnetV1FeatureExtractor(
    faster_rcnn_meta_arch.FasterRCNNFeatureExtractor):
  """Faster R-CNN Resnet V1 feature extractor implementation."""

  def __init__(self,
               architecture,
               resnet_model,
               is_training,
               first_stage_features_stride,
               batch_norm_trainable=False,
               reuse_weights=None,
               weight_decay=0.0):
    """Constructor.

    Args:
      architecture: Architecture name of the Resnet V1 model.
      resnet_model: Definition of the Resnet V1 model.
      is_training: See base class.
      first_stage_features_stride: See base class.
      batch_norm_trainable: See base class.
      reuse_weights: See base class.
      weight_decay: See base class.

    Raises:
      ValueError: If `first_stage_features_stride` is not 8 or 16.
    """
    if first_stage_features_stride != 8 and first_stage_features_stride != 16:
      raise ValueError('`first_stage_features_stride` must be 8 or 16.')
    self._architecture = architecture
    self._resnet_model = resnet_model
    super(FasterRCNNResnetV1FeatureExtractor, self).__init__(
        is_training, first_stage_features_stride, batch_norm_trainable,
        reuse_weights, weight_decay)

  def preprocess(self, resized_inputs):
    """Faster R-CNN Resnet V1 preprocessing.

    VGG style channel mean subtraction as described here:
    https://gist.github.com/ksimonyan/211839e770f7b538e2d8#file-readme-md

    Args:
      resized_inputs: A [batch, height_in, width_in, channels] float32 tensor
        representing a batch of images with values between 0 and 255.0.

    Returns:
      preprocessed_inputs: A [batch, height_out, width_out, channels] float32
        tensor representing a batch of images.
    """
    channel_means = [123.68, 116.779, 103.939]
    return resized_inputs - [[channel_means]]

  def _extract_proposal_features(self, preprocessed_inputs, scope):
    """Extracts first stage RPN features.

    Args:
      preprocessed_inputs: A [batch, height, width, channels] float32 tensor
        representing a batch of images.
      scope: A scope name.

    Returns:
      rpn_feature_map: A tensor with shape [batch, height, width, depth]
      activations: A dictionary mapping feature extractor tensor names to
        tensors

    Raises:
      InvalidArgumentError: If the spatial size of `preprocessed_inputs`
        (height or width) is less than 33.
      ValueError: If the created network is missing the required activation.
    """
    if len(preprocessed_inputs.get_shape().as_list()) != 4:
      raise ValueError('`preprocessed_inputs` must be 4 dimensional, got a '
                       'tensor of shape %s' % preprocessed_inputs.get_shape())
    shape_assert = tf.Assert(
        tf.logical_and(
            tf.greater_equal(tf.shape(preprocessed_inputs)[1], 33),
            tf.greater_equal(tf.shape(preprocessed_inputs)[2], 33)),
        ['image size must at least be 33 in both height and width.'])

    with tf.control_dependencies([shape_assert]):
      # Disables batchnorm for fine-tuning with smaller batch sizes.
      # TODO(chensun): Figure out if it is needed when image
      # batch size is bigger.
      # Modified: the truncated vgg_a replaces the ResNet backbone.
      with slim.arg_scope(vgg.vgg_arg_scope(weight_decay=self._weight_decay)):
        net, end_point = vgg.vgg_a(preprocessed_inputs,
                                   num_classes=1000,
                                   is_training=True,
                                   dropout_keep_prob=0.5,
                                   spatial_squeeze=True,
                                   scope='vgg_a',
                                   fc_conv_padding='VALID',
                                   global_pool=False,
                                   stride=self._first_stage_features_stride)
      # with slim.arg_scope(
      #     resnet_utils.resnet_arg_scope(
      #         batch_norm_epsilon=1e-5,
      #         batch_norm_scale=True,
      #         weight_decay=self._weight_decay)):
      #   with tf.variable_scope(
      #       self._architecture, reuse=self._reuse_weights) as var_scope:
      #     _, activations = self._resnet_model(
      #         preprocessed_inputs,
      #         num_classes=None,
      #         is_training=self._train_batch_norm,
      #         global_pool=False,
      #         output_stride=self._first_stage_features_stride,
      #         spatial_squeeze=False,
      #         scope=var_scope)
      #
      # handle = scope + '/%s/block1' % self._architecture
    return net, end_point

  def _extract_box_classifier_features(self, proposal_feature_maps, scope):
    """Extracts second stage box classifier features.

    Args:
      proposal_feature_maps: A 4-D float tensor with shape
        [batch_size * self.max_num_proposals, crop_height, crop_width, depth]
        representing the feature map cropped to each proposal.
      scope: A scope name (unused).

    Returns:
      proposal_classifier_features: A 4-D float tensor with shape
        [batch_size * self.max_num_proposals, height, width, depth]
        representing box classifier features for each proposal.
    """
    # Modified: a single 3x3 conv (SAME padding via vgg_arg_scope) replaces
    # the ResNet block; it keeps height/width and outputs 2048 channels.
    with slim.arg_scope(vgg.vgg_arg_scope(weight_decay=self._weight_decay)):
      proposal_classifier_features = slim.conv2d(
          proposal_feature_maps, num_outputs=2048, kernel_size=3,
          scope='conv8')
    # with tf.variable_scope(self._architecture, reuse=self._reuse_weights):
    #   with slim.arg_scope(
    #       resnet_utils.resnet_arg_scope(
    #           batch_norm_epsilon=1e-5,
    #           batch_norm_scale=True,
    #           weight_decay=self._weight_decay)):
    #     with slim.arg_scope([slim.batch_norm],
    #                         is_training=self._train_batch_norm):
    #       blocks = [
    #           resnet_utils.Block('block2', resnet_v1.bottleneck, [{
    #               'depth': 2048,
    #               'depth_bottleneck': 512,
    #               'stride': 1
    #           }] * 3)
    #       ]
    #       proposal_classifier_features = resnet_utils.stack_blocks_dense(
    #           proposal_feature_maps, blocks)
    return proposal_classifier_features


class FasterRCNNResnet101FeatureExtractor(FasterRCNNResnetV1FeatureExtractor):
  """Faster R-CNN Resnet 101 feature extractor implementation."""

  def __init__(self,
               is_training,
               first_stage_features_stride,
               batch_norm_trainable=False,
               reuse_weights=None,
               weight_decay=0.0):
    """Constructor.

    Args:
      is_training: See base class.
      first_stage_features_stride: See base class.
      batch_norm_trainable: See base class.
      reuse_weights: See base class.
      weight_decay: See base class.

    Raises:
      ValueError: If `first_stage_features_stride` is not 8 or 16,
        or if `architecture` is not supported.
    """
    super(FasterRCNNResnet101FeatureExtractor, self).__init__(
        'resnet_v1_101', resnet_v1.resnet_v1_101, is_training,
        first_stage_features_stride, batch_norm_trainable,
        reuse_weights, weight_decay)
```
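`preprocess` only subtracts a fixed per-channel RGB mean. In TensorFlow, `[[channel_means]]` has shape [1, 1, 3], so it broadcasts over batch, height and width. A minimal pure-Python equivalent for one image stored as nested lists (the helper `subtract_channel_means` is hypothetical):

```python
# RGB means copied from preprocess() above.
CHANNEL_MEANS = [123.68, 116.779, 103.939]


def subtract_channel_means(image):
    """image: nested list [height][width][3] with values in [0, 255].

    Returns a new nested list where each pixel's channel c has
    CHANNEL_MEANS[c] subtracted, mirroring the broadcasted subtraction.
    """
    return [[[pixel[c] - CHANNEL_MEANS[c] for c in range(3)]
             for pixel in row]
            for row in image]


img = [[[123.68, 116.779, 103.939], [255.0, 255.0, 255.0]]]  # 1x2 image
out = subtract_channel_means(img)
print(out[0][0])  # the mean-colored pixel becomes (approximately) all zeros
```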

My config file is the ResNet-101 one, so the other subclasses (ResNet-50, ResNet-152) can be deleted.

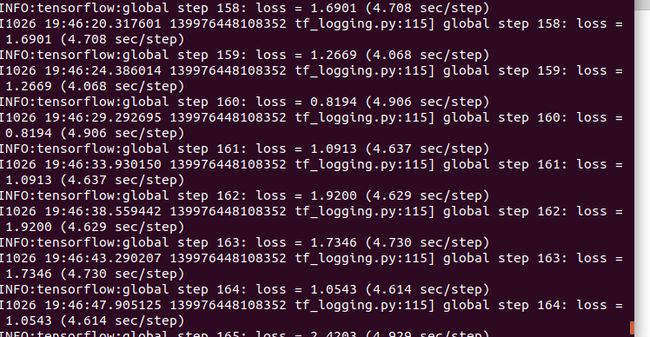

The modification worked. Results (figures not preserved): model, loss, exported .pb file, detection image.

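This was only a quick change. While reading the test file I found that the second stage of the Resnet V1 Faster R-CNN implementation, surprisingly, does not change the spatial size of its input, so I made a simple modification following the test file.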
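A shape walk-through of that first version of the second stage, as a pure-Python sketch. The numbers come from the config later in this post (batch_size=1, first_stage_max_proposals=300, initial_crop_size=14, maxpool_kernel_size=2, maxpool_stride=2) plus a stride-16 VGG feature map (pool4, 512 channels). It assumes the meta-architecture's max pool uses VALID padding and that conv8 uses SAME padding from `vgg_arg_scope`; the function names are hypothetical.

```python
def pool_valid(size, kernel, stride):
    """Output length of a VALID pooling window along one axis."""
    return (size - kernel) // stride + 1


def second_stage_shapes(batch_size=1, max_proposals=300, crop_size=14,
                        pool_kernel=2, pool_stride=2, in_depth=512,
                        conv8_depth=2048):
    """Shapes of the crops, the pooled crops and the conv8 output."""
    n = batch_size * max_proposals
    crops = (n, crop_size, crop_size, in_depth)
    p = pool_valid(crop_size, pool_kernel, pool_stride)
    pooled = (n, p, p, in_depth)
    # 3x3 conv with SAME padding: only the depth changes.
    conv8 = (n, p, p, conv8_depth)
    return crops, pooled, conv8


for shape in second_stage_shapes():
    print(shape)
```

The spatial size stays 7x7 through conv8; only the depth goes from 512 to 2048, which is what the box predictor then consumes.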

Update

Today I reworked the changes:

Create `faster_rcnn_vgg_feature_extractor.py`:

```python
# Copyright 2017 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================
import tensorflow as tf

from object_detection.meta_architectures import faster_rcnn_meta_arch
from nets import vgg

slim = tf.contrib.slim


class FasterRCNNVGGFeatureExtractor(
    faster_rcnn_meta_arch.FasterRCNNFeatureExtractor):
  """Faster R-CNN VGG feature extractor implementation."""

  def __init__(self,
               is_training,
               first_stage_features_stride,
               batch_norm_trainable=False,
               reuse_weights=None,
               weight_decay=0.0):
    print('first_stage_features_stride', first_stage_features_stride)
    if first_stage_features_stride != 8 and first_stage_features_stride != 16:
      raise ValueError('`first_stage_features_stride` must be 8 or 16.')
    super(FasterRCNNVGGFeatureExtractor, self).__init__(
        is_training, first_stage_features_stride, batch_norm_trainable,
        reuse_weights, weight_decay)

  def preprocess(self, resized_inputs):
    # VGG-style per-channel (RGB) mean subtraction.
    channel_means = [123.68, 116.779, 103.939]
    return resized_inputs - [[channel_means]]

  def _extract_proposal_features(self, preprocessed_inputs, scope):
    if len(preprocessed_inputs.get_shape().as_list()) != 4:
      raise ValueError('`preprocessed_inputs` must be 4 dimensional, got a '
                       'tensor of shape %s' % preprocessed_inputs.get_shape())
    shape_assert = tf.Assert(
        tf.logical_and(
            tf.greater_equal(tf.shape(preprocessed_inputs)[1], 33),
            tf.greater_equal(tf.shape(preprocessed_inputs)[2], 33)),
        ['image size must at least be 33 in both height and width.'])

    with tf.control_dependencies([shape_assert]):
      # Disables batchnorm for fine-tuning with smaller batch sizes.
      # TODO(chensun): Figure out if it is needed when image
      # batch size is bigger.
      with slim.arg_scope(vgg.vgg_arg_scope(weight_decay=self._weight_decay)):
        net, end_point = vgg.vgg_a(preprocessed_inputs,
                                   num_classes=1000,
                                   is_training=True,
                                   dropout_keep_prob=0.5,
                                   spatial_squeeze=True,
                                   scope='vgg_a',
                                   fc_conv_padding='VALID',
                                   global_pool=False,
                                   stride=self._first_stage_features_stride)
    return net, end_point

  def _extract_box_classifier_features(self, proposal_feature_maps, scope):
    with slim.arg_scope(vgg.vgg_arg_scope(weight_decay=self._weight_decay)):
      net = slim.repeat(proposal_feature_maps, 2, slim.conv2d, 2048, [3, 3],
                        scope='conv8')
      net = slim.max_pool2d(net, [2, 2], scope='pool8')
      # conv9 is applied to the pooled conv8 output.
      proposal_classifier_features = slim.repeat(
          net, 3, slim.conv2d, 64, [3, 3], scope='conv9')
    return proposal_classifier_features
```
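A pure-Python sketch of the shapes produced by this new second-stage head, assuming 7x7x512 pooled crops per proposal (as with the config below), SAME padding for the 3x3 convs and a 2x2, stride-2 VALID `pool8`. It covers both wirings: conv9 fed from `pool8`, and conv9 fed directly from `proposal_feature_maps` (which leaves conv8 and pool8 out of the output path). `head_shape` is a hypothetical helper.

```python
def head_shape(in_shape, conv9_on_pool8=True):
    """Output shape of conv8 (x2) -> pool8 -> conv9 (x3) for one batch of crops.

    in_shape: (num_proposals, height, width, depth) of the pooled crops.
    """
    n, h, w, _ = in_shape
    # conv8: two 3x3 SAME convs, spatial size kept, depth -> 2048.
    conv8 = (n, h, w, 2048)
    # pool8: 2x2 window, stride 2, VALID padding.
    pool8 = (n, (conv8[1] - 2) // 2 + 1, (conv8[2] - 2) // 2 + 1, 2048)
    if conv9_on_pool8:
        # conv9: three 3x3 SAME convs on pool8, depth -> 64.
        return (n, pool8[1], pool8[2], 64)
    # conv9 applied to the raw crops instead; conv8/pool8 do not feed it.
    return (n, h, w, 64)


print(head_shape((300, 7, 7, 512)))         # (300, 3, 3, 64)
print(head_shape((300, 7, 7, 512), False))  # (300, 7, 7, 64)
```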

Add `faster_rcnn_vgg` to `model_builder.py`:

```python
from object_detection.models import faster_rcnn_vgg_feature_extractor as frcnn_vgg

# Register the new extractor. The other frcnn_* modules are already
# imported at the top of model_builder.py.
FASTER_RCNN_FEATURE_EXTRACTOR_CLASS_MAP = {
    'faster_rcnn_nas':
    frcnn_nas.FasterRCNNNASFeatureExtractor,
    'faster_rcnn_vgg':
    frcnn_vgg.FasterRCNNVGGFeatureExtractor,
    'faster_rcnn_pnas':
    frcnn_pnas.FasterRCNNPNASFeatureExtractor,
    'faster_rcnn_inception_resnet_v2':
    frcnn_inc_res.FasterRCNNInceptionResnetV2FeatureExtractor,
    'faster_rcnn_inception_v2':
    frcnn_inc_v2.FasterRCNNInceptionV2FeatureExtractor,
    'faster_rcnn_resnet50':
    frcnn_resnet_v1.FasterRCNNResnet50FeatureExtractor,
    'faster_rcnn_resnet101':
    frcnn_resnet_v1.FasterRCNNResnet101FeatureExtractor,
    'faster_rcnn_resnet152':
    frcnn_resnet_v1.FasterRCNNResnet152FeatureExtractor,
}
```
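Registration works because the `type` string in the config's `feature_extractor` block is used as a key into this dict, and the matching class is instantiated. A minimal sketch of that lookup pattern; `FakeVGGExtractor`, `FakeResnet101Extractor`, `CLASS_MAP` and `build_feature_extractor` are hypothetical stand-ins, not the real `object_detection` code, which performs a similar lookup with more arguments.

```python
class FakeVGGExtractor(object):
    """Hypothetical stand-in for FasterRCNNVGGFeatureExtractor."""

    def __init__(self, is_training, first_stage_features_stride):
        if first_stage_features_stride not in (8, 16):
            raise ValueError('`first_stage_features_stride` must be 8 or 16.')
        self.is_training = is_training
        self.stride = first_stage_features_stride


class FakeResnet101Extractor(FakeVGGExtractor):
    """Hypothetical stand-in for another registered extractor."""


CLASS_MAP = {
    'faster_rcnn_vgg': FakeVGGExtractor,
    'faster_rcnn_resnet101': FakeResnet101Extractor,
}


def build_feature_extractor(feature_type, is_training, stride):
    # Unknown type strings fail loudly instead of silently falling back.
    if feature_type not in CLASS_MAP:
        raise ValueError(
            'Unknown Faster R-CNN feature_extractor: {}'.format(feature_type))
    return CLASS_MAP[feature_type](is_training, stride)


extractor = build_feature_extractor('faster_rcnn_vgg', True, 16)
print(type(extractor).__name__, extractor.stride)
```

If the `type` in the config does not match the registered key exactly, model building fails at this lookup.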

Modify the config file:

```
model {
  faster_rcnn {
    num_classes: 20
    image_resizer {
      keep_aspect_ratio_resizer {
        min_dimension: 600
        max_dimension: 1024
      }
    }
    feature_extractor {
      type: 'faster_rcnn_vgg'
      first_stage_features_stride: 16
    }
    first_stage_anchor_generator {
      grid_anchor_generator {
        scales: [0.25, 0.5, 1.0, 2.0]
        aspect_ratios: [0.5, 1.0, 2.0]
        height_stride: 16
        width_stride: 16
      }
    }
    first_stage_box_predictor_conv_hyperparams {
      op: CONV
      regularizer {
        l2_regularizer {
          weight: 0.0
        }
      }
      initializer {
        truncated_normal_initializer {
          stddev: 0.01
        }
      }
    }
    first_stage_nms_score_threshold: 0.0
    first_stage_nms_iou_threshold: 0.7
    first_stage_max_proposals: 300
    first_stage_localization_loss_weight: 2.0
    first_stage_objectness_loss_weight: 1.0
    initial_crop_size: 14
    maxpool_kernel_size: 2
    maxpool_stride: 2
    second_stage_box_predictor {
      mask_rcnn_box_predictor {
        use_dropout: false
        dropout_keep_probability: 1.0
        fc_hyperparams {
          op: FC
          regularizer {
            l2_regularizer {
              weight: 0.0
            }
          }
          initializer {
            variance_scaling_initializer {
              factor: 1.0
              uniform: true
              mode: FAN_AVG
            }
          }
        }
      }
    }
    second_stage_post_processing {
      batch_non_max_suppression {
        score_threshold: 0.0
        iou_threshold: 0.6
        max_detections_per_class: 100
        max_total_detections: 300
      }
      score_converter: SOFTMAX
    }
    second_stage_localization_loss_weight: 2.0
    second_stage_classification_loss_weight: 1.0
  }
}

train_config: {
  batch_size: 1
  optimizer {
    momentum_optimizer: {
      learning_rate: {
        manual_step_learning_rate {
          initial_learning_rate: 0.0003
          schedule {
            step: 900000
            learning_rate: .00003
          }
          schedule {
            step: 1200000
            learning_rate: .000003
          }
        }
      }
      momentum_optimizer_value: 0.9
    }
    use_moving_average: false
  }
  gradient_clipping_by_norm: 10.0
  #fine_tune_checkpoint: "faster_rcnn_vgg_coco_11_06_2017/model.ckpt"
  from_detection_checkpoint: false
  data_augmentation_options {
    random_horizontal_flip {
    }
  }
}

train_input_reader: {
  tf_record_input_reader {
    input_path: "data/voc2012_trian.record"
  }
  label_map_path: "data/pascal_label_map.pbtxt"
}

eval_config: {
  num_examples: 100
  # Note: The below line limits the evaluation process to 100 evaluations.
  # Remove the below line to evaluate indefinitely.
  max_evals: 100
  eval_interval_secs: 5
  #metrics_set: "pascal_voc_detection_metrics"
  metrics_set: "coco_detection_metrics"
}

eval_input_reader: {
  tf_record_input_reader {
    input_path: "data/voc2012_val.record"
  }
  label_map_path: "data/pascal_label_map.pbtxt"
  shuffle: false
  num_readers: 1
  num_epochs: 1
}
```
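A rough sketch of how many RPN anchors this config yields for one image. Assumptions: `keep_aspect_ratio_resizer` scales the short side to `min_dimension` unless that pushes the long side past `max_dimension`, in which case the long side is scaled to `max_dimension` (rounding here is an approximation); the stride-16 VGG feature map is pool4, with each of the four 2x2 VALID pools floor-halving the size; and the grid anchor generator places one anchor per (scale, aspect ratio) pair at every feature-map location. `keep_aspect_ratio_size` and `num_anchors` are hypothetical helpers.

```python
def keep_aspect_ratio_size(h, w, min_dim=600, max_dim=1024):
    """Approximate output size of keep_aspect_ratio_resizer."""
    scale = float(min_dim) / min(h, w)
    if max(h, w) * scale > max_dim:
        scale = float(max_dim) / max(h, w)
    return int(round(h * scale)), int(round(w * scale))


def num_anchors(h, w, stride=16, scales=(0.25, 0.5, 1.0, 2.0),
                aspect_ratios=(0.5, 1.0, 2.0)):
    """Return (resized_size, feature_map_size, anchor_count)."""
    rh, rw = keep_aspect_ratio_size(h, w)
    fh, fw = rh, rw
    # stride 8 -> pool1..pool3, stride 16 -> pool1..pool4.
    for _ in range({8: 3, 16: 4}[stride]):
        fh, fw = fh // 2, fw // 2
    per_location = len(scales) * len(aspect_ratios)
    return (rh, rw), (fh, fw), fh * fw * per_location


print(num_anchors(375, 500))    # ((600, 800), (37, 50), 22200)
print(num_anchors(1000, 2000))  # ((512, 1024), (32, 64), 24576)
```

With 12 anchors per location, `first_stage_max_proposals: 300` keeps only a small fraction of them after NMS.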

How to tune the hyperparameters is something I'll have to work out slowly...
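A natural starting point for tuning is the learning rate. The `manual_step_learning_rate` block in the config above is a piecewise-constant schedule. Below is a minimal sketch of it, assuming each new rate takes effect once the global step reaches its `step` boundary; `manual_step_lr` is a hypothetical helper, not the Object Detection API's implementation.

```python
def manual_step_lr(step, initial=0.0003,
                   schedule=((900000, 0.00003), (1200000, 0.000003))):
    """Piecewise-constant learning rate mirroring manual_step_learning_rate.

    schedule: (boundary_step, learning_rate) pairs in increasing step order.
    """
    lr = initial
    for boundary, value in schedule:
        if step >= boundary:
            lr = value
    return lr


for s in (0, 899999, 900000, 1200000, 1500000):
    print(s, manual_step_lr(s))
```

With `batch_size: 1`, the first drop happens only after 900,000 images, so shorter experiments never leave the initial rate.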