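These are study notes for the video course "Flink大数据项目实战" (Flink Big Data Projects in Practice). If you want to learn Flink, the most popular big data computing framework right now, systematically through video lessons, the recommended course is:

Flink大数据项目实战 (Flink Big Data Projects in Practice): http://t.cn/EJtKhaz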

1. Creating a Flink Project and Managing Dependencies

1.1 Creating a Flink Project

The official documentation describes two ways to create a Flink project:

https://ci.apache.org/projects/flink/flink-docs-release-1.6/quickstart/java_api_quickstart.html

Option 1:

    mvn archetype:generate \
      -DarchetypeGroupId=org.apache.flink \
      -DarchetypeArtifactId=flink-quickstart-java \
      -DarchetypeVersion=1.6.2

Option 2:

    curl https://flink.apache.org/q/quickstart.sh | bash -s 1.6.2

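Here we use the first option to create the Flink project.

Open a terminal, change to the target directory, and create the Flink project with Maven:

    mvn archetype:generate -DarchetypeGroupId=org.apache.flink -DarchetypeArtifactId=flink-quickstart-java -DarchetypeVersion=1.6.2

While the project is being generated, Maven prompts for the groupId, artifactId, version, and package.

The Flink project is then created.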
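If you would rather not answer the prompts interactively, Maven's batch mode (-B) lets you pass groupId, artifactId, version, and package as -D properties on the command line. The sketch below wraps that call in a bash function. The function name generate_project and the DRY_RUN switch (print the command instead of running it) are hypothetical helpers, and the coordinates in the example are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive version of the archetype command above.
# generate_project and DRY_RUN are hypothetical helpers, not part of Flink or Maven.
generate_project() {
  # $1=groupId  $2=artifactId  $3=version  $4=package
  local cmd=(mvn -B archetype:generate
    -DarchetypeGroupId=org.apache.flink
    -DarchetypeArtifactId=flink-quickstart-java
    -DarchetypeVersion=1.6.2
    -DgroupId="$1"
    -DartifactId="$2"
    -Dversion="$3"
    -Dpackage="$4")
  if [ "${DRY_RUN:-0}" = 1 ]; then
    # Only print the command that would be executed
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Example (placeholder coordinates):
# generate_project com.example flink-demo 0.1 com.example.flink
```

Setting DRY_RUN=1 first is a cheap way to check the generated command line before Maven starts downloading anything.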

Open IntelliJ IDEA and click Open.

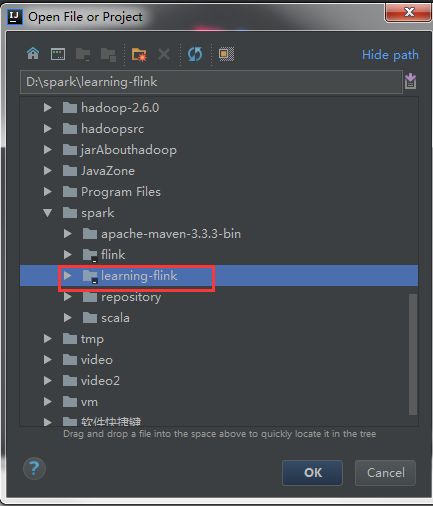

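Select the Flink project you just created.

The Flink project is now imported into IDEA.

Package the project with Maven to test the build:

    mvn clean package

Refresh the target directory to see the jar that was just built.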

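1.2 Flink Dependencies

Core Dependencies:

1. The core dependencies are bundled in flink-dist*.jar.

2. They contain what is needed to run the system: coordination, networking, checkpoints, failover, APIs, operations (such as windowing), resource management, and so on.

Note: core dependencies are not packaged with the application (scope provided).

3. The core dependencies are kept as small as possible to avoid dependency conflicts.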

Add the core dependencies to the pom file:

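    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>1.6.2</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_2.11</artifactId>
        <version>1.6.2</version>
        <scope>provided</scope>
    </dependency>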

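Note: these dependencies are not packaged with the application.

User Application Dependencies:

Connectors, formats, and libraries (CEP, SQL, ML).

Note: application dependencies are packaged with the application (just leave the scope at its default).

Add an application dependency to the pom file: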

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-kafka-0.10_2.11</artifactId>
        <version>1.6.2</version>
    </dependency>

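Note: choose application dependencies as needed. Because they are packaged with the application, you can build the application jar with the Maven Shade plugin.

1.3 About Scala Versions

Scala versions are not binary compatible with each other (a Flink application developed against Scala 2.12 cannot use dependencies built for Scala 2.11).

Developers who use only Java can pick any Scala version. Scala developers must pick the Scala version that matches their application's Scala version.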
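The Scala version appears as a suffix of the artifactId (for example _2.11 in flink-streaming-java_2.11), so one quick way to catch mixed versions is to collect the distinct suffixes in pom.xml. The bash sketch below is a rough grep-based heuristic, not a real POM parser. It assumes each artifactId entry sits on one line and only looks for suffixes _2.10 to _2.19. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Heuristic check (hypothetical helpers): list the distinct Scala suffixes
# such as _2.11 or _2.12 used by artifactIds in a pom.xml.
scala_suffixes() {
  grep -o '<artifactId>[^<]*_2\.1[0-9]</artifactId>' "$1" \
    | grep -o '_2\.1[0-9]' \
    | sort -u
}

# Fail (return 1) if more than one Scala suffix appears in the file.
check_scala_suffixes() {
  local count
  count=$(scala_suffixes "$1" | wc -l)
  if [ "$count" -gt 1 ]; then
    echo "mixed Scala suffixes in $1: $(scala_suffixes "$1" | tr '\n' ' ')" >&2
    return 1
  fi
  return 0
}

# Example: check_scala_suffixes pom.xml && echo "Scala suffixes are consistent"
```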

1.4 Hadoop Dependencies

Do not add Hadoop dependencies directly to a Flink application. Instead, set:

    export HADOOP_CLASSPATH=`hadoop classpath`

Flink components pick up this environment variable when they start.
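A slightly more defensive version of the export above only sets HADOOP_CLASSPATH when the hadoop command is actually on the PATH. This keeps a shell profile from exporting an empty value on machines without Hadoop. It is a hypothetical bash helper, not something Flink ships.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export HADOOP_CLASSPATH from `hadoop classpath`,
# but only if the hadoop command exists.
set_hadoop_classpath() {
  if ! command -v hadoop >/dev/null 2>&1; then
    echo "hadoop not found on PATH; HADOOP_CLASSPATH left unchanged" >&2
    return 1
  fi
  HADOOP_CLASSPATH="$(hadoop classpath)"
  export HADOOP_CLASSPATH
}

# Example (e.g. in ~/.bashrc before starting Flink):
# set_hadoop_classpath && echo "HADOOP_CLASSPATH is set"
```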

Special case: if the Flink application needs Hadoop input/output formats, add only the Hadoop compatibility wrappers:

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-hadoop-compatibility_2.11</artifactId>
        <version>1.6.2</version>
    </dependency>

1.5 Packaging a Flink Project

A Flink Maven project can be packaged with the maven-shade-plugin. The packaging command is mvn clean package.

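2. Building Flink Yourself

2.1 Installing Maven

1. Download

Download the installation package from the Maven website. Here we use apache-maven-3.3.9-bin.tar.gz.

2. Extract

Upload apache-maven-3.3.9-bin.tar.gz to a directory on the master node, then extract it with tar:

    tar -zxvf apache-maven-3.3.9-bin.tar.gz

3. Create a symbolic link

    ln -s apache-maven-3.3.9 maven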

4. Configure the environment variables

    vi ~/.bashrc

Add the following lines:

    export MAVEN_HOME=/home/hadoop/app/maven
    export PATH=$MAVEN_HOME/bin:$PATH

5. Apply the environment variables

    source ~/.bashrc

6. Check the Maven version

    mvn --version

7. Configure the Aliyun mirror in settings.xml

Add the Aliyun mirror to the mirrors section of settings.xml:

    <mirror>
        <id>nexus-osc</id>
        <mirrorOf>*</mirrorOf>
        <name>Nexus osc</name>
        <url>http://maven.aliyun.com/nexus/content/repositories/central</url>
    </mirror>
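Steps 4 and 5 above edit ~/.bashrc by hand. If you script this setup instead, appending the same export lines on every run would keep growing PATH. The hypothetical bash helper below appends a line only when that exact line is not already in the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file unless the exact line already exists.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage for the Maven setup in step 4 (single quotes keep $MAVEN_HOME and $PATH
# unexpanded, so they are evaluated each time ~/.bashrc is sourced):
# append_once ~/.bashrc 'export MAVEN_HOME=/home/hadoop/app/maven'
# append_once ~/.bashrc 'export PATH=$MAVEN_HOME/bin:$PATH'
```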

2.2 Installing the JDK

Building Flink requires JDK 8 or later. JDK 1.8 is already installed here, so the installation steps are not repeated. Check the version:

    [hadoop@cdh01 conf]$ java -version
    java version "1.8.0_51"
    Java(TM) SE Runtime Environment (build 1.8.0_51-b16)
    Java HotSpot(TM) 64-Bit Server VM (build 25.51-b03, mixed mode)
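To check the "JDK 8 or later" requirement in a script rather than by eye, you can parse the major version out of the java -version output. The sketch below is hypothetical. It assumes the usual first-line format: java version "1.8.0_51" for JDK 8 and earlier (the old 1.x scheme), or something like openjdk version "11.0.2" for JDK 9 and later.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Java major version from `java -version` output.
# "1.8.0_51" -> 8 (old 1.x scheme), "11.0.2" -> 11 (JDK 9+ scheme).
java_major() {
  local v
  v=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$v" in
    1.*) v=${v#1.}; echo "${v%%.*}" ;;
    *)   echo "${v%%[._-]*}" ;;
  esac
}

# Return 0 if the installed JDK is version 8 or later.
require_java8() {
  local major
  major=$(java_major "$(java -version 2>&1)")
  [ "${major:-0}" -ge 8 ]
}

# Example: require_java8 || echo "Building Flink needs JDK 8 or later" >&2
```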

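2.3 Downloading the Source Code

Go to GitHub (https://github.com/apache/flink) and copy the clone URL: https://github.com/apache/flink.git

Open a terminal on the Flink master node, go to /home/hadoop/opensource, and download the Flink source with git clone:

    git clone https://github.com/apache/flink.git

Error 1: if git is not installed on the Linux machine, you get the following error:

    bash: git: command not found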

Solution: install git as follows.

1. Install the packages needed to build git (run these as root):

    yum install curl-devel expat-devel gettext-devel openssl-devel zlib-devel
    yum install gcc perl-ExtUtils-MakeMaker

2. Remove any existing git:

    yum remove git

3. Download the git source

First install wget:

    yum -y install wget

Download the git source with wget:

    wget https://www.kernel.org/pub/software/scm/git/git-2.0.5.tar.gz

Extract it:

    tar xzf git-2.0.5.tar.gz

Build and install git:

    cd git-2.0.5
    make prefix=/usr/local/git all
    sudo make prefix=/usr/local/git install
    echo 'export PATH=$PATH:/usr/local/git/bin' >> ~/.bashrc
    source ~/.bashrc

Check the git version:

    git --version

Error 2: running git clone https://github.com/apache/flink.git fails with:

    Cloning into 'flink'...
    fatal: unable to access 'https://github.com/apache/flink.git/': SSL connect error

Solution:

Upgrade nss:

    yum update nss

2.4 Checking Out the Flink Version

List the available version tags:

    git tag

Check out the Flink version you need (here Flink 1.6.2):

    git checkout release-1.6.2
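Before you start a long build, it is worth confirming that HEAD really sits on the tag you meant. The hypothetical bash check below relies on git describe --tags --exact-match, which fails when HEAD is not exactly at a tag. If you only need this one version, git clone --branch release-1.6.2 --depth 1 https://github.com/apache/flink.git fetches just that tag and downloads much less than a full clone.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if HEAD of the current repository is
# exactly at the given tag (e.g. release-1.6.2).
on_tag() {
  local current
  current=$(git describe --tags --exact-match 2>/dev/null) || {
    echo "HEAD is not exactly at any tag" >&2
    return 1
  }
  if [ "$current" != "$1" ]; then
    echo "HEAD is at $current, expected $1" >&2
    return 1
  fi
}

# Example, run inside /home/hadoop/opensource/flink:
# on_tag release-1.6.2 && echo "ready to build"
```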

2.5 Building Flink

Go to the Flink source root directory, /home/hadoop/opensource/flink, and build Flink with Maven:

    mvn clean install -DskipTests -Dhadoop.version=2.6.0

The build fails:

    [INFO] BUILD FAILURE
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 06:58 min
    [INFO] Finished at: 2019-01-18T22:11:54-05:00
    [INFO] Final Memory: 106M/454M
    [INFO] ------------------------------------------------------------------------
    [ERROR] Failed to execute goal on project flink-mapr-fs: Could not resolve dependencies for project org.apache.flink:flink-mapr-fs:jar:1.6.2: Could not find artifact com.mapr.hadoop:maprfs:jar:5.2.1-mapr in nexus-osc (http://maven.aliyun.com/nexus/content/repositories/central) -> [Help 1]
    [ERROR]
    [ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
    [ERROR] Re-run Maven using the -X switch to enable full debug logging.
    [ERROR]
    [ERROR] For more information about the errors and possible solutions, please read the following articles:
    [ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException
    [ERROR]
    [ERROR] After correcting the problems, you can resume the build with the command
    [ERROR] mvn -rf :flink-mapr-fs

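The flink-mapr-fs module cannot resolve its dependency com.mapr.hadoop:maprfs:jar:5.2.1-mapr, which the configured mirror does not provide, so the jar has to be downloaded and installed manually.

Solution:

1. Download the maprfs jar

Download maprfs-5.2.1-mapr.jar manually from: https://repository.mapr.com/nexus/content/groups/mapr-public/com/mapr/hadoop/maprfs/5.2.1-mapr/

2. Upload it to the master node

Upload the downloaded maprfs-5.2.1-mapr.jar to the /home/hadoop/downloads directory on the master node.

3. Install it manually

Install the missing jar into the local Maven repository:

    mvn install:install-file -DgroupId=com.mapr.hadoop -DartifactId=maprfs -Dversion=5.2.1-mapr -Dpackaging=jar -Dfile=/home/hadoop/downloads/maprfs-5.2.1-mapr.jar

4. Resume the build

Resume the Maven build from flink-mapr-fs. The -rf (resume from) option skips the modules that were already built:

    mvn clean install -Dmaven.test.skip=true -Dhadoop.version=2.7.3 -rf :flink-mapr-fs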
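Steps 3 and 4 can be made safe to re-run. mvn install:install-file places the jar in the local repository at groupId-as-path/artifactId/version/artifactId-version.jar, so a script can skip the install when that file already exists. The sketch below assumes the default local repository ~/.m2/repository (a localRepository setting in settings.xml would change it). The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around `mvn install:install-file`.

# Print where a jar lives in a local Maven repository
# (default ~/.m2/repository; pass a 4th argument to override).
m2_jar_path() {
  local group="$1" artifact="$2" version="$3"
  local repo="${4:-$HOME/.m2/repository}"
  # groupId dots become directory separators: com.mapr.hadoop -> com/mapr/hadoop
  printf '%s/%s/%s/%s/%s-%s.jar\n' "$repo" "${group//.//}" "$artifact" "$version" "$artifact" "$version"
}

# Install a jar file into the local repository unless it is already there.
install_jar_if_missing() {
  local group="$1" artifact="$2" version="$3" file="$4"
  if [ -f "$(m2_jar_path "$group" "$artifact" "$version")" ]; then
    echo "already installed: $group:$artifact:$version"
    return 0
  fi
  mvn install:install-file -DgroupId="$group" -DartifactId="$artifact" \
    -Dversion="$version" -Dpackaging=jar -Dfile="$file"
}

# Example matching the steps above:
# install_jar_if_missing com.mapr.hadoop maprfs 5.2.1-mapr /home/hadoop/downloads/maprfs-5.2.1-mapr.jar
```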

The build fails again:

    [INFO] BUILD FAILURE
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 05:51 min
    [INFO] Finished at: 2019-01-18T22:39:20-05:00
    [INFO] Final Memory: 108M/480M
    [INFO] ------------------------------------------------------------------------
    [ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.1:compile (default-compile) on project flink-mapr-fs: Compilation failure: Compilation failure:
    [ERROR] /home/hadoop/opensource/flink/flink-filesystems/flink-mapr-fs/src/main/java/org/apache/flink/runtime/fs/maprfs/MapRFileSystem.java:[70,44] package org.apache.hadoop.fs does not exist
    [ERROR] /home/hadoop/opensource/flink/flink-filesystems/flink-mapr-fs/src/main/java/org/apache/flink/runtime/fs/maprfs/MapRFileSystem.java:[73,45] cannot find symbol
    [ERROR] symbol: class Configuration
    [ERROR] location: package org.apache.hadoop.conf
    [ERROR] /home/hadoop/opensource/flink/flink-filesystems/flink-mapr-fs/src/main/java/org/apache/flink/runtime/fs/maprfs/MapRFileSystem.java:[73,93] cannot find symbol
    [ERROR] symbol: class Configuration

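The Hadoop classes are missing from the compile classpath: the package org.apache.hadoop.fs does not exist, and the class Configuration in org.apache.hadoop.conf cannot be found.

Solution:

Add the following dependency to the pom file of the flink-mapr-fs module: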

    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>${hadoop.version}</version>
    </dependency>

Resume the build:

    mvn clean install -Dmaven.test.skip=true -Dhadoop.version=2.7.3 -rf :flink-mapr-fs

Another error:

    [ERROR] Failed to execute goal on project flink-avro-confluent-registry: Could not resolve dependencies for project org.apache.flink:flink-avro-confluent-registry:jar:1.6.2: Could not find artifact io.confluent:kafka-schema-registry-client:jar:3.3.1 in nexus-osc (http://maven.aliyun.com/nexus/content/repositories/central) -> [Help 1]
    [ERROR]

The kafka-schema-registry-client-3.3.1.jar package is missing.

Solution:

Download kafka-schema-registry-client-3.3.1.jar manually from:

http://packages.confluent.io/maven/io/confluent/kafka-schema-registry-client/3.3.1/kafka-schema-registry-client-3.3.1.jar
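Both download links in this section follow the standard Maven repository layout, so you can derive the URL of a missing jar from the coordinates in the error message (groupId:artifactId:jar:version). Below is a hypothetical bash sketch. The base URLs are the ones used in this article, and the sketch assumes the mapr repository serves the jar under the directory given earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a jar URL in a Maven-layout repository:
# <base>/<groupId with dots as slashes>/<artifactId>/<version>/<artifactId>-<version>.jar
maven_jar_url() {
  local base="${1%/}" group="$2" artifact="$3" version="$4"
  printf '%s/%s/%s/%s/%s-%s.jar\n' "$base" "${group//.//}" "$artifact" "$version" "$artifact" "$version"
}

# Download a jar into a target directory (default: current directory).
fetch_jar() {
  local url dest="${5:-.}"
  url=$(maven_jar_url "$1" "$2" "$3" "$4")
  # -f: fail on HTTP errors, -S: show errors, -L: follow redirects
  curl -fSL -o "$dest/${url##*/}" "$url"
}

# Example for the artifact missing above:
# fetch_jar http://packages.confluent.io/maven io.confluent kafka-schema-registry-client 3.3.1 /home/hadoop/downloads
```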

Upload the downloaded kafka-schema-registry-client-3.3.1.jar to the /home/hadoop/downloads directory on the master node.

Install the missing kafka-schema-registry-client-3.3.1.jar manually:

    mvn install:install-file -DgroupId=io.confluent -DartifactId=kafka-schema-registry-client -Dversion=3.3.1 -Dpackaging=jar -Dfile=/home/hadoop/downloads/kafka-schema-registry-client-3.3.1.jar

Resume the build:

    mvn clean install -Dmaven.test.skip=true -Dhadoop.version=2.7.3 -rf :flink-mapr-fs
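Each resume in this section restarts from a module named in the last lines of Maven's error output (the line ending in -rf :flink-mapr-fs). If you save the build output to a log, for example with mvn ... 2>&1 | tee build.log, a script can pull out that module name. A hypothetical bash sketch:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the module Maven suggests resuming from,
# taken from the last "-rf :<module>" occurrence in a saved build log.
resume_module() {
  grep -o -- '-rf :[A-Za-z0-9._-]*' "$1" | tail -n 1 | sed 's/^-rf ://'
}

# Example: rerun the build from the failed module (same options as above).
# mvn clean install -Dmaven.test.skip=true -Dhadoop.version=2.7.3 2>&1 | tee build.log
# module=$(resume_module build.log)
# [ -n "$module" ] && mvn clean install -Dmaven.test.skip=true -Dhadoop.version=2.7.3 -rf ":$module"
```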