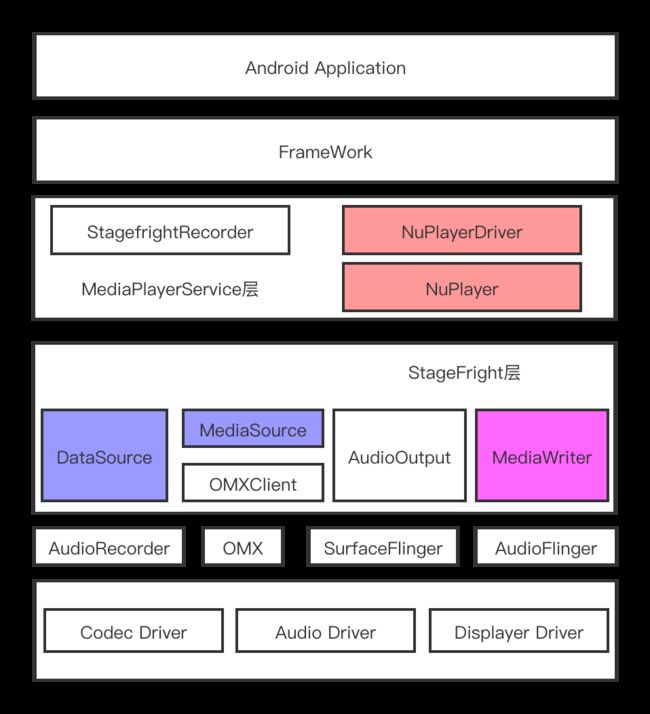

1. How MediaPlayerService relates to StageFright

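StageFright sits below MediaPlayerService. MediaPlayerService exposes a set of interfaces, and NuPlayer takes the calls coming from the upper layers and, going down, calls StageFright's parsing and decoding modules.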

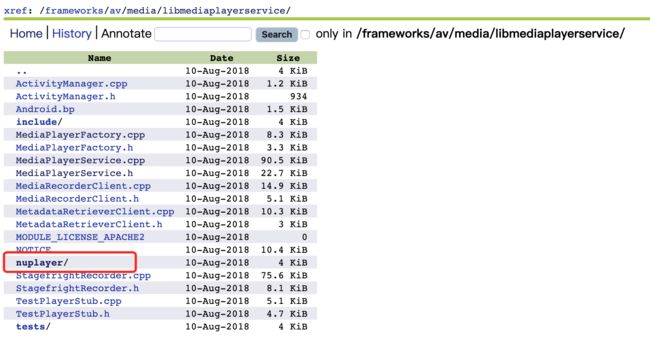

The code layout above shows that:

The MediaPlayerService code lives in /frameworks/av/media/libmediaplayerservice/

The StageFright code lives in /frameworks/av/media/libstagefright/

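The complete video playback process:

- demux the audio and video streams;

- decode the video stream with an OMX component;

- decode the audio stream with an audio decoder, e.g. AACDecoder or AC3Decoder;

- once decoding is done, synchronize audio and video;

- render the decoded video to the screen;

- play the decoded audio through the audio system.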

2. Anatomy of the NuPlayer video module

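/frameworks/av/media/libmediaplayerservice/MediaPlayerFactory.cpp instantiates a NuPlayerDriver object, and NuPlayerDriver creates the NuPlayer object.

NuPlayerDriver (/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerDriver.h) derives from MediaPlayerInterface (/frameworks/av/media/libmediaplayerservice/include/MediaPlayerInterface.h).

The framework layer calls into NuPlayerDriver, and NuPlayerDriver in turn talks to the lower layers.

The NuPlayer object is initialized in the NuPlayerDriver constructor.

NuPlayer (/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayer.h) derives from AHandler and uses AMessage together with an ALooper for its message handling. For example, prepareAsync() only posts a kWhatPrepare message; the actual work happens later in onMessageReceived: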

```cpp
void NuPlayer::prepareAsync() {
    ALOGV("prepareAsync");

    (new AMessage(kWhatPrepare, this))->post();
}

void NuPlayer::onMessageReceived(const sp<AMessage> &msg) {
    switch (msg->what()) {
        // ......

        case kWhatPrepare:
        {
            ALOGV("onMessageReceived kWhatPrepare");

            mSource->prepareAsync();
            break;
        }

        // ......
    }
}
```
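The prepareAsync/onMessageReceived pair above follows the foundation-library pattern: a call only posts a message, and the handler processes it later on its looper thread. Below is a minimal standard-C++ sketch of that idea. It is not the libstagefright API: `MiniMessage`, `MiniHandler`, `MiniLooper` and `ToyPlayer` are hypothetical names, and the real ALooper/AHandler/AMessage add delayed posts, replies and handler registration.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <string>
#include <thread>
#include <vector>

struct MiniHandler;

// Hypothetical stand-in for AMessage: a "what" code plus its target handler.
struct MiniMessage {
    int what;
    MiniHandler *target;
};

// Hypothetical stand-in for AHandler: subclasses override onMessageReceived.
struct MiniHandler {
    virtual ~MiniHandler() = default;
    virtual void onMessageReceived(const MiniMessage &msg) = 0;
};

// Hypothetical stand-in for ALooper: one thread draining a FIFO queue.
class MiniLooper {
public:
    MiniLooper() : mThread([this] { loop(); }) {}
    ~MiniLooper() {
        {
            std::lock_guard<std::mutex> lock(mLock);
            mStopping = true;
        }
        mCond.notify_one();
        mThread.join();  // messages already queued are delivered before exit
    }
    void post(const MiniMessage &msg) {
        {
            std::lock_guard<std::mutex> lock(mLock);
            mQueue.push_back(msg);
        }
        mCond.notify_one();
    }

private:
    void loop() {
        for (;;) {
            MiniMessage msg;
            {
                std::unique_lock<std::mutex> lock(mLock);
                mCond.wait(lock, [this] { return mStopping || !mQueue.empty(); });
                if (mQueue.empty()) return;  // stopping and fully drained
                msg = mQueue.front();
                mQueue.pop_front();
            }
            msg.target->onMessageReceived(msg);  // runs on the looper thread
        }
    }
    std::mutex mLock;
    std::condition_variable mCond;
    std::deque<MiniMessage> mQueue;
    bool mStopping = false;
    std::thread mThread;  // declared last so it starts after the other members
};

// Toy handler mirroring NuPlayer::prepareAsync -> kWhatPrepare.
struct ToyPlayer : MiniHandler {
    enum { kWhatPrepare = 1 };
    std::vector<std::string> log;
    void prepareAsync(MiniLooper &looper) { looper.post({kWhatPrepare, this}); }
    void onMessageReceived(const MiniMessage &msg) override {
        switch (msg.what) {
            case kWhatPrepare:
                log.push_back("prepare");  // NuPlayer calls mSource->prepareAsync() here
                break;
            default:
                break;
        }
    }
};
```

Calling `prepareAsync` returns immediately; the `kWhatPrepare` case runs later on the looper thread, which is how NuPlayer can offer asynchronous entry points without blocking its caller.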

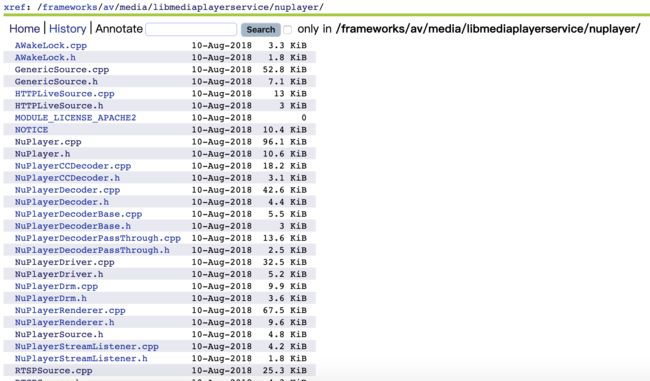

2.1 NuPlayer data parsing

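The video URL is passed in through NuPlayer->setDataSourceAsync.

As introduced in the previous chapter:

- HTTPLiveSource: for m3u8 (HLS) streams. Live streaming actually comes in more formats than m3u8, and MediaPlayer's support for them is limited;

- RTSPSource: for RTSP streams; MediaPlayer has dedicated optimizations for RTSP;

- GenericSource: the general-purpose source; ordinary http, https and file:/// URLs go through it.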
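Which Source NuPlayer builds is decided from the URL when setDataSourceAsync is called. The sketch below is a simplified approximation of that dispatch, not the AOSP code: `SourceKind` and `pickSourceKind` are hypothetical, and the sketch ignores cases such as SDP files served over HTTP or local .m3u8 files.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>

enum class SourceKind { HTTPLive, RTSP, Generic };

// Case-insensitive "starts with".
static bool startsWithNoCase(const std::string &s, const std::string &prefix) {
    if (s.size() < prefix.size()) return false;
    for (std::size_t i = 0; i < prefix.size(); ++i) {
        if (std::tolower(static_cast<unsigned char>(s[i])) !=
            std::tolower(static_cast<unsigned char>(prefix[i]))) {
            return false;
        }
    }
    return true;
}

// Hypothetical, simplified version of the URL -> Source decision.
SourceKind pickSourceKind(const std::string &url) {
    bool httpLike = startsWithNoCase(url, "http://") || startsWithNoCase(url, "https://");
    // HLS: an http(s) URL mentioning "m3u8" (covers a ".m3u8" suffix and query strings).
    if (httpLike && url.find("m3u8") != std::string::npos) return SourceKind::HTTPLive;
    if (startsWithNoCase(url, "rtsp://")) return SourceKind::RTSP;
    return SourceKind::Generic;  // progressive http/https, file://, ...
}
```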

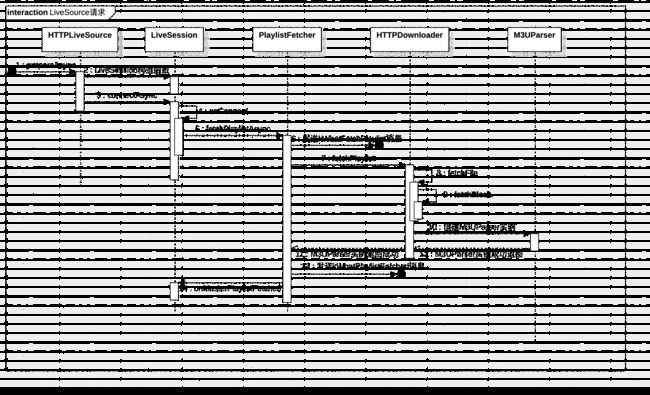

HTTPLiveSource resource parsing involves the following files:

/frameworks/av/media/libmediaplayerservice/nuplayer/HTTPLiveSource.h

/frameworks/av/media/libstagefright/httplive/LiveSession.h

/frameworks/av/media/libstagefright/httplive/HTTPDownloader.h

/frameworks/av/media/libstagefright/httplive/M3UParser.h

/frameworks/av/media/libstagefright/httplive/PlaylistFetcher.h


NuPlayer->setDataSourceAsync only constructs the Source; the network request is actually issued by NuPlayer->prepareAsync, which reaches HTTPLiveSource::prepareAsync:

```cpp
void NuPlayer::HTTPLiveSource::prepareAsync() {
    if (mLiveLooper == NULL) {
        mLiveLooper = new ALooper;
        mLiveLooper->setName("http live");
        mLiveLooper->start();

        mLiveLooper->registerHandler(this);
    }

    sp<AMessage> notify = new AMessage(kWhatSessionNotify, this);

    mLiveSession = new LiveSession(
            notify,
            (mFlags & kFlagIncognito) ? LiveSession::kFlagIncognito : 0,
            mHTTPService);

    mLiveLooper->registerHandler(mLiveSession);

    mLiveSession->setBufferingSettings(mBufferingSettings);
    mLiveSession->connectAsync(
            mURL.c_str(), mExtraHeaders.isEmpty() ? NULL : &mExtraHeaders);
}
```

It creates a LiveSession instance and then calls LiveSession->connectAsync:

```cpp
void LiveSession::connectAsync(
        const char *url, const KeyedVector<String8, String8> *headers) {
    sp<AMessage> msg = new AMessage(kWhatConnect, this);
    msg->setString("url", url);

    if (headers != NULL) {
        msg->setPointer(
                "headers",
                new KeyedVector<String8, String8>(*headers));
    }

    msg->post();
}
```

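LiveSession->connectAsync posts an asynchronous kWhatConnect message, which is handled on the ALooper. On receiving it, LiveSession calls PlaylistFetcher->fetchPlaylistAsync, which in turn posts an asynchronous kWhatFetchPlaylist message, handled in PlaylistFetcher: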

```cpp
case kWhatFetchPlaylist:
{
    bool unchanged;
    sp<M3UParser> playlist = mHTTPDownloader->fetchPlaylist(
            mURI.c_str(), NULL /* curPlaylistHash */, &unchanged);

    sp<AMessage> notify = mNotify->dup();
    notify->setInt32("what", kWhatPlaylistFetched);
    notify->setObject("playlist", playlist);
    notify->post();
    break;
}
```

The request yields an M3UParser object, which is posted back to the LiveSession class:

```cpp
case PlaylistFetcher::kWhatPlaylistFetched:
{
    onMasterPlaylistFetched(msg);
    break;
}
```

onMasterPlaylistFetched processes the M3U data returned by the request.

Now back to the mHTTPDownloader->fetchPlaylist function:

```cpp
sp<M3UParser> HTTPDownloader::fetchPlaylist(
        const char *url, uint8_t *curPlaylistHash, bool *unchanged) {
    ALOGV("fetchPlaylist '%s'", url);

    *unchanged = false;

    sp<ABuffer> buffer;
    String8 actualUrl;
    ssize_t err = fetchFile(url, &buffer, &actualUrl);

    // close off the connection after use
    mHTTPDataSource->disconnect();

    if (err <= 0) {
        return NULL;
    }

    // MD5 functionality is not available on the simulator, treat all
    // playlists as changed.

#if defined(__ANDROID__)
    uint8_t hash[16];

    MD5_CTX m;
    MD5_Init(&m);
    MD5_Update(&m, buffer->data(), buffer->size());

    MD5_Final(hash, &m);

    if (curPlaylistHash != NULL && !memcmp(hash, curPlaylistHash, 16)) {
        // playlist unchanged
        *unchanged = true;

        return NULL;
    }
#endif

    sp<M3UParser> playlist =
        new M3UParser(actualUrl.string(), buffer->data(), buffer->size());

    if (playlist->initCheck() != OK) {
        ALOGE("failed to parse .m3u8 playlist");

        return NULL;
    }

#if defined(__ANDROID__)
    if (curPlaylistHash != NULL) {

        memcpy(curPlaylistHash, hash, sizeof(hash));
    }
#endif

    return playlist;
}
```

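`ssize_t err = fetchFile(url, &buffer, &actualUrl);` issues the request. If the download succeeds and (on Android) its MD5 hash differs from the previous fetch, an M3UParser object is then created from the downloaded buffer.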
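fetchPlaylist skips re-parsing when the downloaded playlist is byte-for-byte identical to the previous one, by comparing hashes. The sketch below mirrors only that control flow. Assumptions: FNV-1a replaces MD5 purely to keep the example short (it is not collision resistant), the playlist is a `std::string`, and `playlistIfChanged` is a hypothetical helper that returns the text instead of building an M3UParser.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <string>

// FNV-1a 64-bit, a short stand-in for the MD5 used by HTTPDownloader.
uint64_t fnv1a64(const std::string &data) {
    uint64_t h = 1469598103934665603ull;  // FNV offset basis
    for (unsigned char c : data) {
        h ^= c;
        h *= 1099511628211ull;            // FNV prime
    }
    return h;
}

// Returns the playlist only if it differs from the last fetch; updates *curHash then.
std::optional<std::string> playlistIfChanged(const std::string &body,
                                             std::optional<uint64_t> *curHash,
                                             bool *unchanged) {
    *unchanged = false;
    uint64_t h = fnv1a64(body);
    if (curHash != nullptr && curHash->has_value() && **curHash == h) {
        *unchanged = true;
        return std::nullopt;                 // like "return NULL" with *unchanged == true
    }
    if (curHash != nullptr) *curHash = h;    // remembered for the next refresh
    return body;                             // the real code builds an M3UParser here
}
```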

M3UParser is declared in /frameworks/av/media/libstagefright/httplive/M3UParser.h; its core data members are:

```cpp
struct Item {
    AString mURI;
    sp<AMessage> mMeta;
};

status_t mInitCheck;

AString mBaseURI;
bool mIsExtM3U;
bool mIsVariantPlaylist;
bool mIsComplete;
bool mIsEvent;
int32_t mFirstSeqNumber;
int32_t mLastSeqNumber;
int64_t mTargetDurationUs;
size_t mDiscontinuitySeq;
int32_t mDiscontinuityCount;

sp<AMessage> mMeta;
Vector<Item> mItems;
ssize_t mSelectedIndex;

// Media groups keyed by group ID.
KeyedVector<AString, sp<MediaGroup> > mMediaGroups;
```
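To see where `mItems` and the per-item `bandwidth`, `width` and `height` values read later in onMasterPlaylistFetched come from, here is a deliberately tiny master-playlist parser. It is a hypothetical sketch, not M3UParser: it handles only `#EXT-X-STREAM-INF` with `BANDWIDTH` and `RESOLUTION`, stores them in a plain struct (`VariantItem`) instead of an AMessage, and ignores every other tag.

```cpp
#include <cassert>
#include <cstdint>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical, simplified analogue of M3UParser::Item for a master playlist.
struct VariantItem {
    std::string uri;
    int64_t bandwidth = 0;
    int32_t width = 0, height = 0;  // stay 0 if RESOLUTION is absent
};

// Parses KEY=VALUE pairs, honouring quoted values such as CODECS="a,b".
static void parseStreamInf(const std::string &attrs, VariantItem *item) {
    size_t i = 0;
    while (i < attrs.size()) {
        size_t eq = attrs.find('=', i);
        if (eq == std::string::npos) break;
        std::string key = attrs.substr(i, eq - i);
        size_t j = eq + 1;
        std::string value;
        if (j < attrs.size() && attrs[j] == '"') {
            size_t close = attrs.find('"', j + 1);
            if (close == std::string::npos) close = attrs.size();
            value = attrs.substr(j + 1, close - j - 1);
            j = close + 1;
        } else {
            size_t comma = attrs.find(',', j);
            if (comma == std::string::npos) comma = attrs.size();
            value = attrs.substr(j, comma - j);
            j = comma;
        }
        if (key == "BANDWIDTH") {
            item->bandwidth = std::stoll(value);
        } else if (key == "RESOLUTION") {
            size_t x = value.find('x');
            if (x != std::string::npos) {
                item->width = std::stoi(value.substr(0, x));
                item->height = std::stoi(value.substr(x + 1));
            }
        }
        i = (j < attrs.size() && attrs[j] == ',') ? j + 1 : j;  // skip the separator
    }
}

std::vector<VariantItem> parseMasterPlaylist(const std::string &text, bool *isVariant) {
    const std::string tag = "#EXT-X-STREAM-INF:";
    std::vector<VariantItem> items;
    std::istringstream in(text);
    std::string line;
    VariantItem cur;
    bool pending = false;
    *isVariant = false;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();  // tolerate CRLF
        if (line.empty()) continue;
        if (line.compare(0, tag.size(), tag) == 0) {
            cur = VariantItem();
            parseStreamInf(line.substr(tag.size()), &cur);
            pending = true;
            *isVariant = true;
        } else if (line[0] != '#' && pending) {
            cur.uri = line;  // the URI line that follows the tag
            items.push_back(cur);
            pending = false;
        }
    }
    return items;
}
```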

Here is LiveSession::onMasterPlaylistFetched:

```cpp
void LiveSession::onMasterPlaylistFetched(const sp<AMessage> &msg) {
    AString uri;
    CHECK(msg->findString("uri", &uri));
    ssize_t index = mFetcherInfos.indexOfKey(uri);
    if (index < 0) {
        ALOGW("fetcher for master playlist is gone.");
        return;
    }

    // no longer useful, remove
    mFetcherLooper->unregisterHandler(mFetcherInfos[index].mFetcher->id());
    mFetcherInfos.removeItemsAt(index);

    CHECK(msg->findObject("playlist", (sp<RefBase> *)&mPlaylist));
    if (mPlaylist == NULL) {
        ALOGE("unable to fetch master playlist %s.",
                uriDebugString(mMasterURL).c_str());

        postPrepared(ERROR_IO);
        return;
    }
    // We trust the content provider to make a reasonable choice of preferred
    // initial bandwidth by listing it first in the variant playlist.
    // At startup we really don't have a good estimate on the available
    // network bandwidth since we haven't tranferred any data yet. Once
    // we have we can make a better informed choice.
    size_t initialBandwidth = 0;
    size_t initialBandwidthIndex = 0;

    int32_t maxWidth = 0;
    int32_t maxHeight = 0;

    if (mPlaylist->isVariantPlaylist()) {
        Vector<BandwidthItem> itemsWithVideo;
        for (size_t i = 0; i < mPlaylist->size(); ++i) {
            BandwidthItem item;

            item.mPlaylistIndex = i;
            item.mLastFailureUs = -1ll;

            sp<AMessage> meta;
            AString uri;
            mPlaylist->itemAt(i, &uri, &meta);

            CHECK(meta->findInt32("bandwidth", (int32_t *)&item.mBandwidth));

            int32_t width, height;
            if (meta->findInt32("width", &width)) {
                maxWidth = max(maxWidth, width);
            }
            if (meta->findInt32("height", &height)) {
                maxHeight = max(maxHeight, height);
            }

            mBandwidthItems.push(item);
            if (mPlaylist->hasType(i, "video")) {
                itemsWithVideo.push(item);
            }
        }
        // remove the audio-only variants if we have at least one with video
        if (!itemsWithVideo.empty()
                && itemsWithVideo.size() < mBandwidthItems.size()) {
            mBandwidthItems.clear();
            for (size_t i = 0; i < itemsWithVideo.size(); ++i) {
                mBandwidthItems.push(itemsWithVideo[i]);
            }
        }

        CHECK_GT(mBandwidthItems.size(), 0u);
        initialBandwidth = mBandwidthItems[0].mBandwidth;

        mBandwidthItems.sort(SortByBandwidth);

        for (size_t i = 0; i < mBandwidthItems.size(); ++i) {
            if (mBandwidthItems.itemAt(i).mBandwidth == initialBandwidth) {
                initialBandwidthIndex = i;
                break;
            }
        }
    } else {
        // dummy item.
        BandwidthItem item;
        item.mPlaylistIndex = 0;
        item.mBandwidth = 0;
        mBandwidthItems.push(item);
    }

    mMaxWidth = maxWidth > 0 ? maxWidth : mMaxWidth;
    mMaxHeight = maxHeight > 0 ? maxHeight : mMaxHeight;

    mPlaylist->pickRandomMediaItems();
    changeConfiguration(
            0ll /* timeUs */, initialBandwidthIndex, false /* pickTrack */);
}
```

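This reads the data parsed by M3UParser: for a variant (master) playlist it collects each variant's bandwidth, width, height and other m3u8 attributes, drops audio-only variants when at least one variant has video, sorts the variants by bandwidth, and starts with the variant the playlist lists first. From changeConfiguration onward, the TS segments listed in the m3u8 are fetched and parsed.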
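The variant-selection part of onMasterPlaylistFetched can be restated in standard C++ as follows. `BandwidthItem` here is a hypothetical, simplified struct (the real one lives in LiveSession), and the sort assumes that SortByBandwidth orders variants by ascending bandwidth.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical simplified variant entry.
struct BandwidthItem {
    std::size_t playlistIndex;
    long long bandwidth;
    bool hasVideo;
};

// 1) drop audio-only variants if at least one variant has video,
// 2) remember the bandwidth of the first listed variant,
// 3) sort by ascending bandwidth, 4) return the sorted index of that first variant.
std::size_t pickInitialBandwidthIndex(std::vector<BandwidthItem> *items) {
    std::vector<BandwidthItem> withVideo;
    for (const auto &it : *items) {
        if (it.hasVideo) withVideo.push_back(it);
    }
    if (!withVideo.empty() && withVideo.size() < items->size()) {
        *items = withVideo;
    }
    assert(!items->empty());  // CHECK_GT(mBandwidthItems.size(), 0u) in the original
    long long initial = (*items)[0].bandwidth;
    std::sort(items->begin(), items->end(),
              [](const BandwidthItem &a, const BandwidthItem &b) {
                  return a.bandwidth < b.bandwidth;
              });
    for (std::size_t i = 0; i < items->size(); ++i) {
        if ((*items)[i].bandwidth == initial) return i;
    }
    return 0;
}
```

Starting from the first-listed variant rather than the lowest one reflects the comment in the original: at startup there is no bandwidth estimate yet, so the content provider's ordering is trusted.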

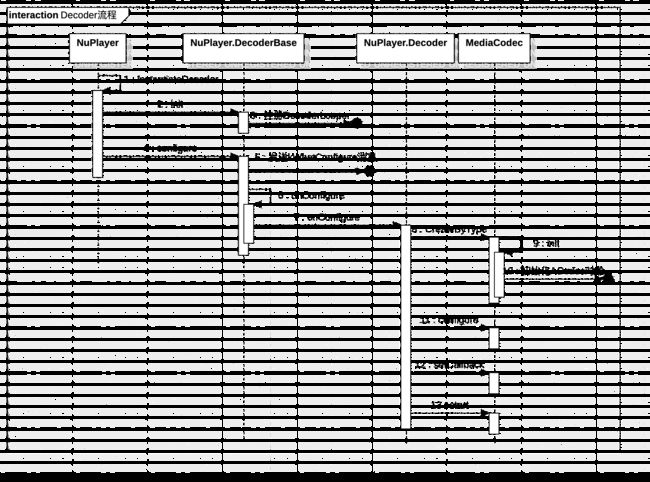

2.2 NuPlayer decoding module

/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerDecoderBase.cpp

/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerDecoder.cpp

/frameworks/av/media/libstagefright/MediaCodec.cpp

/frameworks/av/media/libstagefright/ACodec.cpp

NuPlayer::Decoder (/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerDecoder.cpp) derives from NuPlayer::DecoderBase (/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerDecoderBase.cpp).

Decoder initialization is performed in NuPlayer->instantiateDecoder:

```cpp
status_t NuPlayer::instantiateDecoder(
        bool audio, sp<DecoderBase> *decoder, bool checkAudioModeChange) {
    // The audio decoder could be cleared by tear down. If still in shut down
    // process, no need to create a new audio decoder.
    if (*decoder != NULL || (audio && mFlushingAudio == SHUT_DOWN)) {
        return OK;
    }

    sp<AMessage> format = mSource->getFormat(audio);

    if (format == NULL) {
        return UNKNOWN_ERROR;
    } else {
        status_t err;
        if (format->findInt32("err", &err) && err) {
            return err;
        }
    }

    format->setInt32("priority", 0 /* realtime */);

    if (!audio) {
        AString mime;
        CHECK(format->findString("mime", &mime));

        sp<AMessage> ccNotify = new AMessage(kWhatClosedCaptionNotify, this);
        if (mCCDecoder == NULL) {
            mCCDecoder = new CCDecoder(ccNotify);
        }

        if (mSourceFlags & Source::FLAG_SECURE) {
            format->setInt32("secure", true);
        }

        if (mSourceFlags & Source::FLAG_PROTECTED) {
            format->setInt32("protected", true);
        }

        float rate = getFrameRate();
        if (rate > 0) {
            format->setFloat("operating-rate", rate * mPlaybackSettings.mSpeed);
        }
    }

    if (audio) {
        sp<AMessage> notify = new AMessage(kWhatAudioNotify, this);
        ++mAudioDecoderGeneration;
        notify->setInt32("generation", mAudioDecoderGeneration);

        if (checkAudioModeChange) {
            determineAudioModeChange(format);
        }
        if (mOffloadAudio) {
            mSource->setOffloadAudio(true /* offload */);

            const bool hasVideo = (mSource->getFormat(false /*audio */) != NULL);
            format->setInt32("has-video", hasVideo);
            *decoder = new DecoderPassThrough(notify, mSource, mRenderer);
            ALOGV("instantiateDecoder audio DecoderPassThrough hasVideo: %d", hasVideo);
        } else {
            mSource->setOffloadAudio(false /* offload */);

            *decoder = new Decoder(notify, mSource, mPID, mUID, mRenderer);
            ALOGV("instantiateDecoder audio Decoder");
        }
        mAudioDecoderError = false;
    } else {
        sp<AMessage> notify = new AMessage(kWhatVideoNotify, this);
        ++mVideoDecoderGeneration;
        notify->setInt32("generation", mVideoDecoderGeneration);

        *decoder = new Decoder(
                notify, mSource, mPID, mUID, mRenderer, mSurface, mCCDecoder);
        mVideoDecoderError = false;

        // enable FRC if high-quality AV sync is requested, even if not
        // directly queuing to display, as this will even improve textureview
        // playback.
        {
            if (property_get_bool("persist.sys.media.avsync", false)) {
                format->setInt32("auto-frc", 1);
            }
        }
    }
    (*decoder)->init();

    // ......

    (*decoder)->configure(format);

    // ......

    return OK;
}
```

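The function distinguishes between audio and video streams.

For a video stream it creates a Decoder object, i.e. NuPlayer::Decoder from /frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerDecoder.cpp. Audio also gets a Decoder unless it is offloaded, in which case a DecoderPassThrough is created instead.

Decoder's base class is defined in /frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerDecoderBase.cpp.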
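instantiateDecoder bumps mAudioDecoderGeneration or mVideoDecoderGeneration and stamps the value into the new decoder's notify message. A hedged sketch of why this helps: when a decoder is torn down and recreated, notifications still in flight from the old one carry an outdated generation and can simply be ignored. `GenerationTracker` and `DecoderNotify` are hypothetical names; the renderer's drainGeneration and queueGeneration checks follow the same idea.

```cpp
#include <cassert>
#include <cstdint>

// A notification as seen by the player: which stream, and which decoder instance sent it.
struct DecoderNotify {
    bool audio;
    int32_t generation;
};

class GenerationTracker {
public:
    // Called when a new decoder is created (like ++mAudioDecoderGeneration).
    int32_t newDecoder(bool audio) { return audio ? ++mAudioGen : ++mVideoGen; }

    // True only for notifications from the decoder created most recently.
    bool isCurrent(const DecoderNotify &n) const {
        return n.generation == (n.audio ? mAudioGen : mVideoGen);
    }

private:
    int32_t mAudioGen = 0;
    int32_t mVideoGen = 0;
};
```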

The key steps of decoder->configure run in NuPlayer::Decoder::onConfigure:

```cpp
void NuPlayer::Decoder::onConfigure(const sp<AMessage> &format) {
    CHECK(mCodec == NULL);

    mFormatChangePending = false;
    mTimeChangePending = false;

    ++mBufferGeneration;

    AString mime;
    CHECK(format->findString("mime", &mime));

    mIsAudio = !strncasecmp("audio/", mime.c_str(), 6);
    mIsVideoAVC = !strcasecmp(MEDIA_MIMETYPE_VIDEO_AVC, mime.c_str());

    mComponentName = mime;
    mComponentName.append(" decoder");
    ALOGV("[%s] onConfigure (surface=%p)", mComponentName.c_str(), mSurface.get());

    mCodec = MediaCodec::CreateByType(
            mCodecLooper, mime.c_str(), false /* encoder */, NULL /* err */, mPid, mUid);
    int32_t secure = 0;
    if (format->findInt32("secure", &secure) && secure != 0) {
        if (mCodec != NULL) {
            mCodec->getName(&mComponentName);
            mComponentName.append(".secure");
            mCodec->release();
            ALOGI("[%s] creating", mComponentName.c_str());
            mCodec = MediaCodec::CreateByComponentName(
                    mCodecLooper, mComponentName.c_str(), NULL /* err */, mPid, mUid);
        }
    }
    if (mCodec == NULL) {
        ALOGE("Failed to create %s%s decoder",
                (secure ? "secure " : ""), mime.c_str());
        handleError(UNKNOWN_ERROR);
        return;
    }
    mIsSecure = secure;

    mCodec->getName(&mComponentName);

    status_t err;
    if (mSurface != NULL) {
        // disconnect from surface as MediaCodec will reconnect
        err = nativeWindowDisconnect(mSurface.get(), "onConfigure");
        // We treat this as a warning, as this is a preparatory step.
        // Codec will try to connect to the surface, which is where
        // any error signaling will occur.
        ALOGW_IF(err != OK, "failed to disconnect from surface: %d", err);
    }

    // Modular DRM
    void *pCrypto;
    if (!format->findPointer("crypto", &pCrypto)) {
        pCrypto = NULL;
    }
    sp<ICrypto> crypto = (ICrypto*)pCrypto;
    // non-encrypted source won't have a crypto
    mIsEncrypted = (crypto != NULL);
    // configure is called once; still using OR in case the behavior changes.
    mIsEncryptedObservedEarlier = mIsEncryptedObservedEarlier || mIsEncrypted;
    ALOGV("onConfigure mCrypto: %p (%d) mIsSecure: %d",
            crypto.get(), (crypto != NULL ? crypto->getStrongCount() : 0), mIsSecure);

    err = mCodec->configure(
            format, mSurface, crypto, 0 /* flags */);

    if (err != OK) {
        ALOGE("Failed to configure [%s] decoder (err=%d)", mComponentName.c_str(), err);
        mCodec->release();
        mCodec.clear();
        handleError(err);
        return;
    }
    rememberCodecSpecificData(format);

    // the following should work in configured state
    CHECK_EQ((status_t)OK, mCodec->getOutputFormat(&mOutputFormat));
    CHECK_EQ((status_t)OK, mCodec->getInputFormat(&mInputFormat));

    mStats->setString("mime", mime.c_str());
    mStats->setString("component-name", mComponentName.c_str());

    if (!mIsAudio) {
        int32_t width, height;
        if (mOutputFormat->findInt32("width", &width)
                && mOutputFormat->findInt32("height", &height)) {
            mStats->setInt32("width", width);
            mStats->setInt32("height", height);
        }
    }

    sp<AMessage> reply = new AMessage(kWhatCodecNotify, this);
    mCodec->setCallback(reply);

    err = mCodec->start();
    if (err != OK) {
        ALOGE("Failed to start [%s] decoder (err=%d)", mComponentName.c_str(), err);
        mCodec->release();
        mCodec.clear();
        handleError(err);
        return;
    }

    releaseAndResetMediaBuffers();

    mPaused = false;
    mResumePending = false;
}
```

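onConfigure first calls MediaCodec::CreateByType to create a suitable codec instance; if the format is marked secure, the codec is re-created by component name with a ".secure" suffix.

This is where /frameworks/av/media/libstagefright/MediaCodec.cpp comes in as the decoder.

Configure the decoder: mCodec->configure

Set the decoder callback: mCodec->setCallback(reply)

Start the decoder: mCodec->start();

The StageFright decoding module is fairly complex and requires knowledge of low-level decoding, so it will be analyzed later; for now we only look at the overall flow.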
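The order used by onConfigure (create, configure, set the callback, start, and release on any failure) can be illustrated with a toy state machine. `ToyCodec` and `configureAndStart` are hypothetical and far simpler than MediaCodec, which has more states (for example flushed and error states) and returns status codes rather than `bool`.

```cpp
#include <cassert>

enum class CodecState { Uninitialized, Configured, Started, Released };

// Toy codec that only enforces Uninitialized -> Configured -> Started.
class ToyCodec {
public:
    bool configure(bool formatSupported) {
        if (mState != CodecState::Uninitialized || !formatSupported) return false;
        mState = CodecState::Configured;
        return true;
    }
    bool start() {
        if (mState != CodecState::Configured) return false;
        mState = CodecState::Started;
        return true;
    }
    void release() { mState = CodecState::Released; }
    CodecState state() const { return mState; }

private:
    CodecState mState = CodecState::Uninitialized;
};

// Mirrors onConfigure's error handling: any failure releases the codec.
bool configureAndStart(ToyCodec *codec, bool formatSupported) {
    if (!codec->configure(formatSupported)) {
        codec->release();  // onConfigure: mCodec->release(); mCodec.clear(); handleError(err);
        return false;
    }
    // onConfigure registers the async callback (setCallback) at this point, before start().
    if (!codec->start()) {
        codec->release();
        return false;
    }
    return true;
}
```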

2.3 NuPlayer rendering module

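Once the audio and video data have been decoded, they must be played or displayed; that is the rendering module's job:

- buffer the raw audio and video data in queues;

- play the audio data;

- display the video data;

- keep audio and video in sync;

- handle playback control.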

/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerRenderer.h defines an audio queue and a video queue, which hold the audio and video data respectively:

```cpp
// if mBuffer != nullptr, it's a buffer containing real data.
// else if mNotifyConsumed == nullptr, it's EOS.
// else it's a tag for re-opening audio sink in different format.
struct QueueEntry {
    sp<MediaCodecBuffer> mBuffer;
    sp<AMessage> mMeta;
    sp<AMessage> mNotifyConsumed;
    size_t mOffset;
    status_t mFinalResult;
    int32_t mBufferOrdinal;
};

// ......

List<QueueEntry> mAudioQueue;
List<QueueEntry> mVideoQueue;
```

Decoded audio and video data are placed in these two queues and then taken out and processed in order.
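The comment on QueueEntry packs three meanings into two pointers. The sketch below spells that rule out; `Buffer` and `Message` are hypothetical stand-ins for `sp<MediaCodecBuffer>` and `sp<AMessage>`, modeled with `std::shared_ptr`, and `classify` is a hypothetical helper.

```cpp
#include <cassert>
#include <memory>

struct Buffer {};   // stand-in for MediaCodecBuffer
struct Message {};  // stand-in for AMessage

struct QueueEntry {
    std::shared_ptr<Buffer> mBuffer;
    std::shared_ptr<Message> mNotifyConsumed;
};

enum class EntryKind { Data, EndOfStream, ReopenAudioSink };

// Encodes the rule from the comment above QueueEntry in NuPlayerRenderer.h.
EntryKind classify(const QueueEntry &e) {
    if (e.mBuffer != nullptr) return EntryKind::Data;
    if (e.mNotifyConsumed == nullptr) return EntryKind::EndOfStream;
    return EntryKind::ReopenAudioSink;  // tag: re-open the audio sink in a new format
}
```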

The audio/video rendering module is implemented in

/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerRenderer.h

/frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerRenderer.cpp

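A good place to start is the enqueue path: when MediaCodec delivers a decoded output buffer, NuPlayer::Decoder (in handleAnOutputBuffer) hands it to the renderer through Renderer::queueBuffer, which posts kWhatQueueBuffer to be handled by onQueueBuffer: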

```cpp
void NuPlayer::Renderer::queueBuffer(
        bool audio,
        const sp<MediaCodecBuffer> &buffer,
        const sp<AMessage> &notifyConsumed) {
    sp<AMessage> msg = new AMessage(kWhatQueueBuffer, this);
    msg->setInt32("queueGeneration", getQueueGeneration(audio));
    msg->setInt32("audio", static_cast<int32_t>(audio));
    msg->setObject("buffer", buffer);
    msg->setMessage("notifyConsumed", notifyConsumed);
    msg->post();
}

void NuPlayer::Renderer::onQueueBuffer(const sp<AMessage> &msg) {
    int32_t audio;
    CHECK(msg->findInt32("audio", &audio));

    if (dropBufferIfStale(audio, msg)) {
        return;
    }

    if (audio) {
        mHasAudio = true;
    } else {
        mHasVideo = true;
    }

    if (mHasVideo) {
        if (mVideoScheduler == NULL) {
            mVideoScheduler = new VideoFrameScheduler();
            mVideoScheduler->init();
        }
    }

    sp<RefBase> obj;
    CHECK(msg->findObject("buffer", &obj));
    sp<MediaCodecBuffer> buffer = static_cast<MediaCodecBuffer *>(obj.get());

    sp<AMessage> notifyConsumed;
    CHECK(msg->findMessage("notifyConsumed", &notifyConsumed));

    QueueEntry entry;
    entry.mBuffer = buffer;
    entry.mNotifyConsumed = notifyConsumed;
    entry.mOffset = 0;
    entry.mFinalResult = OK;
    entry.mBufferOrdinal = ++mTotalBuffersQueued;

    if (audio) {
        Mutex::Autolock autoLock(mLock);
        mAudioQueue.push_back(entry);
        postDrainAudioQueue_l();
    } else {
        mVideoQueue.push_back(entry);
        postDrainVideoQueue();
    }

    Mutex::Autolock autoLock(mLock);
    if (!mSyncQueues || mAudioQueue.empty() || mVideoQueue.empty()) {
        return;
    }

    sp<MediaCodecBuffer> firstAudioBuffer = (*mAudioQueue.begin()).mBuffer;
    sp<MediaCodecBuffer> firstVideoBuffer = (*mVideoQueue.begin()).mBuffer;

    if (firstAudioBuffer == NULL || firstVideoBuffer == NULL) {
        // EOS signalled on either queue.
        syncQueuesDone_l();
        return;
    }

    int64_t firstAudioTimeUs;
    int64_t firstVideoTimeUs;
    CHECK(firstAudioBuffer->meta()
            ->findInt64("timeUs", &firstAudioTimeUs));
    CHECK(firstVideoBuffer->meta()
            ->findInt64("timeUs", &firstVideoTimeUs));

    int64_t diff = firstVideoTimeUs - firstAudioTimeUs;

    ALOGV("queueDiff = %.2f secs", diff / 1E6);

    if (diff > 100000ll) {
        // Audio data starts More than 0.1 secs before video.
        // Drop some audio.

        (*mAudioQueue.begin()).mNotifyConsumed->post();
        mAudioQueue.erase(mAudioQueue.begin());
        return;
    }

    syncQueuesDone_l();
}
```

- The decoded buffers are pushed onto the audio queue or the video queue, respectively:

```cpp
if (audio) {
    Mutex::Autolock autoLock(mLock);
    mAudioQueue.push_back(entry);
    postDrainAudioQueue_l();
} else {
    mVideoQueue.push_back(entry);
    postDrainVideoQueue();
}
```

- If the audio starts more than 0.1 s (100000 us) before the video, i.e. the first audio timestamp is that much smaller than the first video timestamp, the leading audio buffer is dropped. The corresponding code:

```cpp
int64_t diff = firstVideoTimeUs - firstAudioTimeUs;

ALOGV("queueDiff = %.2f secs", diff / 1E6);

if (diff > 100000ll) {
    // Audio data starts More than 0.1 secs before video.
    // Drop some audio.

    (*mAudioQueue.begin()).mNotifyConsumed->post();
    mAudioQueue.erase(mAudioQueue.begin());
    return;
}
```
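The initial queue sync can be simulated with plain timestamp queues. The hypothetical `syncStep` below drops at most one leading audio buffer per call, as onQueueBuffer does, and leaves out the EOS case and the notifyConsumed callback.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>

// Queues hold presentation timestamps in microseconds.
// Returns true once the queues are considered in sync (syncQueuesDone_l).
bool syncStep(std::deque<int64_t> *audioUs, const std::deque<int64_t> &videoUs) {
    if (audioUs->empty() || videoUs.empty()) return false;  // wait for both streams
    int64_t diff = videoUs.front() - audioUs->front();
    if (diff > 100000) {       // audio starts more than 0.1 s before video
        audioUs->pop_front();  // the renderer also posts mNotifyConsumed here
        return false;
    }
    return true;
}
```

In the renderer each call happens when a new buffer is queued, so the dropping spreads over several queueBuffer calls instead of running in a loop.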

To play audio, the renderer first opens the audio sink through NuPlayer::Renderer::openAudioSink:

```cpp
status_t NuPlayer::Renderer::openAudioSink(
        const sp<AMessage> &format,
        bool offloadOnly,
        bool hasVideo,
        uint32_t flags,
        bool *isOffloaded,
        bool isStreaming) {
    sp<AMessage> msg = new AMessage(kWhatOpenAudioSink, this);
    msg->setMessage("format", format);
    msg->setInt32("offload-only", offloadOnly);
    msg->setInt32("has-video", hasVideo);
    msg->setInt32("flags", flags);
    msg->setInt32("isStreaming", isStreaming);

    sp<AMessage> response;
    status_t postStatus = msg->postAndAwaitResponse(&response);

    int32_t err;
    if (postStatus != OK || response.get() == nullptr || !response->findInt32("err", &err)) {
        err = INVALID_OPERATION;
    } else if (err == OK && isOffloaded != NULL) {
        int32_t offload;
        CHECK(response->findInt32("offload", &offload));
        *isOffloaded = (offload != 0);
    }
    return err;
}
```

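openAudioSink posts a kWhatOpenAudioSink message and waits for the reply with postAndAwaitResponse; the message itself is handled by NuPlayer::Renderer::onOpenAudioSink on the renderer's looper.

Audio processing: postDrainAudioQueue_l();

It posts a kWhatDrainAudioQueue message to process the queued audio data: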

```cpp
case kWhatDrainAudioQueue:
{
    mDrainAudioQueuePending = false;

    int32_t generation;
    CHECK(msg->findInt32("drainGeneration", &generation));
    if (generation != getDrainGeneration(true /* audio */)) {
        break;
    }

    if (onDrainAudioQueue()) {
        uint32_t numFramesPlayed;
        CHECK_EQ(mAudioSink->getPosition(&numFramesPlayed),
                 (status_t)OK);

        // Handle AudioTrack race when start is immediately called after flush.
        uint32_t numFramesPendingPlayout =
            (mNumFramesWritten > numFramesPlayed ?
                mNumFramesWritten - numFramesPlayed : 0);

        // This is how long the audio sink will have data to
        // play back.
        int64_t delayUs =
            mAudioSink->msecsPerFrame()
                * numFramesPendingPlayout * 1000ll;
        if (mPlaybackRate > 1.0f) {
            delayUs /= mPlaybackRate;
        }

        // Let's give it more data after about half that time
        // has elapsed.
        delayUs /= 2;
        // check the buffer size to estimate maximum delay permitted.
        const int64_t maxDrainDelayUs = std::max(
                mAudioSink->getBufferDurationInUs(), (int64_t)500000 /* half second */);
        ALOGD_IF(delayUs > maxDrainDelayUs, "postDrainAudioQueue long delay: %lld > %lld",
                (long long)delayUs, (long long)maxDrainDelayUs);
        Mutex::Autolock autoLock(mLock);
        postDrainAudioQueue_l(delayUs);
    }
    break;
}
```

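The handler calls postDrainAudioQueue_l again. delayUs starts as the time the audio sink can keep playing from the frames it already holds (divided by the playback rate when that rate is above 1.0), and is then halved (delayUs /= 2), so the next drain runs after roughly half of that time, well before the sink runs dry.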
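The delay computation is easy to check numerically. `nextDrainDelayUs` below is a hypothetical restatement of the arithmetic in the kWhatDrainAudioQueue handler, assuming `msecsPerFrame` equals 1000 / sample rate; it omits the maxDrainDelayUs comparison, which only logs.

```cpp
#include <cassert>
#include <cstdint>

int64_t nextDrainDelayUs(double msecsPerFrame, uint32_t framesWritten,
                         uint32_t framesPlayed, float playbackRate) {
    // Frames still waiting in the sink; 0 if the reported position is ahead.
    uint32_t pending = framesWritten > framesPlayed ? framesWritten - framesPlayed : 0;
    // How long the sink can keep playing with what it already has.
    int64_t delayUs = static_cast<int64_t>(msecsPerFrame * pending * 1000.0);
    if (playbackRate > 1.0f) {
        delayUs = static_cast<int64_t>(delayUs / playbackRate);  // faster playback drains sooner
    }
    return delayUs / 2;  // refill after about half of that time
}
```

For example, with 48 kHz audio and 4800 frames still queued in the sink (100 ms), the next drain is scheduled about 50 ms later; at 2x speed, about 25 ms later.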

Video processing: postDrainVideoQueue();

It posts a kWhatDrainVideoQueue message to process the data in the video queue:

```cpp
case kWhatDrainVideoQueue:
{
    int32_t generation;
    CHECK(msg->findInt32("drainGeneration", &generation));
    if (generation != getDrainGeneration(false /* audio */)) {
        break;
    }

    mDrainVideoQueuePending = false;

    onDrainVideoQueue();

    postDrainVideoQueue();
    break;
}
```

The actual work is done in onDrainVideoQueue:

```cpp
void NuPlayer::Renderer::onDrainVideoQueue() {
    if (mVideoQueue.empty()) {
        return;
    }

    QueueEntry *entry = &*mVideoQueue.begin();

    if (entry->mBuffer == NULL) {
        // EOS

        notifyEOS(false /* audio */, entry->mFinalResult);

        mVideoQueue.erase(mVideoQueue.begin());
        entry = NULL;

        setVideoLateByUs(0);
        return;
    }

    int64_t nowUs = ALooper::GetNowUs();
    int64_t realTimeUs;
    int64_t mediaTimeUs = -1;
    if (mFlags & FLAG_REAL_TIME) {
        CHECK(entry->mBuffer->meta()->findInt64("timeUs", &realTimeUs));
    } else {
        CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

        realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);
    }
    realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000;

    bool tooLate = false;

    if (!mPaused) {
        setVideoLateByUs(nowUs - realTimeUs);
        tooLate = (mVideoLateByUs > 40000);

        if (tooLate) {
            ALOGV("video late by %lld us (%.2f secs)",
                 (long long)mVideoLateByUs, mVideoLateByUs / 1E6);
        } else {
            int64_t mediaUs = 0;
            mMediaClock->getMediaTime(realTimeUs, &mediaUs);
            ALOGV("rendering video at media time %.2f secs",
                    (mFlags & FLAG_REAL_TIME ? realTimeUs :
                    mediaUs) / 1E6);

            if (!(mFlags & FLAG_REAL_TIME)
                    && mLastAudioMediaTimeUs != -1
                    && mediaTimeUs > mLastAudioMediaTimeUs) {
                // If audio ends before video, video continues to drive media clock.
                // Also smooth out videos >= 10fps.
                mMediaClock->updateMaxTimeMedia(mediaTimeUs + 100000);
            }
        }
    } else {
        setVideoLateByUs(0);
        if (!mVideoSampleReceived && !mHasAudio) {
            // This will ensure that the first frame after a flush won't be used as anchor
            // when renderer is in paused state, because resume can happen any time after seek.
            clearAnchorTime();
        }
    }

    // Always render the first video frame while keeping stats on A/V sync.
    if (!mVideoSampleReceived) {
        realTimeUs = nowUs;
        tooLate = false;
    }

    entry->mNotifyConsumed->setInt64("timestampNs", realTimeUs * 1000ll);
    entry->mNotifyConsumed->setInt32("render", !tooLate);
    entry->mNotifyConsumed->post();
    mVideoQueue.erase(mVideoQueue.begin());
    entry = NULL;

    mVideoSampleReceived = true;

    if (!mPaused) {
        if (!mVideoRenderingStarted) {
            mVideoRenderingStarted = true;
            notifyVideoRenderingStart();
        }
        Mutex::Autolock autoLock(mLock);
        notifyIfMediaRenderingStarted_l();
    }
}
```

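This is where audio/video synchronization happens. Each video frame's media timestamp is converted into a target real time through getRealTimeUs and the MediaClock (whose anchor is normally updated from the audio playback position, so audio acts as the master clock when present), then adjusted by VideoFrameScheduler. If the frame is more than 40 ms late (mVideoLateByUs > 40000), it is handed back with render set to 0, i.e. dropped instead of displayed; the first video frame is always rendered. Audio is dropped only during the initial queue sync in onQueueBuffer shown earlier, and video drives the media clock only when audio ends before video.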
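The core decision in onDrainVideoQueue can be modeled with a linear media clock. This is a simplified sketch under stated assumptions: `ToyMediaClock`, `RenderDecision` and `decideVideoFrame` are hypothetical (a single anchor pair plus a playback rate), and the sketch ignores VideoFrameScheduler's vsync adjustment, real-time sources and the paused state.

```cpp
#include <cassert>
#include <cstdint>

// An anchor pairs a media timestamp with the real (system) time it maps to.
struct ToyMediaClock {
    int64_t anchorMediaUs;
    int64_t anchorRealUs;
    float rate;  // playback speed

    // Real time at which a frame with this media time should be shown.
    int64_t realTimeFor(int64_t mediaTimeUs) const {
        return anchorRealUs + static_cast<int64_t>((mediaTimeUs - anchorMediaUs) / rate);
    }
};

struct RenderDecision {
    int64_t renderAtUs;
    bool render;  // false: the buffer is released without being displayed
};

// A frame more than 40 ms late is dropped; the very first frame is always shown.
RenderDecision decideVideoFrame(const ToyMediaClock &clock, int64_t mediaTimeUs,
                                int64_t nowUs, bool firstFrame) {
    if (firstFrame) {
        return {nowUs, true};
    }
    int64_t realTimeUs = clock.realTimeFor(mediaTimeUs);
    bool tooLate = (nowUs - realTimeUs) > 40000;
    return {realTimeUs, !tooLate};
}
```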

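Audio playback relies on the AudioSink.

Video display relies on the decoder: decoded output buffers are rendered to the Surface, and SurfaceFlinger composites the Surfaces to display the picture.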