最近想学做数据分析,可是干啃书实在太无聊,看着看着就走神了。所以干脆找点有意思的事情做。碰巧看到知乎上有人教怎么做词频统计还有图片https://www.zhihu.com/question/28975391/answer/100796070

又突然想起之前看到有人爬咪蒙的文章做统计,我想了想干脆我也做咪蒙的词频统计吧。

首先,我得先找到咪蒙的所有文章。由于周末在家,我用的是六年前的老笔记本,实在太卡。我也不打算爬她的公众号了。

这个我百度一下去找现成的她的文章。

http://www.360doc.com/content/16/1026/12/34624569_601474728.shtml

这次我用scrapy框架来爬取,上代码(不懂scrapy的童鞋请自行看官方文档学习基础知识)

spider

import scrapy

from bs4 import BeautifulSoup

from ali.items import AliItem

class ali(scrapy.Spider):

name = 'aliyun'

n=0

def start_requests(self):

start_url=['http://www.360doc.com/content/16/1026/12/34624569_601474728.shtml']

for url in start_url:

yield scrapy.Request(url=url,callback=self.parse_getlink)

def parse_getlink(self,response):

n=0

#print response.url

soup = BeautifulSoup(response.body,'lxml')

for i in soup.find_all('td',id='artContent'):#定位元素,拿到每篇文章的链接

for j in i.find_all('a'):

url= j.get('href')

yield scrapy.Request(url=url, callback=self.parse)

n=n+1

print n

def parse(self,response):

item = AliItem()

soup = BeautifulSoup(response.body,'lxml')

for i in soup.find_all('div',id='js_content'):#定位元素,拿到每篇文章的内容

item['content'] = i.get_text()

yield item#把内容传到pipeline上去处理

爬虫的逻辑写完了,然后我们到pipeline去处理爬回来的内容

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

class AliPipeline(object):

def process_item(self, item, spider):#把爬回来的文章内容写到mimeng.txt上去

f=open('mimeng.txt','a')

content = item['content'].encode('utf-8')

f.writelines(content)

f.close()

return item

然后我们就要对文章内容进行分词了!这里用到jieba这个库,没错就是结巴。

写个新的py

import jieba

f = open('mimeng.txt', 'r',encoding='utf-8').read()

w = open('mimengcount.txt','w')

words = list(jieba.cut(f))#用jieba分词

for word in words:

if len(word) > 1:

word = word + '\n'

#a.append(word)

w.writelines(word)

w.close()

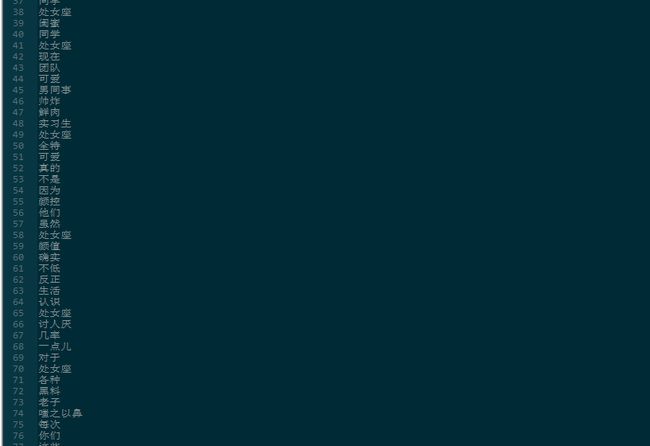

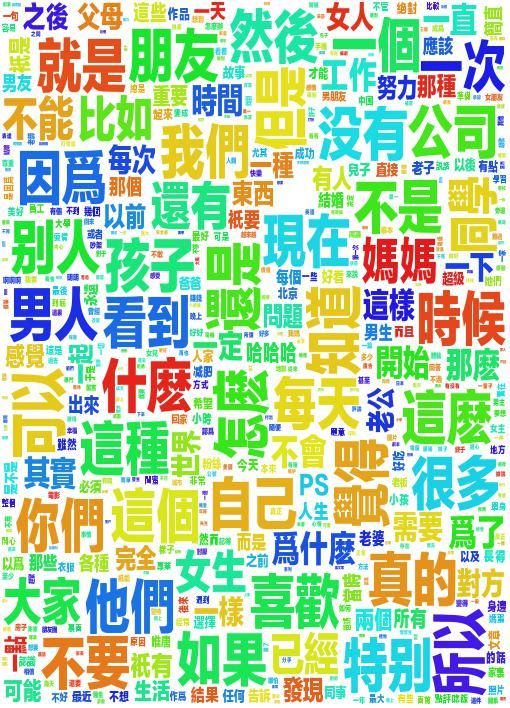

接下来就要用四个库来进行词频统计和作画

numpy,wordcloud,PLS以及matplotlib

下面这段代码我是复制别人的,其实我也不懂那几个库哈哈

import jieba

from wordcloud import WordCloud

import PIL

import matplotlib.pyplot as plt

import numpy as np

def wordcloudplot():

text = open('mimengcount.txt').read()

path='ziti.ttf'#字体文件,没有这个中文会乱码,自己去百度下载

#path=unicode(path,'utf8').encode('gb18030')

alice_mask = np.array(PIL.Image.open('3e2f.jpg'))

wordcloud = WordCloud(font_path=path,background_color="white", margin=5, width=1800, height=800, mask=alice_mask, max_words=2000,

max_font_size=60, random_state=42)

worcloud = wordcloud.generate(text)

wordcloud.to_file('pic3.jpg')

plt.imshow(wordcloud)

plt.axis('off')

plt.show()

wordcloudplot()

下面,我们来见证奇迹

看到这结果。。。。我突然有种浪费了几小时什么的感觉。。。本来今天下了王者荣耀想要去玩的。。。我特么的犯贱跑去做这个。。。真无聊