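Audio/Video Codecs: Video Codec Basics 1 - FFmpeg Structure and API Summary

Audio/Video Codecs: Video Codec Basics 2 - FFmpeg Decoding in Practice

Audio/Video Codecs: Video Codec Basics 3 - FFmpeg Transcoding in Practice

Audio/Video Codecs: Video Codec Basics 4 - FFmpeg Decoding and Playback

Audio/Video Codecs: Video Codec Basics 5 - Adding Watermarks with FFmpeg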

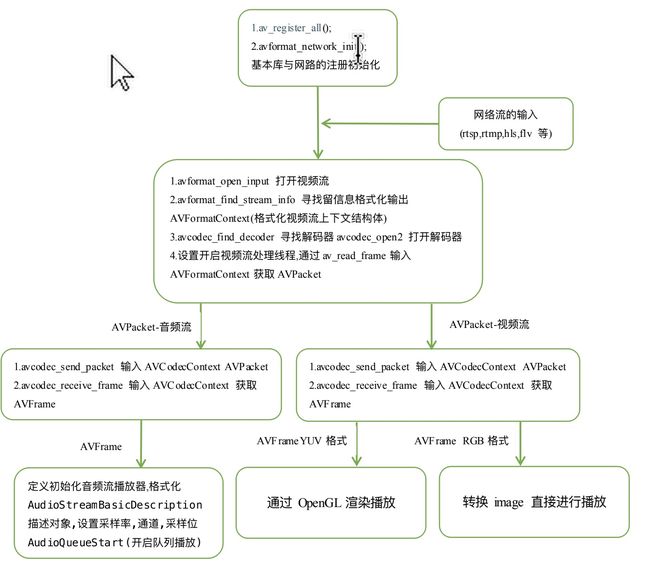

I. FFmpeg Decoding and Playback

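1. This continues from the transcoding code of the previous article, Basics 3.

Once the audio and video streams have been opened successfully, start the threads that read them (pay attention to the several details of locking and unlocking for thread safety).

Key classes and basic data, defined up front: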

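typedef NS_ENUM(NSInteger, DJFrameType) { enumFrameTypeAudio, enumFrameTypeVideo }; //the enum name DJFrameType is illustrative

@interface DJMediaFrame : NSObject

@property (nonatomic) DJFrameType type; //audio or video; read as frame.type in popFramesThreadProc

@property (nonatomic) CGFloat position;

@property (nonatomic) CGFloat duration;

@end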

@interface DJAudioFrame : DJMediaFrame

@property (nonatomic)NSData *samples;

@end

@interface DJVideoFrame : DJMediaFrame

@property (nonatomic) NSInteger width;

@property (nonatomic) NSInteger height;

@end

@interface DJVideoFrameYUV : DJVideoFrame

@property (nonatomic) NSInteger dataLenth;

@property (nonatomic) UInt8 *buffer;

@end

Instance variables of the decoder class:

/**

* Condition lock (a mutex plus a condition variable) that throttles the read thread

*/

NSCondition* _condition;

/**

* Reusable frames that receive the decoded video and audio data

*/

AVFrame *_videoFrame;

AVFrame *_audioFrame;

/**

* Input (demuxer) format context

*/

AVFormatContext *_inFormatCtx;

/**

* Indices of the video and audio streams

*/

NSInteger _videoStream;

NSInteger _audioStream;

/**

* Duration of non-live streams, used to control the playback position and progress

*/

CGFloat _duration;

/**

* Thread exit flags: passive exit on error, active exit when the stream is closed

*/

BOOL _isErrorOccur;

BOOL volatile _closeStream;

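BOOL _decoderRunning;

/**

* Also used by the methods below (types inferred from how they are used):

* the sorted buffer of decoded frames, recycled video frames, the codec contexts and the read timestamp

*/

NSMutableArray *_decodeFrames;

NSMutableArray *_videoFramesRecycle;

AVCodecContext *_videoCodecCtx;

AVCodecContext *_audioCodecCtx;

int64_t _readFrameStartTime;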

Starting the threads that read and consume the stream:

-(BOOL)startDecodeThread{

NSOperationQueue *queue = [[NSOperationQueue alloc] init];

//Both operations loop until the stream closes, so the queue must run them concurrently

queue.maxConcurrentOperationCount = 2;

NSInvocationOperation *readOperation = [[NSInvocationOperation alloc] initWithTarget:self selector:@selector(readFramesThreadProc) object:nil];

NSInvocationOperation *popOperation = [[NSInvocationOperation alloc] initWithTarget:self selector:@selector(popFramesThreadProc) object:nil];

[queue addOperation:readOperation];

[queue addOperation:popOperation];

return YES;

}

/**

* Read packets from the stream and decode them as they are read

*/

-(void)readFramesThreadProc{

@autoreleasepool {

_decoderRunning = true;

_isErrorOccur = false;

AVPacket packet;

av_init_packet(&packet);

NSLog(@"Read thread started");

while (!(_closeStream || _isErrorOccur)) {

_readFrameStartTime = av_gettime();

[_condition lock];

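//Buffer at most about 0x30 (48) frames; tune the limit case by case, e.g. to the video frame rate. Stop waiting once the stream is closed

while (_decodeFrames.count > 0x30 && !_closeStream)

[_condition wait];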

if (_closeStream) {

[_condition unlock];

break;

}

//Read one packet

int result = av_read_frame(_inFormatCtx, &packet);

if (result < 0) {

_isErrorOccur = true;

[_condition unlock];

NSLog(@"Failed to read data, error -> %s", av_err2str(result)); //AVERROR_EOF at the end of a file also ends up here

break;

}

if (packet.stream_index ==_videoStream) {

[self decodeVideo:&packet];//handle a video packet

} else if (packet.stream_index == _audioStream) {

[self decodeAudio:&packet];//handle an audio packet

}

[_condition unlock];

av_packet_unref(&packet);

}

_decoderRunning = false;

if (_isErrorOccur){

//On error, handle it as needed and notify the outer controller

}

NSLog(@"Read thread finished, normal exit? (1: normal exit, 0: ended by a network error) %d", _closeStream);

}

}

/**

* Take decoded frames out of the buffer and hand them to playback

*/

-(void)popFramesThreadProc{

int64_t popCounts = 0;

int64_t prePopCounts = 0;

CGFloat playPosition = 0;

int spaceCount = 15;

@autoreleasepool {

NSLog(@"Frame-feeding thread started");

while (!(_closeStream || _isErrorOccur)) {

@synchronized(_decodeFrames) {

if (_decodeFrames.count) {

DJMediaFrame* frame = [_decodeFrames firstObject];

if (enumFrameTypeAudio == frame.type) {

[self popAudioFrame:(DJAudioFrame*)frame];

[_decodeFrames removeObject:frame];

} else if (enumFrameTypeVideo == frame.type) {

popCounts ++;

playPosition = frame.position;

//Audio/video synchronization is not covered here

// [self checkAVPosition:playPosition];

[self popVideoFrame:(DJVideoFrame *)frame];

@synchronized (_videoFramesRecycle) {

[_videoFramesRecycle addObject:frame];

}

[_decodeFrames removeObject:frame];

}

}

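//Wake the read thread once fewer than 0x30 (48) frames are buffered; this check runs on every pass, so a missed signal is simply sent again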

if (_decodeFrames.count < 0x30){

[_condition signal];

}

}

}

NSLog(@"Frame-feeding thread finished");

}

}
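The read thread and the pop thread above form a bounded producer/consumer buffer. The following is a minimal sketch of the same idea in plain C with POSIX threads (C is also valid Objective-C, and Apple describes NSCondition as providing the same semantics as POSIX conditions). FrameQueue and the fq_* functions are hypothetical names, int ids stand in for decoded frames, and frames are not sorted by position here. Two choices differ from the code above on purpose: the consumer blocks on its own condition instead of polling, and closing broadcasts to both conditions so neither thread stays stuck in a wait.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Capacity mirrors the article's 0x30 (48) threshold; all names are hypothetical. */
#define FQ_CAPACITY 0x30

typedef struct {
    int items[FQ_CAPACITY];    /* ring buffer; ints stand in for decoded frames */
    size_t head;               /* index of the oldest buffered item */
    size_t count;              /* number of buffered items */
    int closed;                /* set by fq_close(), like _closeStream */
    pthread_mutex_t lock;
    pthread_cond_t not_full;   /* the read (producer) thread waits here */
    pthread_cond_t not_empty;  /* the pop (consumer) thread waits here instead of polling */
} FrameQueue;

void fq_init(FrameQueue *q) {
    assert(q != NULL);
    q->head = 0;
    q->count = 0;
    q->closed = 0;
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->not_full, NULL);
    pthread_cond_init(&q->not_empty, NULL);
}

/* Producer: blocks while the buffer is full; returns -1 once the queue is closed. */
int fq_push(FrameQueue *q, int item) {
    pthread_mutex_lock(&q->lock);
    while (q->count == FQ_CAPACITY && !q->closed)  /* re-check after every wakeup */
        pthread_cond_wait(&q->not_full, &q->lock);
    if (q->closed) {
        pthread_mutex_unlock(&q->lock);
        return -1;
    }
    q->items[(q->head + q->count) % FQ_CAPACITY] = item;
    q->count++;
    pthread_cond_signal(&q->not_empty);            /* signal while holding the lock */
    pthread_mutex_unlock(&q->lock);
    return 0;
}

/* Consumer: blocks while empty; after close it drains what is left, then returns -1. */
int fq_pop(FrameQueue *q, int *item) {
    pthread_mutex_lock(&q->lock);
    while (q->count == 0 && !q->closed)
        pthread_cond_wait(&q->not_empty, &q->lock);
    if (q->count == 0) {
        pthread_mutex_unlock(&q->lock);
        return -1;
    }
    *item = q->items[q->head];
    q->head = (q->head + 1) % FQ_CAPACITY;
    q->count--;
    pthread_cond_signal(&q->not_full);
    pthread_mutex_unlock(&q->lock);
    return 0;
}

/* Like setting _closeStream, but also wakes every waiter so both threads can exit. */
void fq_close(FrameQueue *q) {
    pthread_mutex_lock(&q->lock);
    q->closed = 1;
    pthread_cond_broadcast(&q->not_full);
    pthread_cond_broadcast(&q->not_empty);
    pthread_mutex_unlock(&q->lock);
}
```

In a decoder built this way, the read thread would call fq_push after each decoded frame and the pop thread would loop on fq_pop; the close path calls fq_close, after which fq_pop keeps returning the remaining frames until the buffer is empty.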

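Decoding audio and video: avcodec_send_packet / avcodec_receive_frame

FFmpeg 3.x (starting with 3.1) reworked the decoding API considerably. The old video decoding function avcodec_decode_video2 and audio decoding function avcodec_decode_audio4 were deprecated and merged into one interface shared by audio and video. Decoding is now split into two steps, and the names say what each does: avcodec_send_packet sends an encoded packet to the decoder, and avcodec_receive_frame receives decoded data from it.

avcodec_send_packet and avcodec_receive_frame are used in pairs, but not one-to-one: a single packet can yield zero, one, or several frames (an audio packet may contain several frames, and a video decoder may hold frames back before outputting them). After each send, call avcodec_receive_frame in a loop until it returns AVERROR(EAGAIN) (it needs more input) or AVERROR_EOF (it has been fully drained), as the two methods below do.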
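To see why the two calls are paired but not one-to-one, and why the receive call runs in a loop, here is a self-contained C toy (C is valid Objective-C). ToyDecoder, toy_send_packet, toy_receive_frame, decode_packet and the TOY_* codes are hypothetical stand-ins, not FFmpeg API: the toy holds each packet's frames back until the next packet arrives, imitating a decoder with output delay, and a NULL packet starts draining, the way passing a NULL packet to avcodec_send_packet does at the end of a stream.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for AVERROR(EAGAIN) and AVERROR_EOF; the values are arbitrary. */
#define TOY_EAGAIN (-11)
#define TOY_EOF    (-1)

typedef struct {
    int frames_in_packet;   /* how many frames this packet decodes into */
} ToyPacket;

typedef struct {
    int held;       /* frames of the last packet, held back until the next one (output delay) */
    int pending;    /* frames ready to be received */
    int draining;   /* set once the NULL "flush" packet has been sent */
} ToyDecoder;

/* Accept one packet; refuses input while output is still pending, as the real API may. */
int toy_send_packet(ToyDecoder *d, const ToyPacket *pkt) {
    assert(d != NULL);
    if (d->pending > 0)
        return TOY_EAGAIN;
    if (d->draining)
        return TOY_EOF;
    d->pending = d->held;                       /* the previous packet's frames come out now */
    d->held = pkt ? pkt->frames_in_packet : 0;
    if (pkt == NULL)
        d->draining = 1;                        /* NULL packet = flush */
    return 0;
}

/* Return one frame if available, otherwise TOY_EAGAIN (need input) or TOY_EOF (drained). */
int toy_receive_frame(ToyDecoder *d) {
    if (d->pending == 0)
        return d->draining ? TOY_EOF : TOY_EAGAIN;
    d->pending--;
    return 0;
}

/* Same shape as decodeVideo:/decodeAudio:: one send, then receive until it fails.
   Returns the number of frames produced, or -1 if the send was rejected. */
int decode_packet(ToyDecoder *d, const ToyPacket *pkt) {
    int produced = 0;
    if (toy_send_packet(d, pkt) < 0)
        return -1;
    while (toy_receive_frame(d) >= 0)
        produced++;
    return produced;
}
```

With packets that decode to 1, 3 and 1 frames, the three calls yield 0, 1 and 3 frames, and the flush yields the final 1: the same total, shifted by one packet. Frames still inside a real decoder are lost in the same way if the read loop stops at AVERROR_EOF without sending a NULL packet and draining.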

-(void)decodeVideo:(AVPacket*)packet{

@autoreleasepool {

int result = avcodec_send_packet(_videoCodecCtx, packet);

if (result < 0) {

NSLog(@"Failed to send video packet, error -> %s", av_err2str(result));

return;

}

while (avcodec_receive_frame(_videoCodecCtx, _videoFrame) >= 0) {

//Optional: video recording

/* if (self.recorderbool) {

_isErrorOccur = ![self.assetWriter writeVideoFrame:_videoFrame];

if (_isErrorOccur) {

}else{

}

}*/

DJVideoFrame *frame = [self handleVideoFrame];

if (frame) {

[self addDecodeDJMediaFrame:frame];

}

}

}

}

-(void)decodeAudio:(AVPacket*)packet{

@autoreleasepool {

int result = avcodec_send_packet(_audioCodecCtx, packet);

if (result < 0){

NSLog(@"Failed to send audio packet, error -> %s", av_err2str(result));

return;

}

while (avcodec_receive_frame(_audioCodecCtx, _audioFrame) >= 0) {

DJMediaFrame * frame = [self handleAudioFrame];

if (frame){

[self addDecodeDJMediaFrame:frame];

}

}

}

}

-(void)addDecodeDJMediaFrame:(DJMediaFrame*)frame{

@synchronized (_decodeFrames) {

NSInteger index = (NSInteger)_decodeFrames.count - 1; //count is unsigned: cast before subtracting so an empty buffer gives -1

for (; index >= 0; index --) {

DJMediaFrame* tempFrame = [_decodeFrames objectAtIndex:index];

if (tempFrame.position < frame.position) break;

}

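//Insert the frame (audio or video) after the last frame with an earlier position, keeping the buffer sorted by position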

[_decodeFrames insertObject:frame atIndex:index + 1];

}

}
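addDecodeDJMediaFrame: keeps the buffer sorted by position with a backward scan, which is a single step of insertion sort; scanning from the tail is cheap because decoded frames usually arrive almost in order. The same logic as a C sketch, with hypothetical names (FrameRef, insert_by_position) and a fixed-size array standing in for the NSMutableArray:

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical frame record: only the fields needed for ordering. */
typedef struct {
    double position;   /* presentation time in seconds, like DJMediaFrame.position */
    int id;            /* arrival order, to make tie-breaking visible */
} FrameRef;

/* Insert f into buf[0..*count), which is kept sorted by position.
   Like addDecodeDJMediaFrame:, it walks back from the tail past every frame whose
   position is not smaller than f's, then inserts f right after the first smaller one.
   Returns 0 on success, -1 if the buffer is full. */
int insert_by_position(FrameRef *buf, size_t *count, size_t capacity, FrameRef f) {
    assert(buf != NULL && count != NULL);
    if (*count >= capacity)
        return -1;
    size_t i = *count;                              /* slot that will receive f */
    while (i > 0 && !(buf[i - 1].position < f.position)) {
        buf[i] = buf[i - 1];                        /* shift later-or-equal frames right */
        i--;
    }
    buf[i] = f;
    (*count)++;
    return 0;
}
```

Because the comparison is strict (`<`), a new frame with the same position as a buffered one is placed in front of it; comparing with `<=` instead would keep equal-position frames in arrival order.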

//Without @autoreleasepool, the memory of autoreleased objects created here is not released promptly

-(void)popAudioFrame:(DJAudioFrame*)frame{

@autoreleasepool {

//Option 1: play through an audio unit (AudioUnit)

//Option 2: play through an audio queue (AudioQueueRef)

}

}

-(void)popVideoFrame:(DJVideoFrame*)frame{

@autoreleasepool {

//Option 1: convert YUV to RGB, build a UIImage and display it directly

//Option 2: render the YUV data with OpenGL ES

}

}
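Option 1 needs a YUV-to-RGB conversion. A C sketch, assuming 8-bit AV_PIX_FMT_YUV420P input and BT.601 limited-range coefficients (common for H.264 streams, but the stream's actual colorspace should be checked); the function names are hypothetical, and production code would normally call libswscale's sws_scale instead:

```c
#include <assert.h>
#include <stdint.h>

/* Clamp a value that is still scaled by 256 into 0..255 (never shifts a negative number). */
static uint8_t clamp_shift8(int v) {
    if (v < 0)
        return 0;
    v >>= 8;
    return v > 255 ? 255 : (uint8_t)v;
}

/* BT.601 limited-range YUV -> RGB with the common 8-bit fixed-point coefficients. */
void yuv_pixel_to_rgb(uint8_t y, uint8_t u, uint8_t v, uint8_t *r, uint8_t *g, uint8_t *b) {
    int c = (int)y - 16, d = (int)u - 128, e = (int)v - 128;
    *r = clamp_shift8(298 * c + 409 * e + 128);
    *g = clamp_shift8(298 * c - 100 * d - 208 * e + 128);
    *b = clamp_shift8(298 * c + 516 * d + 128);
}

/* Planar YUV420P (one U and one V sample per 2x2 block of pixels) -> packed RGB24.
   The plane pointers and strides correspond to AVFrame's data[0..2] and linesize[0..2]. */
void yuv420p_to_rgb24(const uint8_t *yp, int y_stride,
                      const uint8_t *up, int u_stride,
                      const uint8_t *vp, int v_stride,
                      int width, int height,
                      uint8_t *rgb, int rgb_stride) {
    assert(yp && up && vp && rgb);
    for (int row = 0; row < height; row++) {
        for (int col = 0; col < width; col++) {
            uint8_t y = yp[row * y_stride + col];
            uint8_t u = up[(row / 2) * u_stride + col / 2];
            uint8_t v = vp[(row / 2) * v_stride + col / 2];
            uint8_t *px = rgb + row * rgb_stride + 3 * col;
            yuv_pixel_to_rgb(y, u, v, &px[0], &px[1], &px[2]);
        }
    }
}
```

Y = 16 maps to black and Y = 235 to white when U = V = 128; the resulting packed RGB buffer is what option 1 turns into an image.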