Reference: Face Detection in iOS Using Core Image

The face detection API can do more than locate faces: it can also pick out facial details such as a smile or even a blink.

Setting up the starter project

The original article ships a starter project; I built my own from scratch:

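- Create a new project named Detector.

- Delete the default View Controller Scene in IB.

- Drag a UITabBarController into IB, which gives you three scenes. Check Is Initial View Controller on the UITabBarController so that it becomes the initial controller.

- Change the title of Item 1 and its Bar Item to Photo, and set its Class to ViewController.

- Add a few photos of people to Assets.

- Add an Image View to the Photo scene, set its Content Mode to Aspect Fit, and pick an image. Add the matching @IBOutlet to ViewController:

      @IBOutlet var personPic: UIImageView!

- Select Item 2 and choose Editor > Embed In > Navigation Controller from the menu bar. This creates a new scene linked to it.

- Create a CameraViewController class that inherits from UIViewController, and set the Class of the scene generated above to CameraViewController.

- Drag a UIBarButtonItem to the right side of the UINavigationItem in the Camera View Controller scene and set its System Item to Camera.

- Create the outlet and action in CameraViewController.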

Detecting faces in a photo

- Import CoreImage in ViewController.swift:

      import CoreImage

- Add a detect() function to ViewController.swift:

    func detect() {
        // 1
        guard let image = personPic.image,
              let personciImage = CIImage(image: image) else {
            return
        }

        // 2
        let accuracy = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
        let faceDetector = CIDetector(ofType: CIDetectorTypeFace, context: nil, options: accuracy)
        // Cast conditionally so a missing detector or result cannot crash the app.
        guard let faces = faceDetector?.features(in: personciImage) as? [CIFaceFeature] else {
            return
        }

        // 3
        for face in faces {
            print("Found bounds are \(face.bounds)")

            let faceBox = UIView(frame: face.bounds)
            faceBox.layer.borderWidth = 3
            faceBox.layer.borderColor = UIColor.red.cgColor
            faceBox.backgroundColor = UIColor.clear
            personPic.addSubview(faceBox)

            // 4
            if face.hasLeftEyePosition {
                print("Left eye position is \(face.leftEyePosition)")
            }
            if face.hasRightEyePosition {
                print("Right eye position is \(face.rightEyePosition)")
            }
        }
    }

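- 1 Create a Core Image image object from the UIImage. guard works much like if; for the differences, see section 6 ("guard 与 if") of 以撸代码的形式学习Swift-5:Control Flow.

- 2 Initialize the CIDetector. accuracy is the detector's configuration option and sets the precision. CIDetector supports several kinds of detection, so CIDetectorTypeFace selects face detection. The features method returns the detection results.

- 3 Draw a red box around each detected face.

- 4 Check whether the face has a left-eye position (and likewise a right-eye position).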
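To make the guard/if comparison in note 1 concrete, here is a minimal sketch in plain Swift. The function names and the string-to-Int parsing are invented for illustration and are not part of the Detector project.

```swift
// Hypothetical helpers, used only to contrast `if let` with `guard let`.

// With `if let`, the unwrapped value is only visible inside the braces,
// so the main logic ends up nested.
func doubledWithIf(_ text: String) -> Int? {
    if let value = Int(text) {
        return value * 2
    }
    return nil
}

// With `guard let`, the failure case exits early and the unwrapped value
// stays in scope for the rest of the function, as in detect().
func doubledWithGuard(_ text: String) -> Int? {
    guard let value = Int(text) else {
        return nil
    }
    return value * 2
}

print(doubledWithIf("21") as Any)       // Optional(42)
print(doubledWithGuard("oops") as Any)  // nil
```

Both functions return the same results; guard simply keeps the success path unindented, which is why detect() uses it for the image check.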

- Call detect() in viewDidLoad and run the app. The result looks like the image below.

The printed output shows that the detected face position is off:

Found bounds are (177.0, 416.0, 380.0, 380.0)

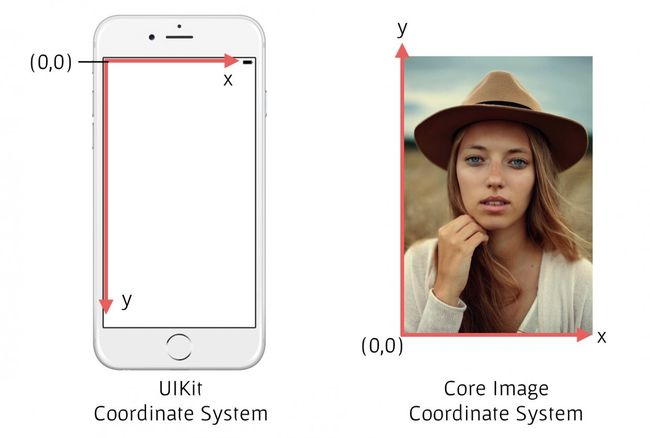

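This happens because UIKit and Core Image use different coordinate systems: Core Image places the origin at the bottom-left corner of the image, while UIKit places it at the top-left.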
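A minimal sketch of that difference for a single rect, in plain Swift using only Foundation's geometry types: flipping the y-axis means measuring the rect's top edge from the top of the image instead of its bottom edge from the bottom. The function name flippedToUIKit and the image height of 1000 are assumptions for illustration; the real height comes from the image itself.

```swift
import Foundation

// Convert a rect from Core Image coordinates (origin at the bottom-left,
// y grows upward) to UIKit coordinates (origin at the top-left, y grows downward).
// `imageHeight` is the height of the image the rect was measured in.
func flippedToUIKit(_ rect: CGRect, imageHeight: CGFloat) -> CGRect {
    // The rect's top edge sits at (origin.y + height) in Core Image space.
    // Measured from the top of the image, that is imageHeight minus this value.
    let flippedY = imageHeight - (rect.origin.y + rect.size.height)
    return CGRect(x: rect.origin.x, y: flippedY,
                  width: rect.size.width, height: rect.size.height)
}

// The bounds printed above; the image height of 1000 is an assumed value.
let ciBounds = CGRect(x: 177, y: 416, width: 380, height: 380)
let uiBounds = flippedToUIKit(ciBounds, imageHeight: 1000)
print(uiBounds.origin.y)  // 204.0
```

Only the y origin changes; x, width and height carry over unchanged.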

- Convert the Core Image coordinates to UIKit coordinates by changing detect() to:

    func detect() {
        guard let image = personPic.image,
              let personciImage = CIImage(image: image) else {
            return
        }

        let accuracy = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
        let faceDetector = CIDetector(ofType: CIDetectorTypeFace, context: nil, options: accuracy)
        // Cast conditionally so a missing detector or result cannot crash the app.
        guard let faces = faceDetector?.features(in: personciImage) as? [CIFaceFeature] else {
            return
        }

        // Flip the y-axis: Core Image's origin is bottom-left, UIKit's is top-left
        let ciImageSize = personciImage.extent.size
        var transform = CGAffineTransform(scaleX: 1, y: -1)
        transform = transform.translatedBy(x: 0, y: -ciImageSize.height)

        for face in faces {
            print("Found bounds are \(face.bounds)")

            // Apply the transform to convert the coordinates
            var faceViewBounds = face.bounds.applying(transform)

            // Calculate the actual position and size of the rectangle in the image view
            let viewSize = personPic.bounds.size
            let scale = min(viewSize.width / ciImageSize.width,
                            viewSize.height / ciImageSize.height)
            let offsetX = (viewSize.width - ciImageSize.width * scale) / 2
            let offsetY = (viewSize.height - ciImageSize.height * scale) / 2

            faceViewBounds = faceViewBounds.applying(CGAffineTransform(scaleX: scale, y: scale))
            faceViewBounds.origin.x += offsetX
            faceViewBounds.origin.y += offsetY

            let faceBox = UIView(frame: faceViewBounds)
            faceBox.layer.borderWidth = 3
            faceBox.layer.borderColor = UIColor.red.cgColor
            faceBox.backgroundColor = UIColor.clear
            personPic.addSubview(faceBox)

            if face.hasLeftEyePosition {
                print("Left eye position is \(face.leftEyePosition)")
            }
            if face.hasRightEyePosition {
                print("Right eye position is \(face.rightEyePosition)")
            }
        }
    }

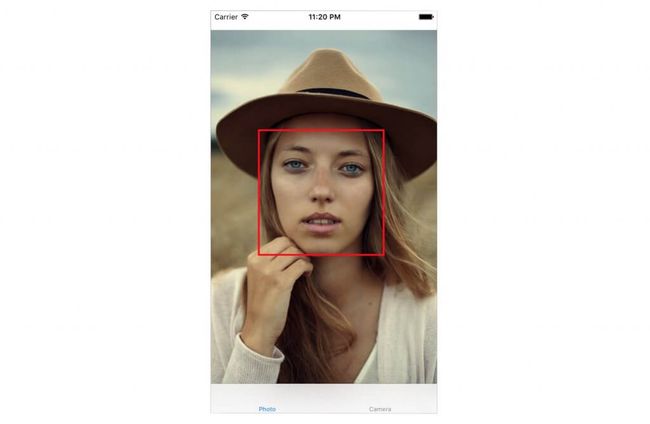

运行可看到正确识别位置:
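The scale and offset arithmetic in detect() exists because the image view uses Aspect Fit: the image is shrunk or grown uniformly until it fits, then centered. A minimal sketch of just that calculation, in plain Swift with Foundation's geometry types; the function name aspectFitPlacement and the sizes are assumptions chosen so the numbers come out exact.

```swift
import Foundation

// Where an image of `imageSize` lands inside a view of `viewSize`
// when the view's content mode is Aspect Fit.
func aspectFitPlacement(imageSize: CGSize, viewSize: CGSize)
    -> (scale: CGFloat, offsetX: CGFloat, offsetY: CGFloat) {
    // The smaller ratio wins so that the whole image stays visible.
    let scale = min(viewSize.width / imageSize.width,
                    viewSize.height / imageSize.height)
    // The leftover space is split evenly on both sides.
    let offsetX = (viewSize.width - imageSize.width * scale) / 2
    let offsetY = (viewSize.height - imageSize.height * scale) / 2
    return (scale, offsetX, offsetY)
}

// Assumed sizes: an 800 x 1600 image shown in a 400 x 600 view.
let placement = aspectFitPlacement(imageSize: CGSize(width: 800, height: 1600),
                                   viewSize: CGSize(width: 400, height: 600))
// scale is 0.375, offsetX is 50, offsetY is 0
print(placement.scale, placement.offsetX, placement.offsetY)
```

Here the height is the limiting dimension, so the image fills the view vertically and leaves 50 points of empty space on each side. A face rect has to be scaled by the same factor and shifted by the same offsets to line up with what is on screen.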

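Face detection after taking a photo with the camera

The previous section ran detection on a photo bundled with the project. This one runs it on a photo just taken with the camera. The principle is the same; the only extra step is taking the photo and retrieving it.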

- Update the code of the CameraViewController class:

    // 1
    class CameraViewController: UIViewController, UIImagePickerControllerDelegate, UINavigationControllerDelegate {

        @IBOutlet var imageView: UIImageView!

        // 2
        let imagePicker = UIImagePickerController()

        override func viewDidLoad() {
            super.viewDidLoad()
            imagePicker.delegate = self
        }

        @IBAction func takePhoto(_ sender: AnyObject) {
            // 3
            if !UIImagePickerController.isSourceTypeAvailable(.camera) {
                return
            }
            imagePicker.allowsEditing = false
            imagePicker.sourceType = .camera
            present(imagePicker, animated: true, completion: nil)
        }

        // 4
        // MARK: - UIImagePickerControllerDelegate
        // Swift 3 / 4.0 signature. Swift 4.2 and later use
        // [UIImagePickerController.InfoKey: Any] and the .originalImage key.
        func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [String : Any]) {
            if let pickedImage = info[UIImagePickerControllerOriginalImage] as? UIImage {
                imageView.contentMode = .scaleAspectFit
                imageView.image = pickedImage
            }
            // Run detection only after the picker is gone, so the alert is not
            // presented while the dismissal is still in progress.
            dismiss(animated: true) {
                self.detect()
            }
        }

        // 5
        func imagePickerControllerDidCancel(_ picker: UIImagePickerController) {
            dismiss(animated: true, completion: nil)
        }
    }

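- 1 Adopt the UIImagePickerControllerDelegate protocol to receive the photo-taking callbacks.

- 2 Create a UIImagePickerController, the class that manages the interface and behavior for taking photos and recording video.

- 3 Check whether the device camera is available.

- 4 Implement the UIImagePickerControllerDelegate method that is called once the photo has been taken and the user confirms using it.

- 5 Another UIImagePickerControllerDelegate method, called when the user cancels.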

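- Add the detect() code. Unlike the version in ViewController, it does not draw a red box around the detected face. Instead it reads the facial details and shows them in a UIAlertController.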

    func detect() {
        guard let cgImage = imageView.image?.cgImage else {
            return
        }
        let personciImage = CIImage(cgImage: cgImage)

        let accuracy = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
        let faceDetector = CIDetector(ofType: CIDetectorTypeFace, context: nil, options: accuracy)

        // Orientation 5 is the hard-coded value from the original tutorial; the right
        // value depends on how the photo was taken. CIDetectorSmile asks the detector
        // to evaluate smiles, which hasSmile relies on.
        let imageOptions: [String: Any] = [CIDetectorImageOrientation: 5,
                                           CIDetectorSmile: true]
        let faces = faceDetector?.features(in: personciImage, options: imageOptions)

        let alert: UIAlertController
        if let face = faces?.first as? CIFaceFeature {
            print("Found bounds are \(face.bounds)")

            var message = "Found a face"
            if face.hasSmile {
                message += ", it is smiling"
            }
            if face.hasMouthPosition {
                message += ", it has a mouth"
            }
            if face.hasLeftEyePosition {
                message += ", the left eye is at \(face.leftEyePosition)"
            }
            if face.hasRightEyePosition {
                message += ", the right eye is at \(face.rightEyePosition)"
            }
            print(message)

            alert = UIAlertController(title: "Hey", message: message, preferredStyle: .alert)
        } else {
            alert = UIAlertController(title: "No face", message: "No face was detected", preferredStyle: .alert)
        }
        alert.addAction(UIAlertAction(title: "OK", style: .default, handler: nil))
        present(alert, animated: true, completion: nil)
    }

Run the app and it reports the facial details found in the photo.

CIFaceFeature exposes many other facial details as well:

    open var hasLeftEyePosition: Bool { get }
    open var leftEyePosition: CGPoint { get }
    open var hasRightEyePosition: Bool { get }
    open var rightEyePosition: CGPoint { get }
    open var hasMouthPosition: Bool { get }
    open var mouthPosition: CGPoint { get }
    open var hasTrackingID: Bool { get }
    open var trackingID: Int32 { get }
    open var hasTrackingFrameCount: Bool { get }
    open var trackingFrameCount: Int32 { get }
    open var hasFaceAngle: Bool { get }
    open var faceAngle: Float { get }
    open var hasSmile: Bool { get }
    open var leftEyeClosed: Bool { get }
    open var rightEyeClosed: Bool { get }
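As far as I can tell from Apple's documentation, hasSmile, leftEyeClosed and rightEyeClosed are only evaluated when the detector is asked for them through the CIDetectorSmile and CIDetectorEyeBlink keys passed to features(in:options:). A minimal sketch under that assumption; the helper name describeExpression is made up for illustration and is not part of the Detector project.

```swift
import CoreImage

// Hypothetical helper: reports smile and blink state for the first face in `image`.
// Returns nil when no face is found.
func describeExpression(in image: CIImage) -> String? {
    let detector = CIDetector(ofType: CIDetectorTypeFace,
                              context: nil,
                              options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])
    // Smile and blink analysis is requested per call through these keys.
    let featureOptions: [String: Any] = [CIDetectorSmile: true,
                                         CIDetectorEyeBlink: true]
    let features = detector?.features(in: image, options: featureOptions) ?? []
    guard let face = features.first as? CIFaceFeature else {
        return nil
    }

    var parts = ["face at \(face.bounds)"]
    if face.hasSmile { parts.append("smiling") }
    if face.leftEyeClosed { parts.append("left eye closed") }
    if face.rightEyeClosed { parts.append("right eye closed") }
    return parts.joined(separator: ", ")
}
```

In CameraViewController this could be called with the CIImage built in detect(), and the returned string used as the alert message.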

Code

Detector