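Stereo vision distance measurement with OpenCV

There is a discussion group, 193369905, and the group owner can also help with related graduation projects. I have been studying stereo vision distance measurement recently. There is a huge amount of material online, in MATLAB, Python, and C++, but in my view none of it is detailed enough, and it is especially hard for beginners to get started. So here is a foolproof tutorial: calibrate with MATLAB through its graphical interface, with no code required, then use C++ to compute distance and the depth map. There is too much theory to cover, so I will skip it; beginners can simply follow the steps.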

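1. Preparation

Hardware

https://item.taobao.com/item.htm?spm=a1z10.1-c-s.w4004-17093912817.2.6af681c0jaZTur&id=562773790704

One stereo camera (as pictured); Taobao link above

Software

VS+opencv3.1

MATLAB with the calibration toolbox

C++ code

For configuring VS with OpenCV, see this blog post: https://www.cnblogs.com/linshuhe/p/5764394.html. It explains the setup in enough detail. We need VS+opencv3.1 to measure distance in real time.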

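2. MATLAB calibration

MATLAB is used to calibrate both the single cameras and the stereo pair. I have already been through this process; you can follow this blog post directly: http://blog.csdn.net/hyacinthkiss/article/details/41317087

We can only really begin once all of the tools above are ready.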

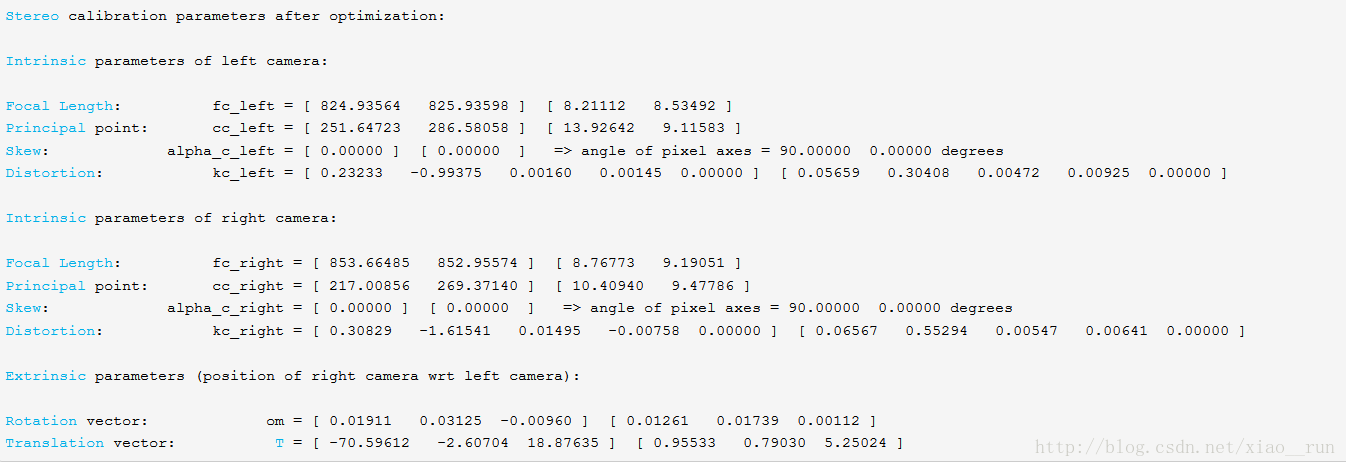

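The MATLAB calibration gives us the following data.

Alternatively, use C++ for the monocular and stereo calibration.

Monocular calibration

#include

Stereo calibration

// Stereo camera calibration

#include

3. Distance measurement with C++ and OpenCV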

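Fill the MATLAB data into the code below. Take great care not to enter anything incorrectly, or you will get bizarre results. I have commented everything very clearly.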

/*

Camera parameters from the prior calibration

fx 0 cx

0 fy cy

0 0 1

*/

Mat cameraMatrixL = (Mat_<double>(3, 3) << 682.55880, 0, 384.13666,

0, 682.24569, 311.19558,

0, 0, 1);

// Left camera intrinsic matrix from the MATLAB calibration

Mat distCoeffL = (Mat_<double>(5, 1) << -0.51614, 0.36098, 0.00523, -0.00225, 0.00000);

// Left camera distortion coefficients from the MATLAB calibration

Mat cameraMatrixR = (Mat_<double>(3, 3) << 685.03817, 0, 397.39092,

0, 682.54282, 272.04875,

0, 0, 1);

// Right camera intrinsic matrix from the MATLAB calibration

Mat distCoeffR = (Mat_<double>(5, 1) << -0.46640, 0.22148, 0.00947, -0.00242, 0.00000);

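// Right camera distortion coefficients from the MATLAB calibration

Mat T = (Mat_<double>(3, 1) << -61.34485, 2.89570, -4.76870); // T: translation vector

// T parameter from the MATLAB calibration

Mat rec = (Mat_<double>(3, 1) << -0.00306, -0.03207, 0.00206); // rec: rotation vector, the om parameter in MATLAB

Mat R; // R: rotation matrix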
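The listing declares R but the rotation vector rec still has to be converted into it. In OpenCV this is normally a single call, cv::Rodrigues(rec, R). To make clear what that conversion does, here is a minimal sketch of the same formula using only the standard library. The names Vec3, Mat3 and rotationFromVector are my own for illustration and are not part of OpenCV or of the original code.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Rodrigues' formula: R = cos(t) I + (1 - cos(t)) k k^T + sin(t) [k]x,
// where t = |r| is the rotation angle in radians and k = r / t is the unit axis.
// (Hypothetical helper; in OpenCV, cv::Rodrigues(rec, R) performs this conversion.)
Mat3 rotationFromVector(const Vec3& r) {
    const double t = std::sqrt(r[0] * r[0] + r[1] * r[1] + r[2] * r[2]);
    Mat3 R = {{{1, 0, 0}, {0, 1, 0}, {0, 0, 1}}};
    if (t < 1e-12) return R;  // zero vector means no rotation: identity

    const Vec3 k = {r[0] / t, r[1] / t, r[2] / t};
    const double c = std::cos(t), s = std::sin(t), v = 1.0 - c;

    // Skew-symmetric cross-product matrix [k]x
    const Mat3 K = {{{0, -k[2], k[1]}, {k[2], 0, -k[0]}, {-k[1], k[0], 0}}};

    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            R[i][j] = c * (i == j ? 1.0 : 0.0) + v * k[i] * k[j] + s * K[i][j];
    return R;
}
```

With the rec values above, whose components are only a few hundredths of a radian, the result should be very close to the identity matrix, which is what you expect from two cameras mounted nearly parallel. A matrix far from identity is a hint that a value was typed in wrong.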

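4. Complete code (here I measure distance using two images taken with the camera above; the code can also be modified for real-time distance measurement on video. Be sure to copy the images into your project directory.)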
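As a rough sketch of the calculation at the heart of the ranging step: for a rectified image pair, the depth of a point follows from its disparity as Z = f * B / d, where f is the focal length in pixels, B is the baseline between the cameras and d is the disparity in pixels. The function names here are hypothetical and this is not the author's listing. It also assumes T is in millimetres, which holds when the chessboard square size was entered in millimetres during calibration. OpenCV's StereoBM and StereoSGBM return 16-bit disparities with 4 fractional bits, so the raw value must be divided by 16 first.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Convert a raw 16-bit disparity from StereoBM/StereoSGBM (fixed point,
// 4 fractional bits) to a disparity in pixels.
double rawToPixels(short raw) {
    return raw / 16.0;
}

// Depth of a point in a rectified stereo pair: Z = f * B / d.
// focalPx:     focal length in pixels
// baselineMm:  distance between the two camera centres (assumed millimetres)
// disparityPx: x_left - x_right in pixels
// Returns the depth in the same unit as the baseline; infinity when the
// disparity is zero or negative (no valid match, or a point at infinity).
double depthFromDisparity(double focalPx, double baselineMm, double disparityPx) {
    assert(focalPx > 0.0 && baselineMm > 0.0);
    if (disparityPx <= 0.0) return std::numeric_limits<double>::infinity();
    return focalPx * baselineMm / disparityPx;
}
```

For example, with a focal length of about 682.6 pixels and a baseline of about 61.3 mm as in the parameters of section 3, a disparity of around 42 pixels corresponds to roughly one metre. Strictly speaking, the focal length to use is the one in the projection matrix produced by rectification, which may differ slightly from the calibrated fx.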

/******************************/

/* Stereo matching and distance measurement */

/******************************/

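#include

If you have questions about this code or the calibration process, or run into any difficulties, you can email [email protected] or join group 193369905 to discuss. Thank you all. Original work is not easy, and tips are welcome.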