大数据学习之路02——第一个MapReduce程序

文章目录

- 目标

- 准备工作

- 新建目录

- 准备文件

- 运行例子

- 在集群上运行 WordCount 程序

- MapReduce 执行过程显示信息

- 查看结果

- 遇到的坑

- 问题一:执行到Running job: job_1557977819409_0004的地方就不往下执行了。

2019-05-17 | 大数据学习之路系列02

目标

单词计数是最简单也是最能体现 MapReduce 思想的程序之一,可以称为 MapReduce 版“Hello World”。

单词计数主要完成功能是:统计一系列文本文件中每个单词出现的次数,如下图所示。

准备工作

新建目录

WZB-MacBook:~ wangzhibin$ hadoop fs -mkdir -p /practice/20190517_mr/input

WZB-MacBook:~ wangzhibin$ hadoop fs -mkdir -p /practice/20190517_mr/output

WZB-MacBook:~ wangzhibin$ hadoop fs -ls -R /practice/20190517_mr

drwxr-xr-x - wangzhibin supergroup 0 2019-05-17 13:53 /practice/20190517_mr/input

drwxr-xr-x - wangzhibin supergroup 0 2019-05-17 13:53 /practice/20190517_mr/output

准备文件

WZB-MacBook:~ wangzhibin$ hadoop fs -put - /practice/20190517_mr/input/file1.txt

Hello World

WZB-MacBook:~ wangzhibin$ hadoop fs -put - /practice/20190517_mr/input/file2.txt

Hello Hadoop

WZB-MacBook:~ wangzhibin$ hadoop fs -ls -R /practice/20190517_mr

drwxr-xr-x - wangzhibin supergroup 0 2019-05-17 14:50 /practice/20190517_mr/input

-rw-r--r-- 1 wangzhibin supergroup 12 2019-05-17 14:49 /practice/20190517_mr/input/file1.txt

-rw-r--r-- 1 wangzhibin supergroup 13 2019-05-17 14:49 /practice/20190517_mr/input/file2.txt

drwxr-xr-x - wangzhibin supergroup 0 2019-05-17 13:53 /practice/20190517_mr/output

运行例子

在集群上运行 WordCount 程序

备注:以 input 作为输入目录,output 目录作为输出目录。

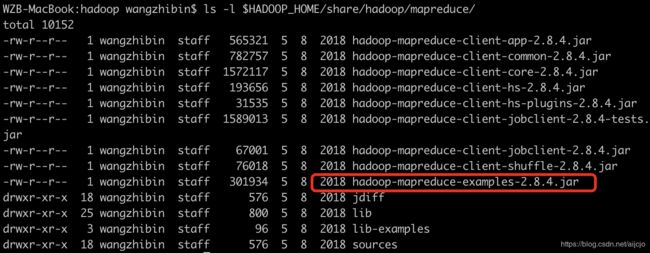

已经编译好的 WordCount 的 Jar 在“$HADOOP_HOME/share/hadoop/mapreduce/”下面,就是“hadoop-mapreduce-examples-2.8.4.jar”,

MapReduce 执行过程显示信息

执行命令:

hadoop jar /Users/wangzhibin/00_dev_suite/50_bigdata/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.4.jar wordcount /practice/20190517_mr/input /practice/20190517_mr/output

执行过程:

WZB-MacBook:hadoop wangzhibin$ hadoop jar /Users/wangzhibin/00_dev_suite/50_bigdata/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.4.jar wordcount /practice/20190517_mr/input /practice/20190517_mr/output

19/05/17 15:39:19 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

19/05/17 15:39:20 INFO input.FileInputFormat: Total input files to process : 2

19/05/17 15:39:20 INFO mapreduce.JobSubmitter: number of splits:2

19/05/17 15:39:20 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1558078701666_0002

19/05/17 15:39:20 INFO impl.YarnClientImpl: Submitted application application_1558078701666_0002

19/05/17 15:39:20 INFO mapreduce.Job: The url to track the job: http://WZB-MacBook.local:8088/proxy/application_1558078701666_0002/

19/05/17 15:39:20 INFO mapreduce.Job: Running job: job_1558078701666_0002

19/05/17 15:39:28 INFO mapreduce.Job: Job job_1558078701666_0002 running in uber mode : false

19/05/17 15:39:28 INFO mapreduce.Job: map 0% reduce 0%

19/05/17 15:39:33 INFO mapreduce.Job: map 100% reduce 0%

19/05/17 15:39:39 INFO mapreduce.Job: map 100% reduce 100%

19/05/17 15:39:39 INFO mapreduce.Job: Job job_1558078701666_0002 completed successfully

19/05/17 15:39:39 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=55

FILE: Number of bytes written=474472

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=271

HDFS: Number of bytes written=25

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=6213

Total time spent by all reduces in occupied slots (ms)=2848

Total time spent by all map tasks (ms)=6213

Total time spent by all reduce tasks (ms)=2848

Total vcore-milliseconds taken by all map tasks=6213

Total vcore-milliseconds taken by all reduce tasks=2848

Total megabyte-milliseconds taken by all map tasks=6362112

Total megabyte-milliseconds taken by all reduce tasks=2916352

Map-Reduce Framework

Map input records=2

Map output records=4

Map output bytes=41

Map output materialized bytes=61

Input split bytes=246

Combine input records=4

Combine output records=4

Reduce input groups=3

Reduce shuffle bytes=61

Reduce input records=4

Reduce output records=3

Spilled Records=8

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=97

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=603979776

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=25

File Output Format Counters

Bytes Written=25

查看结果

WZB-MacBook:hadoop wangzhibin$ hadoop dfs -ls -R /practice/20190517_mr/output/

-rw-r--r-- 1 wangzhibin supergroup 0 2019-05-17 15:39 /practice/20190517_mr/output/_SUCCESS

-rw-r--r-- 1 wangzhibin supergroup 25 2019-05-17 15:39 /practice/20190517_mr/output/part-r-00000

WZB-MacBook:hadoop wangzhibin$ hadoop fs -cat /practice/20190517_mr/output/*

Hadoop 1

Hello 2

World 1

遇到的坑

问题一:执行到Running job: job_1557977819409_0004的地方就不往下执行了。

WZB-MacBook:hadoop wangzhibin$ hadoop jar /Users/wangzhibin/00_dev_suite/50_bigdata/hadoopoop/mapreduce/hadoop-mapreduce-examples-2.8.4.jar wordcount /practice/20190517_mr/input /practice/20190517_mr/output

19/05/17 15:01:03 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

19/05/17 15:01:03 INFO input.FileInputFormat: Total input files to process : 2

19/05/17 15:01:03 INFO mapreduce.JobSubmitter: number of splits:2

19/05/17 15:01:04 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1557977819409_0004

19/05/17 15:01:04 INFO impl.YarnClientImpl: Submitted application application_1557977819409_0004

19/05/17 15:01:04 INFO mapreduce.Job: The url to track the job: http://WZB-MacBook.local:8088/proxy/application_1557977819409_0004/

19/05/17 15:01:04 INFO mapreduce.Job: Running job: job_1557977819409_0004

参考:

- Hadoop相关总结

- Hadoop 运行wordcount任务卡在job running的一种解决办法

- hadoop2.7.x运行wordcount程序卡住在INFO mapreduce.Job: Running job:job _1469603958907_0002

- Can’t run a MapReduce job on hadoop 2.4.0

解决方案:在$HADOOP_HOME/etc/hadoop/yarn-site.xml中增加配置。

<property>

<name>yarn.nodemanager.disk-health-checker.min-healthy-disksname>

<value>0.0value>

property>

<property>

<name>yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentagename>

<value>100.0value>

property>

最终的yarn-site.xml如下:

<configuration>

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>mapreduce_shufflevalue>

property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.classname>

<value>org.apache.hadoop.mapred.ShuffleHandlervalue>

property>

<property>

<name>yarn.nodemanager.disk-health-checker.min-healthy-disksname>

<value>0.0value>

property>

<property>

<name>yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentagename>

<value>100.0value>

property>

configuration>

重启yarn:

./sbin/stop-yarn.sh

./sbin/start-yarn.sh