Mochen's Model Deployment, Part 2: Deploying TensorFlow Serving (CPU + GPU) with nvidia-docker, Environment Setup (1)

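- I. Deploying TensorFlow Serving with Docker (CPU version)

- II. Deploying TensorFlow Serving with Docker (GPU version)

- 1. Installing nvidia-docker

- 2. Pulling the Docker image, running the container, starting the service, and mapping ports

- 3. Running model inference

- III. Serving the handwritten-digit MNIST model over gRPC

- 1. Client environment setup (must be configured in advance)

- 2. Training and saving the MNIST model

- 3. Running the model as a service

Official documentation: TensorFlow Serving with Docker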

I. Deploying TensorFlow Serving with Docker (CPU version)

# Download the TensorFlow Serving Docker image and repo

docker pull tensorflow/serving

git clone https://github.com/tensorflow/serving

# Location of demo models

TESTDATA="$(pwd)/serving/tensorflow_serving/servables/tensorflow/testdata"

# Start TensorFlow Serving container and open the REST API port

# Method 1: run in the foreground; the container is removed on exit (--rm)

sudo docker run -t --rm -p 8501:8501 -v "/home/mochen/tmp/tfserving/serving/tensorflow_serving/servables/tensorflow/testdata/saved_model_half_plus_two_cpu:/models/half_plus_two" -e MODEL_NAME=half_plus_two tensorflow/serving

# Method 2: run detached in the background (-d)

sudo docker run -dt -p 8501:8501 -v "/home/mochen/tmp/tfserving/serving/tensorflow_serving/servables/tensorflow/testdata/saved_model_half_plus_two_cpu:/models/half_plus_two" -e MODEL_NAME=half_plus_two tensorflow/serving

# Method 3: run detached with a named container, using --mount instead of -v

sudo docker run -d -p 8501:8501 --mount type=bind,source=/home/mochen/tmp/tfserving/serving/tensorflow_serving/servables/tensorflow/testdata/saved_model_half_plus_two_cpu/,target=/models/half_plus_two -e MODEL_NAME=half_plus_two -t --name testserver tensorflow/serving

# Query the model using the predict API

curl -d '{"instances": [1.0, 2.0, 5.0]}' -X POST http://localhost:8501/v1/models/half_plus_two:predict

# Returns => { "predictions": [2.5, 3.0, 4.5] }

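- -t: allocates a pseudo-terminal (TTY) for the container, so the server log is shown in the terminal.

- --rm: removes the container automatically when it exits.

- -p: publishes a container port on the host, in the form host_port:container_port (here 8501:8501).

- -v: bind-mounts a host directory into the container, in the form host_path:container_path.

- -e: sets an environment variable inside the container (here MODEL_NAME, the name of the model to serve).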
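The curl query above can also be sent from Python. The following is a minimal sketch using only the standard library; the helper names (`build_predict_request`, `predict`, `half_plus_two`) are illustrative and not part of TensorFlow Serving. It assumes the container from the commands above is listening on localhost:8501.

```python
import json
import urllib.request


def build_predict_request(instances, model="half_plus_two",
                          host="localhost", port=8501):
    """Return (url, body) for a TensorFlow Serving REST predict call."""
    url = "http://{}:{}/v1/models/{}:predict".format(host, port, model)
    body = json.dumps({"instances": instances}).encode("utf-8")
    return url, body


def predict(instances, **kwargs):
    """POST the instances to the server and return the list of predictions."""
    url, body = build_predict_request(instances, **kwargs)
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))["predictions"]


def half_plus_two(x):
    """Local reference for what the demo model computes: x / 2 + 2."""
    return 0.5 * x + 2.0
```

With the server running, `predict([1.0, 2.0, 5.0])` should return the same values as the curl call, and `half_plus_two` gives a local reference to compare against.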

II. Deploying TensorFlow Serving with Docker (GPU version)

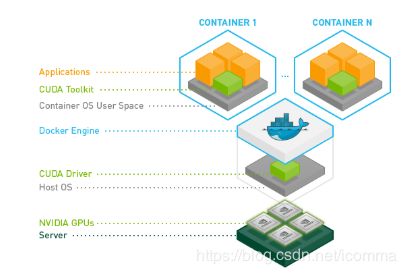

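To let the service use the underlying GPU, nvidia-docker must be installed; plain Docker cannot access the GPU on its own.

As the figure shows, the bottom layer is a server with NVIDIA GPUs. Above it sit the operating system and the CUDA driver, and above those are the individual Docker containers, each bundling its own CUDA Toolkit and cuDNN. For example, one container can run TensorFlow Serving 1.12.0, which uses CUDA 9.0 and cuDNN 7, while another runs TensorFlow Serving 1.6.0, which uses CUDA 8.

The installation steps for nvidia-docker below apply to Ubuntu (see the official link).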

1. Installing nvidia-docker

Repository configuration (official site)

Ubuntu 16.04/18.04, Debian Jessie/Stretch/Buster

My Docker version here is 19.03, the latest at the time of writing.

# Add the package repositories

distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit

sudo systemctl restart docker

Update the repository key

curl -s -L https://nvidia.github.io/nvidia-container-runtime/gpgkey | sudo apt-key add -

Output on success:

OK

Install the nvidia-container-runtime package

# Ubuntu distributions

sudo apt-get install nvidia-container-runtime

Docker engine setup: register the runtime with Docker through a systemd drop-in file

sudo mkdir -p /etc/systemd/system/docker.service.d

sudo tee /etc/systemd/system/docker.service.d/override.conf <<EOF

[Service]

ExecStart=

ExecStart=/usr/bin/dockerd --host=fd:// --add-runtime=nvidia=/usr/bin/nvidia-container-runtime

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

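Alternatively, register the runtime through the daemon configuration file. Use either this file or the systemd drop-in above, not both: dockerd refuses to start when the same option is set both as a command-line flag and in daemon.json.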

sudo tee /etc/docker/daemon.json <<EOF

{

"runtimes": {

"nvidia": {

"path": "/usr/bin/nvidia-container-runtime",

"runtimeArgs": []

}

}

}

EOF

sudo pkill -SIGHUP dockerd
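Note that the `tee` command above overwrites /etc/docker/daemon.json, so any settings already in that file are lost. A minimal sketch of merging the runtime entry into an existing configuration instead is shown below; the function names are hypothetical, and writing the result back to the file (which needs root) is left to the reader.

```python
import copy
import json

# The runtime entry from the daemon.json shown above.
NVIDIA_RUNTIME = {
    "path": "/usr/bin/nvidia-container-runtime",
    "runtimeArgs": [],
}


def add_nvidia_runtime(config):
    """Return a copy of a daemon.json dict with the nvidia runtime registered."""
    merged = copy.deepcopy(config)
    runtimes = merged.setdefault("runtimes", {})
    runtimes["nvidia"] = copy.deepcopy(NVIDIA_RUNTIME)
    return merged


def merge_daemon_json(text):
    """Take the current file contents (possibly empty) and return the new contents."""
    config = json.loads(text) if text.strip() else {}
    return json.dumps(add_nvidia_runtime(config), indent=4, sort_keys=True)
```

For example, `merge_daemon_json(open("/etc/docker/daemon.json").read())` produces text that keeps existing keys and adds the `nvidia` runtime.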

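2. Pulling the Docker image, running the container, starting the service, and mapping ports

- latest: a minimal Docker image with the prebuilt TensorFlow Serving binary installed. It is not intended to be modified and can be deployed directly.

- latest-gpu: the GPU version of the latest image.

- latest-devel: includes all sources, dependencies, and the toolchain, plus a compiled binary that runs on the CPU. devel stands for development: you can open a bash shell in the container, change the configuration, and then use docker commit to create a new image.

- latest-devel-gpu: the GPU version of the latest-devel image.

The gpu suffix means the image can use GPU acceleration; devel means you can open a terminal in the container to customize it.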
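The naming scheme of the four tags can be summarized in a few lines of Python. This is only an illustration of how the suffixes combine; `serving_image` is a hypothetical helper, not part of any TensorFlow tooling.

```python
def serving_image(gpu=False, devel=False, version="latest"):
    """Compose a tensorflow/serving image reference from the tag suffixes."""
    parts = [version]
    if devel:
        parts.append("devel")  # development image with sources and toolchain
    if gpu:
        parts.append("gpu")    # image built with GPU support
    return "tensorflow/serving:" + "-".join(parts)
```

The order matters: devel comes before gpu, as in latest-devel-gpu.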

# Download the TensorFlow Serving Docker image and repo

docker pull tensorflow/serving:latest-gpu

mkdir -p /home/mochen/tmp/tfserving

cd /home/mochen/tmp/tfserving

# Download the example code

git clone https://github.com/tensorflow/serving

# Start TensorFlow Serving container and open the REST API port

# Method 1: run in the foreground; the container is removed on exit (--rm)

sudo docker run --runtime=nvidia -t --rm -p 8501:8501 -v "/home/mochen/tmp/tfserving/serving/tensorflow_serving/servables/tensorflow/testdata/saved_model_half_plus_two_gpu:/models/half_plus_two" -e MODEL_NAME=half_plus_two tensorflow/serving:latest-gpu

# Method 2: run detached in the background (-d)

sudo docker run --runtime=nvidia -dt -p 8501:8501 -v "/home/mochen/tmp/tfserving/serving/tensorflow_serving/servables/tensorflow/testdata/saved_model_half_plus_two_gpu:/models/half_plus_two" -e MODEL_NAME=half_plus_two tensorflow/serving:latest-gpu

# Method 3 (recommended): detached, restarted automatically, named container

sudo docker run --runtime=nvidia --restart always -d -p 8501:8501 --mount type=bind,source=$(pwd)/serving/tensorflow_serving/servables/tensorflow/testdata/saved_model_half_plus_two_gpu/,target=/models/half_plus_two -e MODEL_NAME=half_plus_two -t --name testserver tensorflow/serving:latest-gpu

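- -d: runs the container in the background (optional). The startup messages shown below appear in the terminal only when the container runs in the foreground; for a detached container, view them with docker logs.

- --restart always: restarts the container automatically, so it behaves like a service.

- -e NVIDIA_VISIBLE_DEVICES=0: an optional setting that selects which GPU the container may use (here GPU 0).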

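These commands run the Docker container with the NVIDIA runtime (--runtime=nvidia) so that it can use the GPU, start the TensorFlow Serving model server, and bind REST API port 8501 (the gRPC port is 8500), publishing it on host port 8501 so that external clients can reach it. You can choose a different host port, such as 1234, by passing -p 1234:8501. The --mount option maps the model from the host (source) to the location where the container expects it (target). The model name is also passed as an environment variable, which matters when querying the model because it appears in the request URL.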
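The way the port mapping, mount, and model name fit together can be sketched as a small command builder. This is an illustration under stated assumptions: `docker_run_args` and `as_shell` are hypothetical helpers, and the flags are the ones used in the commands above.

```python
import shlex


def docker_run_args(model_name, model_dir, host_port=8501, container_port=8501,
                    gpu=False, gpu_device=None, detach=True, name=None):
    """Build the argument list for a `docker run` that serves one model."""
    args = ["docker", "run"]
    if gpu:
        args.append("--runtime=nvidia")
        if gpu_device is not None:
            args += ["-e", "NVIDIA_VISIBLE_DEVICES={}".format(gpu_device)]
    if detach:
        args.append("-d")
    if name:
        args += ["--name", name]
    # host_port is what clients connect to; container_port is where the server listens.
    args += ["-p", "{}:{}".format(host_port, container_port)]
    # The server looks for the model under /models/<MODEL_NAME> inside the container.
    args += ["--mount", "type=bind,source={},target=/models/{}".format(
        model_dir, model_name)]
    args += ["-e", "MODEL_NAME={}".format(model_name)]
    args.append("tensorflow/serving:latest-gpu" if gpu else "tensorflow/serving")
    return args


def as_shell(args):
    """Render the argument list as a copy-pasteable shell command."""
    return " ".join(shlex.quote(a) for a in args)
```

For instance, `as_shell(docker_run_args("half_plus_two", "/path/to/model", host_port=1234, gpu=True))` yields a command that publishes the REST API on host port 1234.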

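Note: output like the following indicates that the GPU version of the container started successfully and the server is ready to accept requests: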

2018-07-27 00:07:20.773693: I tensorflow_serving/model_servers/main.cc:333]

Exporting HTTP/REST API at:localhost:8501 ...
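When the container runs detached, this log line is not visible, so readiness can instead be checked through the REST model status endpoint (GET /v1/models/<model_name>). The sketch below polls that endpoint until a model version reports the AVAILABLE state; the function names, timeout, and polling interval are assumptions chosen for illustration.

```python
import json
import time
import urllib.request


def is_available(status):
    """True if any model version in a status response is in state AVAILABLE."""
    versions = status.get("model_version_status", [])
    return any(v.get("state") == "AVAILABLE" for v in versions)


def wait_until_ready(model="half_plus_two", host="localhost", port=8501,
                     timeout=60.0, interval=1.0):
    """Poll the model status endpoint until the model is served or time runs out."""
    url = "http://{}:{}/v1/models/{}".format(host, port, model)
    deadline = time.time() + timeout
    while time.time() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if is_available(json.loads(response.read().decode("utf-8"))):
                    return True
        except (OSError, ValueError):
            pass  # server not reachable yet, or response not valid JSON
        time.sleep(interval)
    return False
```

Calling `wait_until_ready()` after `docker run -d ...` gives a script a simple way to block until the first request can be sent.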

3. Running model inference

# Query the model using the predict API

curl -d '{"instances": [1.0, 2.0, 5.0]}' -X POST http://localhost:8501/v1/models/half_plus_two:predict

# Returns => { "predictions": [2.5, 3.0, 4.5] }

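III. Serving the handwritten-digit MNIST model over gRPC

1. Client environment setup (must be configured in advance)

1.1 Create a separate conda environment for the client (recommended)

# Create the conda environment and activate it

conda create -n tensorflow python=3.6

source activate tensorflow

# Install TensorFlow

conda install tensorflow-gpu==1.13.1

# Switch pip to the Tsinghua mirror

pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

# Install image-related libraries

pip install matplotlib Pillow opencv-python

# Install the gRPC and TensorFlow Serving API packages

pip install -U grpcio grpcio-tools protobuf

pip install tensorflow-serving-api==1.13.1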

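1.2 Add the packages to an existing environment

Install the gRPC packages

pip install --upgrade pip

pip install -U grpcio

pip install -U grpcio-tools

pip install -U protobuf

- -U: upgrades the package to the latest version if it is already installed.

Install the tensorflow-serving-api package

See the official site.

The version of the tensorflow-serving-api package must match the TensorFlow version in the client environment. Here my TensorFlow version is 1.13.1 (GPU build).

pip install tensorflow-serving-api==1.13.1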
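A quick sanity check for the version requirement can look like the sketch below. It compares only the major and minor components, which is an assumption on my part (the text above asks for identical versions); both function names are hypothetical.

```python
def major_minor(version):
    """Return the (major, minor) components of a version string such as '1.13.1'."""
    parts = version.split(".")
    return int(parts[0]), int(parts[1])


def versions_compatible(tf_version, api_version):
    """Assumed rule: TensorFlow and tensorflow-serving-api share major.minor."""
    return major_minor(tf_version) == major_minor(api_version)
```

In the client environment, pass `tf.__version__` as the first argument and the version pinned in the pip command as the second.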

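2. Training and saving the MNIST model

First train a model. To train on the GPU, edit mnist_saved_model.py and add the following line near the top of the file, alongside the imports: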

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

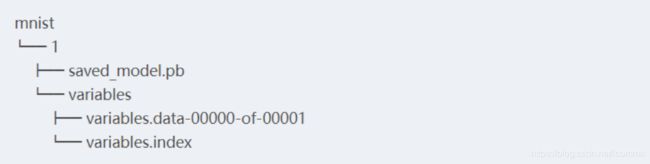

Create an mnist folder inside the model directory, then run the script so that the exported model is saved in that folder.

mkdir -p /home/mochen/hd/models_zoo/mnist

cd serving

python tensorflow_serving/example/mnist_saved_model.py /home/mochen/hd/models_zoo/mnist
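The export script writes the SavedModel into a numbered version subdirectory of the target folder (for example mnist/1), and TensorFlow Serving by default serves the highest-numbered version it finds. A small sketch for checking what was exported follows; the helper names are hypothetical.

```python
import os


def servable_versions(base_dir):
    """List the numeric version subdirectories under a model base path."""
    versions = []
    for entry in os.listdir(base_dir):
        path = os.path.join(base_dir, entry)
        if entry.isdigit() and os.path.isdir(path):
            versions.append(int(entry))
    return sorted(versions)


def latest_version(base_dir):
    """Return the highest version number, or None if nothing was exported."""
    versions = servable_versions(base_dir)
    return versions[-1] if versions else None
```

For example, `latest_version("/home/mochen/hd/models_zoo/mnist")` returning None means the export did not produce a version directory, and the server would have nothing to load.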

3. Running the model as a service

CPU version

sudo docker run -p 8500:8500 --mount type=bind,source=/home/mochen/hd/models_zoo/mnist,target=/models/mnist -e MODEL_NAME=mnist -t tensorflow/serving

GPU version

sudo docker run --runtime=nvidia -p 8500:8500 --mount type=bind,source=/home/mochen/hd/models_zoo/mnist,target=/models/mnist -e MODEL_NAME=mnist -t tensorflow/serving:latest-gpu

Verify from the client:

python tensorflow_serving/example/mnist_client.py --num_tests=1000 --server=127.0.0.1:8500
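For a single request rather than the 1000-image test run, a gRPC client can be sketched as follows. It assumes the packages from section III.1 are installed, and it assumes the signature name (predict_images) and tensor names (images, scores) that the example export script uses; verify them against your exported model. `classify` and `predicted_digit` are hypothetical helper names.

```python
def predicted_digit(scores):
    """Index of the highest score, i.e. the predicted digit 0-9."""
    return max(range(len(scores)), key=lambda i: scores[i])


def classify(image, server="127.0.0.1:8500", timeout=5.0):
    """Send one flattened 28x28 image (784 floats) and return the predicted digit."""
    # Imported lazily so predicted_digit works without the client packages.
    import grpc
    import tensorflow as tf
    from tensorflow_serving.apis import predict_pb2
    from tensorflow_serving.apis import prediction_service_pb2_grpc

    channel = grpc.insecure_channel(server)
    stub = prediction_service_pb2_grpc.PredictionServiceStub(channel)

    request = predict_pb2.PredictRequest()
    request.model_spec.name = "mnist"  # must match MODEL_NAME of the container
    request.model_spec.signature_name = "predict_images"  # assumed signature name
    request.inputs["images"].CopyFrom(
        tf.make_tensor_proto(image, dtype=tf.float32, shape=[1, len(image)]))

    response = stub.Predict(request, timeout=timeout)
    return predicted_digit(list(response.outputs["scores"].float_val))
```

The server address uses port 8500 because the docker run commands above publish only the gRPC port.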