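A Detailed Guide to Installing and Configuring Hadoop

1. Overview

Hadoop can be installed in three modes: standalone, pseudo-distributed, and fully distributed. None of them is hard to set up. This guide concentrates on the fully distributed mode, since that is what production environments use; the other two are mainly for learning and experimentation. Following the steps below one at a time should give you a working distributed Hadoop cluster.

2. Building a Distributed Hadoop Cluster

2.1 Software Preparation

2.2 Environment Preparation

2.3 Procedure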

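Configure hosts

Note: the hosts file maps each node's hostname to its IP address, so that the master node can quickly look up and reach every other node. The file is located at /etc/hosts. Configure it on the three servers as follows.

Perform the following on all three servers.

[root@master ~]# vi /etc/hosts

Once the file is configured, check from each server that the other nodes can be pinged by hostname:

On master, ping slave1
On master, ping slave2
On slave1, ping master
On slave1, ping slave2
On slave2, ping master
On slave2, ping slave1

If every ping succeeds, the hosts file is configured correctly. Next, stop the firewall on all three servers so that it does not cause unnecessary errors in later steps.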
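The hosts entries themselves are not reproduced in the text above. As a minimal sketch, assuming the addresses that section 2.5 lists for the three servers, the entries and a simple lookup check could look like this. The script writes to a scratch file instead of the real /etc/hosts, and `HOSTS_FILE` and `lookup_ip` are illustrative names only.

```shell
#!/bin/sh
# Sketch only: writes to a scratch file, not the real /etc/hosts.
# HOSTS_FILE and lookup_ip are illustrative names, not part of Hadoop.
HOSTS_FILE="$(mktemp)"

cat > "$HOSTS_FILE" <<'EOF'
192.168.1.20 master
192.168.1.21 slave1
192.168.1.22 slave2
EOF

# Print the IP address recorded for a hostname (prints nothing if missing).
lookup_ip() {
    awk -v host="$1" '$2 == host { print $1 }' "$HOSTS_FILE"
}

for node in master slave1 slave2; do
    echo "$node -> $(lookup_ip "$node")"
done
```

On a real node the same three lines would be appended to /etc/hosts as root, after which a command such as `ping -c 3 slave1` confirms that the name resolves and the host is reachable.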

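Create the Hadoop account

Note: create a dedicated user group and user for the Hadoop cluster.

Perform the following on all three servers.

# Create a user group named hadoop
[root@master ~]# groupadd hadoop

# Add a hadoop user whose primary group is hadoop and which is also a member of the root group
[root@master ~]# useradd -s /bin/bash -d /home/hodoop -m hadoop -g hadoop -G root

# Set the password of the hadoop user to hadoop (any password of your choice will do)
[root@master ~]# passwd hadoop
Changing password for user hadoop.
New password:
BAD PASSWORD: it is based on a dictionary word
BAD PASSWORD: is too simple
Retype new password:
passwd: all authentication tokens updated successfully.

# After creating the account, it is best to confirm with su hadoop that you can switch to it
[root@slave1 ~]# su hadoop
[hadoop@slave1 root]$

That completes the creation of the hadoop account. It is recommended to use the same hadoop password on all three servers, because the password has to be entered in many later steps and a single password avoids unnecessary trouble and mistakes.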

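Passwordless SSH access between hosts

Note: this part of the cluster setup is very important and has many small details to watch, so follow the steps carefully, one at a time. A brief introduction to SSH first. SSH key authentication uses an asymmetric key pair (RSA, or DSA as in the commands below). The public key is the shareable half and can be copied to any node; the private key stays on the machine that generated it and is used to prove the user's identity. The session itself is encrypted, which protects the data in transit from being intercepted, and breaking this kind of cryptography is very hard. The nodes of a Hadoop cluster need to access one another, and the node being accessed must verify the identity of the connecting user; Hadoop relies on SSH key authentication for these remote logins. If a password had to be typed for every connection to every node, operating the cluster would be slow and tedious, which is why passwordless, key-based SSH login is configured.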

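Perform the following on all three servers.

# Check that the ssh service is installed on each server. Output like the following means it is installed and working; the command logs in to the local machine itself over ssh
[root@master ~]# ssh localhost
root@localhost's password:
Last login: Fri Jun 26 16:48:43 2015 from 192.168.1.102
[root@master ~]#

# Log out of the current session
[root@master ~]# exit
logout
Connection to localhost closed.
[root@master ~]#

# You can also verify the ssh installation in other ways (optional)

# If ssh turns out not to be installed, install it with yum or rpm (optional)
[root@master ~]# yum install openssh-server openssh-clients

# After installation, start the ssh service, which is usually called sshd (optional; only needed if ssh was not installed before)
[root@master ~]# service sshd start

# Alternatively, use /etc/init.d/sshd start (optional)
[root@master ~]# /etc/init.d/sshd start

# View or edit the ssh service configuration file (required, on all three servers)
[root@master ~]# vi /etc/ssh/sshd_config

# In sshd_config, find the three lines concerned and remove the leading # from each

Note: you can of course configure other aspects of the ssh service in this file, such as the port. Here only those three lines are changed; everything else keeps its default.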
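The three sshd_config lines are not preserved in the text. In tutorials of this period they are usually `RSAAuthentication`, `PubkeyAuthentication` and `AuthorizedKeysFile`; treat that as an assumption rather than as the author's exact choice. Under that assumption, uncommenting them could be sketched as follows, on a scratch copy rather than the real /etc/ssh/sshd_config.

```shell
#!/bin/sh
# Sketch only: works on a scratch copy, not the real /etc/ssh/sshd_config.
# The three directive names are an assumption; the source does not list them.
SSHD_CONFIG="$(mktemp)"

# Stand-in for the relevant part of a stock sshd_config.
cat > "$SSHD_CONFIG" <<'EOF'
#Port 22
#RSAAuthentication yes
#PubkeyAuthentication yes
#AuthorizedKeysFile .ssh/authorized_keys
#PasswordAuthentication yes
EOF

# Remove the leading '#' from exactly the three directives of interest.
for key in RSAAuthentication PubkeyAuthentication AuthorizedKeysFile; do
    sed "s/^#\(${key}[[:space:]]\)/\1/" "$SSHD_CONFIG" > "$SSHD_CONFIG.new" &&
        mv "$SSHD_CONFIG.new" "$SSHD_CONFIG"
done

cat "$SSHD_CONFIG"
```

The other directives stay commented out, which matches the advice to leave everything else at its default. Newer OpenSSH releases no longer accept `RSAAuthentication`, so on a current system only the other two lines would apply.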

# Restart the ssh service (required)
[root@master ~]# service sshd restart
Stopping sshd: [  OK  ]
Starting sshd: [  OK  ]

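Note: the next steps are critical. Check the user account and the paths carefully at every step.

# Generate a public/private key pair on each node.
[hadoop@master ~]$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

This generates the key pair in the .ssh directory under the user's home directory.

Note: id_dsa.pub is the public key and id_dsa is the private key.

# Append the public key to the authorized_keys file. This step is critical, and the file name authorized_keys must be checked carefully: it has to match the file name in the sshd_config line that was uncommented earlier.
[hadoop@master .ssh]$ cat id_dsa.pub >> authorized_keys

# Test passwordless ssh login over the local loopback

At this point the login still asks for a confirmation and for a password. Wasn't it supposed to be passwordless? Keep that question in mind; it is resolved in the steps below.

# Change the permissions of authorized_keys to 600
[hadoop@master .ssh]$ chmod 600 authorized_keys

All of the steps above must be performed on all three servers. Once again, do not forget this!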

#让主节点(master)能够通过SSH免密码登陆两个节点(slave)

说明:为了实现这个功能,两个slave节点的公钥文件中必须包含主节点(master)的公钥信息,这样当master就可以顺利安全地访问这两个slave节点了

注意:以下操作只在slave1和slave2节点上操作

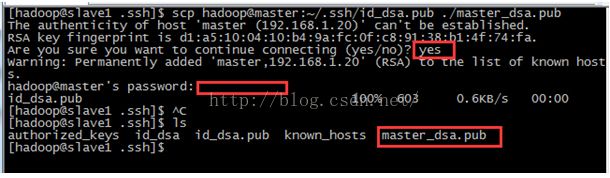

[[email protected]]$scphadoop@master:~/.ssh/id_dsa.pub./master_dsa.pub

上图中密码红线框处很关键,是master主机的hadoop账户的密码,如果你三台服务器hadoop账户的密码设置不一致,就有可能出现忘记或者输入错误密码的问题。同时在.ssh目录下多出了master_dsa.pub公钥文件(master主机中的公钥)

#将master_dsa.pub文件包含的公钥信息追加到slave1的authorized_keys

[[email protected]]$catmaster_dsa.pub>>authorized_keys

说明:操作至此还不能实现master中ssh免密码登陆slave1,为什么呢?因为ssh访问机制中需要远程账户有权限控制

#修改authorized_keys文件权限为600

[[email protected]]$chmod600authorized_keys
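The append-then-chmod sequence above is easy to run twice by accident, which leaves duplicate keys in authorized_keys. A minimal sketch of an idempotent variant is shown below. `authorize_key` is a hypothetical helper name, the key text is a placeholder, and the script works in a scratch directory rather than a real ~/.ssh.

```shell
#!/bin/sh
# Sketch only: operates on a scratch directory instead of a real ~/.ssh.
# authorize_key is an illustrative helper name, not an OpenSSH command.
authorize_key() {
    pubkey_file=$1
    ssh_dir=$2
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    # Append only if this exact key line is not present yet.
    if ! grep -qxF -f "$pubkey_file" "$ssh_dir/authorized_keys"; then
        cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
    fi
    # sshd expects the file to be private to its owner.
    chmod 600 "$ssh_dir/authorized_keys"
}

WORK_DIR="$(mktemp -d)"
# Placeholder key text; a real master_dsa.pub comes from ssh-keygen on master.
echo "ssh-dss AAAAB3PLACEHOLDER hadoop@master" > "$WORK_DIR/master_dsa.pub"

authorize_key "$WORK_DIR/master_dsa.pub" "$WORK_DIR/.ssh"
authorize_key "$WORK_DIR/master_dsa.pub" "$WORK_DIR/.ssh"   # second call adds nothing

wc -l < "$WORK_DIR/.ssh/authorized_keys"
ls -l "$WORK_DIR/.ssh/authorized_keys"
```

On a slave node the equivalent call would pass the copied master_dsa.pub and the hadoop user's own .ssh directory.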

# Restart the sshd service; this requires switching to the root account
[root@slave1 hodoop]# service sshd restart
Stopping sshd: [  OK  ]
Starting sshd: [  OK  ]

# From the master node, log in to slave1 over ssh without a password
[hadoop@slave1 ~]$ ssh slave1
The authenticity of host 'slave1 (::1)' can't be established.
RSA key fingerprint is c8:0e:ff:b2:91:d7:3a:bb:63:43:c8:0a:3e:2a:94:04.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'slave1' (RSA) to the list of known hosts.
Last login: Sun Jun 28 02:20:38 2015 from master
[hadoop@slave1 ~]$ exit
logout
Connection to slave1 closed.
[hadoop@slave1 ~]$ ssh slave1
Last login: Sun Jun 28 02:24:05 2015 from slave1
[hadoop@slave1 ~]$

Note: the first time slave1 is accessed remotely, the connection has to be confirmed by typing yes. After the connection succeeds, log out with exit and run ssh slave1 again; this time no confirmation is requested, and you are logged in to slave1 with the hadoop account as shown above.

Repeat the same steps for slave2. The result below shows that master can log in to slave2 without a password.
[hadoop@master ~]$ ssh slave2
Last login: Sat Jun 27 02:01:15 2015 from localhost.localdomain
[hadoop@slave2 ~]$

Passwordless SSH login to every node is now configured.

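Install the JDK

Follow the instructions at the link below. The end result must be a working JDK on all three servers.

http://www.cnblogs.com/zhoulf/archive/2013/02/04/2891608.html

Be sure to choose the version that matches your server.

jdk1.7.0_51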

Install Hadoop

Perform all of the following on master, paying attention to which account is used.

# Extract hadoop-2.6.0.tar.gz
[root@master src]# tar -zxvf hadoop-2.6.0.tar.gz

# Rename the extracted directory to hadoop
[root@master src]# mv hadoop-2.6.0 hadoop
[root@master src]# ll
total 310468
drwxr-xr-x. 2 root root 4096 Nov 11 2010 debug
drwxr-xr-x. 9 20000 20000 4096 Nov 14 2014 hadoop
-rw-r--r--. 1 root root 195257604 Jun 26 2015 hadoop-2.6.0.tar.gz
-rw-r--r--. 1 root root 122639592 Sep 26 2014 jdk-7u51-linux-x64.rpm
drwxr-xr-x. 2 root root 4096 Nov 11 2010 kernels

# Configure the Hadoop environment variables (in /etc/profile, which is sourced in a later step)
export JAVA_HOME=/usr/java/jdk1.7.0_51
export JRE_HOME=/usr/java/jdk1.7.0_51/jre
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib
export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin
export HADOOP_HOME=/usr/src/hadoop
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

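# Edit hadoop-env.sh

Note: this file is at etc/hadoop/hadoop-env.sh under the Hadoop installation directory, that is, /usr/src/hadoop/etc/hadoop/hadoop-env.sh.

# Configure slaves

Note: this file is at etc/hadoop/slaves under the Hadoop installation directory.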
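The contents of these two files are not shown in the text. A plausible minimal version, given that the DataNodes in this guide run on slave1 and slave2 and that the JDK lives in /usr/java/jdk1.7.0_51, is sketched below. Both the file contents and the scratch directory `CONF_DIR` are assumptions for illustration; on the cluster the files live under /usr/src/hadoop/etc/hadoop.

```shell
#!/bin/sh
# Sketch only: CONF_DIR is a scratch stand-in for /usr/src/hadoop/etc/hadoop.
CONF_DIR="$(mktemp -d)"

# Assumed contents of slaves: one worker hostname per line.
printf '%s\n' slave1 slave2 > "$CONF_DIR/slaves"

# Stand-in for the stock JAVA_HOME line of hadoop-env.sh,
# which is then pinned to an explicit JDK path.
echo 'export JAVA_HOME=${JAVA_HOME}' > "$CONF_DIR/hadoop-env.sh"
sed 's|^export JAVA_HOME=.*|export JAVA_HOME=/usr/java/jdk1.7.0_51|' \
    "$CONF_DIR/hadoop-env.sh" > "$CONF_DIR/hadoop-env.sh.new" &&
    mv "$CONF_DIR/hadoop-env.sh.new" "$CONF_DIR/hadoop-env.sh"

cat "$CONF_DIR/slaves" "$CONF_DIR/hadoop-env.sh"
```

Setting JAVA_HOME explicitly in hadoop-env.sh matters because the daemons are started over ssh, where the variables exported in a login profile may not be present.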

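# Configure core-site.xml

Note: this file is at etc/hadoop/core-site.xml under the Hadoop installation directory.

[root@master hadoop]# vi core-site.xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/src/hadoop/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>hadoop.proxyuser.spark.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.spark.groups</name>
    <value>*</value>
  </property>
</configuration>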
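A typo in a property name or value is hard to spot once the file grows. As a rough sketch, a small helper can read a value back out of a site file for a quick check. `get_prop` is a hypothetical name, and the parsing assumes each `<name>` and `<value>` element sits on its own line; it is not a general XML parser.

```shell
#!/bin/sh
# Sketch only: get_prop is an illustrative helper, not a Hadoop command.
# It assumes each <name> and <value> element sits on its own line.
get_prop() {
    awk -v want="$2" '
        /<name>/  { gsub(/.*<name>|<\/name>.*/, ""); name = $0; next }
        /<value>/ && name == want {
            gsub(/.*<value>|<\/value>.*/, ""); print; exit
        }
    ' "$1"
}

# Scratch copy holding two of the core-site.xml properties from this guide.
SITE_FILE="$(mktemp)"
cat > "$SITE_FILE" <<'EOF'
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/src/hadoop/tmp</value>
  </property>
</configuration>
EOF

get_prop "$SITE_FILE" fs.defaultFS
get_prop "$SITE_FILE" hadoop.tmp.dir
```

On a node where Hadoop is already installed, `hdfs getconf -confKey fs.defaultFS` reports the value that Hadoop itself resolves, which is the more authoritative check.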

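# Configure yarn-env.sh: add the following environment variable at the top of the file

Note: this file is at etc/hadoop/yarn-env.sh under the Hadoop installation directory.

# some Java parameters
export JAVA_HOME=/usr/java/jdk1.7.0_51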

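# Configure hdfs-site.xml

Note: this file is at etc/hadoop/hdfs-site.xml under the Hadoop installation directory.

<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>master:9001</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/src/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/src/hadoop/dfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>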

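# Configure mapred-site.xml

Note: this file is at etc/hadoop/mapred-site.xml under the Hadoop installation directory. It is created by copying the template:

[root@master hadoop]# cp mapred-site.xml.template mapred-site.xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>master:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>master:19888</value>
  </property>
</configuration>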

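# Configure yarn-site.xml

Note: this file is at etc/hadoop/yarn-site.xml under the Hadoop installation directory.

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>master:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>master:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>master:8035</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>master:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>master:8088</value>
  </property>
</configuration>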

# Give ownership of the hadoop directory to the hadoop user
[root@master src]# chown -R hadoop:hadoop hadoop
[root@master src]# ll
total 310468
drwxr-xr-x. 2 root root 4096 Nov 11 2010 debug
drwxr-xr-x. 9 hadoop hadoop 4096 Nov 14 2014 hadoop
-rw-r--r--. 1 root root 195257604 Jun 26 2015 hadoop-2.6.0.tar.gz
-rw-r--r--. 1 root root 122639592 Sep 26 2014 jdk-7u51-linux-x64.rpm
drwxr-xr-x. 2 root root 4096 Nov 11 2010 kernels
[root@master src]#

# Create the tmp directory and give its ownership to the hadoop user
[root@master src]# cd hadoop
[root@master hadoop]# ll
total 52
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 bin
drwxr-xr-x. 3 hadoop hadoop 4096 Nov 14 2014 etc
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 include
drwxr-xr-x. 3 hadoop hadoop 4096 Nov 14 2014 lib
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 libexec
-rw-r--r--. 1 hadoop hadoop 15429 Nov 14 2014 LICENSE.txt
-rw-r--r--. 1 hadoop hadoop 101 Nov 14 2014 NOTICE.txt
-rw-r--r--. 1 hadoop hadoop 1366 Nov 14 2014 README.txt
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 sbin
drwxr-xr-x. 4 hadoop hadoop 4096 Nov 14 2014 share
[root@master hadoop]# mkdir tmp
[root@master hadoop]# ll
total 56
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 bin
drwxr-xr-x. 3 hadoop hadoop 4096 Nov 14 2014 etc
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 include
drwxr-xr-x. 3 hadoop hadoop 4096 Nov 14 2014 lib
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 libexec
-rw-r--r--. 1 hadoop hadoop 15429 Nov 14 2014 LICENSE.txt
-rw-r--r--. 1 hadoop hadoop 101 Nov 14 2014 NOTICE.txt
-rw-r--r--. 1 hadoop hadoop 1366 Nov 14 2014 README.txt
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 sbin
drwxr-xr-x. 4 hadoop hadoop 4096 Nov 14 2014 share
drwxr-xr-x. 2 root root 4096 Jun 26 19:17 tmp
[root@master hadoop]# chown -R hadoop:hadoop tmp
[root@master hadoop]# ll
total 56
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 bin
drwxr-xr-x. 3 hadoop hadoop 4096 Nov 14 2014 etc
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 include
drwxr-xr-x. 3 hadoop hadoop 4096 Nov 14 2014 lib
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 libexec
-rw-r--r--. 1 hadoop hadoop 15429 Nov 14 2014 LICENSE.txt
-rw-r--r--. 1 hadoop hadoop 101 Nov 14 2014 NOTICE.txt
-rw-r--r--. 1 hadoop hadoop 1366 Nov 14 2014 README.txt
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 14 2014 sbin
drwxr-xr-x. 4 hadoop hadoop 4096 Nov 14 2014 share
drwxr-xr-x. 2 hadoop hadoop 4096 Jun 26 19:17 tmp
[root@master hadoop]#

The Hadoop configuration on the master server is now complete. All of the steps above are performed only on master.

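Slave1 configuration:

# Copy hadoop from master to the /usr/src directory on slave1. This path matters: core-site.xml contains directory settings for the node, so the path must stay consistent with them

General form: scp -r /usr/hadoop root@<server IP>:/usr/

[root@master src]# scp -r hadoop root@slave1:/usr/src

# Copy it to slave2 in the same way
[root@master src]# scp -r hadoop root@slave2:/usr/src

Both of the steps above are performed on master.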

# Confirm that the hadoop files were copied to slave1
[hadoop@slave1 src]$ ll
total 119780
drwxr-xr-x. 2 root root 4096 Nov 11 2010 debug
drwxr-xr-x. 10 root root 4096 Jun 28 03:40 hadoop
-rw-r--r--. 1 root root 122639592 Sep 26 2014 jdk-7u51-linux-x64.rpm
drwxr-xr-x. 2 root root 4096 Nov 11 2010 kernels
[hadoop@slave1 src]$

# Switch to the root user and give ownership of the hadoop directory to the hadoop user
[root@slave1 src]# chown -R hadoop:hadoop hadoop
[root@slave1 src]# ll
total 119780
drwxr-xr-x. 2 root root 4096 Nov 11 2010 debug
drwxr-xr-x. 10 hadoop hadoop 4096 Jun 28 03:40 hadoop
-rw-r--r--. 1 root root 122639592 Sep 26 2014 jdk-7u51-linux-x64.rpm
drwxr-xr-x. 2 root root 4096 Nov 11 2010 kernels
[root@slave1 src]#

# Configure the Hadoop environment variables on slave1

# Make the environment variables take effect
[root@slave1 src]# source /etc/profile
[root@slave1 src]# echo $HADOOP_HOME
/usr/src/hadoop
[root@slave1 src]#

# Confirm that the hadoop files were copied to slave2
[hadoop@slave2 src]$ ll
total 119780
drwxr-xr-x. 2 root root 4096 Nov 11 2010 debug
drwxr-xr-x. 10 root root 4096 Jun 27 03:32 hadoop
-rw-r--r--. 1 root root 122639592 Sep 26 2014 jdk-7u51-linux-x64.rpm
drwxr-xr-x. 2 root root 4096 Nov 11 2010 kernels
[hadoop@slave2 src]$

# Give ownership of the hadoop directory to the hadoop user
[root@slave2 src]# chown -R hadoop:hadoop hadoop
[root@slave2 src]# ll
total 119780
drwxr-xr-x. 2 root root 4096 Nov 11 2010 debug
drwxr-xr-x. 10 hadoop hadoop 4096 Jun 27 03:32 hadoop
-rw-r--r--. 1 root root 122639592 Sep 26 2014 jdk-7u51-linux-x64.rpm
drwxr-xr-x. 2 root root 4096 Nov 11 2010 kernels
[root@slave2 src]#

# Configure the Hadoop environment variables on slave2

# Make them take effect and verify
[root@slave2 src]# source /etc/profile
[root@slave2 src]# echo $HADOOP_HOME
/usr/src/hadoop
[root@slave2 src]#

Hadoop is now installed and configured on master, slave1, and slave2.

Finally, make sure the firewall is stopped on all three servers by running the following as root on each of them:

service iptables stop

2.4 Verification

2.4.1 Format the NameNode

Run on master.

# Format the namenode
[hadoop@master hadoop]$ hdfs namenode -format
15/06/26 19:38:57 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG:   host = master/192.168.1.20
STARTUP_MSG:   args = [-format]
STARTUP_MSG:   version = 2.6.0

STARTUP_MSG:   classpath = /usr/src/hadoop/etc/hadoop:/usr/src/hadoop/share/hadoop/common/lib/zookeeper-3.4.6.jar:... (remaining jar entries under /usr/src/hadoop/share/hadoop omitted) ...:/usr/src/hadoop/contrib/capacity-scheduler/*.jar

STARTUP_MSG:   build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z
STARTUP_MSG:   java = 1.7.0_51
************************************************************/
15/06/26 19:38:57 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
15/06/26 19:38:57 INFO namenode.NameNode: createNameNode [-format]
15/06/26 19:38:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Formatting using clusterid: CID-fcc57b68-258e-4678-a20d-0798a1583185
15/06/26 19:39:01 INFO namenode.FSNamesystem: No KeyProvider found.
15/06/26 19:39:01 INFO namenode.FSNamesystem: fsLock is fair:true
15/06/26 19:39:01 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
15/06/26 19:39:01 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
15/06/26 19:39:01 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
15/06/26 19:39:01 INFO blockmanagement.BlockManager: The block deletion will start around 2015 Jun 26 19:39:01
15/06/26 19:39:01 INFO util.GSet: Computing capacity for map BlocksMap
15/06/26 19:39:01 INFO util.GSet: VM type = 64-bit
15/06/26 19:39:01 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB
15/06/26 19:39:01 INFO util.GSet: capacity = 2^21 = 2097152 entries
15/06/26 19:39:01 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
15/06/26 19:39:01 INFO blockmanagement.BlockManager: defaultReplication = 2
15/06/26 19:39:01 INFO blockmanagement.BlockManager: maxReplication = 512
15/06/26 19:39:01 INFO blockmanagement.BlockManager: minReplication = 1
15/06/26 19:39:01 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
15/06/26 19:39:01 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
15/06/26 19:39:01 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
15/06/26 19:39:01 INFO blockmanagement.BlockManager: encryptDataTransfer = false
15/06/26 19:39:01 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
15/06/26 19:39:01 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)
15/06/26 19:39:01 INFO namenode.FSNamesystem: supergroup = supergroup
15/06/26 19:39:01 INFO namenode.FSNamesystem: isPermissionEnabled = true
15/06/26 19:39:01 INFO namenode.FSNamesystem: Determined nameservice ID: hadoop-cluster1
15/06/26 19:39:01 INFO namenode.FSNamesystem: HA Enabled: false
15/06/26 19:39:01 INFO namenode.FSNamesystem: Append Enabled: true
15/06/26 19:39:02 INFO util.GSet: Computing capacity for map INodeMap
15/06/26 19:39:02 INFO util.GSet: VM type = 64-bit
15/06/26 19:39:02 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB
15/06/26 19:39:02 INFO util.GSet: capacity = 2^20 = 1048576 entries
15/06/26 19:39:02 INFO namenode.NameNode: Caching file names occuring more than 10 times
15/06/26 19:39:02 INFO util.GSet: Computing capacity for map cachedBlocks
15/06/26 19:39:02 INFO util.GSet: VM type = 64-bit
15/06/26 19:39:02 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB
15/06/26 19:39:02 INFO util.GSet: capacity = 2^18 = 262144 entries
15/06/26 19:39:02 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
15/06/26 19:39:02 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
15/06/26 19:39:02 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
15/06/26 19:39:02 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
15/06/26 19:39:02 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
15/06/26 19:39:02 INFO util.GSet: Computing capacity for map NameNodeRetryCache
15/06/26 19:39:02 INFO util.GSet: VM type = 64-bit
15/06/26 19:39:02 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
15/06/26 19:39:02 INFO util.GSet: capacity = 2^15 = 32768 entries
15/06/26 19:39:02 INFO namenode.NNConf: ACLs enabled? false
15/06/26 19:39:02 INFO namenode.NNConf: XAttrs enabled? true
15/06/26 19:39:02 INFO namenode.NNConf: Maximum size of an xattr: 16384
15/06/26 19:39:02 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1680202825-192.168.1.20-1435318742720
15/06/26 19:39:02 INFO common.Storage: Storage directory /usr/src/hadoop/dfs/name has been successfully formatted.
15/06/26 19:39:03 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
15/06/26 19:39:03 INFO util.ExitUtil: Exiting with status 0
15/06/26 19:39:03 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at master/192.168.1.20
************************************************************/
[hadoop@master hadoop]$
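The format log is long, and only two of its lines really indicate success: the storage directory message and the exit status. A minimal sketch of an automated check on captured output follows. `format_succeeded` is a hypothetical helper name, and the sample text imitates the two relevant lines of the log.

```shell
#!/bin/sh
# Sketch only: format_succeeded is an illustrative helper name.
# It scans captured "hdfs namenode -format" output for the two lines
# that indicate success.
format_succeeded() {
    printf '%s\n' "$1" | grep -q 'has been successfully formatted' &&
        printf '%s\n' "$1" | grep -q 'Exiting with status 0'
}

# Sample lines in the shape Hadoop prints. On a real master the output
# could be captured with: FORMAT_LOG="$(hdfs namenode -format 2>&1)"
FORMAT_LOG='15/06/26 19:39:02 INFO common.Storage: Storage directory /usr/src/hadoop/dfs/name has been successfully formatted.
15/06/26 19:39:03 INFO util.ExitUtil: Exiting with status 0'

if format_succeeded "$FORMAT_LOG"; then
    echo "namenode format OK"
else
    echo "namenode format FAILED" >&2
fi
```

Keep in mind that formatting erases existing NameNode metadata, so it is meant for the initial setup rather than for routine checks.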

# Start all services
[hadoop@master hadoop]$ start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
15/06/26 20:19:18 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Starting namenodes on [master]
master: starting namenode, logging to /usr/src/hadoop/logs/hadoop-hadoop-namenode-master.out
slave2: starting datanode, logging to /usr/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out
slave1: starting datanode, logging to /usr/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out
Starting secondary namenodes [master]
master: starting secondarynamenode, logging to /usr/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out
15/06/26 20:19:47 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
starting yarn daemons
starting resourcemanager, logging to /usr/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out
slave1: starting nodemanager, logging to /usr/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out
slave2: starting nodemanager, logging to /usr/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out
[hadoop@master hadoop]$ jps
30406 SecondaryNameNode
30803 Jps
30224 NameNode
30546 ResourceManager

Verify on slave1
[hadoop@master hadoop]$ ssh slave1
Last login: Sun Jun 28 04:36:22 2015 from master
[hadoop@slave1 ~]$ jps
27615 DataNode
27849 Jps
27716 NodeManager
[hadoop@slave1 ~]$

Verify on slave2
[hadoop@master hadoop]$ ssh slave2
Last login: Sat Jun 27 04:26:30 2015 from master
[hadoop@slave2 ~]$ jps
4605 DataNode
4704 NodeManager
4837 Jps
[hadoop@slave2 ~]$
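Checking jps by eye on three machines gets tedious. One way to automate it, sketched here under the assumption that the expected daemons are the ones seen in the jps listings (NameNode, SecondaryNameNode and ResourceManager on master; DataNode and NodeManager on the slaves), is a helper that reports which daemons are absent. `missing_daemons` is a hypothetical name.

```shell
#!/bin/sh
# Sketch only: missing_daemons is an illustrative helper name.
# Usage: missing_daemons "<jps output>" Daemon1 Daemon2 ...
# Prints every expected daemon that does not appear in the jps output.
missing_daemons() {
    jps_output=$1
    shift
    for daemon in "$@"; do
        # jps prints "<pid> <main class>", so compare the second field exactly.
        printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
            echo "$daemon"
    done
}

# Sample jps output for master; on a real node use: "$(jps)"
MASTER_JPS='30406 SecondaryNameNode
30803 Jps
30224 NameNode
30546 ResourceManager'

# Prints nothing when every expected master daemon is running.
missing_daemons "$MASTER_JPS" NameNode SecondaryNameNode ResourceManager
# Prints the worker daemons, since they do not run on master.
missing_daemons "$MASTER_JPS" DataNode NodeManager
```

Because the comparison is on the whole class name, SecondaryNameNode is not mistaken for NameNode. For the slaves, the jps output can be fetched over the passwordless ssh connection configured earlier, for example with `ssh slave1 jps`.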

Check the cluster status
Connection to slave2 closed.
[hadoop@master hadoop]$ hdfs dfsadmin -report
15/06/26 20:21:12 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Configured Capacity: 37073182720 (34.53 GB)
Present Capacity: 27540463616 (25.65 GB)
DFS Remaining: 27540414464 (25.65 GB)
DFS Used: 49152 (48 KB)
DFS Used%: 0.00%
Under replicated blocks: 0
Blocks with corrupt replicas: 0
Missing blocks: 0

-------------------------------------------------
Live datanodes (2):

Name: 192.168.1.22:50010 (slave2)
Hostname: slave2
Decommission Status : Normal
Configured Capacity: 18536591360 (17.26 GB)
DFS Used: 24576 (24 KB)
Non DFS Used: 4712751104 (4.39 GB)
DFS Remaining: 13823815680 (12.87 GB)
DFS Used%: 0.00%
DFS Remaining%: 74.58%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Fri Jun 26 20:21:13 CST 2015

Name: 192.168.1.21:50010 (slave1)
Hostname: slave1
Decommission Status : Normal
Configured Capacity: 18536591360 (17.26 GB)
DFS Used: 24576 (24 KB)
Non DFS Used: 4819968000 (4.49 GB)
DFS Remaining: 13716598784 (12.77 GB)
DFS Used%: 0.00%
DFS Remaining%: 74.00%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Fri Jun 26 20:21:14 CST 2015

[hadoop@master hadoop]$
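The key figure in this report is the number of live DataNodes, which should equal the number of slave nodes. A minimal sketch of extracting it from the report text follows. `live_datanodes` is a hypothetical helper name, and the sample excerpt imitates the report format.

```shell
#!/bin/sh
# Sketch only: live_datanodes is an illustrative helper name.
# It extracts N from the "Live datanodes (N):" line of a dfsadmin report.
live_datanodes() {
    printf '%s\n' "$1" | sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p'
}

# Sample excerpt; on a real master use: REPORT="$(hdfs dfsadmin -report 2>/dev/null)"
REPORT='Missing blocks: 0
-------------------------------------------------
Live datanodes (2):
Name: 192.168.1.22:50010 (slave2)
Name: 192.168.1.21:50010 (slave1)'

EXPECTED=2   # slave1 and slave2
if [ "$(live_datanodes "$REPORT")" = "$EXPECTED" ]; then
    echo "all $EXPECTED datanodes are live"
else
    echo "expected $EXPECTED live datanodes" >&2
fi
```

If the count is lower than expected, the DataNode log under /usr/src/hadoop/logs on the missing slave is the first place to look.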

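The Hadoop cluster setup is now complete.

2.5 Testing

Add the three servers to the hosts file of your local machine:

192.168.1.20 master
192.168.1.21 slave1
192.168.1.22 slave2

The web management pages on master can now be reached from the local machine.

Open http://master:50070/ in a browser to view the various states of the Hadoop cluster.

Open http://master:8088/ in a browser to view the YARN ResourceManager page.

Run the wordcount example program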

[hadoop@master hadoop]$ hdfs dfs -ls /
15/06/26 20:37:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@master hadoop]$ hdfs dfs -mkdir /input
15/06/26 20:38:44 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@master hadoop]$ hdfs dfs -ls /
15/06/26 20:38:50 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Found 1 items
drwxr-xr-x - hadoop supergroup 0 2015-06-26 20:38 /input

Create three local text files, 11.txt, 12.txt, and 13.txt, with the following contents:

11.txt: hello world!
12.txt: hello hadoop
13.txt: hello JD.com

[root@master src]# su hadoop
[hadoop@master src]$ ll
total 310480
-rw-r--r--. 1 root root 13 Jun 26 20:40 11.txt
-rw-r--r--. 1 root root 14 Jun 26 20:41 12.txt
-rw-r--r--. 1 root root 13 Jun 26 20:41 13.txt
drwxr-xr-x. 2 root root 4096 Nov 11 2010 debug
drwxr-xr-x. 12 hadoop hadoop 4096 Jun 26 20:15 hadoop
-rw-r--r--. 1 root root 195257604 Jun 26 2015 hadoop-2.6.0.tar.gz
-rw-r--r--. 1 root root 122639592 Sep 26 2014 jdk-7u51-linux-x64.rpm
drwxr-xr-x. 2 root root 4096 Nov 11 2010 kernels
[hadoop@master src]$

Upload the local text files to the HDFS file system
[hadoop@master src]$ hdfs dfs -put 1*.txt /input
15/06/26 20:43:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@master src]$ hdfs dfs -ls /input
15/06/26 20:43:18 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Found 3 items
-rw-r--r-- 2 hadoop supergroup 13 2015-06-26 20:43 /input/11.txt
-rw-r--r-- 2 hadoop supergroup 14 2015-06-26 20:43 /input/12.txt
-rw-r--r-- 2 hadoop supergroup 13 2015-06-26 20:43 /input/13.txt

[hadoop@master hadoop]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar wordcount /input /out

View the word count results:
[hadoop@master hadoop]$ hdfs dfs -ls /out
15/06/27 18:18:20 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Found 1 items
-rw-r--r-- 2 hadoop supergroup 35 2015-06-27 18:18 /out/part-r-00000
[hadoop@master hadoop]$ hdfs dfs -cat /out/part-r-00000
15/06/27 18:18:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
JD.com 1
hadoop 1
hello 3
world! 1
[hadoop@master hadoop]$
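To cross-check the job output without the cluster, the same count can be reproduced locally with standard tools. This is only a sketch of what wordcount computes for whitespace-separated tokens, not how the MapReduce job is implemented. `local_wordcount` is a hypothetical name, and the three files are recreated in a scratch directory.

```shell
#!/bin/sh
# Sketch only: a local stand-in for the wordcount job, for cross-checking.
INPUT_DIR="$(mktemp -d)"
echo 'hello world!' > "$INPUT_DIR/11.txt"
echo 'hello hadoop' > "$INPUT_DIR/12.txt"
echo 'hello JD.com' > "$INPUT_DIR/13.txt"

# Split on whitespace, count identical tokens, and print "word<TAB>count"
# sorted in byte order (LC_ALL=C), which puts uppercase before lowercase.
local_wordcount() {
    cat "$@" | tr -s ' \t' '\n\n' | grep -v '^$' |
        LC_ALL=C sort | uniq -c | awk '{ print $2 "\t" $1 }'
}

local_wordcount "$INPUT_DIR"/1*.txt
```

The byte-order sort is what places JD.com before hadoop, the same ordering that appears in part-r-00000.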

Stop the services
[hadoop@master hadoop]$ stop-all.sh
This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh
15/06/27 18:19:37 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Stopping namenodes on [master]
master: stopping namenode
slave2: stopping datanode
slave1: stopping datanode
Stopping secondary namenodes [master]
master: stopping secondarynamenode
15/06/27 18:20:08 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
stopping yarn daemons
stopping resourcemanager
slave2: stopping nodemanager
slave1: stopping nodemanager
slave2: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
slave1: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
no proxyserver to stop
[hadoop@master hadoop]$

Testing of the Hadoop cluster environment is now complete!