Summary of HBase Startup Problems


- 1) Server is not running yet

  - Solution

- 2) KeeperErrorCode = NoNode for /hbase/master

  - Solution

1) Server is not running yet

After entering the HBase client with ./hbase shell, trying to create a table fails with Server is not running yet:

$ ./hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/root/hadoop-2.10.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/root/hbase-2.2.2/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.2.2, re6513a76c91cceda95dad7af246ac81d46fa2589, Sat Oct 19 10:10:12 UTC 2019

Took 0.0322 seconds

hbase(main):001:0> create 'test','cf'

ERROR: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:2794)

at org.apache.hadoop.hbase.master.MasterRpcServices.isMasterRunning(MasterRpcServices.java:1134)

at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:413)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:133)

at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:338)

at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:318)

For usage try 'help "create"'

Solution

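Solutions found online attribute this to Hadoop being in safe mode.

When the distributed file system starts, it first enters safe mode. While HDFS is in safe mode, nothing in the file system can be modified or deleted until safe mode ends. Safe mode exists mainly so that, at startup, the system can check the validity of the data blocks on each DataNode and replicate or delete blocks as its policy requires. Safe mode can also be entered at runtime with a command. In practice, trying to modify or delete files right after startup also produces an error saying the change is not allowed in safe mode; usually it is enough to wait a little while. Alternatively, you can force safe mode off: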

hadoop dfsadmin -safemode leave
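To decide from a script whether HDFS is still in safe mode (before waiting or forcing it off), the status line printed by dfsadmin can be parsed. This is a minimal sketch, not part of Hadoop: parse_safemode and safemode_status are hypothetical names, and it assumes the hdfs binary is on PATH and prints a line of the form "Safe mode is ON" / "Safe mode is OFF", as in the output shown in this article.

```python
import re
import subprocess
from typing import Optional

# Status line printed by `hdfs dfsadmin -safemode get|leave|forceExit`,
# e.g. "Safe mode is ON". DEBUG lines such as the NativeCodeLoader ones are skipped.
_STATUS = re.compile(r"Safe mode is (ON|OFF)\b")


def parse_safemode(output: str) -> Optional[bool]:
    """Return True if safe mode is ON, False if OFF, None if no status line is found."""
    for line in output.splitlines():
        match = _STATUS.match(line.strip())
        if match:
            return match.group(1) == "ON"
    return None


def safemode_status(action: str = "get") -> Optional[bool]:
    """Hypothetical helper: run `hdfs dfsadmin -safemode <action>` and parse the result.

    Assumes `hdfs` is on PATH (otherwise FileNotFoundError is raised). If the
    NameNode is unreachable, as with the "Connection refused" error in this
    article, no status line is printed and None is returned.
    """
    result = subprocess.run(
        ["hdfs", "dfsadmin", "-safemode", action],
        capture_output=True,
        text=True,
        check=False,
    )
    return parse_safemode(result.stdout + "\n" + result.stderr)
```

If waiting is acceptable, hdfs dfsadmin -safemode wait blocks until safe mode ends on its own, which is gentler than forcing it off.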

However, running hadoop dfsadmin -safemode leave failed with another error: Call From master/1.1.1.5 to master:8020 failed on connection exception: java.net.ConnectException: Connection refused

[root@master bin]# hdfs dfsadmin -safemode leave

19/12/28 01:45:53 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

19/12/28 01:45:53 DEBUG util.NativeCodeLoader: Loaded the native-hadoop library

safemode: Call From master/1.1.1.5 to master:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

master:8020 was the value of fs.defaultFS from when I configured Hadoop earlier; see the article "HDFS的fs.defaultFS的端口" ("The fs.defaultFS port in HDFS").

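In Hadoop 2 HDFS, fs.defaultFS is set in core-site.xml and its default port is 8020. Because this is the RPC port on which the NameNode accepts client connections, it must agree with the RPC port configured in hdfs-site.xml: if hdfs-site.xml sets the RPC port to 9000, fs.defaultFS must use port 9000 as well. Otherwise clients such as dfsadmin connect to 8020 while the NameNode listens on 9000, which produces the Connection refused error above.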
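A mismatch like this can be caught before restarting anything by comparing the two files. The sketch below assumes the RPC port in hdfs-site.xml is set through the dfs.namenode.rpc-address property (the article does not name the property) and that a port-less fs.defaultFS falls back to 8020; all function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Optional
from urllib.parse import urlparse

# Hadoop 2 NameNode RPC port used when fs.defaultFS carries no explicit port.
DEFAULT_NAMENODE_RPC_PORT = 8020


def read_properties(xml_text: str) -> Dict[str, str]:
    """Collect <name>/<value> pairs from a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    props: Dict[str, str] = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None and value is not None:
            props[name.strip()] = value.strip()
    return props


def default_fs_port(core_site: str) -> Optional[int]:
    """Port of fs.defaultFS in core-site.xml, e.g. 9000 for hdfs://master:9000."""
    uri = read_properties(core_site).get("fs.defaultFS")
    if uri is None:
        return None
    return urlparse(uri).port or DEFAULT_NAMENODE_RPC_PORT


def rpc_address_port(hdfs_site: str) -> Optional[int]:
    """Port of dfs.namenode.rpc-address (assumed property name) in hdfs-site.xml."""
    addr = read_properties(hdfs_site).get("dfs.namenode.rpc-address")
    if addr is None:
        return None
    _host, sep, port = addr.rpartition(":")
    return int(port) if sep else None


def ports_consistent(core_site: str, hdfs_site: str) -> bool:
    """True when clients (fs.defaultFS) and the NameNode agree on the RPC port."""
    rpc_port = rpc_address_port(hdfs_site)
    # Without an explicit RPC address the NameNode listens on fs.defaultFS itself.
    return rpc_port is None or rpc_port == default_fs_port(core_site)
```

To check a real installation, pass in the file contents, e.g. Path("/root/hadoop-2.10.0/etc/hadoop/core-site.xml").read_text() and the matching hdfs-site.xml.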

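The port settings in core-site.xml and hdfs-site.xml did indeed differ. After setting both to 9000 and restarting, I again tried to force HDFS out of safe mode, but it still would not turn off (Safe mode is ON):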

[root@master sbin]# hdfs dfsadmin -safemode leave

19/12/28 02:02:55 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

19/12/28 02:02:55 DEBUG util.NativeCodeLoader: Loaded the native-hadoop library

Safe mode is ON

[root@master sbin]# hdfs dfsadmin -safemode get

19/12/28 02:03:02 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

19/12/28 02:03:02 DEBUG util.NativeCodeLoader: Loaded the native-hadoop library

Safe mode is ON

Checking disk usage showed no problem there either:

[root@master sbin]# df -hl

Filesystem Size Used Avail Use% Mounted on

/dev/sda3 18G 6.4G 12G 36% /

devtmpfs 898M 0 898M 0% /dev

tmpfs 912M 156K 912M 1% /dev/shm

tmpfs 912M 9.1M 903M 1% /run

tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 297M 152M 146M 51% /boot

tmpfs 183M 20K 183M 1% /run/user/1000

tmpfs 183M 0 183M 0% /run/user/0
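The same check can be scripted by parsing df output and flagging filesystems that are nearly full. This is a hypothetical helper, and the 90% default threshold is an arbitrary assumption rather than an HDFS setting.

```python
from typing import List, NamedTuple


class DfRow(NamedTuple):
    filesystem: str
    use_percent: int
    mounted_on: str


def parse_df(text: str) -> List[DfRow]:
    """Parse `df -h` style output (header line first) into rows.

    Wrapped lines (long device names) are skipped; `df -P` avoids wrapping.
    """
    rows: List[DfRow] = []
    for line in text.strip().splitlines()[1:]:  # skip the header line
        fields = line.split()
        if len(fields) < 6:
            continue  # blank or wrapped line
        use = fields[4].rstrip("%")
        if not use.isdigit():
            continue  # e.g. "-" for pseudo filesystems
        rows.append(DfRow(fields[0], int(use), " ".join(fields[5:])))
    return rows


def over_threshold(rows: List[DfRow], limit: int = 90) -> List[DfRow]:
    """Return the rows whose Use% is at or above `limit`."""
    return [row for row in rows if row.use_percent >= limit]
```

Applied to the output above, nothing crosses 90%; the fullest filesystem is /boot at 51%.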

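Then, when I tried to delete a previously uploaded file from the Browse Directory page, the error message told me to use hdfs dfsadmin -safemode forceExit. I don't really understand why this command was needed to leave safe mode, but at least it finally turned off: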

[root@master sbin]# hdfs dfsadmin -safemode forceExit

19/12/28 02:39:09 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

19/12/28 02:39:09 DEBUG util.NativeCodeLoader: Loaded the native-hadoop library

Safe mode is OFF

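I tried creating the table in the HBase shell again, but it turns out that when God closes a door, He opens a window with bars on it: another error. On to the second problem: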

2) KeeperErrorCode = NoNode for /hbase/master

Creating a table fails with KeeperErrorCode = NoNode for /hbase/master:

hbase(main):003:0> create 'test','cf'

ERROR: KeeperErrorCode = NoNode for /hbase/master/master

For usage try 'help "create"'

Took 8.3137 seconds

Solution:

1. First, a blind attempt: following advice found online, copy Hadoop's configuration files into HBase's conf directory:

cp /root/hadoop-2.10.0/etc/hadoop/core-site.xml /root/hbase-2.2.2/conf/

cp /root/hadoop-2.10.0/etc/hadoop/hdfs-site.xml /root/hbase-2.2.2/conf/
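The copy step can also be scripted so that a missing source file is reported instead of silently skipped. This sketch assumes the install paths used in this article; copy_hadoop_conf is a hypothetical helper, and the symlink option is an alternative I am adding, not something the article used.

```python
import shutil
from pathlib import Path
from typing import Iterable, List

# Install locations used in this article; adjust for your own layout.
HADOOP_CONF = Path("/root/hadoop-2.10.0/etc/hadoop")
HBASE_CONF = Path("/root/hbase-2.2.2/conf")


def copy_hadoop_conf(
    src_dir: Path = HADOOP_CONF,
    dst_dir: Path = HBASE_CONF,
    names: Iterable[str] = ("core-site.xml", "hdfs-site.xml"),
    symlink: bool = False,
) -> List[Path]:
    """Copy (or symlink) Hadoop client config files into HBase's conf directory."""
    placed: List[Path] = []
    for name in names:
        src = Path(src_dir) / name
        if not src.is_file():
            raise FileNotFoundError(f"missing Hadoop config file: {src}")
        dst = Path(dst_dir) / name
        if symlink:
            if dst.exists() or dst.is_symlink():
                dst.unlink()  # replace an existing copy or stale link
            dst.symlink_to(src.resolve())
        else:
            shutil.copy2(src, dst)  # overwrites dst, preserves timestamps
        placed.append(dst)
    return placed
```

Copies go stale if the Hadoop files are edited later (as happened with the port change above), which is why symlinks or pointing HBase's classpath at the Hadoop conf directory are often preferred.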

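2. That had no effect, so I checked the logs and found that the cluster configuration in ZooKeeper's regionserver configuration file was wrong; I changed it to match HBase. The log showed: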

zookeeper.ClientCnxnSocketNIO: Unable to open socket to master/1.1.1.5:2181

2019-12-29 13:04:48,261 WARN [main-SendThread(master:2181)] zookeeper.ClientCnxn: Session 0x2000120e9000003 for server null, unexpected error, closing socket connection and attempting reconnect

java.net.SocketException: Network is unreachable

at sun.nio.ch.Net.connect0(Native Method)

at sun.nio.ch.Net.connect(Net.java:454)

at sun.nio.ch.Net.connect(Net.java:446)

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:648)

at org.apache.zookeeper.ClientCnxnSocketNIO.registerAndConnect(ClientCnxnSocketNIO.java:277)

at org.apache.zookeeper.ClientCnxnSocketNIO.connect(ClientCnxnSocketNIO.java:287)

at org.apache.zookeeper.ClientCnxn$SendThread.startConnect(ClientCnxn.java:1024)

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1060)
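The log shows the ZooKeeper client could not even open a TCP connection to master:2181 ("Network is unreachable"). A quick way to test each quorum member from the HBase host is a plain TCP connect. This sketch assumes the default client port 2181, and can_connect and probe_quorum are hypothetical names; a successful connect only proves the port is reachable, not that ZooKeeper is healthy.

```python
import socket
from typing import Dict


def can_connect(host: str, port: int = 2181, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, DNS failures and "Network is unreachable".
        return False


def probe_quorum(quorum: str, port: int = 2181, timeout: float = 3.0) -> Dict[str, bool]:
    """Probe a comma-separated list of host[:port] entries (IPv6 literals not handled)."""
    results: Dict[str, bool] = {}
    for entry in quorum.split(","):
        entry = entry.strip()
        if not entry:
            continue
        host, sep, entry_port = entry.partition(":")
        results[entry] = can_connect(host, int(entry_port) if sep else port, timeout)
    return results
```

For this cluster, probe_quorum("master") would report whether master:2181 is reachable from the machine running the script.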

3. Creating a table in the HBase shell still failed, and nothing was recorded in the logs:

hbase(main):002:0> create 'test','fc'

ERROR:

For usage try 'help "create"'

Still changing things blindly, I added these parameters to hbase-site.xml:

<property>

<name>hbase.tmp.dir</name>

<value>/root/hbase-2.2.2/tmp</value>

<description>Temporary directory on the local filesystem.</description>

</property>

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>
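When toggling settings like these repeatedly, a small script can set a property idempotently instead of appending duplicate entries. This is a minimal sketch with a hypothetical set_property helper; note that Python's ElementTree drops XML comments and the XML declaration when it rewrites the file.

```python
import xml.etree.ElementTree as ET


def set_property(xml_text: str, name: str, value: str) -> str:
    """Return xml_text with property `name` set to `value`, adding it if missing."""
    root = ET.fromstring(xml_text)
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() == name:
            value_el = prop.find("value")
            if value_el is None:
                value_el = ET.SubElement(prop, "value")
            value_el.text = value  # update the existing entry in place
            break
    else:
        # No existing entry: append a new <property> under <configuration>.
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")
```

Calling it twice with the same name updates the existing entry rather than adding a second one.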

4. That had no effect either, and the HBase web UI now returned HTTP error 500:

java.lang.IllegalArgumentException: org.apache.hbase.thirdparty.com.google.protobuf.InvalidProtocolBufferException: CodedInputStream encountered an embedded string or message which claimed to have negative size.,

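Following the article "HBase 2.1.3 集群 web 报错InvalidProtocolBufferException 解决方法" ("Fixing InvalidProtocolBufferException in the HBase 2.1.3 cluster web UI"):

I connected to ZooKeeper with ./zkCli.sh -server 1.1.1.5 and deleted the /hbase znode with rmr /hbase (on ZooKeeper 3.5 and later, rmr is deprecated in favour of deleteall).

Then I restarted the HBase master with /root/hbase-2.2.2/bin/hbase-daemon.sh start master.

The web UI is back to normal.

Tables can now be created normally in the HBase shell: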

[root@master bin]# ./hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/root/hadoop-2.10.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/root/hbase-2.2.2/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.2.2, re6513a76c91cceda95dad7af246ac81d46fa2589, Sat Oct 19 10:10:12 UTC 2019

Took 0.0490 seconds

hbase(main):001:0> list

TABLE

0 row(s)

Took 1.8437 seconds

=> []

hbase(main):002:0> create 'test','fc'

Created table test

Took 2.6992 seconds

=> Hbase::Table - test

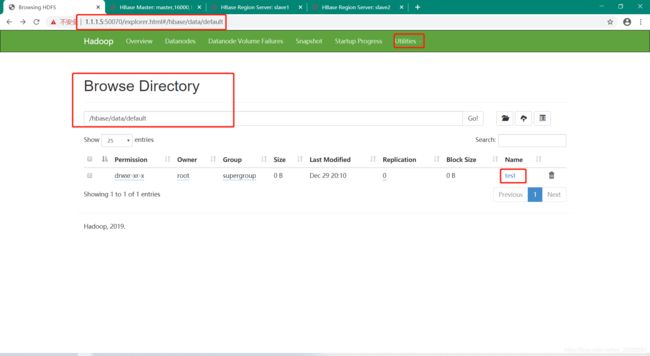

In the NameNode web UI at http://1.1.1.5(master):50070/, under Utilities → Browse the file system, the directory /hbase/data/default shows the traces of the test table created above in HDFS.
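The same directory can be listed without a browser through the WebHDFS REST API. This sketch assumes WebHDFS is enabled (the dfs.webhdfs.enabled property) and that the NameNode HTTP port is 50070 as above; liststatus_url, directory_names and list_tables are hypothetical names.

```python
import json
from typing import List
from urllib.parse import quote
from urllib.request import urlopen


def liststatus_url(host: str, path: str, port: int = 50070) -> str:
    """Build a WebHDFS LISTSTATUS URL for an absolute HDFS path."""
    return f"http://{host}:{port}/webhdfs/v1{quote(path)}?op=LISTSTATUS"


def directory_names(liststatus_json: str) -> List[str]:
    """Extract sub-directory names from a LISTSTATUS JSON response."""
    statuses = json.loads(liststatus_json)["FileStatuses"]["FileStatus"]
    return [s["pathSuffix"] for s in statuses if s.get("type") == "DIRECTORY"]


def list_tables(host: str, namespace: str = "default", port: int = 50070) -> List[str]:
    """List table directories under /hbase/data/<namespace> (needs network access)."""
    url = liststatus_url(host, f"/hbase/data/{namespace}", port)
    with urlopen(url, timeout=10) as resp:
        return directory_names(resp.read().decode("utf-8"))
```

On this cluster, list_tables("1.1.1.5") should include test once the table has been created.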

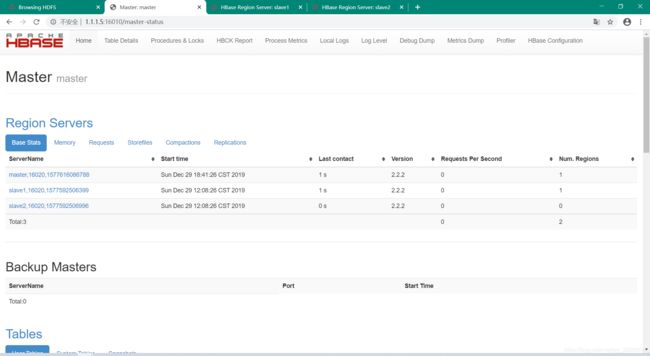

The new table is also visible in the HBase web UI at http://1.1.1.5(master):16010.