【论文翻译】知识图谱论文中英对照翻译----(KnowLife:a versatile approach ... )

【论文题目】KnowLife: a versatile approach for constructing a large knowledge graph for biomedical sciences

【论文题目对应中文】KnowLife:一种用于构建生物医学大知识图的通用方法

【论文链接】https://link.springer.com/content/pdf/10.1186%2Fs12859-015-0549-5.pdf

Abstract(摘要)

Background: Biomedical knowledge bases (KB’s) have become important assets in life sciences. Prior work on KB construction has three major limitations. First, most biomedical KBs are manually built and curated, and cannot keep up with the rate at which new findings are published. Second, for automatic information extraction (IE), the text genre of choice has been scientific publications, neglecting sources like health portals and online communities. Third, most prior work on IE has focused on the molecular level or chemogenomics only, like protein-protein interactions or gene-drug relationships, or has solely addressed highly specific topics such as drug effects.

背景:生物医学知识库(KB’s)已成为生命科学中的重要资产。 关于生物医学知识库构造的先前工作具有三个主要限制。 首先,大多数生物医学知识库都是手动构建和管理的,无法跟上新发现的发布速度。 其次,对于自动信息提取(IE),首选的文本类型是科学出版物,而忽略了诸如健康门户网站和在线社区之类的资源。 第三,关于信息提取的大多数先前工作仅集中在分子水平或化学基因组学上,例如蛋白质-蛋白质相互作用或基因-药物关系,或仅针对高度特定的主题,例如药物作用。

Results: We address these three limitations by a versatile and scalable approach to automatic KB construction. Using a small number of seed facts for distant supervision of pattern-based extraction, we harvest a huge number of facts in an automated manner without requiring any explicit training.

成果:我们通过通用且可扩展的方法来自动构建知识库,从而解决了这三个限制。 使用少量种子事实对基于模式的提取进行远程监控,我们无需任何明确的培训就可以自动方式收集大量事实。

We extend previous techniques for pattern-based IE with confidence statistics, and we combine this recall-oriented stage with logical reasoning for consistency constraint checking to achieve high precision. To our knowledge, this is the first method that uses consistency checking for biomedical relations. Our approach can be easily extended to incorporate additional relations and constraints.

我们使用置信度统计信息扩展了基于模式的信息提取的先前技术,并且将面向召回的阶段与逻辑推理相结合以进行一致性约束检查,以实现高精度。 据我们所知,这是将一致性检查用于生物医学关系的第一种方法。 我们的方法可以轻松扩展,以包含其他关系和约束。

Conclusion: KnowLife is a large knowledge base for health and life sciences, automatically constructed from different Web sources. As a unique feature, KnowLife is harvested from different text genres such as scientific publications, health portals, and online communities. Thus, it has the potential to serve as a one-stop portal for a wide range of relations and use cases. To showcase the breadth and usefulness, we make the KnowLife KB accessible through the health portal (http://knowlife.mpi-inf.mpg.de).

结论:KnowLife是用于健康和生命科学的大型知识库,可以从不同的Web来源自动构建。 作为一项独特功能,KnowLife收集自不同文本类型,例如科学出版物,健康门户网站和在线社区。 因此,它有潜力作为一站式门户,用于各种关系和用例。 为了展示其广度和实用性,我们通过健康门户(http://knowlife.mpi-inf.mpg.de)使KnowLife KB可以访问。

Keywords: Biomedical text mining, Knowledge base, Relation extraction

关键字:生物医学文本挖掘,知识库,关系提取

Introduction(介绍)

Large knowledge bases (KB’s) about entities, their properties, and the relationships between entities, have become an important asset for semantic search, analytics, and smart recommendations over Web contents and other kinds of Big Data [1,2]. Notable projects are DBpedia [3], Yago [4], and the Google Knowledge Graph with its public core Freebase (freebase.com).

有关实体,它们的属性以及实体之间的关系的大型知识库(KB’s)已成为针对Web内容和其他种类的大数据进行语义搜索,分析和智能推荐的重要资产[1,2] 。 值得注意的项目是DBpedia [3],Yago [4]和带有公共核心Freebase(freebase.com)的Google知识图。

In the biomedical domain, KB’s such as the Gene Ontology, the Disease Ontology, the National Drug File - Reference Terminology, and the Foundational Model of Anatomy are prominent examples of the rich knowledge that is digitally available. However, each of these KB’s is highly specialized and covers only a relatively narrow topic within the life sciences, and there is very little interlinkage between the KB’s. Thus, in contrast to the general-domain KB’s that power Web search and analytics, there is no way of obtaining an integrated view on all aspects of biomedical knowledge. The lack of a “one-stop” KB that spans biological, medical, and health knowledge hinders the development of advanced search and analytic applications in this field.

在生物医学领域,知识库(例如基因本体论,疾病本体论,国家药品档案-参考术语和解剖学基础模型)是可数字获取的丰富知识的突出示例。 但是,这些知识库中的每一个都是高度专业化的,并且仅涵盖生命科学中相对狭窄的主题,知识库之间的联系很少。 因此,与PowerWeb搜索和分析的通用域知识库相反,无法获得有关生物医学知识所有方面的综合视图。 缺乏涵盖生物学,医学和健康知识的“一站式”知识库,阻碍了该领域高级搜索和分析应用程序的发展。

In order to build a comprehensive biomedical KB, the following three bottlenecks must be addressed.

为了构建全面的生物医学知识库,必须解决以下三个瓶颈。

Beyond manual curation. Biomedical knowledge is advancing at rates far greater than any single human can absorb. Therefore, relying on manual curation of KB’s is bound to be a bottleneck. To fully leverage all published knowledge, automated information extraction (IE) from input texts is mandatory.

不再是手动管理。 生物医学知识的发展速度远远超过任何一个人类所能吸收的速度。 因此,依靠手动管理知识库必将成为瓶颈。 为了充分利用所有已发布的知识,必须从输入文本中自动提取信息(IE)。

Beyond scientific literature. Besides scientific publications found in PubMed Medline and PubMed Central, there are substantial efforts on patient-oriented health portals such as Mayo Clinic, Medline Plus, UpToDate, Wikipedia’s Health Portal, and there are also popular online discussion forums such as healthboards.com or patient.co.uk. All this constitutes a rich universe of information, but the information is spread across many sources, mostly in textual, unstructured and sometimes noisy form. Prior work on biomedical IE has focused on scientific literature only, and completely disregards the opportunities that lie in tapping into health portals and communities for automated IE.

远远不止科学文献。 除了在PubMed Medline和PubMed Central中发现的科学出版物之外,在面向患者的健康门户网站(例如Mayo Clinic,Medline Plus,UpToDate,Wikipedia的健康门户网站)上也进行了大量工作,并且还存在流行的在线讨论诸如healthboards.com或Patient.co.uk之类的论坛。 所有这些构成了一个丰富的信息世界,但是信息却分散在许多来源中,大部分以文本,非结构化的,有时是嘈杂的形式传播。 以前有关生物医学提取信息的工作仅关注科学文献,而完全忽略了利用卫生门户网站和社区获得自动化提取信息的机会。

Beyond molecular entities. IE from biomedical texts has strongly focused on entities and relations at the molecular level; a typical IE task is to extract protein-protein interactions. There is very little work on comprehensive approaches that link diverse entity types, spanning genes, diseases, symptoms, anatomic parts, drugs, drug effects, etc. In particular, no prior work on KB construction has addressed the aspects of environmental and lifestyle risk factors in the development of diseases and the effects of drugs and therapies.

超越分子实体。 生物医学文献中的信息提取高度关注分子水平的实体和关系; 信息提取的典型任务是提取蛋白质相互作用。 关于将各种实体类型,跨越基因,疾病,症状,解剖部位,药物,药物作用等联系起来的综合方法的工作很少。特别是,以前关于知识库构建的工作都没有涉及环境和生活方式风险因素的方面。 在疾病的发展以及药物和疗法的作用。

Background(背景)

The main body of IE research in biomedical informatics has focused on molecular entities and chemogenomics, like Protein-Protein Interactions (PPI) or gene-drug relations. These efforts have been driven by competitions such as BioNLP Shared Task (BioNLP-ST) [5] and BioCreative [6]. These shared tasks come with pre-annotated corpora as gold standard, such as the GENIA corpus [7], the multi-level event extraction (MLEE) corpus [5], and various BioCreative corpora. Efforts such as the Pharmacogenetics Research Network and Knowledge Base (PharmGKB) [8], which curates and disseminates knowledge about the impact of human genetic variations on drug responses, or the Open PHACTS project [9], a pharmacological information platform for drug discovery, offer knowledge bases with annotated text corpora to facilitate approaches for these use cases.

信息抽取在生物医学信息学领域的研究重点集中在分子实体和化学基因组学上,例如蛋白质-蛋白质相互作用(PPI)或基因-药物关系。 这些努力是由诸如BioNLP共享任务(BioNLP-ST)[5]和BioCreative [6]之类的竞争推动的。 这些共享任务带有预先注释的语料库作为黄金标准,例如GENIA语料库[7],多级事件提取(MLEE)语料库[5]和各种BioCreative语料库。 诸如药理遗传学研究网络和知识库(PharmGKB)[8](用于策划和传播有关人类遗传变异对药物反应影响的知识)或Open PHACTS项目[9](用于药物发现的药理学信息平台) 提供带有注释文本语料库的知识库,以促进这些用例的方法。

Most IE work in this line of research relies on supervised learning, like Support Vector Machines [10-13] or Probabilistic Graphical Models [14,15]. The 2012 i2b2 challenge aimed at extracting temporal relations from clinical narratives [16]. Unsupervised approaches have been pursued by [17-20], to discover associations between genes and diseases based on the co-occurrence of entities as cues for relations. To further improve the quality of discovered associations, crowdsourcing has also been applied [21,22]. Burger et al. [23] use Amazon Mechanical Turk to validate gene-mutation relations which are extracted from PubMed abstracts. Aroyo et al. [24] describe a crowdsourcing approach to generate gold standard annotations for medical relations, taking into account the disagreement between crowd workers.

此研究领域中的大多数信息抽取工作都依赖于监督学习,例如支持向量机[10-13]或概率图形模型[14,15]。 2012年的i2b2挑战旨在从临床叙事中提取时间关系[16]。 [17-20]一直采用无监督的方法,以实体的同时出现作为关系的线索来发现基因与疾病之间的关联。 为了进一步提高发现的协会的质量,众包也已被应用[21,22]。 Burger等。 [23]使用Amazon Mechanical Turk来验证从PubMed摘要中提取的基因突变关系。 Aroyo等。 [24]描述了一种众包方法,该方法考虑了人群工人之间的分歧,为医疗关系生成了黄金标准注释。

Pattern-based approaches exploit text patterns that connect entities. Many of them [25-28] manually define extraction patterns. Kolářik et al. [29] use Hearst patterns [30] to identify terms that describe various properties of drugs. SemRep [31] manually specifies extraction rules obtained from dependency parse trees. Outside the biomedical domain, sentic patterns [32] leverage commonsense and syntactic dependencies to extract sentiments from movie reviews. However, while manually defined patterns yield high precision, they rely on expert guidance and do not scale to large and potentially noisy inputs and a broader scope of relations. Bootstrapping approaches such as [33,34] use a limited number of seeds to learn extraction patterns; these techniques go back to [35,36]. Our method follows this paradigm, but extends prior work with additional statistics to quantify the confidence of patterns and extracted facts.

基于模式的方法利用了连接实体的文本模式。 其中许多[25-28]手动定义提取模式。 Koláˇrik等。 [29]使用赫斯特(Hearst)模式[30]来识别描述药物各种特性的术语。 SemRep [31]手动指定从依赖关系分析树获得的提取规则。 在生物医学领域之外,情感模式[32]利用常识和句法依赖性从电影评论中提取情感。 但是,尽管手动定义的模式可以产生很高的精度,但是它们依赖于专家的指导,无法扩展到较大且可能有噪声的输入以及更广泛的关系范围。 自举方法(例如[33,34])使用有限数量的种子来学习提取模式; 这些技术可以追溯到[35,36]。 我们的方法遵循此范式,但是使用其他统计信息扩展了先前的工作,以量化模式和提取的事实的置信度。

A small number of projects like Sofie/Prospera [37,38] and NELL [39] have combined pattern-based extraction with logical consistency rules that constrain the space of fact candidates. Nebot et al. [40] harness the IE methods of [38] for populating disease-centric relations. This approach uses logical consistency reasoning for high precision, but the small scale of this work leads to a very restricted KB. Movshovitz-Attias et al. [41] used NELL to learn instances of biological classes, but did not extract binary relations and did not make use of constraints either. The other works on constrained extraction tackle non-biological relations only (e.g., birthplaces of people or headquarters of companies). Our method builds on Sofie/Prospera, but additionally develops customized constraints for the biomedical relations targeted here.

少数项目,例如Sofie / Prospera [37,38]和NELL [39],已将基于模式的提取与逻辑一致性规则相结合,从而限制了事实候选者的空间。 Nebot等。 [40]利用[38]的信息抽取方法填充以疾病为中心的关系。 这种方法使用逻辑一致性推理以实现高精度,但是这项工作的规模小,导致知识库非常受限制。 Movshovitz-Attias等。 [41]使用NELL来学习生物类的实例,但没有提取二进制关系,也没有利用约束。 关于受限提取的其他工作仅处理非生物关系(例如,人的出生地或公司总部)。 我们的方法建立在Sofie / Prospera的基础上,但另外针对此处针对的生物医学关系开发了自定义约束。

Most prior work in biomedical Named Entity Recognition (NER) specializes in recognizing specific types of entities such as proteins and genes, chemicals, diseases, and organisms. MetaMap [42] is the most notable tool capable of recognizing a wide range of entities. As for biomedical Named Entity Disambiguation (NED), there is relatively little prior work. MetaMap offers limited NED functionality, while others focus on disambiguating between genes [43] or small sets of word senses [44].

生物医学命名实体识别(NER)的大多数先前工作专门致力于识别特定类型的实体,例如蛋白质和基因,化学物质,疾病和生物。 MetaMap [42]是最著名的工具,能够识别各种各样的实体。 至于生物医学命名实体歧义消除(NED),先前的工作相对较少。 MetaMap提供的NED功能有限,而其他一些则专注于在基因[43]或少量词义[44]之间进行歧义消除。

Most prior IE work processes only abstracts of PubMed articles; few projects have considered full-length articles from PubMed Central, let alone Web portals and online communities. Vydiswaran et al. [45] addressed the issue of assessing the credibility of medical claims about diseases and their treatments in health portals. Mukherjee et al. [46] tapped discussion forums to assess statements about side effects of drugs. White et al. [47] demonstrated how to derive insight on drug effects from query logs of search engines. Building a comprehensive KB from such raw assets has been beyond the scope of these prior works.

大多数以前的信息抽取工作只处理Pubmed文章的摘要; 很少有项目考虑过Pubmed Central的全文,更不用说Web门户和在线社区了。 Vydiswaran等。 [45]解决了评估健康门户网站中有关疾病及其治疗的医疗要求的可信度的问题。 Mukherjee等。 [46]利用讨论论坛来评估有关药物副作用的陈述。 怀特等。 [47]展示了如何从搜索引擎的查询日志中获得关于药物作用的见解。 从此类原始资产构建全面的知识库已经超出了这些先前的工作范围。

Contributions(贡献)

We present KnowLife, a large KB that captures a wide variety of biomedical knowledge, automatically extracted from different genres of input sources. KnowLife’s novel approach to KB construction overcomes the following three limitations of prior work.

我们介绍了KnowLife,这是一个大知识库,可捕获各种生物医学知识,并自动从不同类型的输入源中提取。 KnowLife新颖的知识库构建方法克服了先前工作的以下三个限制。

Beyond manual curation. Using distant supervision in the form of seed facts from existing expert-level knowledge collections, the KnowLife processing pipeline is able to automatically learn textual patterns and harvest a large number of relational facts from such patterns. In contrast to prior work on IE for biomedical data which relies on extraction patterns only, our method achieves high precision by specifying and checking logical consistency constraints that fact candidates have to satisfy. These constraints are customized for the relations of interest in KnowLife, and include constraints that couple different relations. The consistency constraints are available as supplementary material (see Additional file 1). KnowLife is easily extensible, since new relations can be added with little manual effort and without requiring explicit training; only a small number of seed facts for each new relation is needed.

除了手动管理之外。 通过使用来自现有专家级知识收藏的种子事实的形式进行远程监督,KnowLife处理管道能够自动学习文本模式并从此类模式中收集大量关系事实。 与以前仅基于提取模式的用于信息抽取的生物医学数据工作相反,我们的方法通过指定和检查事实候选者必须满足的逻辑一致性约束来实现高精度。 这些约束是针对KnowLife中感兴趣的关系定制的,并且包括耦合不同关系的约束。 一致性约束可用作补充材料(请参阅附加文件1)。 KnowLife易于扩展,因为无需手动培训即可添加新关系,而无需进行明确的培训。 每个新关系只需要少量的种子事实。

Beyond scientific literature. KnowLife copes with input text at large scale – considering not only knowledge from scientific publications, but also tapping into previously neglected textual sources like Web portals on health issues and online communities with discussion boards. We present an extensive evaluation of 22,000 facts on how these different genres of input texts affect the resulting precision and recall of the KB. We also present an error analysis that provides further insight on the quality and contribution of different text genres.

远远不止科学文献。 KnowLife可以应对输入文本的大量内容–不仅考虑科学出版物的知识,还考虑利用以前被忽视的文本资源,例如有关健康问题的Web门户和带有讨论板的在线社区。 我们对22,000个事实进行了广泛的评估,这些事实涉及这些不同体裁的输入文本如何影响生成的知识库的准确性和查全率。 我们还提供了错误分析,可提供有关不同文本类型的质量和贡献的进一步见解。

Beyond molecular entities. The entities and facts in KnowLife go way beyond the traditionally covered level of proteins and genes. Besides genetic factors of diseases, the KB also captures diseases, therapies, drugs, and risk factors like nutritional habits, life-style properties, and side effects of treatments.

超越分子实体。 KnowLife中的实体和事实远远超出了蛋白质和基因的传统涵盖范围。 除了疾病的遗传因素外,知识库还捕获疾病,疗法,药物和诸如营养习惯,生活方式特征和治疗副作用等危险因素。

In summary, the novelty of KnowLife is its versatile, largely automated, and scalable approach for the comprehensive construction of a KB – covering a spectrum of different text genres as input and distilling a wide variety of facts from different biomedical areas as output. Coupled with an entity recognition module that covers the entire range of biomedical entities, the resulting KB features a much wider spectrum of knowledge and use-cases than previously built, highly specialized KB’s. In terms of methodology, our extraction pipeline builds on existing techniques but extends them, and is specifically customized to the life-science domain. Most notably, unlike prior work on biomedical IE, KnowLife employs logical reasoning for checking consistency constraints, tailored to the different relations that connect diseases, symptoms, drugs, genes, risk factors, etc. This constraint checking eliminates many false positives that are produced by methods that solely rely on pattern-based extraction.

总而言之,KnowLife的新颖之处在于它用于知识库的全面构建的通用,高度自动化和可扩展的方法-涵盖了多种不同文本类型作为输入,并提取了来自不同生物医学领域的多种事实作为输出。结合涵盖整个生物医学实体范围的实体识别模块,所生成的知识库具有比以前构建的高度专业化知识库更大的知识和用例范围。就方法论而言,我们的提取流程建立在现有技术的基础上,但对它们进行了扩展,并且专门针对生命科学领域进行了定制。最值得注意的是,与先前有关生物医学信息抽取的工作不同,KnowLife采用逻辑推理来检查一致性约束,该约束针对与疾病,症状,药物,基因,危险因素等相关的不同关系而量身定制。这种约束检查消除了由错误产生的许多误报,仅依赖基于模式的提取的方法。

In its best configuration, the KnowLife KB contains a total of 542,689 facts for 13 different relations, with an average precision of 93% (i.e., validity of the acquired facts) as determined by extensive sampling with manual assessment. The precision for the different relations ranges from 71% (createsRisk: ecofactor x disease) to 97% (sideEffect: (symptom ∪ disease) x drug). All facts in KnowLife carry provenance information, so that one can explore the evidence for a fact and filter by source. We developed a web portal that showcases use-cases from speed-reading to semantic search along with richly annotated literature, the details of which are described in the demo paper [48].

在最佳配置下,KnowLife 知识库包含针对13种不同关系的总计542,689个事实,通过手动评估进行的大量采样确定,平均精度为93%(即所获取事实的有效性)。 不同关系的精度范围从71%(创建风险:生态因子x疾病)到97%(副作用:(症状∪疾病)x药物)。 KnowLife中的所有事实均带有来源信息,因此人们可以探索事实的证据并按来源进行过滤。 我们开发了一个门户网站,展示了从快速阅读到语义搜索的用例,以及内容丰富的带有注释的文献,有关详细信息,请参见演示文件[48]。

Methods(研究方法)

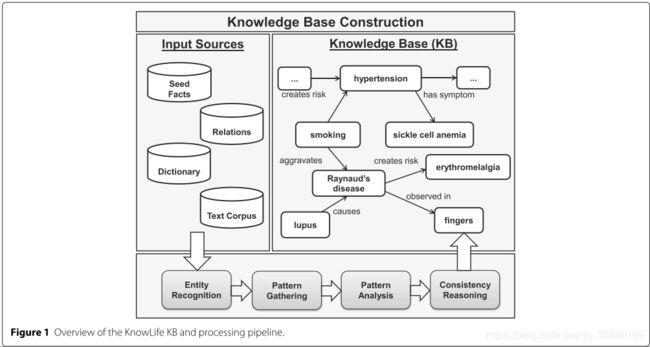

Our method for harvesting relational facts from text sources is designed as a pipeline of processing stages; Figure 1 gives a pictorial overview. A fact is a triple consisting of two entities e1, e2 and a relation R between them; we denote a fact by R(e1, e2). In the following, we describe the input data and each stage of the pipeline.

我们从文本源中收集相关事实的方法被设计为处理阶段的管道; 图1给出了图形概述。 事实是由两个实体e1,e2和它们之间的关系R组成的三元组; 我们用R(e1,e2)表示一个事实。在下面,我们描述输入数据和管道的每个阶段。

Input sources(输入源)

Dictionary. We use UMLS (Unified Medical Language System) as the dictionary of biomedical entities. UMLS is a metathesaurus, the largest collection of biomedical dictionaries containing 2.9 million entities and 11.4 million entity names and synonyms. Each entity has a semantic type assigned by experts. For instance, the entities IL4R and asthma are of semantic types Gene or Genome and Disease or Syndrome, respectively. The UMLS dictionary enables KnowLife to detect entities in text, going beyond genes and proteins and covering entities about anatomy, physiology, and therapy.

词典 我们使用UMLS(统一医学语言系统)作为生物医学实体的词典。 UMLS是一个词库,是生物医学词典的最大集合,包含290万个实体以及1,140万个实体名称和同义词。 每个实体都有专家分配的语义类型。 例如,实体IL4R和哮喘分别具有语义类型“基因”或“基因组”和“疾病或综合症”。 UMLS词典使KnowLife能够检测文本中的实体,这些实体不仅涉及基因和蛋白质,还涉及有关解剖结构,生理学和疗法的实体。

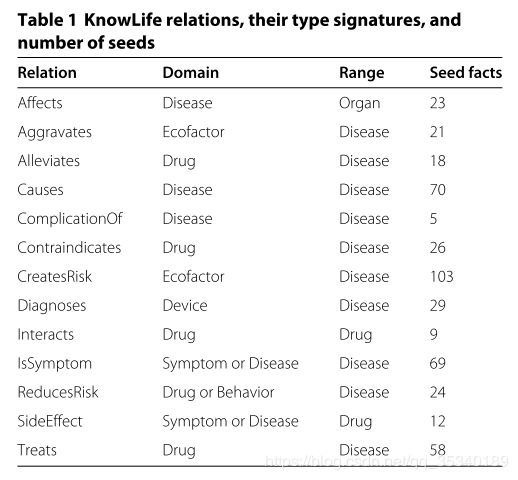

Relations. KnowLife currently supports 13 binary relations between entities, each with a type signature constraining its domain and range (i.e., its left and right argument types). Table 1 shows that, for instance, the relation affects only holds between diseases and organs, but not between diseases and drugs. Each type signature consists of multiple fine-grained semantic types defined by UMLS; specifics for all relations are provided as supplementary material (see Additional file 2).

关系 KnowLife当前支持实体之间的13种二元关系,每个实体的类型签名都限制了其域和范围(即,其左右参数类型)。 表1显示,例如,该关系仅影响疾病和器官之间的关系,而不影响疾病和药物之间的关系。 每个类型签名由UMLS定义的多个细粒度语义类型组成; 所有关系的详细信息均作为补充材料提供(请参见附加文件2)。

Seed facts. A seed fact R(e1, e2) for relation R is a triple presumed to be true based on expert statements. We collected 467 seed facts (see Table 1) from the medical online portal uptodate.com, a highly regarded clinical resource written by physician authors. These seed facts are further cross-checked in other sources to assert their veracity. Example seed facts include isSymptom(Chest Pain,Myocardial Infarction) and createsRisk(Obesity, Diabetes).

种子事实。 根据专家的陈述,关系R的种子事实R(e1,e2)是一个三元组,被认为是正确的。 我们从医学在线门户网站uptodate.com上收集了467个种子事实(请参阅表1),这是由医生作者撰写的备受赞誉的临床资源。 这些种子事实在其他来源中进一步进行了交叉检验,以断言其准确性。 示例种子事实包括isSymptom(胸痛,心肌梗塞)和createdRisk(肥胖症,糖尿病)。

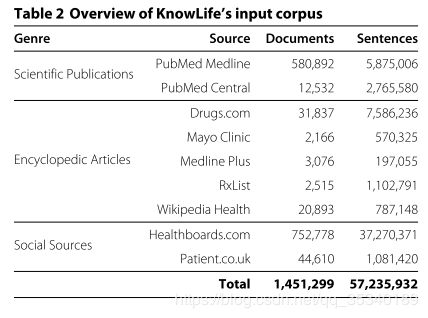

Text Corpus. A key asset of this work is that we tap into different genres of text; Table 2 gives an overview. PubMed documents are scientific texts with specialized jargon; they have been the de-facto standard corpus for biomedical text mining. We took all PubMed documents published in 2011 that are indexed with disease-, drug-, and therapy-related MeSH (Medical Subject Heading) terms. We further prune out documents from inapplicable journals such as those not in the English language, or those about medical ethics. Web portals and encyclopedic articles are collaboratively or professionally edited, providing credible information in layman-oriented language. Examples include uptodate.com, mayoclinic.com, and the relevant parts of en.wikipedia.org. In contrast, discussion forums of online communities, where patients and physicians engage in discussions (often anonymously), have a colloquial language style, sometimes even slang. We tap into all three genres of text to demonstrate not only the applicability of our system, but also the amount of information buried in all of them. We use the Stanford CoreNLP software to preprocess all texts, such that they are tokenized, split into sentences, tagged with parts-of-speech, lemmatized, and parsed into syntactic dependency graphs.

**文本语料库。**这项工作的关键资产是我们利用了不同类型的文本。表2给出了概述。 PubMed文档是带有专业术语的科学文本。它们已经成为生物医学文本挖掘的事实上的标准语料库。我们采用了2011年发布的所有PubMed文档,这些文档均以与疾病,药物和治疗相关的MeSH(医学主题词)术语进行索引。我们还会从不适用的期刊(例如非英语的期刊或有关医学伦理的期刊)中删除文档。 Web门户和百科全书均经过协作或专业编辑,以面向外行的语言提供可靠的信息。示例包括uptodate.com,mayoclinic.com以及en.wikipedia.org的相关部分。相比之下,在线社区的讨论论坛(患者和医生在其中进行讨论(通常是匿名地进行))具有口语化的语言风格,有时甚至是语。我们利用所有三种类型的文本来证明我们的系统不仅适用于系统,而且还演示了隐藏在其中的所有信息。我们使用Stanford CoreNLP软件对所有文本进行预处理,以便对它们进行标记处理,拆分为句子,用词性标记,进行词形化并解析为语法相关性图。

Entity recognition (实体识别)

The first stage in the KnowLife pipeline identifies sentences that may express a relational fact. We apply entity recognition to every sentence: a sentence with one or more entities is relevant for further processing. To efficiently handle the large dictionary and process large input corpora, we employ our own method [49], using string-similarity matching against the names in the UMLS dictionary. This method is two orders of magnitude faster than MetaMap [42], the most popular biomedical entity recognition tool, while maintaining comparable accuracy. Specifically, we use locality sensitive hashing (LSH) [50] with min-wise independent permutations (MinHash) [51] to quickly find matching candidates. LSH probabilistically reduces the high-dimensional space of all character-level 3-grams, while MinHash quickly estimates the similarity between two sets of 3-grams. A successful match also provides us with the entity’s semantic type. If multiple entities are matched to the same string in the input text, we currently do not apply explicit NED to determine the correct entity. Instead, using the semantic type hierarchy of UMLS, we select the most specifically typed entities. Later in the consistency reasoning stage, we leverage the type signatures to further prune out mismatching entities. At the end of this processing stage, we have marked-up sentences such as

KnowLife管道中的第一阶段识别可以表达关系事实的句子。我们将实体识别应用于每个句子:具有一个或多个实体的句子与进一步处理有关。为了有效地处理大型词典并处理大型输入语料库,我们采用了自己的方法[49],对UMLS词典中的名称使用字符串相似性匹配。这种方法比最流行的生物医学实体识别工具MetaMap [42]快两个数量级,同时保持了相当的准确性。具体来说,我们使用局部敏感哈希(LSH)[50]和最小独立排列(MinHash)[51]快速找到匹配的候选对象。 LSH可能会减少所有字符级3克的高维空间,而MinHash可以快速估计两组3克之间的相似度。成功的匹配还为我们提供了实体的语义类型。如果多个实体与输入文本中的相同字符串匹配,我们当前不应用显式NED来确定正确的实体。相反,使用UMLS的语义类型层次结构,我们选择类型最明确的实体。在一致性推理阶段的后期,我们利用类型签名进一步修剪不匹配的实体。在此处理阶段的最后,我们添加了标记语句,例如

- Anemia is a common symptom of sarcoidosis.

- 贫血是结节病的常见症状。

- Eventually, a heart attack leads to arrhythmias.

- 最终,心脏病发作会导致心律不齐

- Ironically, a myocardial infarction can also lead to pericarditis.

- 具有讽刺意味的是,心肌梗塞也会导致心包炎。

where myocardial infarction and heart attack are synonyms representing the same canonical entity.

其中,心肌梗塞和心脏病发作是代表相同规范实体的同义词。
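The string-similarity matching described above can be illustrated with a minimal sketch. It is not the authors' implementation [49]: the padding scheme, the use of seeded MD5 hashes in place of random permutations, and the names `trigrams`, `minhash_signature`, and `lsh_candidates` are all assumptions made for illustration.

```python
import hashlib
from collections import defaultdict


def trigrams(name: str) -> set[str]:
    """Character-level 3-grams of a lower-cased, space-padded string."""
    padded = f"  {name.lower()} "
    return {padded[i:i + 3] for i in range(len(padded) - 2)}


def minhash_signature(grams: set[str], num_hashes: int = 64) -> list[int]:
    """Keep the minimum hash value per seeded hash function.

    Seeded MD5 hashes stand in for min-wise independent permutations.
    """
    return [
        min(int(hashlib.md5(f"{seed}:{g}".encode()).hexdigest(), 16) for g in grams)
        for seed in range(num_hashes)
    ]


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """Fraction of signature positions that agree estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def lsh_candidates(query: str, dictionary: list[str], bands: int = 16) -> set[str]:
    """Return dictionary names sharing at least one signature band with the query."""
    rows = 64 // bands
    buckets = defaultdict(set)
    for name in dictionary:
        sig = minhash_signature(trigrams(name))
        for b in range(bands):
            buckets[(b, tuple(sig[b * rows:(b + 1) * rows]))].add(name)
    qsig = minhash_signature(trigrams(query))
    found = set()
    for b in range(bands):
        found |= buckets.get((b, tuple(qsig[b * rows:(b + 1) * rows])), set())
    return found


names = ["Myocardial Infarction", "Asthma", "Sarcoidosis"]
print(lsh_candidates("myocardial infarction", names))
```

Only names that land in a shared bucket need an exact similarity computation, which is what makes the lookup fast for a dictionary with millions of names. More bands with fewer rows raise recall at the cost of more candidates.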

Pattern gathering (模型收集)

Our method extracts textual patterns that connect two recognized entities, either by the syntactic structure of a sentence or by a path in the DOM (Document Object Model) tree of a Web page. We extract two types of patterns:

我们的方法通过句子的句法结构或网页DOM(文档对象模型)树中的路径来提取连接两个已识别实体的文本模式。 我们提取两种类型的模式:

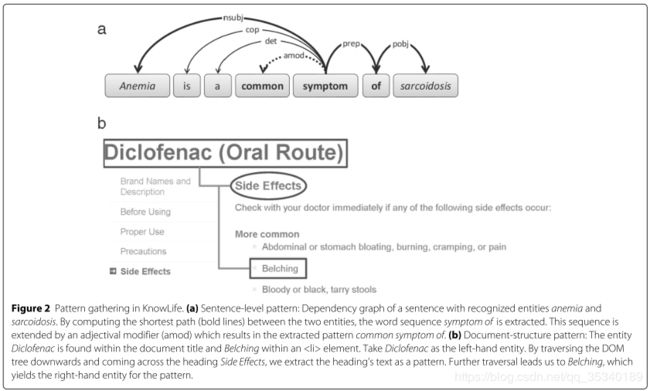

Sentence-level Patterns: For each pair of entities in a sentence, we extract a sequence of text tokens connecting the entities in the syntactic structure of the sentence. Specifically, this is the shortest path between the entities in the dependency graph obtained from parsing the sentence. However, this path does not necessarily contain the full information to deduce a relation; for instance, negations are not captured or essential adjectives are left out. Therefore, for every captured word the following grammatical dependencies are added: negation, adjectival modifiers, and adverbial modifiers. The resulting word sequence constitutes a sentence-level pattern. An example is shown in Figure 2(a).

句级模式: 对于句子中的每对实体,我们提取一系列文本标记,将这些实体连接到句子的句法结构中。 具体来说,这是通过分析句子而获得的依赖关系图中实体之间的最短路径。 但是,此路径不一定包含推断关系的完整信息; 例如,否定否定语没有被捕获或基本形容词被忽略了。 因此,对于每个捕获的单词,添加以下语法依赖性:否定,形容词修饰语和副词修饰语。 所得的单词序列构成了句子级模式。 图2(a)中显示了一个示例。
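A small sketch of the sentence-level pattern extraction follows. The dependency edges are written by hand for the example sentence "Anemia is a common symptom of sarcoidosis." and are an assumption, not actual Stanford CoreNLP output; the function names are hypothetical, and the sketch treats each word as a unique node, which only works when no word repeats in the sentence.

```python
from collections import deque

# Hand-written (head, dependent, label) edges; illustrative, not parser output.
TOKENS = ["Anemia", "is", "a", "common", "symptom", "of", "sarcoidosis"]
EDGES = [
    ("symptom", "Anemia", "nsubj"),
    ("symptom", "is", "cop"),
    ("symptom", "a", "det"),
    ("symptom", "common", "amod"),
    ("symptom", "sarcoidosis", "prep_of"),
]
# Negation, adjectival modifiers, and adverbial modifiers are added back.
MODIFIER_LABELS = {"neg", "amod", "advmod"}


def shortest_path(edges, source, target):
    """Breadth-first search over the undirected dependency graph."""
    graph = {}
    for head, dep, _ in edges:
        graph.setdefault(head, []).append(dep)
        graph.setdefault(dep, []).append(head)
    parents = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return []


def sentence_pattern(edges, tokens, e1, e2):
    """Words on the path between the entities plus their selected modifiers."""
    inner = set(shortest_path(edges, e1, e2)[1:-1])
    keep = set(inner)
    for head, dep, label in edges:
        if head in inner and label in MODIFIER_LABELS:
            keep.add(dep)
    return [t for t in tokens if t in keep]  # restore sentence order


print(sentence_pattern(EDGES, TOKENS, "Anemia", "sarcoidosis"))
```

Here the shortest path alone yields only "symptom"; adding the adjectival modifier restores "common", which is the kind of information the plain path would drop.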

Document-structure Patterns: In Web portals like Mayo Clinic or Wikipedia, it is common that authors state medical facts by using specific document structures, like titles, sections, and listings. Such structures are encoded in the DOM tree of the underlying HTML markup. First, we detect if the document title, that is, the text within the `<h1>` tag in terms of HTML markup, is a single entity. Next, we detect if an entity appears in an HTML listing, that is, within an `<li>` tag. Starting from the `<h1>` tag, our method traverses the DOM tree downwards and determines all intermediate headings, i.e. `<h2>` to `<h6>` tags, until we reach the aforementioned `<li>` tag.

文档结构模式: 在诸如Mayo Clinic或Wikipedia之类的Web门户中,作者通常使用特定的文档结构(例如标题,章节和清单)来陈述医学事实。 这样的结构被编码在基础HTML标记的DOM树中。 首先,我们检测文档标题(即,根据HTML标记在`<h1>`标记内的文本)是否为单个实体。 接下来,我们检测一个实体是否出现在HTML列表中,即`<li>`标记内。 从`<h1>`标记开始,我们的方法向下遍历DOM树并确定所有中间标题,即`<h2>`到`<h6>`标记,直到到达上述`<li>`标记。
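The document-structure idea can be sketched with Python's standard `html.parser`. This sketch assumes that the page title sits in an `<h1>` element, section headings in `<h2>` to `<h6>`, and listed entities in `<li>` elements. Since headings are usually siblings of the lists rather than their ancestors, it tracks the current heading stack in document order instead of walking the DOM tree. The class name and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class HeadingPathParser(HTMLParser):
    """Collect (title, intermediate headings, list item) triples from a page."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.heading_stack = []  # (level, text) of the headings currently in scope
        self.current = None      # tracked tag whose text is being read
        self.buffer = []
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS or tag == "li":
            self.current = tag
            self.buffer = []

    def handle_data(self, data):
        if self.current:
            self.buffer.append(data)

    def handle_endtag(self, tag):
        if tag != self.current:
            return
        text = "".join(self.buffer).strip()
        if tag == "li":
            # Only emit a candidate when a title heading is in scope.
            if self.heading_stack and self.heading_stack[0][0] == 1:
                title = self.heading_stack[0][1]
                path = [t for _, t in self.heading_stack[1:]]
                self.results.append((title, path, text))
        else:
            level = int(tag[1])
            # A new heading closes all headings of the same or deeper level.
            while self.heading_stack and self.heading_stack[-1][0] >= level:
                self.heading_stack.pop()
            self.heading_stack.append((level, text))
        self.current = None


# Illustrative page, not taken from any real portal.
PAGE = (
    "<h1>Sarcoidosis</h1><h2>Symptoms</h2><ul><li>Anemia</li><li>Fatigue</li></ul>"
    "<h2>Treatment</h2><ul><li>Prednisone</li></ul>"
)
parser = HeadingPathParser()
parser.feed(PAGE)
print(parser.results)
```

Each triple pairs the title entity with a listed entity, and the intermediate headings (for example "Symptoms") play the role of the connecting pattern.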

Pattern analysis (模型分析)

The goal of the pattern analysis is to identify the most useful seed patterns out of all the pattern candidates gathered thus far. A seed pattern should generalize the over-specific phrases encountered in the input texts, by containing only the crucial words that express a relation and masking out (by a wildcard or part-of-speech tag) inessential words. This way we arrive at high-confidence patterns.

模型分析的目的是从到目前为止收集的所有模型候选中识别出最有用的种子模型。 种子模型应通过仅包含表示关系的关键词并掩盖(通过通配符或词性标记)不重要的词来概括输入文本中遇到的过分特定的短语。 这样,我们得出了高置信度的模型。

We harness the techniques developed in the Prospera tool [38]. First, an itemset mining algorithm is applied to find frequent sub-sequences in the patterns. The sub-sequences are weighed by statistical analysis, in terms of confidence and support. We use the seed facts and their co-occurrences with certain patterns as a basis to compute confidence, such that the confidence for a pattern q in a set of sentences S is defined as

$$
\mathrm{confidence}(q)=\frac{\left|\left\{ s \in S \mid \exists (e_{1},e_{2})\in SX(R_{i}):\ q, e_{1}, e_{2} \text{ occur in } s \right\}\right|}{\left|\left\{ s \in S \mid \exists (e_{1},e_{2})\in SX(R_{i})\cup CX(R_{i}):\ q, e_{1}, e_{2} \text{ occur in } s \right\}\right|}
$$

where SX(Ri) is the set of all entity tuples (e1, e2) appearing in any seed fact with relation Ri and CX(Ri) is the set of all entity tuples (e1, e2) appearing in any seed fact without relation Ri. The rationale is that the more strongly a pattern correlates with the seed-fact entities of a particular relation, the more confident we are that the pattern expresses the relation. The patterns with confidence greater than a threshold (set to 0.3 in our experiments) are selected as seed patterns.

我们利用Prospera工具[38]中开发的技术。 首先,应用项目集挖掘算法来查找模式中的频繁子序列。 通过置信度和支持度的统计分析对子序列进行加权。 我们使用种子事实及其与某些模式的共现作为计算置信度的基础,从而将句子集S中模式q的置信度定义为

$$
\mathrm{confidence}(q)=\frac{\left|\left\{ s \in S \mid \exists (e_{1},e_{2})\in SX(R_{i}):\ q, e_{1}, e_{2} \text{ occur in } s \right\}\right|}{\left|\left\{ s \in S \mid \exists (e_{1},e_{2})\in SX(R_{i})\cup CX(R_{i}):\ q, e_{1}, e_{2} \text{ occur in } s \right\}\right|}
$$

其中SX(Ri)是出现在具有关系Ri的任何种子事实中的所有实体元组(e1,e2)的集合,而CX(Ri)是出现在具有以下关系的任何种子事实中的所有实体元组(e1,e2)的集合 -出关系里。 理由是,模式与特定关系的种子事实实体之间的关联越强,我们就越有信心该模式表达该关系。 选择置信度大于阈值(在我们的实验中设置为0.3)的模式作为种子模式。
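The confidence definition can be made concrete with a short sketch. Each sentence is reduced to a hypothetical `(pattern, e1, e2)` occurrence; the seed tuples, counter-seed tuples, and patterns below are illustrative values, not data from the paper.

```python
# SX(R_i): entity pairs from seed facts of the target relation (here isSymptom).
SEEDS = {("chest pain", "myocardial infarction"), ("anemia", "sarcoidosis")}
# CX(R_i): entity pairs from seed facts of other relations.
COUNTER_SEEDS = {("obesity", "diabetes"), ("smoking", "lung cancer")}

# One entry per sentence: (pattern, e1, e2). Illustrative only.
OCCURRENCES = [
    ("symptom of", "chest pain", "myocardial infarction"),
    ("symptom of", "anemia", "sarcoidosis"),
    ("symptom of", "obesity", "diabetes"),
    ("symptom of", "fever", "influenza"),  # pair in no seed fact: ignored
    ("associated with", "chest pain", "myocardial infarction"),
    ("associated with", "obesity", "diabetes"),
    ("associated with", "obesity", "diabetes"),
    ("associated with", "smoking", "lung cancer"),
]


def pattern_confidence(pattern, occurrences, seeds, counter_seeds):
    """Share of seed-related sentences with this pattern that support the relation."""
    positive = sum(
        1 for p, e1, e2 in occurrences if p == pattern and (e1, e2) in seeds
    )
    total = sum(
        1
        for p, e1, e2 in occurrences
        if p == pattern and ((e1, e2) in seeds or (e1, e2) in counter_seeds)
    )
    return positive / total if total else 0.0


THRESHOLD = 0.3  # value stated in the text
patterns = sorted({p for p, _, _ in OCCURRENCES})
seed_patterns = [
    p for p in patterns
    if pattern_confidence(p, OCCURRENCES, SEEDS, COUNTER_SEEDS) > THRESHOLD
]
print(seed_patterns)
```

In this toy data "symptom of" reaches 2/3 and is kept, while "associated with" reaches 1/4 and is dropped because it co-occurs mostly with pairs from other relations.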

Each non-seed pattern p is then matched against the seed pattern set Q using Jaccard similarity to compute a weight w associating p with a relation.

$$
w=\max\left\{ \mathrm{Jaccard}(p,q)\times \mathrm{confidence}(q) \mid q \in Q \right\}
$$

然后,使用Jaccard相似度将每个非种子模式p与种子模式集Q相匹配,以计算将p与某个关系相关联的权重w。

$$
w=\max\left\{ \mathrm{Jaccard}(p,q)\times \mathrm{confidence}(q) \mid q \in Q \right\}
$$
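As a worked example of the weight formula, the sketch below treats each pattern as a set of tokens, which is an assumption about the representation. The seed patterns, their confidences, and the relation names attached to them are made up for illustration.

```python
def jaccard(a: frozenset, b: frozenset) -> float:
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Seed pattern -> (relation, confidence); illustrative values.
SEED_PATTERNS = {
    frozenset({"common", "symptom", "of"}): ("isSymptom", 0.9),
    frozenset({"lead", "to"}): ("causes", 0.6),
}


def pattern_weight(p: frozenset, seed_patterns=SEED_PATTERNS):
    """Return (relation, w) for the seed pattern maximizing Jaccard x confidence."""
    best_relation, best_w = None, 0.0
    for q, (relation, confidence) in seed_patterns.items():
        w = jaccard(p, q) * confidence
        if w > best_w:
            best_relation, best_w = relation, w
    return best_relation, best_w


relation, w = pattern_weight(frozenset({"symptom", "of"}))
print(relation, round(w, 3))
```

The non-seed pattern {symptom, of} shares two of three tokens with the first seed pattern, so its weight is 2/3 × 0.9 = 0.6; it shares nothing with the second seed pattern.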

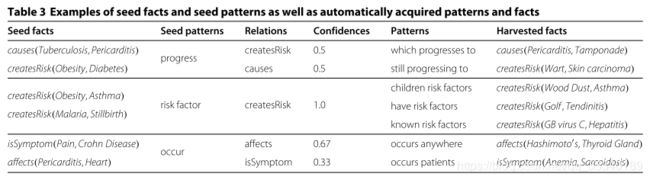

The pattern occurrences together with their weights and relations serve as fact candidates. Table 3 shows sample seed patterns computed from seed facts. The table also gives examples for automatically acquired patterns and facts.

模式出现及其权重和关系可作为事实候选。 表3显示了根据种子事实计算出的样本种子模式。 该表还提供了自动获取的模式和事实的示例。

Consistency reasoning(一致性推理)

The pattern analysis stage provides us with a large set of fact candidates and their supporting patterns. However, these contain many false positives. To prune these out and improve precision, the last stage of KnowLife applies logical consistency constraints to the fact candidates and accepts only a consistent subset of them.

模式分析阶段为我们提供了大量事实候选者及其支持模式。 但是,这些包含许多误报。 为了减少这些错误并提高准确性,KnowLife的最后阶段将逻辑一致性约束应用于事实候选者,并仅接受它们的一致子集。

We leverage two kinds of manually defined semantic constraints: i) the type signatures of relations (see Table 1) for type checking of fact candidates, and ii) mutual exclusion constraints between certain pairs of relations. For example, if a drug has a certain symptom as a side effect, it cannot treat this symptom at the same time. These rules allow us to handle conflicting candidate facts. The reasoning uses probabilistic weights derived from the statistics of the candidate gathering phase.

我们利用两种手动定义的语义约束:i)关系的类型签名(请参阅表1),用于事实候选的类型检查,以及ii)某些关系对之间的互斥约束。 例如,如果药物有某种症状作为副作用,则无法同时治疗该症状。 这些规则使我们能够处理矛盾的候选事实。 推理使用从候选收集阶段的统计数据得出的概率权重。
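The two kinds of constraints can be sketched as simple predicates over fact candidates. The `sideEffect` signature follows the one quoted in this article; the relation name `treats`, its signature, and the entity names are hypothetical placeholders rather than entries from the paper's tables.

```python
from typing import NamedTuple


class Fact(NamedTuple):
    relation: str
    left: str
    right: str


# relation -> (allowed left argument types, allowed right argument types)
TYPE_SIGNATURES = {
    "sideEffect": ({"symptom", "disease"}, {"drug"}),
    "treats": ({"drug"}, {"symptom", "disease"}),  # hypothetical relation name
}

# Placeholder entities with coarse semantic types.
ENTITY_TYPES = {"nausea": "symptom", "drug_x": "drug", "asthma": "disease"}


def type_checks(fact: Fact, entity_types=ENTITY_TYPES) -> bool:
    """True if both arguments match the relation's type signature."""
    left_types, right_types = TYPE_SIGNATURES[fact.relation]
    return (
        entity_types.get(fact.left) in left_types
        and entity_types.get(fact.right) in right_types
    )


def mutually_exclusive(a: Fact, b: Fact) -> bool:
    """sideEffect(s, d) and treats(d, s) cannot both hold."""
    for x, y in ((a, b), (b, a)):
        if (
            x.relation == "sideEffect"
            and y.relation == "treats"
            and x.left == y.right
            and x.right == y.left
        ):
            return True
    return False


side = Fact("sideEffect", "nausea", "drug_x")
cure = Fact("treats", "drug_x", "nausea")
print(type_checks(side), mutually_exclusive(side, cure))
```

Type checking discards candidates on its own, whereas a mutual exclusion only says that two candidates cannot both be accepted; which one survives depends on their weights.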

To reason with consistency constraints, we follow the framework of [37], by encoding all facts, patterns, and grounded (i.e., instantiated) constraints into weighted logical clauses. We extend this prior work by computing informative weights from the confidence statistics obtained in the pattern-based stage of our IE pipeline. We then use a weighted Max-Sat solver to reason on the hypotheses space of fact candidates, to compute a consistent subset of clauses with the largest total weight.

为了对一致性约束进行推理,我们遵循[37]的框架,将所有事实,模式和基础(即实例化)约束编码为加权逻辑子句。 我们通过从在信息抽取管道的基于模式的阶段中获得的置信度统计值计算信息权重来扩展此先前的工作。 然后,我们使用加权的Max-Sat求解器对事实候选者的假设空间进行推理,以计算总权重最大的子句的一致子集。

Due to the NP-hardness of the weighted Max-Sat problem, we resort to an approximation algorithm that combines the dominating-unit-clause technique [52] with Johnson’s heuristic algorithm [53]. Suchanek et al. [37] have shown that this combination empirically gives very good approximation ratios. The complete set of consistency constraints is in the supplementary material (see Additional file 1).

由于加权Max-Sat问题的NP硬度,我们求助于一种近似算法,该算法将支配子句技术[52]与约翰逊的启发式算法[53]结合在一起。 Suchanek等。 [37]已经表明,该组合在经验上给出了非常好的近似比。 完整的一致性约束集在补充材料中(请参阅附加文件1)。
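To make the weighted Max-Sat formulation more concrete, here is a small sketch of Johnson's greedy heuristic on its own. It does not include the dominating-unit-clause step and is not the solver used in the paper. The clause encoding, the weights, and the toy example (two candidate facts plus one heavily weighted mutual-exclusion clause) are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# A clause is (weight, literals); literal v > 0 means "variable v is true",
# literal -v means "variable v is false".
Clause = Tuple[float, List[int]]


def johnson_maxsat(num_vars: int, clauses: List[Clause]) -> Dict[int, bool]:
    """Greedy assignment: each variable takes the value with more discounted weight."""
    active = [(w, frozenset(lits)) for w, lits in clauses]
    assignment: Dict[int, bool] = {}
    for v in range(1, num_vars + 1):
        # Each open clause votes with weight * 2^(-number of remaining literals).
        pos = sum(w * 2.0 ** -len(lits) for w, lits in active if v in lits)
        neg = sum(w * 2.0 ** -len(lits) for w, lits in active if -v in lits)
        value = pos >= neg
        assignment[v] = value
        true_lit = v if value else -v
        remaining = []
        for w, lits in active:
            if true_lit in lits:
                continue  # clause is satisfied, drop it
            lits = lits - {-true_lit}  # remove the falsified literal, if present
            if lits:
                remaining.append((w, lits))  # still open
        active = remaining
    return assignment


def satisfied_weight(assignment: Dict[int, bool], clauses: List[Clause]) -> float:
    """Total weight of the clauses satisfied by the assignment."""
    return sum(
        w for w, lits in clauses
        if any(assignment[abs(lit)] == (lit > 0) for lit in lits)
    )


# Toy instance (hypothetical weights):
# variable 1 = Treats(DrugX, Nausea), variable 2 = SideEffect(DrugX, Nausea).
CLAUSES: List[Clause] = [
    (0.4, [1]),          # weak evidence for Treats
    (0.9, [2]),          # stronger evidence for SideEffect
    (100.0, [-1, -2]),   # mutual exclusion, weighted heavily
]
RESULT = johnson_maxsat(2, CLAUSES)
```

On this toy instance the heuristic rejects the weaker `Treats` candidate and keeps `SideEffect`. The outcome of the greedy procedure can depend on the order in which variables are processed, which is one reason it is only an approximation.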

Results and discussion(结果和讨论)

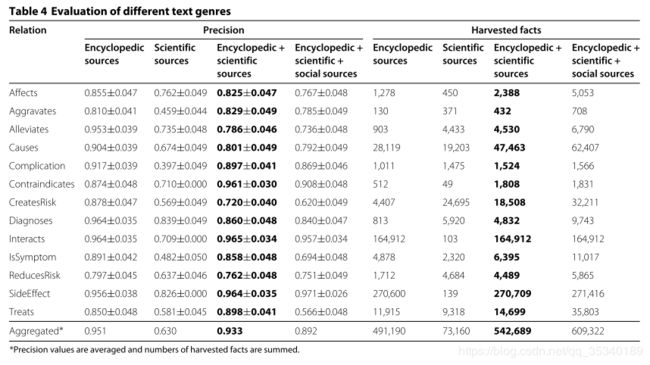

We ran extensive experiments with the input corpora listed in Table 2, and created different KB’s based on different configurations. We assess the size and quality of each KB, in terms of their numbers of facts and their precision evaluated by random sampling of facts. Tables 4 and 5 give the results, for different choices of input corpora and different configurations of the KnowLife pipeline, respectively. Recall is not evaluated, as there is no gold standard for fully comprehensive facts. To ensure that our findings are significant, for each relation, we computed the Wilson confidence interval at α = 5%, and kept evaluating facts until the interval width fell below 5%. An interval width of 0% means that all the facts were evaluated. Four different annotators evaluated the facts, judging them as true or false based on provenance information. As for inter-annotator agreement, 22,002 facts were evaluated; the value of Fleiss’ Kappa was 0.505, which indicates a moderate agreement among all four annotators. The complete set of evaluated facts is in the supplementary material (see Additional file 3).

我们对表2中列出的输入语料库进行了广泛的实验,并根据不同的配置创建了不同的知识库。我们根据事实的数量和通过事实随机抽样评估的精度来评估每个知识库的大小和质量。表4和表5分别给出了输入语料库的不同选择和KnowLife管道的不同配置的结果。召回不进行评估,因为没有针对全面事实的金标准。为了确保我们的发现有意义,对于每个关系,我们将Wilson可信区间计算为α= 5%,并继续评估事实,直到区间宽度降至5%以下。间隔宽度为0%表示已评估所有事实。四个不同的注释者对事实进行了评估,并根据出处信息将其判断为正确还是错误。关于注释者之间的协议,评估了22,002个事实; Fleiss的Kappa值为0.505,表明所有四个注释器之间的协议都一致。完整的评估事实在补充材料中(请参阅附加文件3)。
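The sampling procedure can be sketched as follows. The Wilson score interval is the standard formula; the stopping loop is one plausible reading of "kept evaluating facts until the interval width fell below 5%", and the function names are illustrative rather than taken from the paper.

```python
import math
from statistics import NormalDist
from typing import Iterable, Tuple


def wilson_interval(correct: int, sampled: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Wilson score interval for a proportion at significance level alpha."""
    if sampled == 0:
        return (0.0, 1.0)
    z = NormalDist().inv_cdf(1 - alpha / 2)
    p = correct / sampled
    denom = 1 + z * z / sampled
    center = (p + z * z / (2 * sampled)) / denom
    half = z * math.sqrt(p * (1 - p) / sampled + z * z / (4 * sampled * sampled)) / denom
    return (center - half, center + half)


def facts_to_evaluate(judgements: Iterable[bool], max_width: float = 0.05) -> int:
    """Number of judged facts consumed before the interval is narrow enough."""
    correct = 0
    count = 0
    for is_correct in judgements:
        count += 1
        correct += int(is_correct)
        low, high = wilson_interval(correct, count)
        if high - low < max_width:
            break
    return count
```

If the judgements run out before the interval is narrow enough, the function returns the total number of facts, which corresponds to the case where every fact of a relation was evaluated.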

Impact of different text genres(不同文字类型的影响)

We first discuss the results obtained from the different text genres: i) scientific (PubMed publications), ii) encyclopedic (Web portals like Mayo Clinic or Wikipedia), iii) social (discussion forums). Table 4 gives, column-wise, the number of facts and precision figures for four different combinations of genres.

我们首先讨论从不同文本类型获得的结果:i)科学(PubMed出版物),ii)百科全书(诸如Mayo Clinic或Wikipedia之类的Web门户),iii)社会(讨论论坛)。 表4逐栏给出了四种不同类型流派的事实数和精确度图。

Generally, combining genres gave more facts at a lower precision, as texts of lower quality like social sources introduced noise. The combination that gave the best balance of precision and total yield was scientific with encyclopedic sources, with a micro-averaged precision of 0.933 for a total of 542,689 facts. We consider this the best of the KB’s that KnowLife generated.

通常,由于社会来源等质量较低的文本引入了噪音,因此结合体裁会以较低的精度提供更多事实。 结合百科全书来源,可以在精度和总产量之间取得最佳平衡的组合是科学的,其微平均精度为0.933,总共542,689个事实。 我们认为这是KnowLife生成的知识库中最好的。
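As a brief illustration of what a micro-averaged precision measures, the sketch below contrasts it with a macro average. The per-relation counts are invented for the example and are not figures from the paper.

```python
from typing import Dict, Tuple

# relation -> (facts judged correct, facts in total); hypothetical counts.
Counts = Dict[str, Tuple[int, int]]


def micro_precision(counts: Counts) -> float:
    """Pool all facts first, so large relations dominate the average."""
    correct = sum(c for c, _ in counts.values())
    total = sum(t for _, t in counts.values())
    return correct / total


def macro_precision(counts: Counts) -> float:
    """Average the per-relation precisions, so every relation counts equally."""
    return sum(c / t for c, t in counts.values()) / len(counts)


EXAMPLE: Counts = {"SideEffect": (960, 1000), "Alleviates": (70, 100)}
```

With these made-up counts the micro average is pulled towards the large, precise relation, while the macro average sits halfway between the two per-relation values.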

The best overall precision was achieved when using encyclopedic texts only. This confirmed our hypothesis that a pattern-based approach works best when the language is simple and grammatically correct. Contrast this with scientific publications, which often exhibit convoluted language, and online discussions with a notable fraction of grammatically incorrect language. In these cases, the quality of patterns degraded and precision dropped. Incorrect facts stemming from errors in the entity recognition step were especially rampant in online discussions, where colloquial language (for example, meds, short for medicines) led to incorrect entities (the acronym MEDS, for Microcephaly, Epilepsy, and Diabetes Syndrome).

仅使用百科全书文本时,可以获得最佳的整体精度。 这证实了我们的假设,即当语言简单且语法正确时,基于模式的方法效果最佳。 与此形成鲜明对比的是,科学出版物通常会表现出令人费解的语言,而在线讨论则带有相当一部分语法错误的语言。 在这些情况下,图案质量下降并且精度下降。 在实体讨论步骤中,由于实体识别步骤中的错误而产生的不正确事实尤其普遍,口语(例如meds或药品简称)导致了不正确的实体(小头症,癫痫病和糖尿病综合症的缩写)。

The results vary highly across the 13 relations in our experiments. The number of facts depends on the extent to which the text sources express a relation, while precision reflects how decisively patterns point to that relation. Interacts and SideEffect are prime examples: the drugs.com portal lists many side effects and drug-drug interactions by the DOM structure, which boosted the extraction accuracy of KnowLife, leading to many facts at precisions of 95.6% and 96.4%, respectively. Facts for the relations Alleviates, CreatesRisk, and ReducesRisk, on the other hand, mostly came from scientific publications, which resulted in fewer facts and lower precision.

在我们实验的13个关系中,结果差异很大。 事实的数量取决于文本源表达某种关系的程度,而精确度则反映出模式是如何明确地指出这种关系的。 Interacts和SideEffect是主要的例子:drugs.com门户网站通过DOM结构列出了许多副作用和药物相互作用,这提高了KnowLife的提取准确性,从而导致许多事实的精确度分别为95.6%和96.4%。 另一方面,关系的事实“缓解”,“创建风险”和“减少风险”主要来自科学出版物,从而导致事实少,准确性低。

A few relations, however, defied these general trends. Patterns of Contraindicates were too sparse and ambiguous within encyclopedic texts alone and also within scientific publications alone. However, when the two genres were combined, the good patterns reached a critical mass to break through the confidence threshold, giving rise to a sudden increase in harvested facts. For the CreatesRisk and ReducesRisk relations, combining encyclopedic and scientific sources increased the number of facts compared to using only encyclopedic texts, and increased the precision compared to using only scientific publications.

但是,一些关系违背了这些总体趋势。 仅在百科全书中以及仅在科学出版物中,禁忌的模式都过于稀疏和模糊。 但是,当两种体裁相结合时,良好的模式达到了突破置信度阈值的临界质量,导致收获事实的突然增加。 对于CreatesRisk和ReducesRisk关系,与仅使用百科全书的文本相比,将百科全书和科学的资料相结合可以增加事实的数量,并且可以提高准确性。

As Table 4 shows, incorporating social sources brought a significant gain in the number of harvested facts, at the cost of lower precision. As [46] pointed out, there are facts that come only from social sources and, depending on the use case, it is still worthwhile to incorporate them; for example, to facilitate search and discovery applications where recall may be more important. Moreover, the patterns extracted from encyclopedic and scientific sources could be reused to annotate text in social sources, so as to identify existing information.

如表4所示,以降低的精度为代价,整合社会资源在收获的事实数量上带来了巨大的收益。 正如[46]所指出的那样,有些事实仅来自社会来源,根据使用情况,仍然值得将它们纳入其中。 例如,在可能更重要的是召回的搜索和发现应用程序中提供便利。 此外,从百科全书和科学资源中提取的模式可以重新用于注释社会资源中的文本,从而识别现有信息。

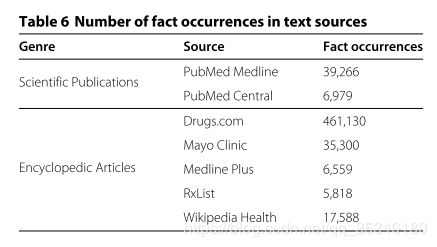

Taking a closer look at the best experimental setting, we see that scientific and encyclopedic sources in KnowLife contribute to a different extent to the number of harvested facts. Table 6 shows the number of fact occurrences in our input sources. Recall that a fact can occur in multiple sentences in multiple text sources. Our experiments show that encyclopedic articles are more amenable to harvesting facts than scientific publications.

仔细研究最佳的实验环境,我们发现KnowLife中的科学和百科全书对不同事实的贡献程度不同。 表6显示了我们输入源中的事实发生次数。 回想一下事实可以在多个文本源中的多个句子中发生。 我们的实验表明,与科学出版物相比,百科全书更适合于获取事实。

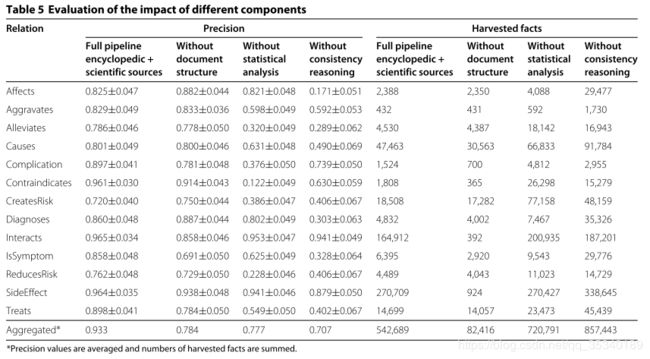

Impact of different components(不同组成部分的影响)

We used the KnowLife setting with scientific and encyclopedic sources, which, by and large, performed best, as the basis for investigating the impact of different components in the KnowLife pipeline. To this end, we disabled individual components: DOM tree patterns, statistical analysis of patterns, and consistency reasoning. In each setting, only one component was disabled, and the processing pipeline ran with all other components enabled. This way we obtained insight into how strongly KnowLife depends on each component. Table 5 shows the results of this ablation study.

在每种设置中,只有一个组件被禁用,并且处理管道在启用所有其他组件的情况下运行。 我们将KnowLife设置与科学和百科全书资源结合使用,总体上讲,效果最好,这是调查KnowLife管道中不同组件的影响的基础。 为此,我们禁用了各个组件:DOM树模式,模式的统计分析,一致性推理–每个组件都被单独禁用,而其他组件则被保留。 通过这种方式,我们了解了KnowLife对每个组件的依赖程度。 表5显示了该消融研究的结果。

No DOM tree patterns: When disregarding patterns on the document structure and solely focusing on textual patterns, KnowLife degrades in precision (from 93% to 78%) and sharply drops in the number of acquired facts (from ca. 540,000 to 80,000). The extent of these general effects varies across the different relations. Relations whose patterns are predominantly encoded in document structures – once again Interacts and SideEffect – exhibit the most drastic loss. On the other hand, relations like Affects, Aggravates, Alleviates, and Treats are affected only to a minor extent, as their patterns are mostly found in free text.

**没有DOM树模式:**当不考虑文档结构上的模式而只关注文本模式时,KnowLife的精度会下降(从93%降至78%),获取的事实数量急剧下降(从大约540,000减少至 80,000)。 这些一般影响的程度因不同的关系而异。 关系的模式主要在文档结构中进行编码-再次是“交互”和“副作用”-表现出最大的损失。 另一方面,“情感”,“加重”,“减轻”和“对待”等关系仅受到较小的影响,因为它们的模式主要出现在自由文本中。

No statistical pattern analysis: Here we disabled the statistical analysis of pattern confidence and the frequent itemset mining for generalizing patterns. This way, without confidence values, KnowLife kept all patterns, including many noisy ones. Patterns that would be pruned in the full configuration led to poor seed patterns; for example, the single word "causes" was taken as a seed pattern for both relations SymptomOf and Contraindicates. Without frequent itemset mining, long and overly specific patterns also contributed to poor seed patterns. The combined effect greatly increased the number of false positives, so that precision dropped (from 93% to 77%). In terms of acquired facts, not scrutinizing the patterns increased the yield (from ca. 540,000 to 720,000 facts).

没有统计模式分析: 在这里,我们禁用了模式置信度和频繁项集挖掘的统计分析以概括模式。 这样,在没有置信度值的情况下,KnowLife会保留所有模式,包括许多嘈杂的模式。 在完整配置中将被修剪的模式导致较差的种子模式; 例如,将单个单词cause作为关系SymptomOf和Contraindicates的种子模式。 如果没有频繁的项目集挖掘,那么长期且过于具体的模式也会导致不良的种子模式。 组合效应大大增加了误报的数量,因此准确性下降了(从93%降至77%)。 就获得的事实而言,不仔细检查模式可提高产量(从大约540,000个事实增加到720,000个事实)。
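The effect of switching off confidence-based pruning can be sketched as below. The confidence definition (the share of a pattern's occurrences that agree with seed facts), the threshold of 0.5, and the counts are all assumptions for illustration; this section does not restate how KnowLife actually computes pattern confidence.

```python
from typing import Dict, List, Tuple

# pattern -> (occurrences matching seed facts, occurrences contradicting them);
# hypothetical counts.
PatternStats = Dict[str, Tuple[int, int]]


def pattern_confidence(seed_hits: int, counter_hits: int) -> float:
    """Assumed confidence: fraction of occurrences that agree with seed facts."""
    total = seed_hits + counter_hits
    return seed_hits / total if total else 0.0


def select_patterns(stats: PatternStats, threshold: float = 0.5,
                    use_confidence: bool = True) -> List[str]:
    """Keep confident patterns; with the analysis disabled, keep everything."""
    if not use_confidence:
        return sorted(stats)
    return sorted(
        pattern for pattern, (hits, misses) in stats.items()
        if pattern_confidence(hits, misses) >= threshold
    )


STATS: PatternStats = {
    "is a side effect of": (40, 2),
    "causes": (12, 30),
}
```

With the analysis enabled, only the specific pattern survives; with it disabled, the ambiguous single-word pattern is kept as well, which is the kind of noisy pattern described above.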

Relations mainly extracted from DOM tree patterns, such as Interacts and SideEffect, were not much affected. Also, relations like Affects and Diagnoses exhibited only small losses in precision; for these relations, the co-occurrence of two types of entities is often already sufficient to express a relation. The presence of consistency constraints on type signatures also helped to keep the output quality high.

对主要从DOM树模式中提取的关系(如Interacts和SideEffect)的影响不大。 同样,诸如“情感和诊断”之类的关系在精度上仅表现出很小的损失; 对于这些关系,两种类型实体的共现通常已经足以表达一种关系。 类型签名的一致性约束的存在还有助于保持较高的输出质量。

No consistency reasoning: In this setting, neither type signatures nor other consistency constraints were checked. Thus, conflicting facts could be accepted, leading to a large fraction of false positives. This effect was unequivocally witnessed by an increase in the number of facts (from ca. 540,000 to 850,000) accompanied by a sharp decrease in precision (from 93% to 70%).

无一致性推理: 在此设置中,既不检查类型签名也不检查其他一致性约束。 因此,可以接受相互矛盾的事实,从而导致很大一部分误报。 事实数量的增加(从大约540,000增加到850,000)以及精确度的急剧下降(从93%减少到70%)可以清楚地看到这种效果。

The relations Interacts and SideEffect were least affected by this degradation, as they are mostly expressed via the document structure of encyclopedic texts, where entity types are implicitly encoded in the DOM tree tags (see Figure 2). Here, consistency reasoning was not vital.

这种交互作用对Interacts和SideEffect的影响最小,因为它们主要在百科全书的通过文档结构中表示,其中实体类型隐式编码在DOM树标记中(参见图2)。 在这里,一致性推理并不重要。

Lessons learned: Overall, this ablation study clearly shows that all major components of the KnowLife pipeline are essential for high quality (precision) and high yield (number of facts) of the constructed KB. Each of the three configurations where one component is disabled suffered substantial if not dramatic losses in either precision or acquired facts, and sometimes both. We conclude that the full pipeline is a well-designed architecture whose strong performance cannot be easily achieved by a simpler approach.

经验教训: 总体而言,该消融研究清楚地表明,KnowLife管道的所有主要组成部分对于所构造KB的高质量(精度)和高产量(事实数量)都是必不可少的。 禁用一个组件的三种配置中的每一种都会在准确性或获得的事实方面遭受重大的损失,即使不是很大的损失,有时还会遭受两者的损失。 我们得出的结论是,完整的流水线是一个设计良好的体系结构,通过简单的方法无法轻松实现强大的性能。
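A rough back-of-the-envelope calculation makes the trade-offs in the ablation study easier to compare. It multiplies the rounded precision and fact counts quoted in the paragraphs above, so the results are only approximate estimates, not numbers reported in Table 5.

```python
from typing import Dict, Tuple

# setting -> (precision, approximate number of facts), as quoted in the text.
SETTINGS: Dict[str, Tuple[float, int]] = {
    "full pipeline": (0.93, 540_000),
    "no DOM tree patterns": (0.78, 80_000),
    "no statistical pattern analysis": (0.77, 720_000),
    "no consistency reasoning": (0.70, 850_000),
}


def estimate(precision: float, facts: int) -> Tuple[int, int]:
    """Estimated (correct, incorrect) fact counts for one setting."""
    correct = round(precision * facts)
    return correct, facts - correct


ESTIMATES = {name: estimate(p, n) for name, (p, n) in SETTINGS.items()}

for name, (correct, incorrect) in ESTIMATES.items():
    print(f"{name}: ~{correct:,} correct, ~{incorrect:,} incorrect")
```

Under these rounded figures, disabling consistency reasoning adds somewhat more correct facts than the full pipeline yields, but the estimated number of incorrect facts grows from roughly 38,000 to roughly 255,000.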

Error analysis (错误分析)

We analyzed the causes of error for all 760 facts annotated as incorrect from the experimental setting using the full information extraction pipeline and all three text genres. This setting allows us to compare the utility of the different components as well as the different genres. As seen in Table 7, we categorize the errors as follows:

我们使用完整的信息提取管道和所有三种文本类型分析了从实验设置中被注释为不正确的所有760个事实的错误原因。 此设置使我们可以比较不同组件以及不同类型的效用。 如表7所示,我们将错误分类如下:

Preprocessing: At the start of the pipeline, incorrect sentence segmentation divided a text passage into incomplete sentences, or left multiple sentences undivided. This in turn led to incorrect parsing of syntactic dependency graphs. In addition, there were incorrectly parsed DOM trees in Web portal documents. Not surprisingly, almost all preprocessing errors came from encyclopedic and social sources due to their DOM tree structure and poor language style, respectively.

预处理: 在管道的开始,不正确的句子分割将文本段落分割为不完整的句子,或者使多个句子未分割。 反过来,这会导致语法依赖图的错误解析。 此外,在Web门户文档中存在错误地解析DOM树的情况。 毫不奇怪,由于它们的DOM树结构和较差的语言风格,几乎所有预处理错误都来自百科全书和社交资源。

Entity Recognition: Certain entities were not correctly recognized. Complex entities are composed of multiple simple entities; examples include "muscle protein breakdown" recognized as "muscle protein" and "breakdown", or "arrest of cystic growth" recognized as "arrest" and "cystic growth". Paraphrasing and misspelling entities cause their textual expressions to deviate from dictionary entries. Idiomatic expressions were incorrectly picked up as entities. For instance, there is no actual physical activity in the English idiom "in the long run".

实体识别: 某些实体未正确识别。 复杂实体由多个简单实体组成; 例子包括被识别为肌肉蛋白的肌肉蛋白分解和分解,或被识别为停滞和囊性生长的囊性生长停滞。 释义和拼写错误的实体会导致其文本表达方式与字典条目有所不同。 惯用语被错误地当作实体。 例如,从长远来看,英语习语中没有实际的身体活动。

Entity Disambiguation: Selecting an incorrect entity out of multiple matching candidates caused this error, primarily due to two reasons. First, the type signatures of our relations were not sufficient to further prune out mismatching entities during fact extraction. Second, colloquial terms not curated in the UMLS dictionary were incorrectly resolved. For example, "meds" for medicines was disambiguated as the entity Microcephaly, Epilepsy, and Diabetes Syndrome.

**实体消歧:**从多个匹配候选者中选择一个不正确的实体会导致此错误,主要是由于两个原因。 首先,我们关系的类型签名不足以进一步在事实提取过程中删减不匹配的实体。 第二,未正确解析UMLS词典中未定义的口语术语。 例如,用于药物的药物被明确区分为小头畸形,癫痫和糖尿病综合症。

Coreferencing: Due to the lack of coreference resolution, correct entities were obscured by phrases such as this protein or the tunnel structure.

公共参考: 由于缺乏公共参考分辨率,正确的实体被诸如该蛋白或隧道结构之类的短语遮盖。

Nonexistent relation: Two entities might co-occur within the same sentence without sharing a relation. When a pattern occurrence between such entities was nevertheless extracted, it resulted in an unsubstantiated relation.

不存在的关系: 两个实体可能在同一句子中同时出现而没有共享关系。 但是,当提取这些实体之间的模式出现时,会导致没有根据的关系。

Pattern Relation Duality: A pattern that can express two relations was harvested but assigned to an incorrect relation. For example, the pattern mimic was incorrectly assigned to the relation isSymptom.

模式关系对偶: 可以表达两种关系的模式已被获取,但分配给了错误的关系。 例如对于模式模仿被错误地分配给关系isSymptom。

Swapped left- and right-hand entities: The harvested fact was incorrect because the left- and right-hand entities were swapped. Consider the example fact isSymptom(Anemia, Sarcoidosis), which can be expressed by either sentence:

- Anemia is a common symptom of sarcoidosis.

- A common symptom of sarcoidosis is anemia.

交换左手和右手实体: 收获的事实是错误的,因为交换了左手和右手实体。 考虑示例事实isSymptom(Anemia, Sarcoidosis),可以用以下任一句子表示:

- 贫血是结节病的常见症状。

- 结节病的常见症状是贫血。

In both cases, the same pattern "is a common symptom of" is extracted. In the second sentence, however, an incorrect fact would be extracted since the order in which the entities occur is reversed.

在两种情况下,相同的模式都是被提取的常见症状。 但是,在句子2中,将提取不正确的事实,因为实体出现的顺序是相反的。
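The swap can be reproduced with a deliberately simplified extractor. It treats the pattern as the set of non-entity words and assigns arguments by textual order; this is an assumption made for the sketch and much cruder than the dependency-based patterns the pipeline uses, but it shows the same failure mode.

```python
from typing import FrozenSet, Set, Tuple


def extract(sentence: str, entities: Set[str]) -> Tuple[FrozenSet[str], str, str]:
    """Return (pattern, left entity, right entity) using textual order only."""
    tokens = sentence.lower().rstrip(".").split()
    mentions = sorted((tokens.index(entity), entity) for entity in entities)
    left, right = mentions[0][1], mentions[1][1]
    pattern = frozenset(token for token in tokens if token not in entities)
    return pattern, left, right


ENTITIES = {"anemia", "sarcoidosis"}
FIRST = extract("Anemia is a common symptom of sarcoidosis.", ENTITIES)
SECOND = extract("A common symptom of sarcoidosis is anemia.", ENTITIES)
```

Both sentences yield the same word set as the pattern, but the second one produces the arguments in the order (sarcoidosis, anemia), so a naive mapping to isSymptom(left, right) gives the reversed, incorrect fact.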

Negation: This error was caused by not detecting negation expressed in the text. The word expressing the negation may occur textually far away from the entities, as in "It is disputed whether early antibiotic treatment prevents reactive arthritis", and thus escaped our pattern gathering method. In other cases, the negation phrase requires subtle semantic understanding to tease out, as in "Except for osteoarthritis, I think my symptoms are all from heart disease."

否定: 此错误是由于未检测到文本中表示的否定引起的。 表示否定的单词可能在文字上远离实体,例如。有争议的是,早期抗生素治疗是否可以预防反应性关节炎,从而摆脱了我们的模式收集方法。 在其他情况下,否定短语将需要细微的语义理解才能弄清,例如,除了骨关节炎之外,我认为我的症状都来自心脏病。

Factually wrong: Although our methods successfully harvested a fact, the underlying text evidence made a wrong statement.

实际上是错误的: 尽管我们的方法成功地收集了一个事实,但潜在的文字证据做出了错误的陈述。

Lessons learned: Overall, this error analysis confirms that scientific and encyclopedic sources contain well-written texts that are amenable to a text mining pipeline. Social sources, with their poorer quality of language style as well as information content, were the biggest contributor in almost all error categories. Errors in entity recognition and disambiguation accounted for close to 60% of all errors; overcoming them will require better methods that go beyond a dictionary, and incorporate deeper linguistic and semantic understanding.

经验教训: 总体而言,此错误分析确认科学和百科全书来源包含适用于文本挖掘管道的精心撰写的文本。 社交来源的语言风格和信息内容质量较差,几乎是所有错误类别中的最大贡献者。 实体识别和歧义消除中的错误占所有错误的近60%; 克服它们将需要更好的方法,这些方法不仅需要字典,还需要更深入的语言和语义理解。

Coverage (覆盖范围)

The overriding goal of KnowLife has been to create a versatile KB that spans many areas within the life sciences. To illustrate which areas are covered by KnowLife, we refer to the semantic groups defined by [54]. Table 8 shows the number of acquired facts for pairs of the thirteen different areas inter-connected in our KB. This can be seen as an indicator that we achieved our goal at least to some extent.

KnowLife的首要目标是创建一个涵盖生命科学许多领域的通用知识库。 为了说明KnowLife覆盖哪些区域,我们参考[54]定义的语义组。 表8显示了在我们的知识库中相互关联的十三对不同区域的成对事实的数量。 这可以看作是我们至少在一定程度上实现了目标的指标。

The predominant number of facts involves entities of the semantic group Disorders, for two reasons. First, with our choice of relations, disorders appear in almost all type signatures. Second, entities of type clinical finding are covered by the group Disorders, and these are frequent in all text genres. However, this type also includes diverse, non-disorder entities such as pregnancy, which is clearly not a disorder.

事实的主要数量涉及语义组Disorders的实体,这有两个原因。 首先,通过我们选择的关系,疾病几乎以所有类型的特征出现。 其次,类型为临床发现的实体属于“障碍”类别,并且在所有文本类型中都很常见。 但是,这种类型还包括各种非疾病性实体,例如怀孕,这显然不是疾病。
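A coverage table of this kind can be derived by mapping each entity to its semantic group and counting facts per pair of groups. The sketch below assumes a hypothetical entity-to-group mapping and treats group pairs as unordered; the actual counts are in Table 8.

```python
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical mapping from entities to semantic groups.
ENTITY_GROUP = {
    "Aspirin": "Chemicals & Drugs",
    "Headache": "Disorders",
    "Anemia": "Disorders",
    "Sarcoidosis": "Disorders",
}


def group_pair_counts(facts: Iterable[Tuple[str, str, str]]) -> Counter:
    """Count facts per unordered pair of semantic groups."""
    counts: Counter = Counter()
    for _relation, left, right in facts:
        pair = tuple(sorted((ENTITY_GROUP[left], ENTITY_GROUP[right])))
        counts[pair] += 1
    return counts


FACTS = [
    ("Treats", "Aspirin", "Headache"),
    ("isSymptom", "Anemia", "Sarcoidosis"),
]
COUNTS = group_pair_counts(FACTS)
```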

Conclusions (总结)

Application benefit(应用优势)

To showcase the usefulness of KnowLife, we developed a health portal (http://knowlife.mpi-inf.mpg.de) that allows interactive exploration of the harvested facts and their input sources. The KnowLife portal supports a number of use cases for different information needs [48]. A patient may wish to find out the side effects of a specific drug, by searching for the drug name and browsing the SideEffect facts and their provenance. A physician may want to “speed read” publications and online discussions on treatment options for an unfamiliar disease. Provenance information is vital here, as the physician would want to consider the recency and authority of the sources for certain statements. The health portal also provides a function for on-the-fly annotation of new text from publications or social media, leveraging known patterns to highlight any relations found.

为了展示KnowLife的有用性,我们开发了一个健康门户网站(http://knowlife.mpi-inf.mpg.de),该门户可以交互方式浏览所收集的事实及其输入源。 KnowLife门户支持针对不同信息需求的许多用例[48]。 患者可能希望通过搜索药物名称并浏览SideEffect事实及其来源来找出特定药物的副作用。 医师可能希望“快速阅读”出版物和有关不熟悉疾病的治疗选择的在线讨论。 来源信息在这里至关重要,因为医师可能希望考虑某些陈述的来源的最新性和权威性。 健康门户网站还提供了一种功能,可以利用已知的模式突出显示找到的任何关系,从而对出版物或社交媒体中的新文本进行即时注释。

Future work(展望未来)

In the future, we plan to improve the entity recognition to accommodate a wider variety of entities beyond those in UMLS. For instance, colloquial usage ("meds" for medicines) and composite entities ("amputation of right leg") are not yet addressed. Entities within UMLS also require more sophisticated disambiguation. For instance, the text occurrence "stress" should be correctly disambiguated between the brand name of a drug and the psychological feeling.

将来,我们计划改善实体识别能力,以适应UMLS中的实体以外的更多实体。 例如,口语用法(药品)和复合实体(右腿截肢)尚未解决。 UMLS中的实体也需要更复杂的歧义消除。 例如,可以在药物的品牌名称和心理感觉之间正确地区分文本出现压力。

Finally, we would like to address the challenge of mining and representing the context of harvested facts. Binary relations are often not sufficient to express medical knowledge. For example, the statement "Fever is a symptom of Lupus Flare during pregnancy" cannot be suitably represented by a binary fact.

最后,我们要解决挖掘和代表已收获事实的挑战。 二元关系通常不足以表达医学知识。 例如,陈述“发烧是怀孕期间狼疮耀斑的症状”不能用二元事实来恰当地表示。

We plan to cope with such statements by extracting ternary and higher-arity relations, with appropriate extensions of both pattern-based extraction and consistency reasoning.

我们计划通过提取三元和更高关系的关系,以及基于模式的提取和一致性推理的适当扩展,来应对此类陈述。
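One possible way to picture the difference between a binary fact and a higher-arity fact is sketched below. The class and field names are hypothetical; the paper only announces this direction as future work and does not prescribe a representation.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class BinaryFact:
    relation: str
    left: str
    right: str


@dataclass(frozen=True)
class ContextualFact:
    """A binary core plus any number of (role, entity) context arguments."""
    relation: str
    left: str
    right: str
    context: Tuple[Tuple[str, str], ...] = ()

    def core(self) -> BinaryFact:
        """Drop the context, which loses part of the original statement."""
        return BinaryFact(self.relation, self.left, self.right)


FEVER = ContextualFact(
    relation="isSymptom",
    left="Fever",
    right="Lupus Flare",
    context=(("during", "Pregnancy"),),
)
```

Reducing `FEVER` to its binary core yields isSymptom(Fever, Lupus Flare), which no longer says that the statement applies during pregnancy.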

References(参考文献)

- Barbosa D, Wang H, Yu C. Shallow information extraction for the knowledge web. In: Proceedings of International Conference On Data Engineering (ICDE). Washington, DC, USA: IEEE Computer Society; 2013. p. 1264–7.

- Suchanek F, Weikum G. Knowledge harvesting from text and web sources. In: Proceedings of International Conference On Data Engineering (ICDE). Washington, DC, USA: IEEE Computer Society; 2013. p. 1250–3.

- Lehmann J, Isele R, Jakob M, Jentzsch A, Kontokostas D, Mendes PN, et al. DBpedia – a large-scale, multilingual knowledge base extracted from Wikipedia. Semantic Web J. 2013;6(2):167–95.

- Hoffart J, Suchanek F, Berberich K, Weikum G. YAGO2: A Spatially and Temporally Enhanced Knowledge Base from Wikipedia. In: Proceedings of Special issue of the Artificial Intelligence Journal. Menlo Park, CA, USA: AAAI Press; 2013. p. 28–61.

- Pyysalo S, Ohta T, Miwa M, Cho H-C, Tsujii J, Ananiadou S. Event extraction across multiple levels of biological organization. Bioinformatics. 2012;28(18):575–81.

- Arighi C, Roberts P, Agarwal S, Bhattacharya S, Cesareni G, Chatr-aryamontri A, et al. BioCreative III interactive task: An overview. BMC Bioinformatics. 2011;12(Suppl 8):4.

- Kim JD, Ohta T, Tsujii J. Corpus annotation for mining biomedical events from literature. BMC Bioinformatics. 2008;9(1):10.

- Whirl-Carrillo M, McDonagh E, Hebert J, Gong L, Sangkuhl K, Thorn C, et al. Pharmacogenomics knowledge for personalized medicine. Clinical Pharmacol Ther. 2012;92(4):414–7.

- Williams AJ, Harland L, Groth P, Pettifer S, Chichester C, Willighagen EL, et al. Open PHACTS: semantic interoperability for drug discovery. Drug Discov Today. 2012;17(21):1188–98.

- Buyko E, Faessler E, Wermter J, Hahn U. Event extraction from trimmed dependency graphs. In: Proceedings of Workshop on Current Trends in Biomedical Natural Language Processing (BioNLP): Shared Task. Stroudsburg, PA, USA: ACL; 2009. p. 19–27.

- Miwa M, Sætre R, Kim J-D, Tsujii J. Event extraction with complex event classification using rich features. J Bioinformatics Comput Biol. 2010;8(1):131–46.

- Björne J, Salakoski T. Generalizing biomedical event extraction. In: Proceedings of Workshop on Current Trends in Biomedical Natural Language Processing (BioNLP): Shared Task. Stroudsburg, PA, USA: ACL; 2011. p. 183–91.

- Krallinger M, Izarzugaza JMG, Penagos CR, Valencia A. Extraction of human kinase mutations from literature, databases and genotyping studies. BMC Bioinformatics. 2009;10(S8):1.

- Rosario B, Hearst MA. Classifying semantic relations in bioscience texts. In: Proceedings of Annual Meeting on Association for Computational Linguistics (ACL). Stroudsburg, PA, USA: ACL; 2004. p. 430.

- Bundschus M, Dejori M, Stetter M, Tresp V, Kriegel HP. Extraction of semantic biomedical relations from text using conditional random fields. BMC Bioinformatics. 2008;9(1):207.

- Sun W, Rumshisky A, Uzuner O. Evaluating temporal relations in clinical text: 2012 i2b2 challenge. J Am Med Informatics Assoc. 2013;20(5):806–13.

- Bravo A, Cases M, Queralt-Rosinach N, Sanz F, Furlong L. A knowledge-driven approach to extract disease-related biomarkers from the literature. BioMed Res Int. 2014. article ID: 253128.

- Chun HW, Tsuruoka Y, Kim JD, Shiba R, Nagata N, Hishiki T, et al. Extraction of gene-disease relations from medline using domain dictionaries and machine learning. In: Proceedings of Pacific Symposium of Biocomputing; 2006. p. 4–15.

- Leroy G, Chen H. Genescene: An ontology-enhanced integration of linguistic and co-occurrence based relations in biomedical texts. J Am Soc Inform Sci Technol. 2005;56(5):457–68.

- Rindflesch TC, Libbus B, Hristovski D, Aronson AR, Kilicoglu H. Semantic relations asserting the etiology of genetic diseases. In: Proceedings of American Medical Informatics Association (AMIA) Annual Symposium. Bethesda, MD, USA: AMIA; 2003. p. 554–8.

- Good BM, Su AI. Crowdsourcing for bioinformatics. Bioinformatics. 2013;29(16):1925–33.

- Ranard BL, Ha YP, Meisel ZF, Asch DA, Hill SS, Becker LB, et al. Crowdsourcing–harnessing the masses to advance health and medicine, a systematic review. J General Intern Med. 2014;29(1):187–203.

- Burger JD, Doughty E, Khare R, Wei C-H, Mishra R, Aberdeen J, et al. Hybrid curation of gene–mutation relations combining automated extraction and crowdsourcing. Database. 2014. article ID: bau094.

- Aroyo L, Welty C. Measuring crowd truth for medical relation extraction. In: AAAI Fall Symposium Series. Menlo Park, CA, USA: AAAI Press; 2013.

- Hunter L, Lu Z, Firby J, Baumgartner W, Johnson H, Ogren P, et al. OpenDMAP: An open source, ontology-driven concept analysis engine, with applications to capturing knowledge regarding protein transport, protein interactions and cell-type-specific gene expression. BMC Bioinformatics. 2008;9(1):78.

- Torii M, Arighi CN, Wang Q, Wu CH, Vijay-Shanker K. Text mining of protein phosphorylation information using a generalizable rule-based approach. In: Proceedings of International Conference on Bioinformatics, Computational Biology and Biomedical Informatics (BCB). New York, NY, USA: ACM Press; 2013. p. 201–10.

- Müller HM, Kenny EE, Sternberg PW. Textpresso: an ontology-based information retrieval and extraction system for biological literature. PLoS Biology. 2004;2(11):309.

- Wattarujeekrit T, Shah P, Collier N. PASBio: predicate-argument structures for event extraction in molecular biology. BMC Bioinformatics. 2004;5(1):155.

- Kolářik C, Hofmann-Apitius M, Zimmermann M, Fluck J. Identification of new drug classification terms in textual resources. Bioinformatics. 2007;23(13):i264–72.

- Hearst M. Automatic acquisition of hyponyms from large text corpora. In: Proceedings of the 14th Conference on Computational Linguistics (CoLing). Stroudsburg, PA, USA: ACL; 1992. p. 539–45.

- Rindflesch TC, Fiszman M. The interaction of domain knowledge and linguistic structure in natural language processing: interpreting hypernymic propositions in biomedical text. J Biomed Inform. 2003;36(6):462–77.

- Poria S, Cambria E, Winterstein G, Huang G-B. Sentic patterns: dependency-based rules for concept-level sentiment analysis. Knowledge-Based Syst. 2014;69(0):45–63.

- Thomas P, Starlinger J, Vowinkel A, Arzt S, Leser U. GeneView: a comprehensive semantic search engine for PubMed. Nucleic Acids Res. 2012;40(W1):585–91.

- Xu R, Li L, Wang Q. dRiskKB: a large-scale disease-disease risk relationship knowledge base constructed from biomedical text. BMC Bioinformatics. 2014;15(1):105.

- Brin S. Extracting patterns and relations from the World Wide Web. In: Selected Papers from the International Workshop on The World Wide Web and Databases (WebDB). New York, NY, USA: Springer; 1998. p. 172–83.

- Agichtein E, Gravano L. Snowball: Extracting relations from large plain-text collections. In: Proceedings of the Fifth ACM Conference on Digital Libraries (DL). New York, NY, USA: ACM Press; 2000. p. 85–94.

- Suchanek F, Sozio M, Weikum G. SOFIE: A self-organizing framework for information extraction. In: Proceedings of International World Wide Web Conference (WWW). New York, NY, USA: ACM Press; 2009. p. 631–40.

- Nakashole N, Theobald M, Weikum G. Scalable knowledge harvesting with high precision and high recall. In: Proceedings of International Conference on Web Search and Data Mining (WSDM). New York, NY, USA: ACM Press; 2011. p. 227–36.

- Carlson A, Betteridge J, Kisiel B, Settles B, Hruschka Jr ER, Mitchell TM. Toward an architecture for never-ending language learning. In: Proceedings of the Association for the Advancement of Artificial Intelligence (AAAI) Conference. Menlo Park, CA, USA: AAAI Press; 2010. p. 1306–13.

- Nebot V, Ye M, Albrecht M, Eom J-H, Weikum G. DIDO: A disease-determinants ontology from Web sources. In: Proceedings of International World Wide Web Conference (WWW). New York, NY, USA: ACM Press; 2011. p. 237–40.

- Movshovitz-Attias D, Cohen WW. Bootstrapping biomedical ontologies for scientific text using NELL. In: Proceedings of Workshop on Biomedical Natural Language Processing (BioNLP). Stroudsburg, PA, USA: ACL; 2012. p. 11–19.

- Aronson AR, Lang FM. An overview of MetaMap: historical perspective and recent advances. J Am Med Inform Assoc. 2010;17(3):229–36.

- Harmston N, Filsell W, Stumpf M. Which species is it? Species-driven gene name disambiguation using random walks over a mixture of adjacency matrices. Bioinformatics. 2012;28(2):254–60.

- Chasin R, Rumshisky A, Uzuner Ö, Szolovits P. Word sense disambiguation in the clinical domain: a comparison of knowledge-rich and knowledge-poor unsupervised methods. J Am Med Inform Assoc. 2014;21(5):842–9.

- Vydiswaran VGV, Zhai C, Roth D. Gauging the Internet doctor: Ranking medical claims based on community knowledge. In: Proceedings of Workshop on Data Mining for Medicine and Healthcare (DMMH). New York, NY, USA: ACM Press; 2011. p. 42–51.

- Mukherjee S, Weikum G, Danescu-Niculescu-Mizil C. People on drugs: Credibility of user statements in health communities. In: Proceedings of Conference on Knowledge Discovery and Data Mining (KDD). New York, NY, USA: ACM Press; 2014. p. 65–74.

- White RW, Harpaz R, Shah NH, DuMouchel W, Horvitz E. Toward enhanced pharmacovigilance using patient-generated data on the Internet. Clin Pharmacol Ther. 2014;96(2):239–46.

- Ernst P, Meng C, Siu A, Weikum G. KnowLife: a knowledge graph for health and life sciences. In: Proceedings of International Conference on Data Engineering (ICDE). Washington, DC, USA: IEEE Computer Society; 2014. p. 1254–7.

- Siu A, Nguyen DB, Weikum G. Fast entity recognition in biomedical text. In: Proceedings of Workshop on Data Mining for Healthcare (DMH) at Conference on Knowledge Discovery and Data Mining (KDD). New York, NY, USA: ACM Press; 2013.

- Charikar MS. Similarity estimation techniques from rounding algorithms. In: Proceedings of Symposium on Theory of Computing (STOC). New York, NY, USA: ACM Press; 2002. p. 380–8.

- Broder AZ, Charikar M, Frieze AM, Mitzenmacher M. Min-wise independent permutations. In: Proceedings of Symposium on Theory of Computing (STOC). New York, NY, USA: ACM Press; 1998. p. 327–36.

- Niedermeier R, Rossmanith P. New upper bounds for maximum satisfiability. J Algorithms. 2000;36(1):63–88.

- Johnson DS. Approximation algorithms for combinatorial problems. J Comput Syst Sci. 1974;9(3):256–78.

- McCray AT, Burgun A, Bodenreider O. Aggregating UMLS semantic types for reducing conceptual complexity. Stud Health Technol Informatics. 2001;1:216–20.