Understanding the PyTorch LSTM Module

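I have been studying RNN models recently and wanted to read other people's open-source LSTM code, so I decided to start with the LSTM example tutorial on the official PyTorch site. To my surprise, even the example left me confused; I had assumed that once I understood the theory, the code would be quick to follow. After reading several analyses of LSTM online and combining them with my own understanding, I managed to make sense of PyTorch's LSTM module. This post mainly records that understanding so I can look it up later.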

Let's first work through the official quick example:

    # Author: Robert Guthrie
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim

    torch.manual_seed(1)

    lstm = nn.LSTM(3, 3)  # Input dim is 3, output dim is 3
    inputs = [torch.randn(1, 3) for _ in range(5)]  # make a sequence of length 5

    # initialize the hidden state.
    hidden = (torch.randn(1, 1, 3),
              torch.randn(1, 1, 3))
    for i in inputs:
        # Step through the sequence one element at a time.
        # after each step, hidden contains the hidden state.
        out, hidden = lstm(i.view(1, 1, -1), hidden)

    # alternatively, we can do the entire sequence all at once.
    # the first value returned by LSTM is all of the hidden states throughout
    # the sequence. the second is just the most recent hidden state
    # (compare the last slice of "out" with "hidden" below, they are the same)
    # The reason for this is that:
    # "out" will give you access to all hidden states in the sequence
    # "hidden" will allow you to continue the sequence and backpropagate,
    # by passing it as an argument to the lstm at a later time
    # Add the extra 2nd dimension
    inputs = torch.cat(inputs).view(len(inputs), 1, -1)
    hidden = (torch.randn(1, 1, 3), torch.randn(1, 1, 3))  # clean out hidden state
    out, hidden = lstm(inputs, hidden)
    print(out)
    print(hidden)

Code walkthrough:

    lstm = nn.LSTM(3, 3)  # Input dim is 3, output dim is 3

This line initializes the basic attributes of the LSTM module.

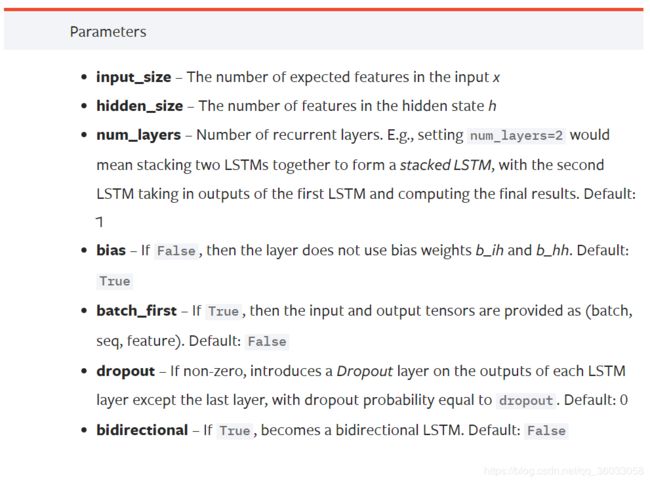

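The screenshot above lists the parameters the LSTM module accepts when it is initialized.

In the official tutorial, the first 3 is input_size and the second 3 is hidden_size. In NLP tasks, input_size can be read as the embedding_size of the word vectors, while hidden_size is the number of units in the hidden layers inside the LSTM cell, which is also the dimension of the output hidden state $h_t$.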
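To make the roles of the two constructor arguments concrete, here is a minimal sketch that inspects the layer-0 weight shapes. The sizes 5 and 3 are illustrative values chosen only so the two dimensions can be told apart (the tutorial uses 3 for both). The factor of 4 appears because PyTorch stacks the weights of the four gates into a single matrix.

```python
import torch.nn as nn

# Illustrative sizes (an assumption, not the tutorial's values).
input_size, hidden_size = 5, 3
lstm = nn.LSTM(input_size, hidden_size)

# Input-to-hidden weights of layer 0: shape (4 * hidden_size, input_size).
w_ih_shape = tuple(lstm.weight_ih_l0.shape)
# Hidden-to-hidden weights of layer 0: shape (4 * hidden_size, hidden_size).
w_hh_shape = tuple(lstm.weight_hh_l0.shape)

print(w_ih_shape, w_hh_shape)
```

Changing input_size only affects the second dimension of the input-to-hidden matrix, while hidden_size sets the size of every state the module carries between time steps.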

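    inputs = [torch.randn(1, 3) for _ in range(5)]  # make a sequence of length 5

This generates a single sequence sample. There is no notion of a batch here, or equivalently mini_batch=1. The sequence has length 5 and each element has embedding_size=3, which must match input_size=3 from the previous step.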
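In an NLP setting the random tensors would usually come from an embedding layer instead. The sketch below is only an illustration of that idea: the vocabulary size of 10 and the token ids are made-up values, and `nn.Embedding` stands in for whatever word-vector source a real model uses.

```python
import torch
import torch.nn as nn

# Hypothetical sizes for illustration; embedding_size must equal the LSTM's input_size.
vocab_size, embedding_size, hidden_size = 10, 3, 3
embedding = nn.Embedding(vocab_size, embedding_size)
lstm = nn.LSTM(embedding_size, hidden_size)

token_ids = torch.tensor([1, 4, 2, 7, 5])  # one made-up sentence of 5 tokens
embedded = embedding(token_ids)            # shape (5, 3): one vector per token
inputs = embedded.unsqueeze(1)             # shape (5, 1, 3): (seq_len, batch, input_size)

# When no initial state is passed, h_0 and c_0 default to zeros.
out, (h_n, c_n) = lstm(inputs)
print(inputs.shape, out.shape, h_n.shape)
```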

    # initialize the hidden state.
    hidden = (torch.randn(1, 1, 3),
              torch.randn(1, 1, 3))

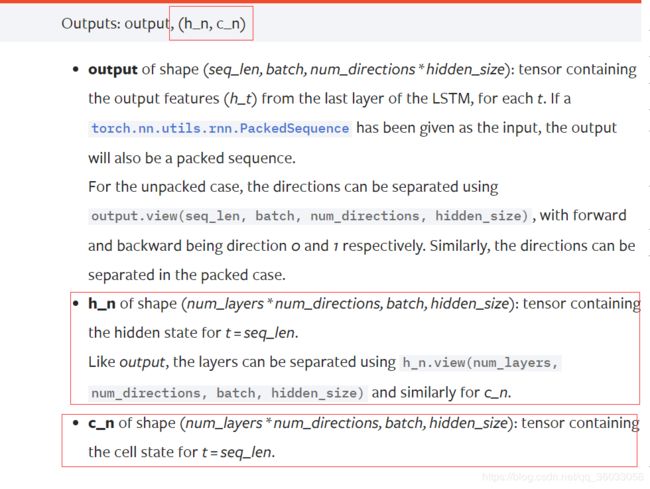

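Read this alongside the official documentation:

hidden[0] corresponds to h_0, the initial hidden state, and hidden[1] corresponds to c_0, the initial cell state. (The tuple returned by a forward pass holds h_n and c_n, the states after the last time step.) Why is the shape (1, 1, 3)? The documented shape is (num_layers * num_directions, batch, hidden_size). Here num_layers and num_directions are both 1, i.e. a single-layer, unidirectional LSTM (as opposed to stacked multi-layer or bidirectional LSTMs); batch is 1; and the 3 matches hidden_size=3 from the LSTM constructor.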
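The shape rule is easier to see when num_layers and num_directions are not 1. The following is a small sketch, assuming a 2-layer bidirectional LSTM (a configuration the tutorial does not use), that builds matching initial states and checks the resulting shapes.

```python
import torch
import torch.nn as nn

# Assumed configuration for illustration: 2 stacked layers, bidirectional.
num_layers, num_directions, batch, hidden_size = 2, 2, 1, 3
lstm = nn.LSTM(input_size=3, hidden_size=hidden_size,
               num_layers=num_layers, bidirectional=True)

# Initial states: (num_layers * num_directions, batch, hidden_size) = (4, 1, 3).
h_0 = torch.zeros(num_layers * num_directions, batch, hidden_size)
c_0 = torch.zeros(num_layers * num_directions, batch, hidden_size)

inputs = torch.randn(5, batch, 3)  # (seq_len, batch, input_size)
out, (h_n, c_n) = lstm(inputs, (h_0, c_0))

# out concatenates both directions of the top layer: (5, 1, 2 * hidden_size).
print(out.shape, h_n.shape, c_n.shape)
```

With the tutorial's single-layer, unidirectional setup, the same rule collapses to (1, 1, 3).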

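    for i in inputs:
        # Step through the sequence one element at a time.
        # after each step, hidden contains the hidden state.
        out, hidden = lstm(i.view(1, 1, -1), hidden)

This for loop is the actual computation for each time step. inputs has length 5, so this sequence sample has 5 time steps, and each one is passed through lstm. i.view(1, 1, -1) is the input to lstm: each tensor in inputs is 2-D, which does not match what lstm expects. With the default batch_first=False, lstm requires input of shape (seq_len, batch, input_size). For a single time step seq_len is 1; there is only one sample, so batch=1; and the -1 in the input_size position tells view to infer that dimension automatically. hidden is updated at every time step.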
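A single step of that loop can be sketched in isolation to check the reshaping. The zero initial state below is an assumption made for simplicity (the tutorial uses random values); the point is only the shapes and the relationship between out and hidden.

```python
import torch
import torch.nn as nn

lstm = nn.LSTM(3, 3)

x = torch.randn(1, 3)          # one time step, shaped like an element of `inputs`
step_input = x.view(1, 1, -1)  # -1 is inferred as 3, giving (1, 1, 3)

# For this tensor, view(1, 1, -1) is equivalent to adding a leading dimension.
same_as_unsqueeze = torch.equal(step_input, x.unsqueeze(0))

# Zero initial state, assumed here for simplicity.
hidden = (torch.zeros(1, 1, 3), torch.zeros(1, 1, 3))
out, hidden = lstm(step_input, hidden)

# With one step, one layer, and one direction, out is just the new hidden state.
out_is_h = torch.allclose(out, hidden[0])
print(step_input.shape, same_as_unsqueeze, out_is_h)
```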

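    inputs = torch.cat(inputs).view(len(inputs), 1, -1)
    hidden = (torch.randn(1, 1, 3), torch.randn(1, 1, 3))  # clean out hidden state
    out, hidden = lstm(inputs, hidden)

Here all the time steps are stacked into one tensor and fed to lstm in a single call, so there is no need for a for loop that computes each time step separately.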
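The tutorial re-randomizes hidden before the second call, so its two runs do not produce the same numbers. As a sanity check, the sketch below reuses the same initial state for both approaches (that reuse is my own change, not part of the tutorial) and compares the results.

```python
import torch
import torch.nn as nn

torch.manual_seed(1)
lstm = nn.LSTM(3, 3)
inputs = [torch.randn(1, 3) for _ in range(5)]
h_0, c_0 = torch.randn(1, 1, 3), torch.randn(1, 1, 3)

# Approach 1: step through the sequence one element at a time.
hidden = (h_0, c_0)
step_outputs = []
for x in inputs:
    out, hidden = lstm(x.view(1, 1, -1), hidden)
    step_outputs.append(out)
loop_out = torch.cat(step_outputs)  # (5, 1, 3)

# Approach 2: feed the whole sequence at once, starting from the same state.
seq = torch.cat(inputs).view(len(inputs), 1, -1)
full_out, (h_n, c_n) = lstm(seq, (h_0, c_0))

same_outputs = torch.allclose(loop_out, full_out, atol=1e-6)
# The last slice of the output equals the final hidden state.
last_matches = torch.allclose(full_out[-1], h_n[0], atol=1e-6)
print(same_outputs, last_matches)
```

Both checks should come out true up to floating-point tolerance, which is what the tutorial's comment about comparing the last slice of "out" with "hidden" refers to.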

Other input formats will be covered in a later update.

References:

1、https://zhuanlan.zhihu.com/p/79064602

2、https://www.zhihu.com/question/41949741/answer/318771336