2019 Mobile Advertising Anti-Fraud Algorithm Challenge baseline

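Foreword:

Before sharing this baseline, I want to thank my good friend 油菜花一朵 for the help. I will then go through several problems I ran into during the competition, together with my explanations. If possible, I will share a baseline scoring around 94.47 tomorrow; it will be visible to followers only until the preliminary round ends, and public afterwards. I originally wanted to share the 47 baseline, but I could no longer find that version, so I merged my own ideas into this one and do not know what it scores. Ranking matters less than what you learn.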

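First: why solutions from Kaggle do not work well

The iFLYTEK Mobile Advertising Anti-Fraud Algorithm Challenge poses the same task as the earlier Kaggle competition 'TalkingData AdTracking Fraud Detection Challenge'; the difference is that iFLYTEK adds some extra attributes. Many people therefore modeled their approach on the Kaggle competition, but found that it did not work well. The reason is that the fraud labels on Kaggle were generated by TalkingData's own algorithm, and the company later said that this algorithm uses time information. That is also why almost every open-source solution there computes many time-based features.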

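Second: the catboost baseline fluctuates a lot

The training set has 1 million rows and the test set only 100,000. The fluctuation is probably caused by both sets being so small. As a result, the same model with the same random seed can still produce noticeably different results. If a run scores poorly, try running it again.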

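Third: why results get worse after data cleaning

We found that when a trained model scores well online, individual attributes in it tend to be strong features on their own. So I suspect that iFLYTEK's official labels were also generated by a model of their own, trained without much preprocessing of the data. If we do clean the data, each cleaned attribute must end up a stronger feature than it was before.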

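Fourth: adding label statistics for strong features looks good offline but barely raises the online score

This one has puzzled me for a while. After finding some strong features in my model, I tried computing the mean and variance of the label grouped by those features. They rank well in the feature-importance output, but the online score barely improves after submission. It may be that catboost is sensitive to features derived from the label, so it is worth trying another model such as lgb.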
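One possible contributor to this kind of offline/online gap is that label statistics computed over the whole training set let every row see its own label. The sketch below shows out-of-fold label means as one way to avoid that. It is only an illustration: the helper name `oof_target_mean`, the toy data and the choice of `make` as the grouping column are assumptions, not part of the baseline.

```python
import numpy as np
import pandas as pd


def oof_target_mean(train, test, key, target, n_folds=5, seed=2019):
    """Return out-of-fold label means for train and full-train means for test."""
    prior = train[target].mean()
    oof = pd.Series(np.nan, index=train.index, dtype=float)
    # Shuffle row positions once, then cut them into n_folds validation chunks.
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(train)), n_folds)
    for val_pos in folds:
        val_mask = np.zeros(len(train), dtype=bool)
        val_mask[val_pos] = True
        # Statistics come only from rows outside the current fold.
        means = train.loc[~val_mask].groupby(key)[target].mean()
        oof.iloc[val_pos] = train[key].iloc[val_pos].map(means).to_numpy()
    # Test rows have no label, so they can use the whole training set.
    test_enc = test[key].map(train.groupby(key)[target].mean())
    # Groups never seen during fitting fall back to the global label mean.
    return oof.fillna(prior), test_enc.fillna(prior)


train = pd.DataFrame({
    "make": ["A", "A", "A", "B", "B", "C"],
    "label": [1, 1, 0, 0, 0, 1],
})
test = pd.DataFrame({"make": ["A", "B", "D"]})

train_enc, test_enc = oof_target_mean(train, test, "make", "label", n_folds=3)
print(train_enc.round(3).tolist())
print(test_enc.round(3).tolist())
```

With this scheme a group that appears only once in the training data (such as `C` here) never receives its own label as a feature; it gets the global mean instead.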

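Fifth: adding time features helps offline but not online

I computed, for each model, the time difference to the previous click and to the next click. Once again there was an offline gain but a poor online result. I first suspected data leakage, but after changing the code according to some online tutorials the same problem remained. I have no explanation for now.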
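For reference, the previous/next click gaps described above can be sketched in pandas as follows. The column names `model` and `nginxtime` come from the script; the toy values and the feature names `prev_gap` and `next_gap` are made up for illustration, and the sketch does not explain the online drop.

```python
import pandas as pd

clicks = pd.DataFrame({
    "model": ["R15", "R15", "A5", "R15", "A5"],
    "nginxtime": [1000, 4000, 2000, 9000, 2500],  # toy timestamps
})

# Sort by time so that shift() really means "previous" and "next" request.
clicks = clicks.sort_values("nginxtime").reset_index(drop=True)
grouped = clicks.groupby("model")["nginxtime"]
prev_time = grouped.shift(1)    # time of the previous request of the same model
next_time = grouped.shift(-1)   # time of the next request of the same model

# Gap to the previous request (NaN for the first request of each model).
clicks["prev_gap"] = clicks["nginxtime"] - prev_time
# Gap to the next request (NaN for the last request of each model).
clicks["next_gap"] = next_time - clicks["nginxtime"]

print(clicks)
```

Note that `next_gap` looks into the future of each row, so when train and test rows are concatenated before computing it, the feature mixes information from both sets.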

Sixth: what is distinctive about this baseline

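1. The basic architecture is the catboost baseline.

2. Added statistical features of the label grouped by strong features, such as mean, variance and occurrence count.

3. Filled h, w and ppi with the per-make mean (the script below actually uses the per-make mode and fills only h and w).

4. Combined some strong features and computed the occurrence count count() and the cumulative count cumcount() of each combination.

5. To save memory and speed up training, removed some unnecessary output.

6. Removed some unnecessary features from the catboost model.

7. Using the same features, stacked xgb, lgb and catboost, with logistic regression as the final model.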
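Items 3 and 4 above can be sketched on a toy frame as follows. This is a minimal illustration under assumptions: the data is invented, the per-make mean is used as described in the list, and the feature names `make_model_count` and `make_model_cumcount` are hypothetical.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "make": ["OPPO", "OPPO", "OPPO", "VIVO", "VIVO"],
    "model": ["R15", "R15", "A5", "X21", "X21"],
    "h": [2280.0, 0.0, 1520.0, 2280.0, 2160.0],
})

# Item 3: treat 0.0 as missing, then fill with the mean h of the same make.
df["h"] = df["h"].replace(0.0, np.nan)
df["h"] = df["h"].fillna(df.groupby("make")["h"].transform("mean"))

# Item 4: how often each (make, model) pair occurs in total ...
df["make_model_count"] = df.groupby(["make", "model"])["h"].transform("count")
# ... and how many times the pair has already appeared before this row.
df["make_model_cumcount"] = df.groupby(["make", "model"]).cumcount()

print(df)
```

`transform` keeps the result aligned with the original rows, so no merge is needed for the count feature.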

Code:

# -*- coding: utf-8 -*-
# @Time : 2019/8/18 9:28
# @Author : YYLin
# @Email : [email protected]
# @File : A_Simple_Stacking_Model.py
# Features: the earlier simple features, stacked with lgb, catboost and xgb. Score is about 46.

import numpy as np
import pandas as pd
import gc
from tqdm import tqdm
from sklearn.model_selection import KFold
from sklearn.preprocessing import LabelEncoder
from datetime import timedelta
import catboost as cbt
import lightgbm as lgb
import xgboost as xgb
from sklearn.metrics import f1_score
from sklearn.linear_model import LogisticRegression
from scipy import stats
import warnings

warnings.filterwarnings('ignore')

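# nrows=10000 reads only the first 10000 rows of each file; remove it to use the full data
test = pd.read_table("../A_Data/testdata.txt", nrows=10000)
train = pd.read_table("../A_Data/traindata.txt", nrows=10000)
all_data = pd.concat([train, test], ignore_index=True)

# Multiplying by 1e6 turns nginxtime into nanoseconds for to_datetime; adding 8 hours gives Beijing time
all_data['time'] = pd.to_datetime(all_data['nginxtime'] * 1e+6) + timedelta(hours=8)
all_data['day'] = all_data['time'].dt.dayofyear  # day of the year
all_data['hour'] = all_data['time'].dt.hour  # hour of the day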

# Map OPPO model codes to readable model names
oppo_model_names = {
    'PACM00': "OPPO R15",
    'PBAM00': "OPPO A5",
    'PBEM00': "OPPO R17",
    'PADM00': "OPPO A3",
    'PBBM00': "OPPO A7",
    'PAAM00': "OPPO R15_1",
    'PACT00': "OPPO R15_2",
    'PABT00': "OPPO A5_1",
    'PBCM10': "OPPO R15x",
}
all_data['model'] = all_data['model'].replace(oppo_model_names)

from urllib.parse import unquote


def url_clean(x):
    # Decode URL escapes and normalise separators to spaces
    x = (unquote(x, 'utf-8')
         .replace('%2B', ' ').replace('%20', ' ').replace('%2F', '/').replace('%3F', '?')
         .replace('%25', '%').replace('%23', '#').replace(".", ' ').replace('??', ' ')
         .replace('%26', ' ').replace("%3D", '=').replace('%22', '').replace('_', ' ')
         .replace('+', ' ').replace('-', ' ').replace('__', ' ').replace('  ', ' ')
         .replace(',', ' '))
    if not x:
        # Guard against empty strings before indexing x[0] and x[-1]
        return x
    # Prefix a brand name when the value matches a known naming pattern
    if x[0] == 'V' and x[-1] == 'A':
        return "VIVO {}".format(x)
    elif x[0] == 'P' and x[-1] == '0':
        return "OPPO {}".format(x)
    elif len(x) == 5 and x[0] == 'O':
        return "Smartisan {}".format(x)
    elif 'AL00' in x:
        return "HW {}".format(x)
    else:
        return x


# for fea in ['model', 'make', 'lan', 'new_make', 'new_model']:
for fea in ['model', 'make', 'lan']:
    all_data[fea] = all_data[fea].astype('str')
    all_data[fea] = all_data[fea].map(lambda x: x.upper())  # convert to upper case
    all_data[fea] = all_data[fea].map(url_clean)

# The first token of the cleaned model string, compared against make
all_data['big_model'] = all_data['model'].map(lambda x: x.split(' ')[0])
all_data['model_equal_make'] = (all_data['big_model'] == all_data['make']).astype(int)

# Regroup ntt into a new column and keep the original feature
# 0, 7 -> 0; 1, 2 -> 1; 3 -> 2; 4..6 -> 3
all_data['new_ntt'] = all_data['ntt']
all_data.loc[(all_data.new_ntt == 0) | (all_data.new_ntt == 7), 'new_ntt'] = 0
all_data.loc[(all_data.new_ntt == 1) | (all_data.new_ntt == 2), 'new_ntt'] = 1
all_data.loc[all_data.new_ntt == 3, 'new_ntt'] = 2
all_data.loc[(all_data.new_ntt >= 4) & (all_data.new_ntt <= 6), 'new_ntt'] = 3

# Fill h and w values of 0.0 using the statistics of the same make
all_data['h'] = all_data['h'].replace(0.0, np.nan)
all_data['w'] = all_data['w'].replace(0.0, np.nan)
# all_data['ppi'].replace(0.0, np.nan, inplace=True)
# cols = ['h', 'w', 'ppi']
cols = ['h', 'w']
gp_col = 'make'


def group_mode(values):
    # Most frequent non-missing value of the group, or NaN if the group has none
    modes = values.mode()
    return modes.iloc[0] if len(modes) else np.nan


for col in tqdm(cols):
    # Fill missing values with the mean or the mode of the group
    # df_fill = all_data.groupby(gp_col)[col].mean()
    df_fill = all_data.groupby(gp_col)[col].agg(group_mode)
    # Look up the fill value by make; anything still missing falls back to 0.0
    all_data[col] = all_data[col].fillna(all_data[gp_col].map(df_fill)).fillna(0.0)
    del df_fill
    gc.collect()

# H, W, PPI
all_data['size'] = (np.sqrt(all_data['h'] ** 2 + all_data['w'] ** 2) / 2.54) / 1000
# w can still be 0.0 after filling, so turn the resulting inf into NaN
all_data['ratio'] = (all_data['h'] / all_data['w']).replace([np.inf, -np.inf], np.nan)
all_data['px'] = all_data['ppi'] * all_data['size']
all_data['mj'] = all_data['h'] * all_data['w']

# Combine strong features and count the rows of each combination
Fusion_attributes = ['make_adunitshowid', 'adunitshowid_model', 'adunitshowid_ratio', 'make_model',
                     'make_osv', 'make_ratio', 'model_osv', 'model_ratio', 'model_h', 'ratio_osv']

for attribute in tqdm(Fusion_attributes):
    name = "Fusion_attr_" + attribute
    dummy = 'label'
    cols = attribute.split("_")
    cols_with_dummy = cols.copy()
    cols_with_dummy.append(dummy)
    # count() skips NaN labels, so only training rows contribute to the count
    gp = all_data[cols_with_dummy].groupby(by=cols)[[dummy]].count().reset_index().rename(
        index=str, columns={dummy: name})
    all_data = all_data.merge(gp, on=cols, how='left')

# Split ip and reqrealip into prefixes, and define a machine key
all_data['ip2'] = all_data['ip'].apply(lambda x: '.'.join(x.split('.')[0:2]))
all_data['ip3'] = all_data['ip'].apply(lambda x: '.'.join(x.split('.')[0:3]))
all_data['reqrealip2'] = all_data['reqrealip'].apply(lambda x: '.'.join(x.split('.')[0:2]))
all_data['reqrealip3'] = all_data['reqrealip'].apply(lambda x: '.'.join(x.split('.')[0:3]))
# model and make are still strings at this point, so build the key by concatenation
# (1000 * all_data['model'] would repeat every string 1000 times)
all_data['machine'] = all_data['model'] + '_' + all_data['make']

var_mean_attributes = ['adunitshowid', 'make', 'model', 'ver']

for attr in tqdm(var_mean_attributes):
    # Variance and mean of ratio, h and w within each group of attr
    for stat_col in ['ratio', 'h', 'w']:
        var_name = 'var_' + attr + '_' + stat_col
        gp = all_data[[attr, stat_col]].groupby(attr)[stat_col].var().reset_index().rename(
            index=str, columns={stat_col: var_name})
        all_data = all_data.merge(gp, on=attr, how='left')
        # astype(int) truncates the fractional part
        all_data[var_name] = all_data[var_name].fillna(0).astype(int)

        mean_name = 'mean_' + attr + '_' + stat_col
        gp = all_data[[attr, stat_col]].groupby(attr)[stat_col].mean().reset_index().rename(
            index=str, columns={stat_col: mean_name})
        all_data = all_data.merge(gp, on=attr, how='left')
        all_data[mean_name] = all_data[mean_name].fillna(0).astype(int)

del gp
gc.collect()

# Count-encode and label-encode every string column
cat_col = [i for i in all_data.select_dtypes(object).columns if i not in ['sid', 'label']]
for i in tqdm(cat_col):
    lbl = LabelEncoder()
    all_data['count_' + i] = all_data.groupby([i])[i].transform('count')
    all_data[i] = lbl.fit_transform(all_data[i].astype(str))

# Occurrence counts of the numeric screen attributes
for i in tqdm(['h', 'w', 'ppi', 'ratio']):
    all_data['{}_count'.format(i)] = all_data.groupby(['{}'.format(i)])['sid'].transform('count')

feature_name = [i for i in all_data.columns if i not in ['sid', 'label', 'time']]
print(feature_name)
# info() prints its summary itself and returns None
all_data.info()

# cat_list = ['pkgname', 'ver', 'adunitshowid', 'mediashowid', 'apptype', 'ip', 'city', 'province', 'reqrealip',
#             'adidmd5',
#             'imeimd5', 'idfamd5', 'openudidmd5', 'macmd5', 'dvctype', 'model', 'make', 'ntt', 'carrier', 'os', 'osv',
#             'orientation', 'lan', 'h', 'w', 'ppi', 'ip2', 'new_make', 'new_model', 'country', 'new_province',
#             'new_city',
#             'ip3', 'reqrealip2', 'reqrealip3']

# Categorical columns (kept for reference; not passed to any model in this script)
cat_list = ['pkgname', 'ver', 'adunitshowid', 'mediashowid', 'apptype', 'ip', 'city', 'province', 'reqrealip',
            'adidmd5',
            'imeimd5', 'idfamd5', 'openudidmd5', 'macmd5', 'dvctype', 'model', 'make', 'ntt', 'carrier', 'os', 'osv',
            'orientation', 'lan', 'h', 'w', 'ppi', 'ip2',
            'ip3', 'reqrealip2', 'reqrealip3']

# Rows with a label are training rows; the rest are test rows
tr_index = ~all_data['label'].isnull()
X_train = all_data.loc[tr_index, feature_name].reset_index(drop=True)
y = all_data.loc[tr_index, 'label'].reset_index(drop=True).astype(int)
X_test = all_data.loc[~tr_index, feature_name].reset_index(drop=True)
print(X_train.shape, X_test.shape)

# Free some memory
del all_data
gc.collect()

# 5-fold cross-validation over lgb, catboost and xgb; logistic regression makes the final prediction
def get_stacking(clf, x_train, y_train, x_test, feature_name, n_folds=5):
    print('len_x_train:', len(x_train))
    train_num, test_num = x_train.shape[0], x_test.shape[0]
    second_level_train_set = np.zeros((train_num,))
    second_level_test_set = np.zeros((test_num,))
    test_nfolds_sets = np.zeros((test_num, n_folds))
    kf = KFold(n_splits=n_folds)

    for i, (train_index, test_index) in enumerate(kf.split(x_train)):
        x_tra, y_tra = x_train[feature_name].iloc[train_index], y_train.iloc[train_index]
        x_tst, y_tst = x_train[feature_name].iloc[test_index], y_train.iloc[test_index]
        clf.fit(x_tra, y_tra, eval_set=[(x_tst, y_tst)])
        # predict() returns hard 0/1 labels; predict_proba(...)[:, 1] would feed probabilities to the second level
        second_level_train_set[test_index] = clf.predict(x_tst)
        test_nfolds_sets[:, i] = clf.predict(x_test[feature_name])

    # Average the test predictions of the n_folds models
    second_level_test_set[:] = test_nfolds_sets.mean(axis=1)
    return second_level_train_set, second_level_test_set

def lgb_f1(labels, preds):
    # F1 metric in the (name, value, is_higher_better) form used by LightGBM's scikit-learn API.
    # It is not wired into any model below; pass it as eval_metric to LGBMClassifier.fit to use it.
    score = f1_score(labels, np.round(preds))
    return 'f1', score, True


lgb_model = lgb.LGBMClassifier(random_seed=2019, n_jobs=-1, objective='binary', learning_rate=0.05, n_estimators=3000,
                               num_leaves=31, max_depth=-1, min_child_samples=50, min_child_weight=9, subsample_freq=1,
                               subsample=0.7, colsample_bytree=0.7, reg_alpha=1, reg_lambda=5,
                               early_stopping_rounds=400)

# feval is not an XGBClassifier argument, and lgb_f1 follows the LightGBM signature, so it is not passed here
xgb_model = xgb.XGBClassifier(objective='binary:logistic', eval_metric='auc', learning_rate=0.02, max_depth=6,
                              early_stopping_rounds=400)

# task_type='GPU' requires a GPU; drop it to train on CPU
cbt_model = cbt.CatBoostClassifier(iterations=3000, learning_rate=0.05, max_depth=11, l2_leaf_reg=1, verbose=10,
                                   early_stopping_rounds=400, task_type='GPU', eval_metric='F1')

train_sets = []
test_sets = []

for clf in [xgb_model, cbt_model, lgb_model]:
    print('begin train clf:', clf)
    train_set, test_set = get_stacking(clf, X_train, y, X_test, feature_name)
    train_sets.append(train_set)
    test_sets.append(test_set)

# One column of first-level predictions per base model
meta_train = np.concatenate([result_set.reshape(-1, 1) for result_set in train_sets], axis=1)
meta_test = np.concatenate([y_test_set.reshape(-1, 1) for y_test_set in test_sets], axis=1)

# Use logistic regression as the second-level model
bclf = LogisticRegression()
bclf.fit(meta_train, y)
test_pred = bclf.predict_proba(meta_test)[:, 1]

# Write the submission file
submit = test[['sid']].copy()
submit['label'] = (test_pred >= 0.5).astype(int)
print(submit['label'].value_counts())
submit.to_csv("A_Simple_Stacking_Model.csv", index=False)

# Save the predicted probabilities as well, for later model blending
df_sub = pd.concat([test['sid'], pd.Series(test_pred)], axis=1)
df_sub.columns = ['sid', 'label']
df_sub.to_csv('A_Simple_Stacking_Model_proba.csv', sep=',', index=False)
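The script above passes hard 0/1 predictions from `predict` to the second-level model. A common variant passes out-of-fold probabilities instead. The sketch below shows that variant on synthetic data; it is an assumption-laden illustration, not the author's method: the helper `oof_probabilities` is hypothetical, and a decision tree and a logistic regression from scikit-learn stand in for xgb, lgb and catboost so that the example runs without those libraries.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold
from sklearn.tree import DecisionTreeClassifier


def oof_probabilities(clf, X_train, y_train, X_test, n_folds=5):
    """Out-of-fold positive-class probabilities plus fold-averaged test ones."""
    oof = np.zeros(len(X_train))
    test_folds = np.zeros((len(X_test), n_folds))
    kf = KFold(n_splits=n_folds, shuffle=True, random_state=2019)
    for i, (tr_idx, val_idx) in enumerate(kf.split(X_train)):
        clf.fit(X_train[tr_idx], y_train[tr_idx])
        # Each training row is scored by a model that never saw it.
        oof[val_idx] = clf.predict_proba(X_train[val_idx])[:, 1]
        test_folds[:, i] = clf.predict_proba(X_test)[:, 1]
    return oof, test_folds.mean(axis=1)


# Synthetic stand-in data: 500 training rows and 100 test rows.
X, y = make_classification(n_samples=600, n_features=10, random_state=0)
X_tr, y_tr, X_te = X[:500], y[:500], X[500:]

base_models = [
    DecisionTreeClassifier(max_depth=3, random_state=0),
    LogisticRegression(max_iter=1000),
]
outputs = [oof_probabilities(m, X_tr, y_tr, X_te) for m in base_models]
meta_train = np.column_stack([oof for oof, _ in outputs])
meta_test = np.column_stack([tst for _, tst in outputs])

# Second-level model trained on the first-level probabilities.
meta_model = LogisticRegression()
meta_model.fit(meta_train, y_tr)
test_pred = meta_model.predict_proba(meta_test)[:, 1]
print(meta_train.shape, meta_test.shape, test_pred[:3])
```

Probabilities give the second-level model more information than three binary votes, although whether that helps on this dataset would need to be checked online.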

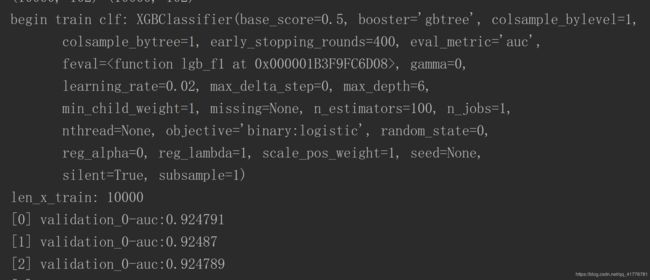

XGB output:

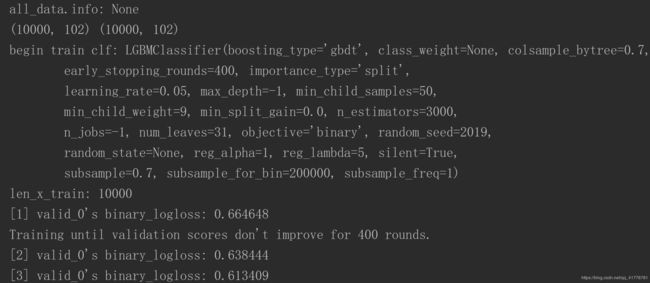

lgb output:

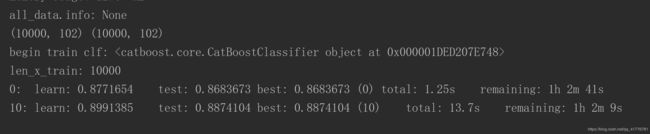

catboost output: