Ceph Monitor Paxos Implementation

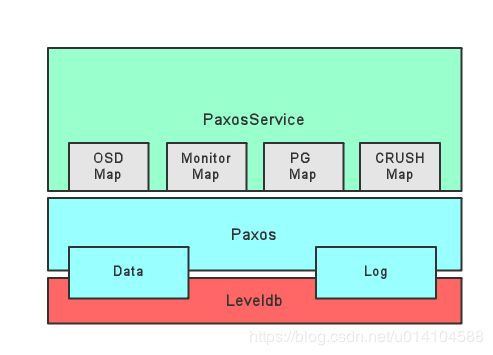

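The Monitor's Paxos implementation consists of three layers: LevelDB, Paxos and PaxosService.

- LevelDB (RocksDB in newer Ceph releases) provides the underlying storage. It is also the channel through which PaxosService hands data to Paxos.
- Paxos implements the Paxos algorithm itself.
- PaxosService provides the services built on top of Paxos, such as OSDMap, MonitorMap, PGMap and CRUSHMap.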
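The following minimal C++ sketch shows the storage layer as the two layers above it see it. Every key lives under a service prefix ("monmap", "paxos", ...), and changes are batched in a transaction before being applied.

ToyStore and ToyTransaction are hypothetical stand-ins for MonitorDBStore and MonitorDBStore::Transaction. A std::map replaces LevelDB/RocksDB, and values are plain strings rather than bufferlists.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for MonitorDBStore::Transaction: a batch of puts,
// each addressed as (prefix, key), like t->put(prefix, key, value).
struct ToyTransaction {
  struct Op {
    std::string prefix, key, value;
  };
  std::vector<Op> ops;

  void put(const std::string& prefix, const std::string& key,
           const std::string& value) {
    ops.push_back({prefix, key, value});
  }
};

// Hypothetical stand-in for MonitorDBStore; a std::map replaces LevelDB/RocksDB.
class ToyStore {
 public:
  // Apply every queued put. A real backend commits the batch atomically;
  // this toy has no failure path, so applying in order is equivalent.
  void apply_transaction(const ToyTransaction& t) {
    for (const auto& op : t.ops) data_[{op.prefix, op.key}] = op.value;
  }

  // Look up prefix/key; returns false if the key was never written.
  bool get(const std::string& prefix, const std::string& key,
           std::string* out) const {
    auto it = data_.find({prefix, key});
    if (it == data_.end()) return false;
    *out = it->second;
    return true;
  }

 private:
  std::map<std::pair<std::string, std::string>, std::string> data_;
};
```

Keeping the prefix separate from the key mirrors how one store can be shared by every PaxosService and by Paxos itself without key collisions.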

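The following uses MonitorMap as an example to show how PaxosService and Paxos work together. When a monitor is added with ceph mon add, the monitor sees that the command prefix is "mon add" and adds the new node to pending_map, as follows: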

if (!prepare_update(op)) // calls bool MonmapMonitor::prepare_command(MonOpRequestRef op)

if (prefix == "mon add")

pending_map.add(name, addr);

pending_map.last_changed = ceph_clock_now();

propose = true; // the change must be proposed to the other monitors

else if (prefix == "mon remove" || prefix == "mon rm")

...

mon->reply_command(op, err, rs, get_last_committed());

return propose;

return true;

// propose_pending() is called whether the proposal is made immediately or delayed

propose_pending();

MonitorDBStore::TransactionRef t = paxos->get_pending_transaction();

return pending_proposal;

encode_pending(t);

// encode pending_map

pending_map.encode(bl, mon->get_quorum_con_features());

put_version(t, pending_map.epoch, bl);

t->put(get_service_name(), ver, bl); // MonmapMonitor's service_name is "monmap"

put_last_committed(t, pending_map.epoch);

t->put(get_service_name(), last_committed_name, ver);

have_pending = false;

proposing = true;

paxos->queue_pending_finisher(new C_Committed(this)); // C_Committed has a finish() callback, but it is not invoked yet

paxos->trigger_propose();

if (is_active()) // return state == STATE_ACTIVE;

propose_pending();

pending_proposal->encode(bl);

committing_finishers.swap(pending_finishers);

state = STATE_UPDATING;

begin(bl);

return true;

else

return false;

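As shown above, when pending_map changes, two pairs are written into Paxos's pending MonitorDBStore transaction (pending_proposal):

- <“monmap”+pending_map.epoch, encoded pending_map>
- <“monmap”+“last_committed”, pending_map.epoch>

At this point they are only buffered. They reach the database when Paxos commits the proposal.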
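The sketch below models this step under simplifying assumptions:

- ToyMonMap, create_pending, prepare_mon_add and the string encoding are hypothetical.
- The transaction is a plain vector of puts.
- As in the excerpt, two keys are written under the "monmap" prefix.
- Bumping the epoch when the pending map is created reflects our understanding of MonmapMonitor::create_pending() and should be checked against the source.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical toy types; Ceph uses MonMap, bufferlist and a real transaction.
struct Put {
  std::string prefix, key, value;
};
using Transaction = std::vector<Put>;

struct ToyMonMap {
  std::uint64_t epoch = 1;
  std::vector<std::string> mons;

  // Stand-in for MonMap::encode(); the real encoding is binary.
  std::string encode() const {
    std::string out = "e" + std::to_string(epoch) + ":";
    for (const auto& m : mons) out += m + ";";
    return out;
  }
};

// Assumed behaviour of create_pending(): copy the committed map, bump the epoch.
ToyMonMap create_pending(const ToyMonMap& current) {
  ToyMonMap pending = current;
  pending.epoch++;
  return pending;
}

// Mirrors the "mon add" branch of prepare_command(): only pending_map changes.
bool prepare_mon_add(ToyMonMap& pending_map, const std::string& name) {
  pending_map.mons.push_back(name);
  return true;  // propose = true
}

// Mirrors encode_pending(): put_version() plus put_last_committed().
void encode_pending(const ToyMonMap& pending_map, Transaction& t) {
  const std::string service = "monmap";  // get_service_name()
  t.push_back({service, std::to_string(pending_map.epoch), pending_map.encode()});
  t.push_back({service, "last_committed", std::to_string(pending_map.epoch)});
}
```

The committed map is never touched here. Only the copy in pending_map changes, and nothing is persisted until Paxos commits.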

Paxos implementation

Monitors are divided into one leader and several peons, and only the leader can initiate a proposal. The monmap update is used below to analyze the Paxos implementation.

The leader sends an OP_BEGIN message to each peon:

begin(new_value);

accepted.insert(mon->rank);

auto t(std::make_shared<MonitorDBStore::Transaction>());

t->put(get_name(), last_committed+1, new_value);

t->put(get_name(), "pending_v", last_committed + 1);

t->put(get_name(), "pending_pn", accepted_pn);

get_store()->apply_transaction(t);

// send OP_BEGIN to every peon

for (set<int>::const_iterator p = mon->get_quorum().begin(); p != mon->get_quorum().end(); ++p)

if (*p == mon->rank)

continue;

MMonPaxos *begin = new MMonPaxos(mon->get_epoch(), MMonPaxos::OP_BEGIN, ceph_clock_now());

begin->values[last_committed+1] = new_value;

begin->last_committed = last_committed;

begin->pn = accepted_pn;

mon->messenger->send_message(begin, mon->monmap->get_inst(*p));

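last_committed is the version of the most recently committed value, so the next value to be committed has version last_committed+1. A new accepted_pn is generated after every successful election, and it stays the same until the next election.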
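Below is a hedged toy of the leader side of begin(). ToyLeader, BeginMsg and the string-keyed paxos_store are illustrative assumptions. Messages are returned instead of being sent through a messenger, and the store is updated directly rather than through a transaction.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical OP_BEGIN payload: proposal number, sender's last_committed,
// and the proposed value keyed by version.
struct BeginMsg {
  int to;
  std::uint64_t pn;
  std::uint64_t last_committed;
  std::map<std::uint64_t, std::string> values;
};

// Hypothetical toy of the leader's state; paxos_store models keys under "paxos".
struct ToyLeader {
  int rank = 0;
  std::uint64_t last_committed = 0;
  std::uint64_t accepted_pn = 0;  // chosen after an election, fixed until the next
  std::set<int> quorum;
  std::set<int> accepted;         // ranks that accepted the current proposal
  std::map<std::string, std::string> paxos_store;

  // Mirrors begin(): persist the proposal locally, then build one OP_BEGIN per peon.
  std::vector<BeginMsg> begin(const std::string& new_value) {
    accepted.clear();
    accepted.insert(rank);  // the leader accepts its own proposal
    const std::uint64_t v = last_committed + 1;
    paxos_store[std::to_string(v)] = new_value;
    paxos_store["pending_v"] = std::to_string(v);
    paxos_store["pending_pn"] = std::to_string(accepted_pn);

    std::vector<BeginMsg> out;
    for (int p : quorum) {
      if (p == rank) continue;  // no message to ourselves
      BeginMsg m{p, accepted_pn, last_committed, {}};
      m.values[v] = new_value;
      out.push_back(m);
    }
    return out;
  }
};
```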

When a peon receives OP_BEGIN, it handles it as follows:

void Paxos::handle_begin(MonOpRequestRef op)

MMonPaxos *begin = static_cast<MMonPaxos*>(op->get_req());

// if the sender's pn is lower than our accepted_pn, the proposal comes from an old leader

if (begin->pn < accepted_pn)

op->mark_paxos_event("have higher pn, ignore");

return ;

state = STATE_UPDATING;

// the pending version is exactly last_committed+1: only one value can be in flight at a time

version_t v = last_committed+1;

auto t(std::make_shared<MonitorDBStore::Transaction>());

t->put(get_name(), v, begin->values[v]);

t->put(get_name(), "pending_v", v);

t->put(get_name(), "pending_pn", accepted_pn);

get_store()->apply_transaction(t);

// reply with an accept message

MMonPaxos *accept = new MMonPaxos(mon->get_epoch(), MMonPaxos::OP_ACCEPT, ceph_clock_now());

accept->pn = accepted_pn;

accept->last_committed = last_committed;

begin->get_connection()->send_message(accept);
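The peon side can be sketched the same way, with these assumptions:

- It needs C++17 for std::optional.
- ToyPeon, BeginMsg and AcceptMsg are hypothetical.
- The check that the message actually carries version last_committed+1 is a toy safeguard, not something shown in the excerpt.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <optional>
#include <string>

// Hypothetical message types for OP_BEGIN and OP_ACCEPT.
struct BeginMsg {
  std::uint64_t pn;
  std::uint64_t last_committed;
  std::map<std::uint64_t, std::string> values;
};
struct AcceptMsg {
  std::uint64_t pn;
  std::uint64_t last_committed;
};

// Hypothetical toy of a peon; paxos_store models keys under "paxos".
struct ToyPeon {
  std::uint64_t last_committed = 0;
  std::uint64_t accepted_pn = 0;
  std::map<std::string, std::string> paxos_store;

  // Mirrors handle_begin(): returns the OP_ACCEPT reply, or nothing if ignored.
  std::optional<AcceptMsg> handle_begin(const BeginMsg& begin) {
    if (begin.pn < accepted_pn) return std::nullopt;  // proposal from an old leader
    const std::uint64_t v = last_committed + 1;       // one value in flight
    auto it = begin.values.find(v);
    if (it == begin.values.end()) return std::nullopt;  // toy safeguard
    paxos_store[std::to_string(v)] = it->second;
    paxos_store["pending_v"] = std::to_string(v);
    paxos_store["pending_pn"] = std::to_string(accepted_pn);
    return AcceptMsg{accepted_pn, last_committed};
  }
};
```

A stale proposal gets no reply at all, rather than an explicit rejection. Without that peon's accept the leader cannot reach the commit condition.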

When the leader receives OP_ACCEPT:

void Paxos::handle_accept(MonOpRequestRef op)

// the peon's last_committed is too old (reply from an earlier round): ignore it

if (last_committed > 0 && accept->last_committed < last_committed-1)

return;

// record which monitors have replied

accepted.insert(from);

// once every monitor in the quorum has replied

if (accepted == mon->get_quorum())

commit_start();

auto t(std::make_shared<MonitorDBStore::Transaction>());

t->put(get_name(), "last_committed", last_committed + 1);

decode_append_transaction(t, new_value);

get_store()->queue_transaction(t, new C_Committed(this)); // C_Committed::finish() calls commit_finish() once the transaction has been applied

paxos->commit_finish();

last_committed++;

first_committed = get_store()->get(get_name(), "first_committed");

for (set<int>::const_iterator p = mon->get_quorum().begin(); p != mon->get_quorum().end(); ++p)

if (*p == mon->rank) continue;

// send OP_COMMIT

MMonPaxos *commit = new MMonPaxos(mon->get_epoch(), MMonPaxos::OP_COMMIT, ceph_clock_now());

commit->values[last_committed] = new_value;

commit->pn = accepted_pn;

commit->last_committed = last_committed;

mon->messenger->send_message(commit, mon->monmap->get_inst(*p));

new_value.clear();

state = STATE_REFRESH;

do_refresh()

mon->refresh_from_paxos(&need_bootstrap);

for (int i = 0; i < PAXOS_NUM; ++i)

// MonmapMonitor's refresh() is used as the example

paxos_service[i]->refresh(need_bootstrap);

cached_first_committed = mon->store->get(get_service_name(), first_committed_name);

cached_last_committed = mon->store->get(get_service_name(), last_committed_name);

update_from_paxos(need_bootstrap);

version_t version = get_last_committed();

return cached_last_committed;

if (version <= mon->monmap->get_epoch())

return;

// for MonmapMonitor, cached_last_committed is the epoch of the committed pending_map;

// if it differs from the epoch of the current monmap, the monitor must bootstrap (re-elect)

if (need_bootstrap && version != mon->monmap->get_epoch())

*need_bootstrap = true;

// read the encoded map for that version

get_version(version, monmap_bl);

return mon->store->get(get_service_name(), ver, bl);

// decode the latest map into mon->monmap

mon->monmap->decode(monmap_bl);

if (need_bootstrap)

mon->bootstrap();

return false;

return true;
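The commit condition is easy to miss: the leader waits for accepts from every quorum member, not just a majority. The toy tracker below models only that decision, with these assumptions:

- ToyAcceptTracker and its updating flag are hypothetical.
- The pn comparison reflects our reading of the real handle_accept(); it is not in the excerpt above.
- commit_start()/commit_finish() are collapsed into incrementing last_committed.

```cpp
#include <cassert>
#include <cstdint>
#include <set>

// Hypothetical toy of the leader's accept bookkeeping.
struct ToyAcceptTracker {
  std::uint64_t last_committed = 0;
  std::uint64_t accepted_pn = 0;
  bool updating = true;  // toy stand-in for STATE_UPDATING
  std::set<int> quorum;
  std::set<int> accepted;

  // Returns true when this accept completes the round (commit_start()).
  bool handle_accept(int from, std::uint64_t pn, std::uint64_t peer_last_committed) {
    if (pn != accepted_pn) return false;  // reply to some other leader's proposal
    // Stale reply from an older round: ignore.
    if (last_committed > 0 && peer_last_committed < last_committed - 1) return false;
    if (!updating) return false;          // nothing in flight
    accepted.insert(from);
    if (accepted != quorum) return false; // all members, not a majority
    updating = false;                     // toy: leave UPDATING once commit starts
    last_committed++;                     // what commit_start()/commit_finish() persist
    return true;
  }
};
```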

When a peon receives OP_COMMIT:

void Paxos::handle_commit(MonOpRequestRef op)

store_state(commit);

auto t(std::make_shared<MonitorDBStore::Transaction>());

map<version_t,bufferlist>::iterator start = m->values.begin();

map<version_t,bufferlist>::iterator end = start;

while (end != m->values.end() && end->first <= m->last_committed)

last_committed = end->first;

++end;

if (start != end)

// persist the new last_committed

t->put(get_name(), "last_committed", last_committed);

map<version_t,bufferlist>::iterator it;

for (it = start; it != end; ++it)

t->put(get_name(), it->first, it->second);

decode_append_transaction(t, it->second);

get_store()->apply_transaction(t);

(void)do_refresh();

mon->refresh_from_paxos(&need_bootstrap);

for (int i = 0; i < PAXOS_NUM; ++i)

paxos_service[i]->refresh(need_bootstrap);

cached_first_committed = mon->store->get(get_service_name(), first_committed_name);

cached_last_committed = mon->store->get(get_service_name(), last_committed_name);

update_from_paxos(need_bootstrap);

version_t version = get_last_committed();

return cached_last_committed;

if (version <= mon->monmap->get_epoch())

return;

// if the monmap changed, the monitor must bootstrap (re-elect)

if (need_bootstrap && version != mon->monmap->get_epoch())

*need_bootstrap = true;

get_version(version, monmap_bl);

return mon->store->get(get_service_name(), ver, bl);

mon->monmap->decode(monmap_bl);

if (need_bootstrap)

mon->bootstrap();

return false;

return true;
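The peon's commit path comes down to two decisions: which versions from OP_COMMIT to persist, and whether the new monmap forces a bootstrap. The hypothetical ToyPeonState below models both. Values are strings, and monmap_epoch stands in for mon->monmap->get_epoch().

Note that after the early return, version != epoch always holds. So in this excerpt, any newer monmap triggers a bootstrap.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical OP_COMMIT payload.
struct CommitMsg {
  std::uint64_t last_committed;
  std::map<std::uint64_t, std::string> values;
};

// Hypothetical toy of a peon's state during the commit path.
struct ToyPeonState {
  std::uint64_t last_committed = 0;
  std::uint64_t monmap_epoch = 0;                    // epoch of mon->monmap
  std::map<std::uint64_t, std::string> paxos_store;  // version -> value

  // Mirrors the loop in store_state(): keep values up to m.last_committed.
  void store_state(const CommitMsg& m) {
    auto start = m.values.begin();
    auto end = start;
    while (end != m.values.end() && end->first <= m.last_committed) {
      last_committed = end->first;
      ++end;
    }
    for (auto it = start; it != end; ++it) paxos_store[it->first] = it->second;
  }

  // Mirrors update_from_paxos(): returns true if do_refresh() would bootstrap.
  bool update_from_paxos(std::uint64_t committed_epoch) {
    if (committed_epoch <= monmap_epoch) return false;      // nothing new
    bool need_bootstrap = committed_epoch != monmap_epoch;  // always true here
    monmap_epoch = committed_epoch;                         // stands in for decode()
    return need_bootstrap;
  }
};
```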

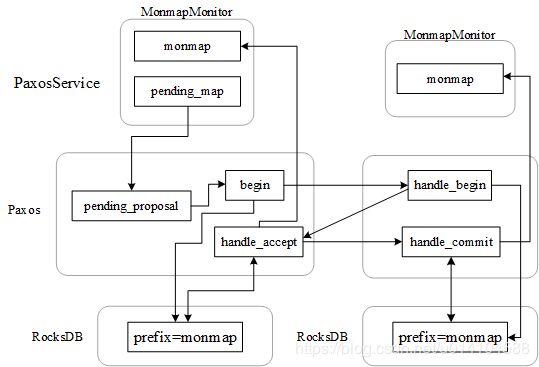

From the code above, the monmap update flow of a Ceph monitor in normal operation is as follows (see the figure above):

(1) The leader monitor receives a client request to modify the monmap and records the change temporarily in pending_map.

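(2) pending_map is encoded into bl. Two pairs are then added to the MonitorDBStore transaction represented by pending_proposal: <“monmap”+pending_map.epoch, bl> and <“monmap”+“last_committed”, pending_map.epoch>. Below, the "monmap" prefix is omitted; the Paxos-layer keys in later steps live under the "paxos" prefix. pending_proposal holds the data that must be synchronized across the monitor cluster.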

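(3) The leader calls the Paxos layer's begin() to start the first phase of the algorithm. begin() records <last_committed+1, encoded pending_proposal>, <“pending_v”, last_committed+1> and <“pending_pn”, accepted_pn> in the database, then sends OP_BEGIN to every peon.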

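(4) When a peon receives OP_BEGIN, it ignores the message if the proposal number is lower than the highest one it has accepted. Otherwise, like the leader, it records <last_committed+1, encoded pending_proposal>, <“pending_v”, last_committed+1> and <“pending_pn”, accepted_pn>, and replies with OP_ACCEPT.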

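(5) Once the leader has received OP_ACCEPT from every peon, it writes <“last_committed”, last_committed+1> to the database. The decoded proposal (the monmap keys from step 2) goes in the same write. The leader then sends OP_COMMIT to the peons and reads the latest monmap version from the database.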

(6) When a peon receives OP_COMMIT, it writes the newly committed content to its RocksDB database and updates its monmap.
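Steps (3) to (6) can be condensed into a single-process simulation. This is a sketch under strong assumptions:

- Node and run_round are hypothetical.
- Direct function calls replace the OP_BEGIN/OP_ACCEPT/OP_COMMIT messages.
- There are no failures or timeouts.
- All nodes start with the same last_committed.

It does preserve the two rules traced above: stale proposal numbers are rejected, and the commit happens only after every quorum member has accepted.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical toy monitor: proposals are buffered in `pending` until commit.
struct Node {
  int rank;
  std::uint64_t accepted_pn;
  std::uint64_t last_committed = 0;
  std::map<std::uint64_t, std::string> pending;    // written at begin
  std::map<std::uint64_t, std::string> committed;  // written at commit
  std::string monmap;                              // decoded, in-memory map

  // Steps (3)/(4): record the proposal; "reply" accept unless pn is stale.
  bool on_begin(std::uint64_t pn, const std::string& value) {
    if (pn < accepted_pn) return false;
    pending[last_committed + 1] = value;
    return true;
  }
  // Steps (5)/(6): make the proposal durable and refresh the in-memory map.
  void on_commit(std::uint64_t version, const std::string& value) {
    committed[version] = value;
    pending.erase(version);
    last_committed = version;
    monmap = value;
  }
};

// Runs one round with nodes[0] as leader; returns true if the value committed.
bool run_round(std::vector<Node>& nodes, const std::string& new_monmap) {
  Node& leader = nodes[0];
  const std::uint64_t v = leader.last_committed + 1;
  std::set<int> quorum, accepted;
  for (const Node& n : nodes) quorum.insert(n.rank);

  leader.on_begin(leader.accepted_pn, new_monmap);  // leader accepts itself
  accepted.insert(leader.rank);
  for (std::size_t i = 1; i < nodes.size(); ++i)    // OP_BEGIN -> OP_ACCEPT
    if (nodes[i].on_begin(leader.accepted_pn, new_monmap))
      accepted.insert(nodes[i].rank);

  if (accepted != quorum) return false;              // every member must accept
  for (Node& n : nodes) n.on_commit(v, new_monmap);  // leader, then OP_COMMIT
  return true;
}
```

If a single peon has seen a higher proposal number, the round stalls and every node keeps its old monmap. In the real monitor, this situation is resolved by a new election rather than by retrying the same round.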