1. Ceph RBD features

Full and incremental snapshots

Thin provisioning

Copy-on-write clones

Dynamic resizing

2. Basic RBD usage

2.1 Creating an RBD pool

[root@ceph2 ceph]# ceph osd pool create rbd 64
pool 'rbd' created
[root@ceph2 ceph]# ceph osd pool application enable rbd rbd
enabled application 'rbd' on pool 'rbd'

2.2 Client authentication

[root@ceph2 ceph]# ceph auth get-or-create client.rbd -o ./ceph.client.rbd.keyring    # create the user and export its keyring
[root@ceph2 ceph]# cat !$
cat ./ceph.client.rbd.keyring
[client.rbd]
	key = AQD+Qo5cdS0mOBAA6bPb/KKzSkSvwCRfT0nLXA==
[root@ceph2 ceph]# scp ./ceph.client.rbd.keyring ceph1:/etc/ceph/    # copy the keyring to the client
[root@ceph1 ceph-ansible]# ceph -s --id rbd    # authentication fails: the user has no capabilities yet
2019-03-17 20:54:53.528212 7fb6082aa700  0 librados: client.rbd authentication error (13) Permission denied
[errno 13] error connecting to the cluster
[root@ceph2 ceph]# ceph auth get client.rbd    # show the user's key and capabilities
exported keyring for client.rbd
[client.rbd]
	key = AQD+Qo5cdS0mOBAA6bPb/KKzSkSvwCRfT0nLXA==
[root@ceph2 ceph]# ceph auth caps client.rbd mon 'allow r' osd 'allow rwx pool=rbd'    # grant capabilities
updated caps for client.rbd
[root@ceph2 ceph]# ceph auth get client.rbd    # the user now has access to the rbd pool
exported keyring for client.rbd
[client.rbd]
	key = AQD+Qo5cdS0mOBAA6bPb/KKzSkSvwCRfT0nLXA==
	caps mon = "allow r"
	caps osd = "allow rwx pool=rbd"
[root@ceph1 ceph-ansible]# ceph -s --id rbd    # authentication succeeds
  cluster:
    id:     35a91e48-8244-4e96-a7ee-980ab989d20d
    health: HEALTH_OK
  services:
    mon: 3 daemons, quorum ceph2,ceph3,ceph4
    mgr: ceph4(active), standbys: ceph2, ceph3
    osd: 9 osds: 9 up, 9 in
  data:
    pools:   2 pools, 192 pgs
    objects: 5 objects, 22678 bytes
    usage:   975 MB used, 133 GB / 134 GB avail
    pgs:     192 active+clean
[root@ceph1 ceph-ansible]# ceph osd pool ls --id rbd
testpool
rbd
[root@ceph1 ceph-ansible]# rbd ls rbd --id rbd
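The first `ceph -s` fails because `ceph auth get-or-create` was run without any caps, so client.rbd has a key but no permissions; after `ceph auth caps` the keyring gains `caps mon` and `caps osd` lines. As a minimal sketch, the check for missing caps can be written in Python. The helper names `parse_keyring` and `missing_caps` are hypothetical (not part of Ceph), and only the simple `key = value` layout printed above is handled, not the full keyring format.

```python
# Hypothetical helpers (not part of Ceph): parse keyring text such as the
# output of `ceph auth get client.rbd` and report missing capabilities.


def parse_keyring(text: str) -> dict:
    """Return {entity: {field: value}} for simple keyring text."""
    entries = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("[") and line.endswith("]"):
            current = entries.setdefault(line[1:-1], {})
        elif current is not None and "=" in line:
            # split on the first "=" only: base64 keys end with "=="
            field, _, value = line.partition("=")
            current[field.strip()] = value.strip().strip('"')
    return entries


def missing_caps(entry: dict, required=("mon", "osd")) -> list:
    """Return the daemon types in `required` that have no `caps <type>` line."""
    return [daemon for daemon in required if f"caps {daemon}" not in entry]


before = "[client.rbd]\n\tkey = AQD+Qo5cdS0mOBAA6bPb/KKzSkSvwCRfT0nLXA==\n"
after = before + '\tcaps mon = "allow r"\n\tcaps osd = "allow rwx pool=rbd"\n'

print(missing_caps(parse_keyring(before)["client.rbd"]))  # ['mon', 'osd']
print(missing_caps(parse_keyring(after)["client.rbd"]))   # []
```

Requiring both mon and osd caps is an assumption that fits this walkthrough (`mon 'allow r'` to talk to the cluster, osd access to the rbd pool); other workloads may need different caps.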

2.3 Creating a block device

[root@ceph1 ceph-ansible]# rbd create --size 1G rbd/testimg --id rbd
[root@ceph1 ceph-ansible]# rbd ls rbd --id rbd
testimg

2.4 Mapping the block device

[root@ceph1 ceph-ansible]# rbd map rbd/testimg --id rbd    # mapping fails
rbd: sysfs write failed
RBD image feature set mismatch. Try disabling features unsupported by the kernel with "rbd feature disable".
In some cases useful info is found in syslog - try "dmesg | tail".
rbd: map failed: (6) No such device or address
[root@ceph1 ceph-ansible]# rbd info rbd/testimg --id rbd
rbd image 'testimg':
	size 1024 MB in 256 objects
	order 22 (4096 kB objects)
	block_name_prefix: rbd_data.fb1574b0dc51
	format: 2
	features: layering, exclusive-lock, object-map, fast-diff, deep-flatten    # the kernel RBD client (krbd) on this host does not support all of these features; unsupported ones must be disabled or the image cannot be mapped (krbd gained them feature by feature in later kernels, e.g. exclusive-lock in 4.9, object-map and fast-diff in 5.3)
	flags:
	create_timestamp: Sun Mar 17 21:08:01 2019
[root@ceph1 ceph-ansible]# rbd feature disable rbd/testimg exclusive-lock, object-map, fast-diff, deep-flatten --id rbd    # disable every feature except layering
[root@ceph1 ceph-ansible]# rbd info rbd/testimg --id rbd
rbd image 'testimg':
	size 1024 MB in 256 objects    # 1024 MB, split into 256 objects
	order 22 (4096 kB objects)    # each object is 2^22 bytes = 4 MB
	block_name_prefix: rbd_data.fb1574b0dc51    # name prefix of the RADOS objects that hold this image's data
	format: 2    # RBD image format 2 (the default); the legacy format 1 does not support cloning
	features: layering    # only layering remains enabled
	flags:
	create_timestamp: Sun Mar 17 21:08:01 2019
[root@ceph1 ceph-ansible]# rbd map rbd/testimg --id rbd    # mapping succeeds
/dev/rbd0
[root@ceph1 ceph-ansible]# fdisk -l    # a new /dev/rbd block device appears
... (output omitted)
Disk /dev/rbd0: 1073 MB, 1073741824 bytes, 2097152 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes
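The numbers in `rbd info` follow from the order: each object is 2^order bytes, so order 22 gives 4194304-byte (4096 kB) objects, and a 1 GiB image needs 1073741824 / 4194304 = 256 of them; the same 4194304 shows up as the I/O size reported by fdisk. A small sketch of that arithmetic, assuming default striping (stripe unit equal to the object size); `object_layout` is an illustrative name, not an rbd API.

```python
def object_layout(image_size: int, order: int) -> tuple:
    """Return (object_size, object_count) for an image of image_size bytes.

    Assumes default striping: each object holds 2**order consecutive bytes.
    """
    object_size = 1 << order
    object_count = -(-image_size // object_size)  # ceiling division
    return object_size, object_count


size = 1 << 30                       # rbd create --size 1G
obj_size, count = object_layout(size, 22)
print(obj_size // 1024, "kB objects,", count, "objects")  # 4096 kB objects, 256 objects
print(size // 512, "sectors of 512 bytes")                # 2097152 sectors of 512 bytes
```

The 2 GB images that appear later in the article (`2048 MB in 512 objects`) follow the same arithmetic.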

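2.5 Using the device on the client

About RBD clients

A Ceph client can mount RBD images with krbd, the native Linux kernel module.

Cloud and virtualization solutions such as OpenStack and libvirt use librbd to present RBD images to virtual machine instances as devices.

librbd cannot use the Linux page cache, so it includes its own in-memory cache, called the RBD cache.

The RBD cache uses memory on the client.

The RBD cache has two modes:

Write-back: data is written to the local cache first and flushed to the cluster later (for example, when the amount or age of dirty data reaches a limit).

Write-through: data is written directly to the cluster.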
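To make the difference between the two modes concrete, here is a toy model in Python. It is not how librbd is implemented: the class `ToyRbdCache` and its two dictionaries are hypothetical stand-ins for client memory and the RADOS objects. In write-back mode a write counts as done once it sits in the cache, so unflushed data lives only on the client; in write-through mode it reaches the backend immediately.

```python
from __future__ import annotations


class ToyRbdCache:
    """Toy model of RBD cache modes; NOT librbd's implementation.

    `backend` stands in for the RADOS objects in the cluster and `dirty`
    for written data still held only in client memory.
    """

    def __init__(self, write_back: bool):
        self.write_back = write_back
        self.backend: dict[int, bytes] = {}
        self.dirty: dict[int, bytes] = {}

    def write(self, block: int, data: bytes) -> None:
        if self.write_back:
            self.dirty[block] = data    # write-back: completes once cached
        else:
            self.backend[block] = data  # write-through: goes straight to the cluster

    def read(self, block: int) -> bytes | None:
        # newest data first: unflushed cache, then the cluster
        if block in self.dirty:
            return self.dirty[block]
        return self.backend.get(block)

    def flush(self) -> None:
        self.backend.update(self.dirty)  # push dirty data to the cluster
        self.dirty.clear()


wb, wt = ToyRbdCache(write_back=True), ToyRbdCache(write_back=False)
for cache in (wb, wt):
    cache.write(0, b"A boy and a girl")
print(0 in wb.backend, 0 in wt.backend)  # False True
wb.flush()
print(0 in wb.backend)                   # True
```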

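RBD cache parameters and tuning

RBD cache parameters must be added to the [client] section of the Ceph configuration file on the machine that issues the I/O requests.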
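A sketch that renders such a [client] section with Python's configparser. The option names (rbd cache, rbd cache size, rbd cache max dirty, rbd cache target dirty, rbd cache writethrough until flush) are real Ceph options; the values are what I understand to be the upstream defaults and should be checked against the documentation for your release. The checks mirror the documented constraints that max dirty must be smaller than the cache size and target dirty smaller than max dirty; a max dirty of 0 switches the cache to write-through. `check` and `render_client_section` are hypothetical helpers.

```python
import configparser
import io

# Starting values, believed to match upstream defaults; verify for your release.
CACHE_SETTINGS = {
    "rbd cache": "true",
    "rbd cache size": str(32 * 1024 * 1024),         # 33554432 bytes
    "rbd cache max dirty": str(24 * 1024 * 1024),    # 0 would mean write-through
    "rbd cache target dirty": str(16 * 1024 * 1024),
    "rbd cache writethrough until flush": "true",
}


def check(settings: dict) -> None:
    """Enforce the documented ordering: target dirty < max dirty < cache size."""
    size = int(settings["rbd cache size"])
    max_dirty = int(settings["rbd cache max dirty"])
    target_dirty = int(settings["rbd cache target dirty"])
    if not max_dirty < size:
        raise ValueError("rbd cache max dirty must be smaller than rbd cache size")
    if max_dirty and not target_dirty < max_dirty:
        raise ValueError("rbd cache target dirty must be smaller than rbd cache max dirty")


def render_client_section(settings: dict) -> str:
    """Return the settings as a [client] section in ceph.conf syntax."""
    check(settings)
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep option names exactly as written
    parser["client"] = settings
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


print(render_client_section(CACHE_SETTINGS))
```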

Steps:

[root@ceph1 ceph-ansible]# mkfs.xfs /dev/rbd0

[root@ceph1 ceph-ansible]# mkdir /mnt/ceph

[root@ceph1 ceph-ansible]# mount /dev/rbd0 /mnt/ceph

[root@ceph1 ceph-ansible]# df -hT

Try writing some data:

[root@ceph1 ceph-ansible]# cd /mnt/ceph/

[root@ceph1 ceph]# touch 111

[root@ceph1 ceph]# echo "A boy and a girl" > 111

[root@ceph1 ceph]# ll


The client mounts and uses the device normally.

2.6 Creating another block device for testing

[root@ceph1 ceph]# rbd create --size 1G rbd/cephrbd1 --id rbd

[root@ceph1 ceph]# rbd feature disable rbd/cephrbd1 exclusive-lock, object-map, fast-diff, deep-flatten --id rbd

[root@ceph1 ceph]# rbd info rbd/cephrbd1 --id rbd

[root@ceph1 ceph]# rbd ls --id rbd


[root@ceph1 ceph]# mkdir /mnt/ceph2

[root@ceph1 ceph]# rbd map rbd/cephrbd1 --id rbd


[root@ceph1 ceph]# mkfs.xfs /dev/rbd1

[root@ceph1 ceph]# mount /dev/rbd1 /mnt/ceph2

[root@ceph1 ceph]# df -hT

2.7 Other block device operations

Under the hood, a block device is still stored as objects: a 1024 MB image is split into 256 objects of 4 MB each.
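Because the image is cut into fixed-size objects, any byte offset maps to exactly one object. A minimal sketch, assuming the default layout used here (no custom striping, order 22) and the `rbd_data.<id>.<16 hex digits>` names visible in the rados listing below; `locate` is a hypothetical helper.

```python
def locate(offset: int, prefix: str, order: int = 22) -> tuple:
    """Map a byte offset in an image to (object name, offset inside that object).

    Assumes default striping (stripe unit = object size, stripe count = 1),
    as for the images in this article.
    """
    object_size = 1 << order
    index, inner = divmod(offset, object_size)
    return f"{prefix}.{index:016x}", inner


print(locate(0, "rbd_data.fb1574b0dc51"))
# ('rbd_data.fb1574b0dc51.0000000000000000', 0)
print(locate(1023 * 1024 * 1024, "rbd_data.fb1574b0dc51"))
# ('rbd_data.fb1574b0dc51.00000000000000ff', 3145728)
```

The last object of a 1 GiB image therefore has index 0xff (255), which matches the highest suffix in the listing.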


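All of an image's data objects share a common name prefix (block_name_prefix), so users can be granted access based on the object prefix.

The underlying objects can be listed with rados: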

[root@ceph1 ceph]# rados -p rbd ls --id rbd

rbd_header.fb1574b0dc51
rbd_data.fb2074b0dc51.000000000000001f
rbd_data.fb1574b0dc51.00000000000000ba
rbd_data.fb2074b0dc51.00000000000000ff
rbd_data.fb1574b0dc51.0000000000000000
rbd_data.fb1574b0dc51.000000000000007e
rbd_data.fb1574b0dc51.000000000000007d
rbd_directory
rbd_header.fb2074b0dc51
rbd_data.fb2074b0dc51.000000000000005d
rbd_data.fb2074b0dc51.00000000000000d9
rbd_data.fb2074b0dc51.000000000000009b
rbd_data.fb1574b0dc51.000000000000001f
rbd_info
rbd_data.fb2074b0dc51.000000000000003e
rbd_data.fb1574b0dc51.00000000000000ff
rbd_data.fb2074b0dc51.000000000000007d
rbd_id.testimg
rbd_data.fb2074b0dc51.000000000000007c
rbd_id.cephrbd1
rbd_data.fb1574b0dc51.00000000000000d9
rbd_data.fb2074b0dc51.000000000000007e
rbd_data.fb2074b0dc51.00000000000000ba
rbd_data.fb1574b0dc51.000000000000003e
rbd_data.fb1574b0dc51.000000000000007c
rbd_data.fb1574b0dc51.00000000000000f8
rbd_data.fb1574b0dc51.000000000000005d
rbd_data.fb2074b0dc51.00000000000000f8
rbd_data.fb1574b0dc51.0000000000000001
rbd_data.fb1574b0dc51.000000000000009b
rbd_data.fb2074b0dc51.0000000000000000
rbd_data.fb2074b0dc51.0000000000000001
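Only objects that have actually been written exist in RADOS, which is what thin provisioning means in practice. Counting the `rbd_data.<id>.*` entries per image id in the listing above gives 13 data objects for each of the two images (fb1574b0dc51 is testimg, per its block_name_prefix) out of 256 possible; 13 × 4096 KiB = 53248 KiB, which is consistent with the USED value that `rbd du` reports for testimg. A sketch of the counting, with a hypothetical function name:

```python
from collections import Counter


def data_objects_per_image(listing: str) -> Counter:
    """Count rbd_data.<image id>.<object index> entries per image id.

    `listing` is whitespace-separated `rados ls` output; header, id and
    directory objects are skipped.
    """
    counts = Counter()
    for name in listing.split():
        if name.startswith("rbd_data."):
            counts[name.split(".")[1]] += 1
    return counts


OBJECT_SIZE_KIB = 4096  # order 22

sample = """rbd_header.fb1574b0dc51 rbd_data.fb1574b0dc51.0000000000000000
rbd_data.fb1574b0dc51.0000000000000001 rbd_id.testimg rbd_directory"""
for image_id, n in data_objects_per_image(sample).items():
    print(image_id, n, "objects, up to", n * OBJECT_SIZE_KIB, "KiB")
# fb1574b0dc51 2 objects, up to 8192 KiB
```

"Up to" because an existing object may itself be sparse, so object count × object size is an upper bound on allocated space.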

Checking the status

[root@ceph1 ceph]# rbd status rbd/testimg --id rbd    # check the status
Watchers:
	watcher=172.25.250.10:0/310641078 client.64285 cookie=1
[root@ceph1 ceph]# rbd status rbd/cephrbd1 --id rbd
Watchers:
	watcher=172.25.250.10:0/310641078 client.64285 cookie=2
[root@ceph1 ceph]# rbd rm testimg --id rbd    # try to delete: refused because the image is in use
2019-03-17 21:48:32.890752 7fac95ffb700 -1 librbd::image::RemoveRequest: 0x55785f1ee540 check_image_watchers: image has watchers - not removing
Removing image: 0% complete...failed.
rbd: error: image still has watchers
This means the image is still open or the client using it crashed. Try again after closing/unmapping it or waiting 30s for the crashed client to timeout.
[root@ceph1 ceph]# rbd du testimg --id rbd    # show provisioned and used space
warning: fast-diff map is not enabled for testimg. operation may be slow.
NAME    PROVISIONED   USED
testimg       1024M 53248k
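`rbd rm` refuses to delete an image while it has watchers, that is, while some client (here the krbd mapping on 172.25.250.10) still has it open; the usual order is umount, `rbd unmap`, then `rbd rm`. A sketch of a pre-check that extracts watchers from `rbd status` output, assuming the format shown above. `watchers` is a hypothetical helper, and the "Watchers: none" sample is the output I would expect for an unused image.

```python
def watchers(status_output: str) -> list:
    """Return the watcher addresses listed in `rbd status` output."""
    found = []
    for line in status_output.splitlines():
        line = line.strip()
        if line.startswith("watcher="):
            # "watcher=<addr> client.<id> cookie=<n>" -> "<addr>"
            found.append(line.split()[0][len("watcher="):])
    return found


status = "Watchers:\n\twatcher=172.25.250.10:0/310641078 client.64285 cookie=1\n"
print(watchers(status))            # ['172.25.250.10:0/310641078']
print(watchers("Watchers: none"))  # []
```

An empty list is the state to reach before running `rbd rm`.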

3. Copying and modifying RBD images

3.1 Copying an RBD image

[root@ceph1 ceph]# rbd cp testimg testimg-copy --id rbd
Image copy: 100% complete...done.
[root@ceph1 ceph]# rbd ls --id rbd
cephrbd1
testimg
testimg-copy

3.2 Mounting the copy on the client

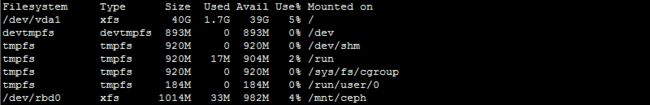

[root@ceph1 ceph]# rbd map rbd/testimg-copy --id rbd    # map the copied block device
/dev/rbd2
[root@ceph1 ceph]# mkdir /mnt/ceph3
[root@ceph1 ceph]# mount /dev/rbd2 /mnt/ceph3    # fails while the original is mounted: the copy carries the same XFS filesystem UUID, and XFS refuses to mount a duplicate UUID (mount -o nouuid would bypass this check)
mount: wrong fs type, bad option, bad superblock on /dev/rbd2, missing codepage or helper program, or other error
In some cases useful info is found in syslog - try dmesg | tail or so.
[root@ceph1 ceph]# cd
[root@ceph1 ~]# umount /mnt/ceph    # unmount the original device
[root@ceph1 ~]# mount /dev/rbd2 /mnt/ceph3    # mount again: succeeds
[root@ceph1 ~]# df -hT
Filesystem     Type      Size  Used Avail Use% Mounted on
/dev/vda1      xfs        40G  1.7G   39G   5% /
devtmpfs       devtmpfs  893M     0  893M   0% /dev
tmpfs          tmpfs     920M     0  920M   0% /dev/shm
tmpfs          tmpfs     920M   17M  904M   2% /run
tmpfs          tmpfs     920M     0  920M   0% /sys/fs/cgroup
tmpfs          tmpfs     184M     0  184M   0% /run/user/0
/dev/rbd1      xfs       2.0G   33M  2.0G   2% /mnt/ceph2
/dev/rbd2      xfs       2.0G   33M  2.0G   2% /mnt/ceph3

3.3 Checking the data

[root@ceph1 ~]# cd /mnt/ceph3
[root@ceph1 ceph3]# ll    # the copy contains the original data
total 4
-rw-r--r-- 1 root root 17 Mar 17 21:21 111

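3.4 Deleting and restoring RBD images

[root@ceph1 ceph3]# rbd trash mv testimg --id rbd    # move the image to the trash instead of deleting it outright, so it can still be restored
[root@ceph1 ceph3]# rbd ls --id rbd    # testimg is now in the trash
cephrbd1
testimg-copy
[root@ceph1 ceph3]# rbd trash ls --id rbd    # the trash holds one block device
fb1574b0dc51 testimg
[root@ceph1 ceph3]# rbd trash restore fb1574b0dc51 --id rbd    # restore it from the trash by image id
[root@ceph1 ceph3]# rbd ls --id rbd    # restored
cephrbd1
testimg
testimg-copy

To delete an image from the trash permanently: rbd trash rm [pool-name/]image-id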
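`rbd trash restore` and `rbd trash rm` take the image id, not the image name; `rbd trash ls` prints id/name pairs. The id fb1574b0dc51 is the same one that appears in testimg's block_name_prefix. A minimal lookup sketch, assuming one `<id> <name>` pair per line as shown above; `trash_id` is a hypothetical helper.

```python
def trash_id(trash_ls_output: str, image_name: str) -> str:
    """Return the id of `image_name` from `rbd trash ls` output ("<id> <name>" per line)."""
    for line in trash_ls_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[1] == image_name:
            return parts[0]
    raise KeyError(f"{image_name} is not in the trash")


image_id = trash_id("fb1574b0dc51 testimg\n", "testimg")
print(f"rbd trash restore {image_id} --id rbd")  # rbd trash restore fb1574b0dc51 --id rbd
```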

4. RBD snapshot operations

4.1 Creating a snapshot

[root@ceph1 ceph]# rbd snap create testimg-copy@snap1 --id rbd
[root@ceph1 ceph]# rbd snap ls testimg-copy --id rbd
SNAPID NAME  SIZE    TIMESTAMP
     4 snap1 2048 MB Sun Mar 17 22:08:12 2019
[root@ceph1 ceph]# rbd showmapped --id rbd
id pool image        snap device
0  rbd  testimg      -    /dev/rbd0
1  rbd  cephrbd1     -    /dev/rbd1
2  rbd  testimg-copy -    /dev/rbd2
[root@ceph1 ceph3]# df -hT
Filesystem     Type      Size  Used Avail Use% Mounted on
/dev/vda1      xfs        40G  1.7G   39G   5% /
devtmpfs       devtmpfs  893M     0  893M   0% /dev
tmpfs          tmpfs     920M     0  920M   0% /dev/shm
tmpfs          tmpfs     920M   17M  904M   2% /run
tmpfs          tmpfs     920M     0  920M   0% /sys/fs/cgroup
tmpfs          tmpfs     184M     0  184M   0% /run/user/0
/dev/rbd1      xfs       2.0G   33M  2.0G   2% /mnt/ceph2
/dev/rbd2      xfs       2.0G   33M  2.0G   2% /mnt/ceph3
[root@ceph1 ceph]# cd /mnt/ceph3
[root@ceph1 ceph3]# ll
total 4
-rw-r--r-- 1 root root 17 Mar 17 21:21 111

4.2 Adding data

[root@ceph1 ceph3]# touch 222
[root@ceph1 ceph3]# echo aaa>test
[root@ceph1 ceph3]# echo bbb>test1
[root@ceph1 ceph3]# ls
111  222  test  test1

4.3 Creating another snapshot

[root@ceph1 ceph3]# rbd snap ls testimg-copy --id rbd
SNAPID NAME  SIZE    TIMESTAMP
     4 snap1 2048 MB Sun Mar 17 22:08:12 2019
     5 snap2 2048 MB Sun Mar 17 22:15:14 2019

4.4 Deleting data and restoring it from a snapshot

[root@ceph1 ceph3]# rm -rf test    # delete data
[root@ceph1 ceph3]# rm -rf 111
[root@ceph1 ceph3]# ls
222  test1
[root@ceph1 ceph3]# rbd snap revert testimg-copy@snap2 --id rbd    # roll back to the snapshot while the filesystem is still mounted
Rolling back to snapshot: 100% complete...done.
[root@ceph1 ceph3]# ls    # the data is not back: the mounted filesystem still shows its old view
222  test1
[root@ceph1 ceph3]# cd
[root@ceph1 ~]# umount /mnt/ceph3    # unmount
[root@ceph1 ~]# mount /dev/rbd2 /mnt/ceph3    # mount fails: the image was rolled back underneath a mounted filesystem
mount: wrong fs type, bad option, bad superblock on /dev/rbd2, missing codepage or helper program, or other error
In some cases useful info is found in syslog - try dmesg | tail or so.
[root@ceph1 ~]# rbd snap revert testimg-copy@snap2 --id rbd    # roll back again, this time with the filesystem unmounted
Rolling back to snapshot: 100% complete...done.
[root@ceph1 ~]# mount /dev/rbd2 /mnt/ceph3    # mounts normally
[root@ceph1 ~]# cd /mnt/ceph3
[root@ceph1 ceph3]# ll    # the data is restored
total 12
-rw-r--r-- 1 root root 17 Mar 17 21:21 111
-rw-r--r-- 1 root root  0 Mar 17 22:13 222
-rw-r--r-- 1 root root  4 Mar 17 22:13 test
-rw-r--r-- 1 root root  4 Mar 17 22:14 test1
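Why the first rollback did not work: the mounted filesystem keeps working from its own in-memory state, and when it is unmounted it writes that state back on top of the rolled-back image. The toy model below illustrates the ordering problem. `ToyImage` and `ToyMount` are hypothetical classes and a deliberate simplification of XFS and krbd (in the real run the result was a filesystem that no longer mounted, not a clean undo), but they show why the rollback has to happen while the filesystem is unmounted.

```python
class ToyImage:
    """An RBD-like image: numbered blocks plus named snapshots (not librbd)."""

    def __init__(self, blocks: dict):
        self.blocks = dict(blocks)
        self.snaps = {}

    def snap_create(self, name: str) -> None:
        self.snaps[name] = dict(self.blocks)

    def snap_rollback(self, name: str) -> None:
        self.blocks = dict(self.snaps[name])


class ToyMount:
    """A mounted filesystem that works on its own in-memory copy of the blocks."""

    def __init__(self, image: ToyImage):
        self.image = image
        self.cache = dict(image.blocks)

    def write(self, block: int, data: str) -> None:
        self.cache[block] = data

    def umount(self) -> None:
        self.image.blocks.update(self.cache)   # cached state is written back


img = ToyImage({0: "111 222 test test1"})
img.snap_create("snap2")
fs = ToyMount(img)
fs.write(0, "222 test1")       # rm test 111
img.snap_rollback("snap2")     # rollback while still mounted
print(fs.cache[0])             # 222 test1  (the mount still shows the old view)
fs.umount()
print(img.blocks[0])           # 222 test1  (unmounting overwrote the rollback)
img.snap_rollback("snap2")     # roll back again, now unmounted
print(img.blocks[0])           # 111 222 test test1
```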

4.5 Deleting snapshots

[root@ceph1 ceph3]# rbd snap rm testimg-copy@snap1 --id rbd    # delete one snapshot
Removing snap: 100% complete...done.
[root@ceph1 ceph3]# rbd snap ls testimg-copy --id rbd
SNAPID NAME  SIZE    TIMESTAMP
     5 snap2 2048 MB Sun Mar 17 22:15:14 2019
[root@ceph1 ceph3]# rbd snap purge testimg-copy --id rbd    # remove all snapshots
Removing all snapshots: 100% complete...done.
[root@ceph1 ceph3]# rbd snap ls testimg-copy --id rbd    # no snapshots remain

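5. RBD clones

An RBD clone is a copy of an RBD image that uses an RBD snapshot as its base. The clone is copy-on-write: it keeps reading unchanged data from the parent snapshot, and only after it is flattened (rbd flatten) does it become an image completely independent of its original source.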
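A toy model of the copy-on-write behaviour described above. It is illustrative only: `ToyClone` is hypothetical and ignores object granularity and the overlap limit. A fresh clone owns no data and reads through to the parent snapshot, writes land in the clone, and flattening copies the remaining parent data so the parent link can be dropped. This dependency is also why the parent snapshot is protected while clones still need it.

```python
from typing import Dict, Optional


class ToyClone:
    """Toy copy-on-write clone of a snapshot; NOT librbd's implementation."""

    def __init__(self, parent_snapshot: Dict[int, str]):
        self.parent: Optional[Dict[int, str]] = parent_snapshot
        self.own: Dict[int, str] = {}   # blocks the clone has stored itself

    def read(self, block: int) -> Optional[str]:
        if block in self.own:
            return self.own[block]
        if self.parent is not None:
            return self.parent.get(block)  # fall through to the parent snapshot
        return None

    def write(self, block: int, data: str) -> None:
        self.own[block] = data             # the parent snapshot is never modified

    def flatten(self) -> None:
        # copy every block the clone has not overwritten, then drop the link
        if self.parent is not None:
            for block, data in self.parent.items():
                self.own.setdefault(block, data)
        self.parent = None


snapshot = {0: "111", 1: "222"}
clone = ToyClone(snapshot)
print(len(clone.own), clone.read(0))      # 0 111
clone.write(1, "changed")
print(clone.read(1), snapshot[1])         # changed 222
clone.flatten()
print(clone.parent, sorted(clone.own))    # None [0, 1]
```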

5.1 Creating an image clone

[root@ceph1 ceph3]# rbd snap create testimg-copy@for-clone --id rbd
[root@ceph1 ceph3]# rbd snap ls testimg-copy --id rbd
SNAPID NAME      SIZE    TIMESTAMP
     8 for-clone 2048 MB Sun Mar 17 22:32:56 2019
[root@ceph1 ceph3]# rbd snap protect testimg-copy@for-clone --id rbd    # protect the snapshot so it cannot be deleted while clones depend on it
[root@ceph1 ceph3]# rbd clone testimg-copy@for-clone rbd/test-clone --id rbd
[root@ceph1 ceph3]# rbd ls --id rbd
cephrbd1
test-clone
testimg
testimg-copy

5.2 Mounting the clone on the client

[root@ceph1 ceph3]# rbd info test-clone --id rbd
rbd image 'test-clone':
	size 2048 MB in 512 objects
	order 22 (4096 kB objects)
	block_name_prefix: rbd_data.fb773d1b58ba
	format: 2
	features: layering
	flags:
	create_timestamp: Sun Mar 17 22:35:12 2019
	parent: rbd/testimg-copy@for-clone
	overlap: 2048 MB
[root@ceph1 ceph3]# rbd flatten test-clone --id rbd    # flatten: copy the parent's data into the clone so it no longer depends on the snapshot
Image flatten: 100% complete...done.
[root@ceph1 ceph3]# rbd info test-clone --id rbd    # the parent line is gone
rbd image 'test-clone':
	size 2048 MB in 512 objects
	order 22 (4096 kB objects)
	block_name_prefix: rbd_data.fb773d1b58ba
	format: 2
	features: layering
	flags:
	create_timestamp: Sun Mar 17 22:35:12 2019
[root@ceph1 ceph3]# rbd map rbd/test-clone --id rbd    # map the block device
/dev/rbd3
[root@ceph1 ceph3]# cd
[root@ceph1 ~]# mount /dev/rbd3 /mnt/ceph    # fails because the source device is still mounted (the clone carries the same XFS UUID)
mount: wrong fs type, bad option, bad superblock on /dev/rbd3, missing codepage or helper program, or other error
In some cases useful info is found in syslog - try dmesg | tail or so.
[root@ceph1 ~]# umount /mnt/ceph3    # unmount the source block device
[root@ceph1 ~]# mount /dev/rbd3 /mnt/ceph    # mounted successfully
[root@ceph1 ~]# cd /mnt/ceph
[root@ceph1 ceph]# ll
total 12
-rw-r--r-- 1 root root 17 Mar 17 21:21 111
-rw-r--r-- 1 root root  0 Mar 17 22:13 222
-rw-r--r-- 1 root root  4 Mar 17 22:13 test
-rw-r--r-- 1 root root  4 Mar 17 22:14 test1
[root@ceph1 ceph]# df -hT
Filesystem     Type      Size  Used Avail Use% Mounted on
/dev/vda1      xfs        40G  1.7G   39G   5% /
devtmpfs       devtmpfs  893M     0  893M   0% /dev
tmpfs          tmpfs     920M     0  920M   0% /dev/shm
tmpfs          tmpfs     920M   17M  904M   2% /run
tmpfs          tmpfs     920M     0  920M   0% /sys/fs/cgroup
tmpfs          tmpfs     184M     0  184M   0% /run/user/0
/dev/rbd1      xfs       2.0G   33M  2.0G   2% /mnt/ceph2
/dev/rbd3      xfs       2.0G   33M  2.0G   2% /mnt/ceph

5.3 Deleting the snapshot

[root@ceph2 ceph]# rbd snap rm testimg-copy@for-clone    # fails: the snapshot is protected
Removing snap: 0% complete...failed.
rbd: snapshot 'for-clone' 2019-03-17 22:46:23.648170 7f39da26ad40 -1 librbd::Operations: snapshot is protected
is protected from removal.
[root@ceph2 ceph]# rbd snap unprotect testimg-copy@for-clone    # unprotect first; this works because the only clone (test-clone) has been flattened
[root@ceph2 ceph]# rbd snap rm testimg-copy@for-clone
Removing snap: 100% complete...done.

5.4 Cloning again

[root@ceph1 ~]# rbd snap create testimg-copy@for-clone2 --id rbd
[root@ceph1 ~]# rbd snap protect testimg-copy@for-clone2 --id rbd
[root@ceph1 ~]# rbd clone testimg-copy@for-clone2 test-clone2 --id rbd    # fails: an image named test-clone2 already exists in the pool
2019-03-17 22:56:38.296404 7f9a7bfff700 -1 librbd::image::CreateRequest: 0x7f9a680204f0 handle_create_id_object: error creating RBD id object: (17) File exists
2019-03-17 22:56:38.296440 7f9a7bfff700 -1 librbd::image::CloneRequest: error creating child: (17) File exists
rbd: clone error: (17) File exists
[root@ceph2 ceph]# rbd rm test-clone2    # remove the existing image with that name
Removing image: 100% complete...done.
[root@ceph2 ceph]# rbd clone testimg-copy@for-clone2 rbd/test-clone2 --id rbd
[root@ceph2 ceph]# rbd flatten test-clone2 --id rbd
Image flatten: 100% complete...done.
[root@ceph1 ~]# rbd map rbd/test-clone2 --id rbd
/dev/rbd4
[root@ceph1 ~]# mkdir /mnt/ceph4
[root@ceph1 ~]# umount /mnt/ceph
[root@ceph1 ~]# mount /dev/rbd4 /mnt/ceph4    # if this mount fails, check whether the source block device, or another device holding a copy of the same filesystem, is still mounted; if so, unmount it and mount this device again
[root@ceph1 ~]# cd /mnt/ceph4
[root@ceph1 ceph4]# ll
total 12
-rw-r--r-- 1 root root 17 Mar 17 21:21 111
-rw-r--r-- 1 root root  0 Mar 17 22:13 222
-rw-r--r-- 1 root root  4 Mar 17 22:13 test
-rw-r--r-- 1 root root  4 Mar 17 22:14 test1

5.5 Listing a snapshot's child images

[root@ceph2 ceph]# rbd clone testimg-copy@for-clone2 test-clone3
[root@ceph2 ceph]# rbd info test-clone3
rbd image 'test-clone3':
	size 2048 MB in 512 objects
	order 22 (4096 kB objects)
	block_name_prefix: rbd_data.fbba3d1b58ba
	format: 2
	features: layering
	flags:
	create_timestamp: Sun Mar 17 23:07:34 2019
	parent: rbd/testimg-copy@for-clone2
	overlap: 2048 MB
[root@ceph2 ceph]# rbd children testimg-copy@for-clone2    # list the snapshot's child images
rbd/test-clone3
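`rbd info` shows the dependency from the child's side (the `parent:` line, which disappeared from test-clone after `rbd flatten`), while `rbd children` shows it from the snapshot's side; test-clone2 is not listed because it was flattened. A small sketch that reads the parent from `rbd info` output as printed above; `parent_of` is a hypothetical helper.

```python
def parent_of(info_output: str):
    """Return the `parent:` value from `rbd info` output, or None if there is none."""
    for line in info_output.splitlines():
        field, sep, value = line.strip().partition(":")
        if sep and field == "parent":
            return value.strip()
    return None


clone_info = """rbd image 'test-clone3':
\tsize 2048 MB in 512 objects
\tcreate_timestamp: Sun Mar 17 23:07:34 2019
\tparent: rbd/testimg-copy@for-clone2
\toverlap: 2048 MB"""
flattened_info = """rbd image 'test-clone':
\tsize 2048 MB in 512 objects
\tfeatures: layering"""
print(parent_of(clone_info))      # rbd/testimg-copy@for-clone2
print(parent_of(flattened_info))  # None
```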

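6. Mapping and mounting at boot

After a reboot, both the mappings and the mounts are gone. The mounts can be added to /etc/fstab, but an image can only be mounted after it has been mapped, so the mapping must be restored at boot as well.

6.1 Configuring /etc/fstab

[root@ceph1 ceph]# vim /etc/fstab
/dev/rbd/rbd/testimg-copy   /mnt/ceph    xfs   defaults,_netdev   0 0
/dev/rbd/rbd/cephrbd1       /mnt/ceph2   xfs   defaults,_netdev   0 0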

6.2 Configuring rbdmap

[root@ceph1 ceph4]# cd /etc/ceph/
[root@ceph1 ceph]# ls
ceph.client.ning.keyring  ceph.client.rbd.keyring  ceph.conf  ceph.d  rbdmap
[root@ceph1 ceph]# vim rbdmap
# RbdDevice             Parameters
#poolname/imagename     id=client,keyring=/etc/ceph/ceph.client.keyring
rbd/testimg-copy        id=rbd,keyring=/etc/ceph/ceph.client.rbd.keyring
rbd/cephrbd1            id=rbd,keyring=/etc/ceph/ceph.client.rbd.keyring
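The fstab and rbdmap entries must stay in step: every image mounted from /etc/fstab has to be listed in rbdmap so that it is mapped first, and the /dev/rbd/<pool>/<image> path used in fstab is the symlink that appears once the image is mapped. A sketch that generates both sets of lines from one list so they cannot drift apart. The `RbdMount` dataclass and the helper functions are hypothetical; the keyring path and client id are the ones used in this article.

```python
from dataclasses import dataclass


@dataclass
class RbdMount:
    pool: str
    image: str
    mountpoint: str
    fstype: str = "xfs"


def rbdmap_line(m: RbdMount, client_id: str, keyring: str) -> str:
    """One /etc/ceph/rbdmap entry: <pool>/<image> <parameters>."""
    return f"{m.pool}/{m.image}\tid={client_id},keyring={keyring}"


def fstab_line(m: RbdMount) -> str:
    """One /etc/fstab entry; _netdev marks it as needing the network."""
    return f"/dev/rbd/{m.pool}/{m.image}\t{m.mountpoint}\t{m.fstype}\tdefaults,_netdev\t0 0"


KEYRING = "/etc/ceph/ceph.client.rbd.keyring"
mounts = [
    RbdMount("rbd", "testimg-copy", "/mnt/ceph"),
    RbdMount("rbd", "cephrbd1", "/mnt/ceph2"),
]
print("\n".join(rbdmap_line(m, "rbd", KEYRING) for m in mounts))
print("\n".join(fstab_line(m) for m in mounts))
```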

6.3 Starting the rbdmap service and verifying after a reboot

[root@ceph1 ceph]# systemctl start rbdmap    # start the service
[root@ceph1 ~]# systemctl enable rbdmap    # enable it at boot
Created symlink from /etc/systemd/system/multi-user.target.wants/rbdmap.service to /usr/lib/systemd/system/rbdmap.service.
[root@ceph1 ceph]# df -hT
Filesystem     Type      Size  Used Avail Use% Mounted on
/dev/vda1      xfs        40G  1.7G   39G   5% /
devtmpfs       devtmpfs  893M     0  893M   0% /dev
tmpfs          tmpfs     920M     0  920M   0% /dev/shm
tmpfs          tmpfs     920M   17M  904M   2% /run
tmpfs          tmpfs     920M     0  920M   0% /sys/fs/cgroup
tmpfs          tmpfs     184M     0  184M   0% /run/user/0
/dev/rbd0      xfs       2.0G   33M  2.0G   2% /mnt/ceph
/dev/rbd1      xfs       2.0G   33M  2.0G   2% /mnt/ceph2
[root@ceph1 ~]# reboot    # reboot
[root@ceph1 ~]# df -hT    # after the reboot, both devices are mounted as expected
Filesystem     Type      Size  Used Avail Use% Mounted on
/dev/vda1      xfs        40G  1.7G   39G   5% /
devtmpfs       devtmpfs  893M     0  893M   0% /dev
tmpfs          tmpfs     920M     0  920M   0% /dev/shm
tmpfs          tmpfs     920M   17M  904M   2% /run
tmpfs          tmpfs     920M     0  920M   0% /sys/fs/cgroup
/dev/rbd0      xfs       2.0G   33M  2.0G   2% /mnt/ceph
/dev/rbd1      xfs       2.0G   33M  2.0G   2% /mnt/ceph2
tmpfs          tmpfs     184M     0  184M   0% /run/user/0

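Verified successfully!

Author's note: the content of this article comes mainly from Yan Wei of Yutian Education (誉天教育), and the operations were carried out and verified by me in a lab. If you would like to repost it, please contact Yutian Education (http://www.yutianedu.com/) for official permission, or obtain permission from Mr. Yan himself (https://www.cnblogs.com/breezey/). Thank you!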