tensorflow6---A Simple TensorBoard Visualization Tutorial (Clear at a Glance!)

Using TensorBoard to visualize tensors (nodes) and the graph structure, and to monitor variables. TensorFlow version: 1.4.

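```python
import tensorflow as tf

input1 = tf.constant([1.0, 2.0, 3.0], name='input1')

# Ops created inside this block get the prefix 'input2/' in the graph
with tf.name_scope('input2'):
    input2 = tf.Variable(tf.random_uniform([3]), name='input2')

output = tf.add_n([input1, input2], name='add')

# Write the default graph to the log directory so TensorBoard can display it
writer = tf.summary.FileWriter("/path/to/log", tf.get_default_graph())
writer.close()
```

`name_scope` is a namespace manager that makes it easier to organize the graph into modules.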

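Create the log writer: `writer = tf.summary.FileWriter("/path/to/log", tf.get_default_graph())`

In a training script the writer is usually created inside the session, after the variables have been initialized, as a `train_writer`.

The first argument is the directory where the logs (summaries) are saved; the second is the graph to save.

`writer.close()` must be called at the end to close the writer.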

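In general, the quantities of interest are recorded as follows:

1. Record a scalar tensor: `tf.summary.scalar(a, b)`, where `a` is the name of the resulting chart and `b` is the tensor (node) to record. `b` must hold a single value, such as the loss.

2. Record a variable: `tf.summary.histogram(a, b)`, where `b` is the variable to record and `a` is its name. TensorBoard shows the distribution of the variable's values over training.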

3. Record images and audio:

   ```python
   tf.summary.audio(name, tensor, sample_rate, max_outputs=3)
   tf.summary.image(name, tensor, max_outputs=3)
   ```

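4. Record the graph structure together with run-time information:

   ```python
   # Run options: ask for a full trace of this run
   run_options = tf.RunOptions(trace_level=tf.RunOptions.FULL_TRACE)
   # Container for the metadata collected while the run executes
   run_metadata = tf.RunMetadata()
   ```

   Pass the run options and the metadata container to `sess.run`; the relevant information is then recorded while the session runs:

   ```python
   _, loss_value, step = sess.run([train_op, loss, global_step], feed_dict={x: xs, y: ys},
                                  options=run_options, run_metadata=run_metadata)
   train_writer.add_run_metadata(run_metadata, 'step%03d' % i)
   ```

   `add_run_metadata` saves the metadata of iteration `i` under the tag `'step%03d' % i`; TensorBoard's Graph tab can then show the compute time and memory of each node for that step.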

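5. The summary nodes that record tensors, variables, images and audio must finally be merged into one node that gathers the information of every node to be recorded:

   ```python
   merged_summary_op = tf.summary.merge_all()
   ```

   `merged_summary_op` is run inside the session, and information is actually recorded only once it has been run:

   ```python
   _, loss_value, step, summary = sess.run([train_op, loss, global_step, merged_summary_op],
                                           feed_dict={x: xs, y: ys})
   ```

   The summary of step `i` is then written to the log:

   ```python
   train_writer.add_summary(summary, i)
   ```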
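The pattern in steps 1 to 5 (register summaries, merge them, evaluate them only when the merged op runs, then write each result with its step number) can be illustrated with a pure-Python toy model. It is not TensorFlow: `SUMMARIES`, `scalar`, `histogram`, `merge_all` and `ToyWriter` are hypothetical stand-ins. In TF 1.x, `tf.summary.scalar` and `tf.summary.histogram` add their op to the `tf.GraphKeys.SUMMARIES` collection, and `tf.summary.merge_all()` merges that collection into one op; here "ops" are plain callables.

```python
SUMMARIES = []  # stands in for the tf.GraphKeys.SUMMARIES collection


def scalar(name, fn):
    """Register a scalar summary; fn is not called yet."""
    SUMMARIES.append((name, fn))


def histogram(name, fn):
    """Register a histogram summary; this toy keeps only min/max/count."""
    def stats():
        values = fn()
        return {"min": min(values), "max": max(values), "num": len(values)}
    SUMMARIES.append((name, stats))


def merge_all():
    """Return one callable that evaluates every registered summary at once."""
    registered = list(SUMMARIES)
    return lambda: {name: fn() for name, fn in registered}


class ToyWriter:
    """Stands in for tf.summary.FileWriter: keeps (step, summary) pairs in memory."""
    def __init__(self):
        self.events = []

    def add_summary(self, summary, step):
        self.events.append((step, summary))


state = {"loss": 2.0, "w": [0.1, -0.3, 0.2]}
scalar("loss", lambda: state["loss"])
histogram("w", lambda: state["w"])
merged_summary_op = merge_all()

train_writer = ToyWriter()
for i in range(1, 4):
    state["loss"] /= 2             # pretend one training step lowers the loss
    summary = merged_summary_op()  # values are read only when the merged op "runs"
    train_writer.add_summary(summary, i)

print(train_writer.events[-1])
```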

The example below adds visualization to the neural network example from the earlier post *tensorflow Code Walkthrough 2*.

```python
# The three parts of a deep neural network: inference.py, train.py, evaluate.py
# Focus: how trained weights are saved, how the computation graph is loaded,
# and how the trained weights are restored

# mnist_inference.py
import tensorflow as tf

INPUT_NODE = 784
OUTPUT_NODE = 10
LAYER1_NODE = 500


def get_weight_variable(shape, regularizer):
    weights = tf.get_variable('weights', shape,
                              initializer=tf.truncated_normal_initializer(stddev=0.1))
    # Add the regularization term of these weights to the 'losses' collection
    if regularizer is not None:
        tf.add_to_collection('losses', regularizer(weights))
    return weights


def inference(input_tensor, regularizer):
    # Hidden layer: ReLU(x * W1 + b1)
    with tf.variable_scope('layer1', reuse=tf.AUTO_REUSE):
        weights = get_weight_variable([INPUT_NODE, LAYER1_NODE], regularizer)
        biases = tf.get_variable('biases', [LAYER1_NODE],
                                 initializer=tf.constant_initializer(0.0))
        layer1 = tf.nn.relu(tf.matmul(input_tensor, weights) + biases)
    # Output layer: logits = layer1 * W2 + b2 (softmax is applied inside the loss)
    with tf.variable_scope('layer2', reuse=tf.AUTO_REUSE):
        weights = get_weight_variable([LAYER1_NODE, OUTPUT_NODE], regularizer)
        biases = tf.get_variable('biases', [OUTPUT_NODE],
                                 initializer=tf.constant_initializer(0.0))
        layer2 = tf.matmul(layer1, weights) + biases
    return layer2
```
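`get_weight_variable` adds `regularizer(weights)` to the `'losses'` collection, and the training script adds everything in that collection to the cross-entropy. `tf.contrib.layers.l2_regularizer(scale)` computes `scale * tf.nn.l2_loss(w)`, i.e. `scale * sum(w**2) / 2`. A pure-Python sketch of that bookkeeping, where the list `losses` and both helpers are hypothetical stand-ins for the TF collection and functions, and the cross-entropy value is made up:

```python
def l2_regularizer(scale):
    """Mimics tf.contrib.layers.l2_regularizer: scale * sum(w ** 2) / 2."""
    def regularize(weights):
        return scale * sum(w * w for row in weights for w in row) / 2.0
    return regularize


losses = []  # stands in for the 'losses' collection


def get_weight_variable(weights, regularizer):
    """Like the TF version, but takes ready-made weights (a list of rows)."""
    if regularizer is not None:
        losses.append(regularizer(weights))
    return weights


reg = l2_regularizer(0.5)
get_weight_variable([[1.0, 2.0], [3.0, 0.0]], reg)  # 0.5 * (1 + 4 + 9) / 2 = 3.5
get_weight_variable([[2.0]], reg)                   # 0.5 * 4 / 2 = 1.0

cross_entropy_mean = 0.25                # made-up value for illustration
loss = cross_entropy_mean + sum(losses)  # mirrors cross_entropy_mean + tf.add_n(...)
print(loss)
```

In the real script the scale is `REGULARIZTION_RATE = 0.0001`, so the regularization term is small next to the cross-entropy.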

import os

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

#import mnist_inference

#配置神经网络参数

LAYER1_NODE=500

BATCH_SIZE=100

LEARNING_RATE_BASE=0.8

LEARNING_RATE_DECAY=0.99

REGULARIZTION_RATE=0.0001

TRAINING_STEPS=3000

MOVING_AVERAGE_DECAY=0.99

#模型保存的路径和文件名

MODEL_SAVE_PATH='/path/to/'

MODEL_NAME='model.ckpt'

def train(mnist):

with tf.name_scope('input'):

x=tf.placeholder(tf.float32,[None,INPUT_NODE],name='x-input')

y_=tf.placeholder(tf.float32,[None,OUTPUT_NODE],name='y-input')

regularizer=tf.contrib.layers.l2_regularizer(REGULARIZTION_RATE)

#y=mnist_inference.inference(x,regularizer)

y=inference(x,regularizer)

global_step=tf.Variable(0,trainable=False)

with tf.name_scope('moving_averages'):

variable_averages=tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY,global_step)

variable_averages_op=variable_averages.apply(tf.trainable_variables())

with tf.name_scope('loss_function'):

cross_entropy=tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y,labels=tf.argmax(y_,1))

cross_entropy_mean=tf.reduce_mean(cross_entropy)

loss=cross_entropy_mean+tf.add_n(tf.get_collection('losses'))

with tf.name_scope('train_step'):

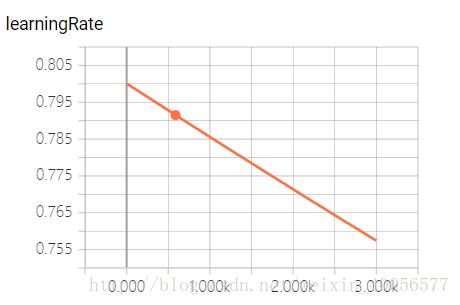

learning_rate=tf.train.exponential_decay(LEARNING_RATE_BASE,global_step,mnist.train.num_examples/BATCH_SIZE,LEARNING_RATE_DECAY)

train_step=tf.train.GradientDescentOptimizer(learning_rate).minimize(loss,global_step=global_step)

train_op=tf.group(train_step,variable_averages_op)

#初始化tensorflow持久类

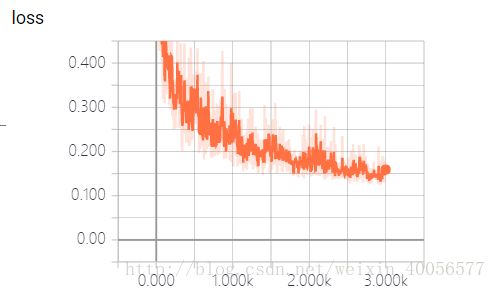

tf.summary.scalar("loss",loss)

tf.summary.scalar("learningRate",learning_rate)

for var in tf.trainable_variables():

tf.summary.histogram(var.name, var)

# Merge all summaries into a single op

merged_summary_op = tf.summary.merge_all()

saver=tf.train.Saver()

with tf.Session() as sess:

tf.global_variables_initializer().run()

train_writer = tf.summary.FileWriter("/path/to/log",sess.graph)

for i in range(1,TRAINING_STEPS+1):

xs,ys=mnist.train.next_batch(BATCH_SIZE)

#记录每一个节点的信息

if i % 1000 ==0:

saver.save(sess,os.path.join(MODEL_SAVE_PATH,MODEL_NAME),global_step=global_step)

run_options=tf.RunOptions(trace_level=tf.RunOptions.FULL_TRACE)

run_metadata=tf.RunMetadata()

_,loss_value,step=sess.run([train_op,loss,global_step],feed_dict={x:xs,y_:ys},

options=run_options,run_metadata=run_metadata)

train_writer.add_run_metadata(run_metadata,'step%03d' % i)

print('After %d training step(s), loss on training batch is %g.' % (step, loss_value))

else:

_,loss_value,step,summary=sess.run([train_op,loss,global_step,merged_summary_op],feed_dict={x:xs,y_:ys})

train_writer.add_summary(summary, i)

train_writer.close()

def main(argv=None):

mnist=input_data.read_data_sets('/tmp/data',one_hot=True)

train(mnist)

if __name__=='__main__':

tf.app.run()
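The training loop writes different records depending on the step. A pure-Python sketch of that schedule (the function `logging_plan` is hypothetical and only mirrors the `if i % 1000 == 0` branch above) makes it easy to check which steps produce which records:

```python
def logging_plan(training_steps, trace_every=1000):
    """Map each loop step i (1-based) to what the training loop above records."""
    plan = {}
    for i in range(1, training_steps + 1):
        if i % trace_every == 0:
            # full-trace run metadata + checkpoint; merged_summary_op is not run here
            plan[i] = ("trace", "step%03d" % i)
        else:
            # merged summaries written with add_summary(summary, i)
            plan[i] = ("summary", i)
    return plan


plan = logging_plan(3000)
trace_steps = [i for i, (kind, _) in plan.items() if kind == "trace"]
print(trace_steps, plan[1000], plan[999])
```

One consequence: the `loss` and `learningRate` curves have no points at steps 1000, 2000 and 3000, while the Graph tab should list three session runs (`step1000`, `step2000`, `step3000`). The `%03d` format only pads to three digits, so a tag for step 5 would read `step005`.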

**Finally, on Windows, press Win+R to open a cmd window and run `tensorboard --logdir="D:/path/to/log"`, then open http://localhost:6006 in the browser to see the results.**
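Once TensorBoard is open, the `learningRate` chart in the Scalars tab should follow the formula documented for `tf.train.exponential_decay`, `learning_rate * decay_rate ** (global_step / decay_steps)`, with the non-staircase default used in `mnist_train.py`. A plain-Python sketch for computing the expected values, assuming the default 55,000-example MNIST training split returned by `input_data.read_data_sets` (so 550 decay steps); the function below is a re-implementation, not the TF op:

```python
import math


def exponential_decay(learning_rate, global_step, decay_steps, decay_rate, staircase=False):
    """learning_rate * decay_rate ** (global_step / decay_steps);
    staircase=True floors the exponent so the rate drops in discrete jumps."""
    exponent = global_step / decay_steps
    if staircase:
        exponent = math.floor(exponent)
    return learning_rate * decay_rate ** exponent


# Values from mnist_train.py: 55000 training examples / BATCH_SIZE 100 = 550 decay steps
DECAY_STEPS = 55000 / 100
for step in (0, 550, 1000, 3000):
    print(step, round(exponential_decay(0.8, step, DECAY_STEPS, 0.99), 5))
```

With these settings the rate only falls from 0.8 to roughly 0.757 over 3000 steps, so the `learningRate` curve should look like a gentle, smooth slope.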