Filebeat log collection in practice

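In the ELK architecture, Beats take over from Logstash as the component that faces the log sources and does only one job, collecting, while Logstash sits in the middle and handles filtering and parsing.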

Filebeat 6.6.1 tar.gz package

Prerequisite: ELK is already deployed, with Elasticsearch, Kibana, and Logstash all installed from 6.6.1 tar.gz packages.

- Download

Download it directly from the official site:

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.6.1-linux-x86_64.tar.gz
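Before extracting, it is worth checking the archive's integrity. Below is a minimal Python sketch. It assumes the checksum file has been downloaded next to the archive; Elastic publishes one at the same URL with a `.sha512` suffix, in `sha512sum` format (hex digest, then the file name). The function name `verify_sha512` is hypothetical.

```python
import hashlib
from pathlib import Path


def verify_sha512(archive: str, checksum_file: str) -> bool:
    """Return True if the archive's SHA-512 digest matches the checksum file.

    The checksum file is assumed to be in sha512sum format:
    "<hex digest>  <file name>" on a single line.
    """
    expected = Path(checksum_file).read_text().split()[0].lower()
    digest = hashlib.sha512()
    with open(archive, "rb") as f:
        # Hash in 1 MiB chunks so the whole archive is never held in memory.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected
```

For example, `verify_sha512("filebeat-6.6.1-linux-x86_64.tar.gz", "filebeat-6.6.1-linux-x86_64.tar.gz.sha512")` should return True for an intact download.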

- Configure

Extract the archive and copy it to /usr/local/filebeat. The directory holds Filebeat's configuration files, its log directory, and so on:

/usr/local/filebeat$ ls -l

total 36476

drwxr-x--- 2 root root 4096 Jul 17 04:10 data

-rw-r--r-- 1 root root 131266 Jul 17 02:51 fields.yml

-rwxr-xr-x 1 root root 36927942 Jul 17 02:51 filebeat

-rw-r--r-- 1 root root 69578 Jul 17 02:51 filebeat.reference.yml

-rw------- 1 root root 7717 Jul 17 03:08 filebeat.yml

drwxr-xr-x 4 root root 4096 Jul 17 02:51 kibana

-rw-r--r-- 1 root root 13675 Jul 17 02:51 LICENSE.txt

drwx------ 2 root root 4096 Jul 17 03:17 logs

drwxr-xr-x 20 root root 4096 Jul 17 02:51 module

drwxr-xr-x 2 root root 4096 Jul 17 02:51 modules.d

-rw-r--r-- 1 root root 163067 Jul 17 02:51 NOTICE.txt

-rw-r--r-- 1 root root 802 Jul 17 02:51 README.md

Edit filebeat.yml:

filebeat.inputs:
- type: log

  # Change to true to enable this input configuration.
  enabled: true    # changed to true

  # Paths that should be crawled and fetched. Glob based paths.
  paths:
    - /var/log/auth.log    # path of the log file to collect

#-------------------------- Elasticsearch output ------------------------------
#output.elasticsearch:    # commented out so nothing is sent straight to Elasticsearch (comment out its hosts line too)

#----------------------------- Logstash output -------------------------------
output.logstash:    # enabled: ship events to Logstash
  # The Logstash hosts
  hosts: ["10.x.x.5:5044"]
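Filebeat accepts only one enabled output. If `output.elasticsearch:` is left uncommented next to `output.logstash:`, Filebeat refuses to start. Below is a minimal Python sketch that lists the uncommented top-level `output.*` keys in filebeat.yml. It assumes outputs are written in block form (`output.<name>:` at column 0, as in the default file). It is a plain text scan, not a YAML parser, and `enabled_outputs` is a hypothetical helper.

```python
import re

# Matches an uncommented top-level key such as "output.logstash:" at column 0.
OUTPUT_KEY = re.compile(r"^output\.([A-Za-z_]+)\s*:")


def enabled_outputs(config_text: str) -> list:
    """Return the names of top-level output.<name>: keys that are not commented out."""
    names = []
    for line in config_text.splitlines():
        match = OUTPUT_KEY.match(line)
        if match:
            names.append(match.group(1))
    return names
```

Reading /usr/local/filebeat/filebeat.yml and passing its text in should yield a single name, `logstash`, for the configuration above. Any list longer than one means a second output still needs commenting out.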

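Once configured, start the Logstash service first and only then run Filebeat. Otherwise Filebeat reports connection errors and does not work properly.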
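One way to enforce this order in a start script is to poll the Beats port until it accepts TCP connections. Below is a minimal Python sketch using only the standard `socket` module. The host, port, and timeout values are assumptions taken from this setup, and `wait_for_port` is a hypothetical helper.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError (e.g. ConnectionRefusedError) while nothing listens.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

A launcher could call `wait_for_port("10.x.x.5", 5044)` and start Filebeat only when it returns True.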

Logstash 6.6.1 tar.gz package

- Download

https://www.elastic.co/downloads/past-releases/logstash-6-6-1

- Configure

After downloading, extract the archive and copy it to /usr/local/logstash/. Then, in /usr/local/logstash/config/, copy logstash-sample.conf to a new configuration file named logstash.conf.

Configure /usr/local/logstash/config/logstash.conf:

input {
  beats {
    port => 5044    # port Filebeat ships to by default; can be changed
  }
}

output {
  elasticsearch {
    hosts => ["http://10.x.x.5:9200"]
    index => "backup-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    #user => "elastic"
    #password => "changeme"
  }
}
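The `index` setting is a Logstash sprintf pattern with two placeholders:

- `%{[@metadata][version]}` is filled from the metadata the beats input attaches to each event, which is the Beat's version (6.6.1 here).
- `%{+YYYY.MM.dd}` formats the event's `@timestamp` in UTC.

Below is a minimal Python sketch that mimics this expansion to predict the daily index name, for example when setting up the Kibana index pattern. It reimplements only these two placeholders and is not Logstash's own code. `expected_index` is hypothetical.

```python
from datetime import datetime, timezone


def expected_index(beat_version: str, timestamp: datetime, prefix: str = "backup") -> str:
    """Mimic "backup-%{[@metadata][version]}-%{+YYYY.MM.dd}" for one event.

    `timestamp` should be timezone-aware; a naive datetime is treated as local time.
    """
    # Logstash formats %{+...} from @timestamp in UTC, so convert before formatting.
    day = timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    return f"{prefix}-{beat_version}-{day}"
```

For instance, `expected_index("6.6.1", datetime(2019, 7, 17, 3, 40, tzinfo=timezone.utc))` gives `backup-6.6.1-2019.07.17`. Because the date is taken in UTC, events logged shortly after local midnight in a UTC+8 zone still land in the previous day's index.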

Configure /usr/local/logstash/config/logstash.yml:

# Change the following settings; use the same IP as in the Elasticsearch configuration
http.host: "10.x.x.5"
http.port: 9600-9700
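With `http.port: 9600-9700`, Logstash binds its monitoring API to the first free port in that range; the startup log below reports 9600. Below is a minimal Python sketch that probes the range and reads the `version` field from the API's root endpoint (`GET /`). It assumes the host is reachable and nothing requires authentication in front of the API. `find_logstash_api` is hypothetical.

```python
import http.client
import json
import urllib.request


def find_logstash_api(host: str, ports=range(9600, 9701), timeout: float = 2.0):
    """Return (port, version) for the first port whose root endpoint answers
    with a JSON object containing "version", or None if no port does."""
    for port in ports:
        url = f"http://{host}:{port}/"
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                info = json.loads(resp.read().decode("utf-8"))
        except (OSError, ValueError, http.client.HTTPException):
            # Refused, timed out, HTTP error, non-HTTP service, or non-JSON reply: try the next port.
            continue
        if isinstance(info, dict) and "version" in info:
            return port, info["version"]
    return None
```

For the run shown below, probing 10.x.x.5 should report port 9600 and version 6.6.1. On a host that silently drops packets, each closed port can cost up to `timeout` seconds, so narrow `ports` if the probe is slow.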

- Run Logstash

/usr/local/logstash/bin/logstash -f /usr/local/logstash/config/logstash.conf

Sending Logstash logs to /usr/local/logstash/logs which is now configured via log4j2.properties

[2019-07-16T23:39:15,460][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2019-07-16T23:39:15,485][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.1"}

[2019-07-16T23:39:24,435][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2019-07-16T23:39:25,277][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://10.x.x.5:9200/]}}

[2019-07-16T23:39:25,556][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://10.26.80.50:9200/"}

[2019-07-16T23:39:25,693][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>6}

[2019-07-16T23:39:25,697][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>6}

[2019-07-16T23:39:25,753][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["http://10.x.x.5:9200"]}

[2019-07-16T23:39:25,786][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2019-07-16T23:39:25,824][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[2019-07-16T23:39:25,955][INFO ][logstash.outputs.elasticsearch] Installing elasticsearch template to _template/logstash

[2019-07-16T23:39:26,486][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}

[2019-07-16T23:39:26,586][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#" }

[2019-07-16T23:39:26,760][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2019-07-16T23:39:26,794][INFO ][org.logstash.beats.Server] Starting server on port: 5044

[2019-07-16T23:39:27,237][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
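Three lines in this output show that Logstash is ready for Filebeat:

- `Starting server on port: 5044` (the beats input is listening)
- `Pipelines running`
- `Successfully started Logstash API endpoint`

Below is a minimal Python sketch that checks captured startup output for these markers. It assumes the output has been saved or read from the log directory; `logstash-plain.log` under /usr/local/logstash/logs is the assumed default file name. The marker list and `missing_ready_markers` are illustrative choices, not an official health check.

```python
# Substrings copied from the Logstash 6.6.1 startup output shown above.
READY_MARKERS = (
    "Starting server on port: 5044",
    "Pipelines running",
    "Successfully started Logstash API endpoint",
)


def missing_ready_markers(log_text: str, markers=READY_MARKERS) -> list:
    """Return the markers not yet present in the log text; an empty list means ready."""
    return [marker for marker in markers if marker not in log_text]
```

An empty result means all three markers have appeared. Anything left in the list is the stage that has not finished yet.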

- Run Filebeat

/usr/local/filebeat/filebeat -e -c /usr/local/filebeat/filebeat.yml &

2019-07-17T03:39:57.162Z INFO instance/beat.go:616 Home path: [/usr/local/filebeat] Config path: [/usr/local/filebeat] Data path: [/usr/local/filebeat/data] Logs path: [/usr/local/filebeat/logs]

2019-07-17T03:39:57.163Z INFO instance/beat.go:623 Beat UUID: 8450b74d-7276-4a06-a733-5c5e7864d70e

2019-07-17T03:39:57.164Z INFO [seccomp] seccomp/seccomp.go:116 Syscall filter successfully installed

2019-07-17T03:39:57.164Z INFO [beat] instance/beat.go:936 Beat info {"system_info": {"beat": {"path": {"config": "/usr/local/filebeat", "data": "/usr/local/filebeat/data", "home": "/usr/local/filebeat", "logs": "/usr/local/filebeat/logs"}, "type": "filebeat", "uuid": "8450b74d-7276-4a06-a733-5c5e7864d70e"}}}

2019-07-17T03:39:57.165Z INFO [beat] instance/beat.go:945 Build info {"system_info": {"build": {"commit": "928f5e3f35fe28c1bd73513ff1cc89406eb212a6", "libbeat": "6.6.1", "time": "2019-02-13T16:12:26.000Z", "version": "6.6.1"}}}

2019-07-17T03:39:57.165Z INFO [beat] instance/beat.go:948 Go runtime info {"system_info": {"go": {"os":"linux","arch":"amd64","max_procs":2,"version":"go1.10.8"}}}

2019-07-17T03:39:57.167Z INFO [beat] instance/beat.go:952 Host info {"system_info": {"host": {"architecture":"x86_64","boot_time":"2019-07-08T02:11:17Z","containerized":false,"name":"backup","ip":["127.0.0.1/8","::1/128","10.x.x.x/24","fe80::20c:29ff:fea0:268a/64","172.17.0.1/16"],"kernel_version":"4.15.0-54-generic","mac":["00:0c:29:a0:26:8a","02:42:05:9d:0f:03"],"os":{"family":"debian","platform":"ubuntu","name":"Ubuntu","version":"18.04 LTS (Bionic Beaver)","major":18,"minor":4,"patch":0,"codename":"bionic"},"timezone":"UTC","timezone_offset_sec":0,"id":"d197b8797f2e4c05a7ba292f5a0694a2"}}}

2019-07-17T03:39:57.168Z INFO [beat] instance/beat.go:981 Process info {"system_info": {"process": {"capabilities": {"inheritable":null,"permitted":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend","audit_read"],"effective":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend","audit_read"],"bounding":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend","audit_read"],"ambient":null}, "cwd": "/usr/local/filebeat", "exe": "/usr/local/filebeat/filebeat", "name": "filebeat", "pid": 28135, "ppid": 27695, "seccomp": {"mode":"filter","no_new_privs":true}, "start_time": "2019-07-17T03:39:56.700Z"}}}

2019-07-17T03:39:57.169Z INFO instance/beat.go:281 Setup Beat: filebeat; Version: 6.6.1

2019-07-17T03:40:00.171Z INFO add_cloud_metadata/add_cloud_metadata.go:319 add_cloud_metadata: hosting provider type not detected.

2019-07-17T03:40:00.172Z INFO [publisher] pipeline/module.go:110 Beat name: backup

2019-07-17T03:40:00.173Z INFO instance/beat.go:403 filebeat start running.

2019-07-17T03:40:00.173Z INFO registrar/registrar.go:134 Loading registrar data from /usr/local/filebeat/data/registry

2019-07-17T03:40:00.173Z INFO [monitoring] log/log.go:117 Starting metrics logging every 30s

2019-07-17T03:40:00.173Z INFO registrar/registrar.go:141 States Loaded from registrar: 1

2019-07-17T03:40:00.174Z WARN beater/filebeat.go:367 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.

2019-07-17T03:40:00.175Z INFO crawler/crawler.go:72 Loading Inputs: 1

2019-07-17T03:40:00.176Z INFO log/input.go:138 Configured paths: [/var/log/auth.log]

2019-07-17T03:40:00.176Z INFO input/input.go:114 Starting input of type: log; ID: 14014765733417081504

2019-07-17T03:40:00.177Z INFO crawler/crawler.go:106 Loading and starting Inputs completed. Enabled inputs: 1

2019-07-17T03:40:00.178Z INFO cfgfile/reload.go:150 Config reloader started

2019-07-17T03:40:00.178Z INFO cfgfile/reload.go:205 Loading of config files completed.

2019-07-17T03:40:00.178Z INFO log/harvester.go:255 Harvester started for file: /var/log/auth.log

2019-07-17T03:40:01.180Z INFO pipeline/output.go:95 Connecting to backoff(async(tcp://10.x.x.5:5044))

2019-07-17T03:40:01.195Z INFO pipeline/output.go:105 Connection to backoff(async(tcp://10.x.x.5:5044)) established
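The log shows Filebeat loading its state from /usr/local/filebeat/data/registry. For the 6.x releases this registry is a single JSON array with one entry per harvested file. Each entry includes `source` (the file path) and `offset` (bytes already read); treat that layout as an assumption, since later major versions changed it. Below is a minimal Python sketch that reports how far Filebeat has read each file. `registry_offsets` is hypothetical.

```python
import json


def registry_offsets(registry_path: str) -> dict:
    """Map each harvested file path to the byte offset Filebeat has recorded.

    Assumes the Filebeat 6.x registry layout: a JSON array of state objects
    that carry at least "source" and "offset".
    """
    with open(registry_path, encoding="utf-8") as f:
        states = json.load(f)
    return {state["source"]: state["offset"] for state in states if "source" in state}
```

Comparing the offset recorded for /var/log/auth.log with `os.path.getsize("/var/log/auth.log")` gives a rough idea of whether Filebeat has caught up with the file.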

- Check the services

On the Filebeat host:

netstat -antlp |grep 5044

tcp 0 0 10.x.x.x:57192 10.x.x.5:5044 ESTABLISHED 28135/./filebeat

On the Logstash host:

netstat -antlp |grep 5044

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

tcp6 0 0 :::5044 :::* LISTEN -

tcp6 0 0 10.x.x.5:5044 10.x.x.x:57192 ESTABLISHED -

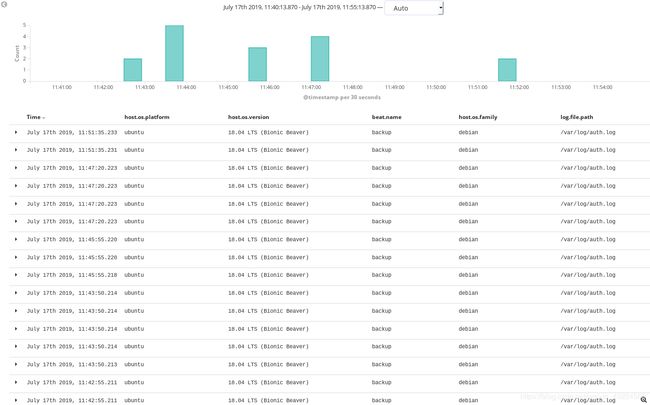
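On both hosts, the check comes down to finding an ESTABLISHED TCP entry whose local or foreign address ends in `:5044`. Below is a minimal Python sketch that parses `netstat -antlp` output like the lines shown above. It assumes the usual Linux net-tools column order: Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State, PID/Program name. `beats_connections` is hypothetical.

```python
def beats_connections(netstat_output: str, port: int = 5044) -> list:
    """Return (local, foreign) address pairs of ESTABLISHED TCP connections on `port`."""
    suffix = f":{port}"
    pairs = []
    for line in netstat_output.splitlines():
        fields = line.split()
        # Data rows look like: proto recv-q send-q local foreign state [pid/program]
        if len(fields) < 6 or not fields[0].startswith("tcp"):
            continue
        local, foreign, state = fields[3], fields[4], fields[5]
        if state == "ESTABLISHED" and (local.endswith(suffix) or foreign.endswith(suffix)):
            pairs.append((local, foreign))
    return pairs
```

The input can come from `subprocess.run(["netstat", "-antlp"], capture_output=True, text=True).stdout`. Header lines and the "Not all processes could be identified" notice are skipped, because they do not start with `tcp`.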

- Viewing in Kibana