import pandas as pd

import numpy as np

import matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

import os

from tqdm import tqdm

import re

import inspect

import tensorflow as tf

from tensorflow import keras

from sklearn.model_selection import train_test_split

from nltk.corpus import stopwords

import nltk

import datetime

import transformers

from transformers import BertConfig,TFBertPreTrainedModel,BertTokenizer,TFBertMainLayer,TFBertModel

print("tf_version_ : ",tf.__version__)

print("transformers:",transformers.__version__)

tf_version_ : 2.0.0

transformers: 2.5.1

MAX_LENGTH = 36

BATCH_SIZE = 16

path_home = r"/home/lowry/pro/kaggle_tweets/kaggle_tweets_emotion"

path_data = os.path.join(path_home,"data")

data_train = pd.read_csv(os.path.join(path_data,"train.csv"),encoding="utf-8")

data_test = pd.read_csv(os.path.join(path_data,"test.csv"),encoding="utf-8")

data_submit = pd.read_csv(os.path.join(path_data,"sample_submission.csv"),encoding="utf-8")

stopwords_english = stopwords.words("english")

def cleanword(s):

s = s.lower()

temp = re.findall("http\S*",s)

for deletStr in temp:

if deletStr != "":

s = s.replace(deletStr," ")

temp = re.findall("@\S*",s)

for deletStr in temp:

if deletStr != "":

s = s.replace(deletStr," ")

temp = re.findall("\d*",s)

for deletStr in temp:

if deletStr != "":

s = s.replace(deletStr," ")

temp = re.findall("\x89\S*",s)

for deletStr in temp:

if deletStr != "":

s = s.replace(deletStr[:5]," ")

s = s.replace("\n"," ")

s = s.replace(","," ")

s = s.replace("?"," ")

s = s.replace("..."," ")

s = s.replace("."," ")

s = s.replace("["," ")

s = s.replace("]"," ")

s = s.replace("!"," ")

s = s.replace(":"," ")

s = s.replace("-"," ")

s = s.replace("#"," ")

s = s.replace("|"," ")

s = s.replace("("," ")

s = s.replace(")"," ")

s = s.replace(";"," ")

s = s.replace("="," ")

s = s.replace(">"," ")

s = s.replace("<"," ")

s = s.replace("/"," ")

s_new = ""

word = ""

for i in range(len(s)):

if s[i] != " " :

word += s[i]

else:

if word != "":

s_new = s_new + " " + word

word = ""

if word != "":

s_new += word

s_new = s_new.strip()

return s_new

data_test['text'] = data_test['text'].apply(cleanword)

data_train['text'] = data_train['text'].apply(cleanword)

path_bert = "/home/lowry/pro/model/bert_model_h5/bert-base-uncased"

tokenizer = BertTokenizer.from_pretrained(f"{path_bert}/vocab.txt")

def bert_encode(texts,tokenizer,max_length = MAX_LENGTH):

input_ids = []

input_masks = []

input_segment = []

for text in tqdm(texts):

inputs = tokenizer.encode_plus(

text,

add_special_tokens = True,

max_length = max_length

)

input_ids_temp = inputs["input_ids"]

input_masks_temp = inputs["attention_mask"]

input_segment_temp = [0] * max_length

padding_length = max_length - len(input_ids_temp)

input_ids_temp += [0]*padding_length

input_masks_temp += [0]*padding_length

input_ids.append(input_ids_temp)

input_masks.append(input_masks_temp)

input_segment.append(input_segment_temp)

return [

np.array(input_ids,dtype=np.int32),

np.array(input_masks,dtype=np.int32),

np.array(input_segment,dtype=np.int32)

]

train_input = bert_encode(data_train.text.values,tokenizer,MAX_LENGTH)

test_input = bert_encode(data_test.text.values,tokenizer,MAX_LENGTH)

train_label = np.array(data_train['target'].tolist(),dtype=np.int32)

word_len = data_train.text.apply(lambda x : len(tokenizer.encode(x)))

print("word_len_percent:",np.percentile(word_len.tolist(),99))

Calling BertTokenizer.from_pretrained() with the path to a single file or url is deprecated

100%|██████████| 7613/7613 [00:02<00:00, 3689.10it/s]

100%|██████████| 3263/3263 [00:00<00:00, 3741.57it/s]

word_len_percent: 36.0

"""

bert return:

1:last_hidden_state : shape = (batch_size, sequence_length, hidden_size)):

2:pooler_output : shape = (batch_size, hidden_size)):

3:hidden_states : shape = (1+12) * (batch_size, sequence_length, hidden_size))

ps:1+12 = 1 embeddings + 12 layer

"""

class TweetBERT(tf.keras.Model):

def __init__(self):

super(TweetBERT,self).__init__()

config = BertConfig.from_pretrained(f"{path_bert}/config.json",output_hidden_states=True)

self.hidden_size = config.hidden_size

self.bert_model = TFBertModel.from_pretrained(f"{path_bert}/tf_model.h5", config=config)

self.concat = tf.keras.layers.Concatenate(axis=2)

self.avgpool = tf.keras.layers.GlobalAveragePooling1D()

self.dropout = tf.keras.layers.Dropout(0.15)

self.output_ = tf.keras.layers.Dense(1,activation="sigmoid")

def call(self,inputs):

input_id, input_mask,input_segment = inputs

sequence_output, pooler_output, hidden_states = self.bert_model(input_id,attention_mask=input_mask,token_type_ids=input_segment)

h12 = tf.reshape(hidden_states[-1][:,0],(-1,1,self.hidden_size))

h11 = tf.reshape(hidden_states[-2][:,0],(-1,1,self.hidden_size))

h10 = tf.reshape(hidden_states[-3][:,0],(-1,1,self.hidden_size))

h09 = tf.reshape(hidden_states[-4][:,0],(-1,1,self.hidden_size))

concat_hidden = self.concat(([h12,h11,h10,h09]))

x = self.avgpool(concat_hidden)

x = self.dropout(x)

x = self.output_(x)

return x

model = TweetBERT()

optimizer = keras.optimizers.Adam(learning_rate=1e-5)

loss = "binary_crossentropy"

model.compile(loss=loss,optimizer=optimizer,metrics=["accuracy"])

path_save_model = "/home/lowry/pro/kaggle_tweets/kaggle_tweets_emotion/model/" + "bert-base" + '/'

if not os.path.exists(path_save_model):

os.mkdir(path_save_model)

path_save_model += "saveModelWeightCheckpoint"

checkpoint = keras.callbacks.ModelCheckpoint(

filepath = path_save_model,

monitor = "val_accuracy",

mode = "max",

verbose = 1,

save_best_only = True,

save_weight_only = True,

)

history = model.fit(

train_input,

train_label,

epochs=3,

batch_size=BATCH_SIZE,

validation_split=0.2,

callbacks = [checkpoint],

)

WARNING:tensorflow:Entity > could not be transformed and will be executed as-is. Please report this to the AutoGraph team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output. Cause: Failed to parse source code of >, which Python reported as:

def call(self,inputs):

input_id, input_mask,input_segment = inputs

sequence_output, pooler_output, hidden_states = self.bert_model(input_id,attention_mask=input_mask,token_type_ids=input_segment)

h12 = tf.reshape(hidden_states[-1][:,0],(-1,1,self.hidden_size))

h11 = tf.reshape(hidden_states[-2][:,0],(-1,1,self.hidden_size))

h10 = tf.reshape(hidden_states[-3][:,0],(-1,1,self.hidden_size))

h09 = tf.reshape(hidden_states[-4][:,0],(-1,1,self.hidden_size))

concat_hidden = self.concat(([h12,h11,h10,h09]))

x = self.avgpool(concat_hidden)

# x = sequence_output[:,0,:]

x = self.dropout(x)

x = self.output_(x)

return x

This may be caused by multiline strings or comments not indented at the same level as the code.

WARNING: Entity > could not be transformed and will be executed as-is. Please report this to the AutoGraph team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output. Cause: Failed to parse source code of >, which Python reported as:

def call(self,inputs):

input_id, input_mask,input_segment = inputs

sequence_output, pooler_output, hidden_states = self.bert_model(input_id,attention_mask=input_mask,token_type_ids=input_segment)

h12 = tf.reshape(hidden_states[-1][:,0],(-1,1,self.hidden_size))

h11 = tf.reshape(hidden_states[-2][:,0],(-1,1,self.hidden_size))

h10 = tf.reshape(hidden_states[-3][:,0],(-1,1,self.hidden_size))

h09 = tf.reshape(hidden_states[-4][:,0],(-1,1,self.hidden_size))

concat_hidden = self.concat(([h12,h11,h10,h09]))

x = self.avgpool(concat_hidden)

# x = sequence_output[:,0,:]

x = self.dropout(x)

x = self.output_(x)

return x

This may be caused by multiline strings or comments not indented at the same level as the code.

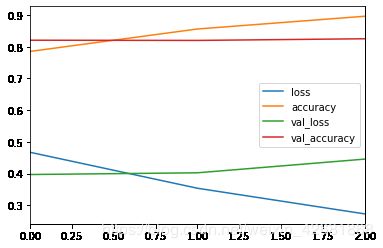

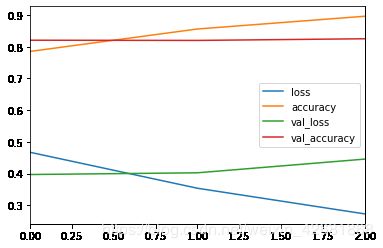

Train on 6090 samples, validate on 1523 samples

Epoch 1/3

WARNING:tensorflow:Gradients do not exist for variables ['tf_bert_model_7/bert/pooler/dense/kernel:0', 'tf_bert_model_7/bert/pooler/dense/bias:0'] when minimizing the loss.

WARNING:tensorflow:Gradients do not exist for variables ['tf_bert_model_7/bert/pooler/dense/kernel:0', 'tf_bert_model_7/bert/pooler/dense/bias:0'] when minimizing the loss.

6080/6090 [============================>.] - ETA: 0s - loss: 0.4599 - accuracy: 0.7929

Epoch 00001: val_accuracy improved from -inf to 0.82928, saving model to /home/lowry/pro/kaggle_tweets/kaggle_tweets_emotion/model/bert-base/saveModelWeightCheckpoint

6090/6090 [==============================] - 70s 12ms/sample - loss: 0.4602 - accuracy: 0.7928 - val_loss: 0.3834 - val_accuracy: 0.8293

Epoch 2/3

6080/6090 [============================>.] - ETA: 0s - loss: 0.3485 - accuracy: 0.8546

Epoch 00002: val_accuracy did not improve from 0.82928

6090/6090 [==============================] - 41s 7ms/sample - loss: 0.3483 - accuracy: 0.8548 - val_loss: 0.3990 - val_accuracy: 0.8240

Epoch 3/3

6080/6090 [============================>.] - ETA: 0s - loss: 0.2758 - accuracy: 0.8914

Epoch 00003: val_accuracy did not improve from 0.82928

6090/6090 [==============================] - 41s 7ms/sample - loss: 0.2756 - accuracy: 0.8916 - val_loss: 0.4515 - val_accuracy: 0.8267

model = TweetBERT()

model.load_weights(path_save_model)

print(new_model)

<__main__.TweetBERT object at 0x7f749ad53b38>

data = pd.DataFrame(history.history).plot()

plt.show()

result = model.predict(test_input)

print(result)

WARNING:tensorflow:Entity > could not be transformed and will be executed as-is. Please report this to the AutoGraph team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output. Cause: Failed to parse source code of >, which Python reported as:

def call(self,inputs):

input_id, input_mask,input_atn = inputs

sequence_output, pooler_output, hidden_states = self.bert_model(input_id,attention_mask=input_mask,token_type_ids=input_atn)

h12 = tf.reshape(hidden_states[-1][:,0],(-1,1,self.hidden_size))

h11 = tf.reshape(hidden_states[-2][:,0],(-1,1,self.hidden_size))

h10 = tf.reshape(hidden_states[-3][:,0],(-1,1,self.hidden_size))

h09 = tf.reshape(hidden_states[-4][:,0],(-1,1,self.hidden_size))

concat_hidden = self.concat(([h12,h11,h10,h09]))

x = self.avgpool(concat_hidden)

# x = sequence_output[:,0,:]

x = self.dropout(x)

x = self.output_(x)

return x

This may be caused by multiline strings or comments not indented at the same level as the code.

WARNING: Entity > could not be transformed and will be executed as-is. Please report this to the AutoGraph team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output. Cause: Failed to parse source code of >, which Python reported as:

def call(self,inputs):

input_id, input_mask,input_atn = inputs

sequence_output, pooler_output, hidden_states = self.bert_model(input_id,attention_mask=input_mask,token_type_ids=input_atn)

h12 = tf.reshape(hidden_states[-1][:,0],(-1,1,self.hidden_size))

h11 = tf.reshape(hidden_states[-2][:,0],(-1,1,self.hidden_size))

h10 = tf.reshape(hidden_states[-3][:,0],(-1,1,self.hidden_size))

h09 = tf.reshape(hidden_states[-4][:,0],(-1,1,self.hidden_size))

concat_hidden = self.concat(([h12,h11,h10,h09]))

x = self.avgpool(concat_hidden)

# x = sequence_output[:,0,:]

x = self.dropout(x)

x = self.output_(x)

return x

This may be caused by multiline strings or comments not indented at the same level as the code.

[[0.5131391 ]

[0.9968698 ]

[0.98543626]

...

[0.99892104]

[0.9709744 ]

[0.98978955]]