【读书1】【2017】MATLAB与深度学习——批处理方法的实现(1)

该代码不是使用每个训练数据点的权值更新dW,并立即训练神经网络。

This code does not immediately train theneural network with the weight update, dW, of the individual training datapoints.

而是将所有训练数据的权值更新累加得到dWsum,然后使用平均权值更新dWavg来一次性调节权值。

It adds the individual weight updates ofthe entire training data to dWsum and adjusts the weight just once using theaverage, dWavg.

这是批处理方法与SGD方法之间最基本的不同之处。

This is the fundamental difference thatseparates this method from the SGD method.

批处理方法中运用的平均特性使得训练过程相对于训练数据的敏感程度较低。

The averaging feature of the batch methodallows the training to be less sensitive to the training data.

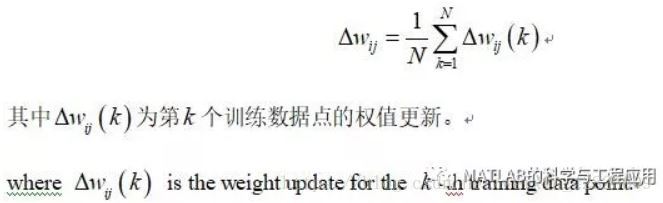

回顾一下式2.6中的权值更新方法。

Recall that Equation 2.6 yields the weightupdate.

当你使用以上代码深入学习时,将会很容易理解该表达式的含义。

It will be much easier to understand thisequation when you look into it using the previous code.

为方便阅读,这里将式2.6再次撰写如下。

Equation 2.6 is shown here again, for yourconvenience.

下面的程序列表给出了测试函数DeltaBatch的MATLAB文件TestDeltaBatch.m。

The following program listing shows theTestDeltaBatch.m file that tests the function DeltaBatch.

该文件调用了DeltaBatch函数,训练神经网络40000次。

This program calls in the functionDeltaBatch and trains the neural network 40,000 times.

将所有的训练数据输入到神经网络中,并显示出结果。

All the training data is fed into thetrained neural network, and the output is displayed.

对比训练结果与训练数据的正确输出,从而验证训练的有效性。

Check the output and correct output fromthe training data to verify the adequacy of the training.

clear all

X = [ 0 0 1;

0 1 1;

1 0 1;

1 1 1;

];

D = [ 0 0 1 1 ];

W = 2*rand(1, 3) - 1;

for epoch = 1:40000

W =DeltaBatch(W, X, D);

end

N = 4;

for k = 1:N

x = X(k, ?’;

v = W*x;

y = Sigmoid(v)

end

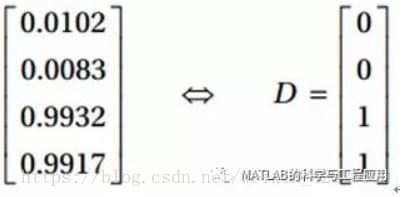

接下来,执行以上代码,将在屏幕上看到以下的输出结果。

Next, execute this code, and you will seethe following values on your screen.

神经网络的训练输出与正确输出D非常相似。

The output is very similar to the correctoutput, D.

这也证明了该神经网络得到了正确的训练。

This verifies that the neural network hasbeen properly trained.

由于这个测试程序与TestDeltaSGD.m文件几乎相同,因此我们不再进行详细解释。

As this test program is almost identical tothe TestDeltaSGD.m file, we will skip the detailed explanation.

该方法有趣的地方是它训练了神经网络40000次。

An interesting point about this method isthat it trained the neural network 40,000 times.

回忆一下,SGD方法只进行了10000次训练。

Recall that the SGD method performed only10,000 trainings.

——本文译自Phil Kim所著的《Matlab Deep Learning》