ELK Stack

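Introduction to ELK

- Background

- For log analysis, running grep and awk directly against log files is usually enough to find what you need. At larger scale this approach becomes inefficient: how do you archive the sheer volume of logs, what do you do when text search is too slow, and how do you query along multiple dimensions? The answer is centralized log management that gathers the logs from every server. The common approach is to build a centralized log collection system that collects, manages, and serves the logs from all nodes in one place.

- Large systems are usually deployed as a distributed architecture, with different service modules running on different servers. When a problem occurs, you typically have to use the key information it exposes to locate the specific server and service module. A centralized logging system makes locating problems much faster.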
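To make the single-server grep/awk approach concrete, here is a minimal sketch. The function name `count_by_ip` and the sample lines are assumptions made for illustration; a real run would read an actual access log instead of the inline sample.

```shell
# Count requests per client IP (the first whitespace-separated field)
# from access-log lines read on stdin, busiest client first.
count_by_ip() {
    awk '{ hits[$1]++ } END { for (ip in hits) print hits[ip], ip }' | sort -k1,1nr -k2,2
}

# Hypothetical sample data standing in for a real log file.
sample_log='192.168.100.1 - - "GET / HTTP/1.1" 200
192.168.100.2 - - "GET /app HTTP/1.1" 404
192.168.100.1 - - "GET /api HTTP/1.1" 200'

printf '%s\n' "$sample_log" | count_by_ip
printf '%s\n' "$sample_log" | grep -c ' 404$'   # number of 404 responses
```

This works well on one machine, but it has to be repeated on every server and re-run for every new question, which is the limitation that motivates a centralized system.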

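A complete centralized logging system needs the following main capabilities:

- Collection: gather log data from many kinds of sources

- Transport: deliver log data reliably to the central system

- Storage: store the log data

- Analysis: support analysis through a UI

- Alerting: provide error reporting and monitoring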

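ELK provides a complete solution. All of its components are open source, they work together smoothly, and they efficiently cover many use cases. It is currently one of the mainstream logging systems.

- Logstash: an open-source server-side data processing pipeline that ingests data from multiple sources at the same time, transforms it, and then stores it in a database (it connects to ES by default and uses a lot of memory).

- Logstash is mainly a tool for collecting, parsing, and filtering logs, and it supports a large number of ways to acquire data. It usually works in a client/server architecture: the client is installed on each host whose logs are to be collected, and the server filters and modifies the logs received from every node and then forwards them to Elasticsearch.

- Elasticsearch: a distributed database that searches, analyzes, and stores data (ES for short).

- Kibana: data visualization.

- Beats: a platform of lightweight shippers that send data from edge machines to Logstash and Elasticsearch.

- Filebeat: a lightweight log shipper (with a very small memory footprint).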

https://www.elastic.co/cn/

https://www.elastic.co/subscriptions

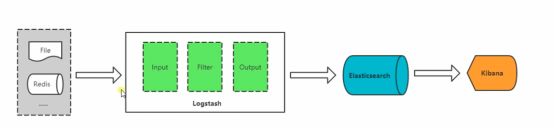

Logstash collects the data and filters out what is needed, then writes it to ES; Kibana reads the data from ES. Operations staff view the results in Kibana's web interface.

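- ELK Stack architecture

When many clients or data sources send data at the same time to the Logstash instance that does the filtering, the volume can be more than Logstash can handle. In that case a message queue should be added between the data sources and Logstash to reduce the pressure on Logstash.

- Message queue: a producer-consumer architecture. Think of the queue as a supermarket that stores data: producers deliver data to the supermarket, and consumers take data away at their own pace, without exceeding the rate at which it is written. Data that has not been consumed yet stays in the supermarket, which relieves the write pressure on the database.

For small and medium deployments of fewer than 500 servers, Redis works well as a lightweight message queue. Redis is very fast for small messages (<1M) but becomes very slow when transferring large ones.

- Redis is single-process and single-threaded, with an asynchronous non-blocking I/O model (epoll)

The three key Logstash modules:

- Input: data sources can be Stdin, File, TCP, Redis, Syslog, and so on

- Filter: formats the logs. There is a rich set of filter plugins: Grok regex capture, Date time handling, Json encoding and decoding, Mutate data modification, and so on

- Output: output targets can be Stdout, File, TCP, Redis, ES, and so on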

Environment setup

- Environment requirements

[root@localhost ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

[root@localhost ~]# uname -r

3.10.0-862.el7.x86_64

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

[root@localhost ~]# sestatus

SELinux status: disabled

- Install the JDK

[root@localhost ~]# ls

anaconda-ks.cfg apache-tomcat-8.5.33.tar.gz jdk-8u60-linux-x64.tar.gz nginx-1.10.2.tar.gz

[root@localhost ~]# tar xf jdk-8u60-linux-x64.tar.gz -C /usr/local/

[root@localhost ~]# mv /usr/local/jdk1.8.0_60 /usr/local/jdk

- Configure the Java environment variables

[root@localhost ~]# vim /etc/profile

[root@localhost ~]# tail -3 /etc/profile

export JAVA_HOME=/usr/local/jdk/

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$CLASSPATH

[root@localhost ~]# source /etc/profile

[root@localhost ~]# java -version

java version "1.8.0_60"

Java(TM) SE Runtime Environment (build 1.8.0_60-b27)

Java HotSpot(TM) 64-Bit Server VM (build 25.60-b23, mixed mode)

Installing and starting Kibana

- Kibana download URL

Kibana is mainly used to present data; it does not store data itself

https://artifacts.elastic.co/downloads/kibana/kibana-6.2.3-linux-x86_64.tar.gz

- Unpack and deploy Kibana

[root@localhost ~]# ls

anaconda-ks.cfg jdk-8u60-linux-x64.tar.gz nginx-1.10.2.tar.gz

apache-tomcat-8.5.33.tar.gz kibana-6.2.3-linux-x86_64.tar.gz

[root@localhost ~]# useradd -s /sbin/nologin -M elk

[root@localhost ~]# tar xf kibana-6.2.3-linux-x86_64.tar.gz -C /usr/local/

[root@localhost ~]# mv /usr/local/kibana-6.2.3-linux-x86_64 /usr/local/kibana

- Edit the Kibana configuration file

[root@localhost ~]# cd /usr/local/kibana/config/

[root@localhost config]# cp kibana.yml{,.bak}

[root@localhost config]# vim kibana.yml

server.port: 5601 #for now, change only these two lines

server.host: "0.0.0.0" #for now, change only these two lines

#elasticsearch.url: "http://localhost:9200"

#elasticsearch.username: "user"

#elasticsearch.password: "pass"

- Change the owner of the Kibana directory to the elk user

[root@localhost config]# chown -R elk:elk /usr/local/kibana/

- Add a startup script, /usr/local/kibana/bin/start.sh

[root@localhost config]# vim /usr/local/kibana/bin/start.sh

nohup /usr/local/kibana/bin/kibana >> /tmp/kibana.log 2>> /tmp/kibana.log &

[root@localhost config]# chmod a+x /usr/local/kibana/bin/start.sh

- Start Kibana as an unprivileged user

[root@localhost config]# su -s /bin/bash elk '/usr/local/kibana/bin/start.sh'

[root@localhost config]# ps -ef | grep elk | grep -v grep

elk 1311 1 10 09:07 pts/0 00:00:08 /usr/local/kibana/bin/../node/bin/node --no-warnings /usr/local/kibana/bin/../src/cli

If a firewall is running, open TCP port 5601

- Check the error log

[root@localhost config]# cat /tmp/kibana.log | grep warning | head -5

{"type":"log","@timestamp":"2019-01-09T14:07:14Z","tags":["warning","elasticsearch","admin"],"pid":1311,"message":"Unable to revive connection: http://localhost:9200/"}

{"type":"log","@timestamp":"2019-01-09T14:07:14Z","tags":["warning","elasticsearch","admin"],"pid":1311,"message":"No living connections"}

{"type":"log","@timestamp":"2019-01-09T14:07:17Z","tags":["warning","elasticsearch","admin"],"pid":1311,"message":"Unable to revive connection: http://localhost:9200/"}

{"type":"log","@timestamp":"2019-01-09T14:07:17Z","tags":["warning","elasticsearch","admin"],"pid":1311,"message":"No living connections"}

{"type":"log","@timestamp":"2019-01-09T14:07:19Z","tags":["warning","elasticsearch","admin"],"pid":1311,"message":"Unable to revive connection: http://localhost:9200/"}

These warnings mean that Kibana cannot connect to Elasticsearch. Ignore them for now, because Elasticsearch has not been installed yet.
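When the log grows, reading raw JSON lines becomes tedious. The sketch below groups warning entries by message text using only grep, sort, and uniq. The function name `summarize_warnings` is an assumption, and the sample lines are shortened copies of the entries shown above. It is a plain text match rather than a JSON parser, so a message containing escaped quotes would be cut short.

```shell
# Group warning entries from a Kibana JSON log (read on stdin) by message text,
# most frequent first.
summarize_warnings() {
    grep '"warning"' \
        | grep -o '"message":"[^"]*"' \
        | sort | uniq -c | sort -rn
}

# Shortened sample entries; a real run would be: summarize_warnings < /tmp/kibana.log
sample='{"type":"log","tags":["warning","elasticsearch","admin"],"pid":1311,"message":"Unable to revive connection: http://localhost:9200/"}
{"type":"log","tags":["warning","elasticsearch","admin"],"pid":1311,"message":"No living connections"}
{"type":"log","tags":["warning","elasticsearch","admin"],"pid":1311,"message":"Unable to revive connection: http://localhost:9200/"}'

printf '%s\n' "$sample" | summarize_warnings
```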

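Using nginx to restrict access to Kibana

- Proxy Kibana through nginx

Kibana has no access control of its own, so nginx can be used to add authentication and IP-based restrictions: requests to port 80 on nginx are reverse-proxied to Kibana. Alternatively, nginx can listen on a different port for Kibana.

#Edit the Kibana configuration file so that it listens on 127.0.0.1

[root@localhost config]# vim /usr/local/kibana/config/kibana.yml

server.host: "127.0.0.1"

#Stop Kibana, then start it again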

[root@localhost config]# ps -ef | grep elk

elk 1311 1 1 09:07 pts/0 00:00:12 /usr/local/kibana/bin/../node/bin/node --no-warnings /usr/local/kibana/bin/../src/cli

root 1360 1241 0 09:19 pts/0 00:00:00 grep --color=auto elk

[root@localhost config]# kill -9 1311

[root@localhost config]# ps -ef | grep elk

root 1362 1241 0 09:19 pts/0 00:00:00 grep --color=auto elk

[root@localhost nginx]# su -s /bin/bash elk '/usr/local/kibana/bin/start.sh'

[root@localhost nginx]# ps -ef | grep elk | grep -v grep

elk 1415 1 12 09:35 pts/0 00:00:02 /usr/local/kibana/bin/../node/bin/node --no-warnings /usr/local/kibana/bin/../src/cli

- Use nginx to restrict access by source IP

#Compile and install nginx

[root@localhost ~]# tar xf nginx-1.10.2.tar.gz -C /usr/src/

[root@localhost ~]# cd /usr/src/nginx-1.10.2/

[root@localhost nginx-1.10.2]# yum -y install pcre-devel openssl-devel

[root@localhost nginx-1.10.2]# useradd -s /sbin/nologin -M www

[root@localhost nginx-1.10.2]# ./configure --user=www --group=www --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module

[root@localhost nginx-1.10.2]# make && make install

[root@localhost nginx-1.10.2]# ln -s /usr/local/nginx/sbin/* /usr/local/sbin/

[root@localhost config]# nginx -V

nginx version: nginx/1.10.2

built by gcc 4.8.5 20150623 (Red Hat 4.8.5-36) (GCC)

built with OpenSSL 1.0.2k-fips 26 Jan 2017

TLS SNI support enabled

configure arguments: --user=www --group=www --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module

#Edit the nginx configuration file to add access control, then start nginx

[root@localhost nginx]# cp conf/nginx.conf{,.bak}

[root@localhost nginx]# egrep -v "#|^$" conf/nginx.conf.bak > conf/nginx.conf

[root@localhost nginx]# vim conf/nginx.conf

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

log_format main '$remote_addr - $remote_user [$time_local] "$request"'

'$status $body_bytes_sent "$http_referer"'

'"$http_user_agent""$http_x_forwarded_for"';

server {

listen 5609;

access_log /usr/local/nginx/logs/kibana_access.log main;

error_log /usr/local/nginx/logs/kibana_error.log error;

location / {

allow 192.168.100.1;

deny all;

proxy_pass http://127.0.0.1:5601;

}

}

}

[root@localhost nginx]# /usr/local/nginx/sbin/nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

[root@localhost nginx]# /usr/local/nginx/sbin/nginx

[root@localhost nginx]# netstat -antup | grep nginx

tcp 0 0 0.0.0.0:5609 0.0.0.0:* LISTEN 1405/nginx: master

#nginx setup is complete

[root@www html]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.146.174 www.yunjisuan.com

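#To restrict access by username and password instead, use basic authentication in the location block

location / {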

auth_basic "elk auth";

auth_basic_user_file /usr/local/nginx/conf/htpasswd;

proxy_pass http://127.0.0.1:5601;

}
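The auth_basic_user_file directive above expects a file of user:hash lines, and the steps here do not show how to create it. One possible way, sketched under the assumption that the openssl command is installed, is to generate an Apache MD5 (apr1) hash, a format nginx accepts. The function name `make_htpasswd_line`, the user name, and the password are placeholders.

```shell
# Print one htpasswd-style line ("user:hash") using the Apache MD5 (apr1) scheme.
# $1 = user name, $2 = password
make_htpasswd_line() {
    printf '%s:%s\n' "$1" "$(openssl passwd -apr1 "$2")"
}

# Placeholder credentials for illustration only.
make_htpasswd_line kibana_admin 'change-me'
```

Redirecting the output to /usr/local/nginx/conf/htpasswd and reloading nginx would put it into effect. Note that passing a password as a command-line argument exposes it to other local users through the process list, so an interactive prompt is preferable on shared hosts.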

Installing and starting Elasticsearch

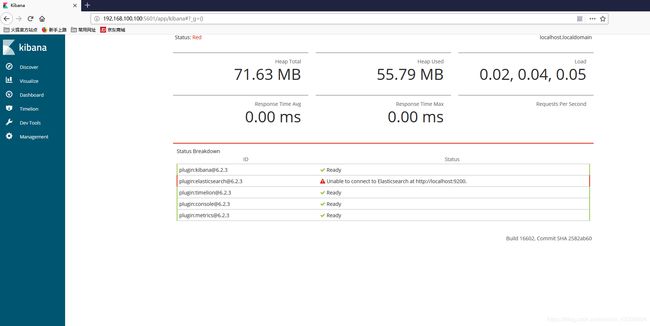

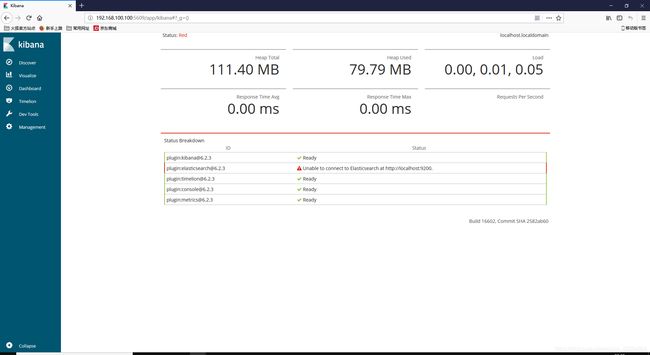

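Before Elasticsearch is installed, the Kibana web page reports an error saying that it cannot find Elasticsearch.

- Elasticsearch download URL

Elasticsearch is mainly used to store data, which Kibana retrieves and presents

https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.3.tar.gz

- Unpack and deploy Elasticsearch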

[root@localhost ~]# tar xf elasticsearch-6.2.3.tar.gz -C /usr/local/

[root@localhost ~]# mv /usr/local/elasticsearch-6.2.3 /usr/local/elasticsearch

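- Elasticsearch configuration

#Change the following settings in the configuration file

[root@localhost ~]# vim /usr/local/elasticsearch/config/elasticsearch.yml

path.data: /usr/local/elasticsearch/data

path.logs: /usr/local/elasticsearch/logs

network.host: 127.0.0.1 #accept connections from the local host only, to keep ES secure; in production ES is normally reached through the server's IP address

http.port: 9200

- Change the owner and group of the Elasticsearch directory to elk

[root@localhost ~]# chown -R elk:elk /usr/local/elasticsearch/

- Adjust the JVM memory limits (this depends on your machine; for this lab the virtual machine was given 2 GB of memory)

Because the lab environment is a virtual machine, 1 GB of memory fills up quickly and everything becomes slow, so the memory limits need to be adjusted.

[root@ELK ~]# vim /usr/local/elasticsearch/config/jvm.options

-Xms1g

-Xmx1g

- Write an Elasticsearch startup script that uses -d to run it in the background.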

[root@localhost ~]# vim /usr/local/elasticsearch/bin/start.sh

/usr/local/elasticsearch/bin/elasticsearch -d >> /tmp/elasticsearch.log 2>> /tmp/elasticsearch.log

[root@localhost ~]# chmod a+x /usr/local/elasticsearch/bin/start.sh

- Start Elasticsearch

[root@localhost ~]# su -s /bin/bash elk '/usr/local/elasticsearch/bin/start.sh'

[root@localhost ~]# ps -ef | grep elk | grep -v grep

elk 10389 1 3 10:46 pts/0 00:00:06 /usr/local/kibana/bin/../node/bin/node --no-warnings /usr/local/kibana/bin/../src/cli

elk 10450 1 78 10:49 pts/0 00:00:01 /usr/local/jdk//bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.io.tmpdir=/tmp/elasticsearch.JfukyhKj -XX:+HeapDumpOnOutOfMemoryError -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:logs/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -Des.path.home=/usr/local/elasticsearch -Des.path.conf=/usr/local/elasticsearch/config -cp /usr/local/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d

- Watch the logs to see whether Kibana still reports Elasticsearch errors

[root@localhost ~]# tail -f /tmp/kibana.log

Reload the URL: http://192.168.100.100:5609

Watch the logs and check whether any errors remain.

Note: if Elasticsearch listens on an address other than 127.0.0.1, kernel parameters and other settings must also be changed; that is not covered here.
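As a rough illustration of the kind of check that note refers to, the sketch below compares two system limits against minimum values. The thresholds (vm.max_map_count of at least 262144 and an open-file limit of at least 65536) are the editor's recollection of the Elasticsearch bootstrap checks and are an assumption here, so confirm them against the documentation for your version. The function names are hypothetical.

```shell
# Succeed when an observed limit ($1) meets a required minimum ($2).
# The value "unlimited", as printed by ulimit, always passes.
meets_minimum() {
    [ "$1" = "unlimited" ] || [ "$1" -ge "$2" ]
}

# Print a one-line verdict: $1 = setting name, $2 = observed value, $3 = required minimum.
report() {
    if meets_minimum "$2" "$3"; then status=OK; else status=LOW; fi
    echo "$status $1=$2 (need >= $3)"
}

# Assumed thresholds; verify against the Elasticsearch documentation.
if [ -r /proc/sys/vm/max_map_count ]; then
    report vm.max_map_count "$(cat /proc/sys/vm/max_map_count)" 262144
fi
report nofile "$(ulimit -n)" 65536
```

A value reported as LOW can usually be raised with sysctl -w vm.max_map_count=262144 for the kernel setting, and through /etc/security/limits.conf for the open-file limit of the elk user.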

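Installing and starting Logstash

- Logstash download URL

Logstash reads logs, parses them with regular expressions, and sends them to the Elasticsearch database

https://artifacts.elastic.co/downloads/logstash/logstash-6.2.3.tar.gz

- Unpack and deploy Logstash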

[root@localhost ~]# tar xf logstash-6.2.3.tar.gz -C /usr/local/

[root@localhost ~]# mv /usr/local/logstash-6.2.3 /usr/local/logstash

- Change the Logstash JVM settings to limit memory

#Change the following settings

[root@localhost ~]# vim /usr/local/logstash/config/jvm.options

-Xms1g

-Xmx1g

- Create the Logstash configuration file

#The configuration file does not exist yet and must be created

[root@localhost ~]# vim /usr/local/logstash/config/logstash.conf

input {

file {

path => "/usr/local/nginx/logs/kibana_access.log" #path of the log to read

}

}

output {

elasticsearch {

hosts => ["http://127.0.0.1:9200"] #URL where the logs are stored

}

}

- Logstash startup script

[root@localhost ~]# vim /usr/local/logstash/bin/start.sh

nohup /usr/local/logstash/bin/logstash -f /usr/local/logstash/config/logstash.conf >> /tmp/logstash.log 2>> /tmp/logstash.log &

[root@localhost ~]# chmod a+x /usr/local/logstash/bin/start.sh

- Start Logstash

Logstash does not listen on a service port in this setup, so it does not need to be started as the elk user

[root@localhost ~]# /usr/local/logstash/bin/start.sh

[root@localhost ~]# ps -ef | grep logstash

root 10700 1 76 11:12 pts/0 00:00:12 /usr/local/jdk//bin/java -Xms1g -Xmx1g -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.threshold=0 -XX:+HeapDumpOnOutOfMemoryError -Djava.security.egd=file:/dev/urandom -cp /usr/local/logstash/logstash-core/lib/jars/animal-sniffer-annotations-1.14.jar:/usr/local/logstash/logstash-core/lib/jars/commons-compiler-3.0.8.jar:/usr/local/logstash/logstash-core/lib/jars/error_prone_annotations-2.0.18.jar:/usr/local/logstash/logstash-core/lib/jars/google-java-format-1.5.jar:/usr/local/logstash/logstash-core/lib/jars/guava-22.0.jar:/usr/local/logstash/logstash-core/lib/jars/j2objc-annotations-1.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-annotations-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-core-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-databind-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-dataformat-cbor-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/janino-3.0.8.jar:/usr/local/logstash/logstash-core/lib/jars/javac-shaded-9-dev-r4023-3.jar:/usr/local/logstash/logstash-core/lib/jars/jruby-complete-9.1.13.0.jar:/usr/local/logstash/logstash-core/lib/jars/jsr305-1.3.9.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-api-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-core-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-slf4j-impl-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/logstash-core.jar:/usr/local/logstash/logstash-core/lib/jars/slf4j-api-1.7.25.jar org.logstash.Logstash -f /usr/local/logstash/config/logstash.conf

root 10725 10274 0 11:12 pts/0 00:00:00 grep --color=auto logstash

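Special notes:

Logstash starts slowly, so wait a while.

If Kibana's Discover page lets you add an index, Logstash has finished starting

Aspects of the logs that operations staff analyze:

(1) Concurrent access volume (PV)

(2) Image traffic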

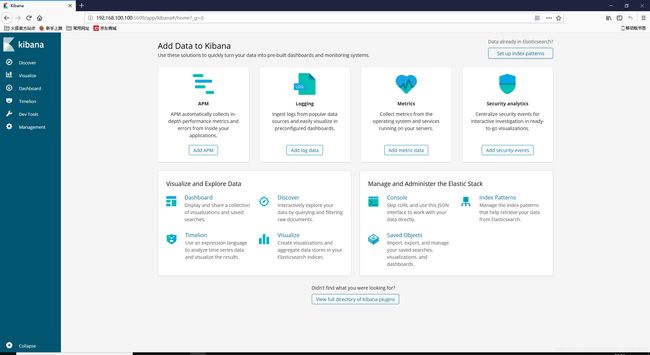

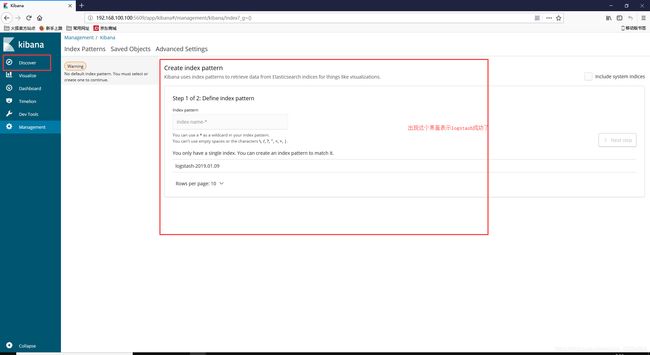

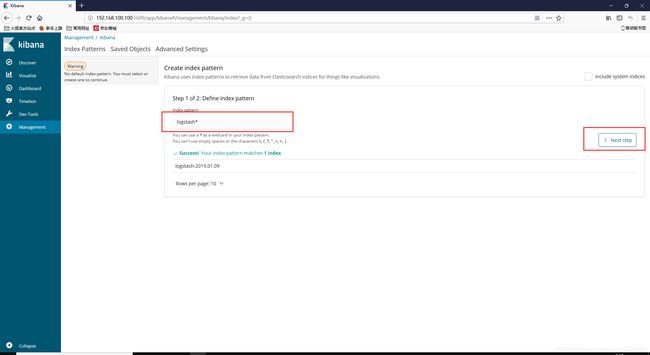

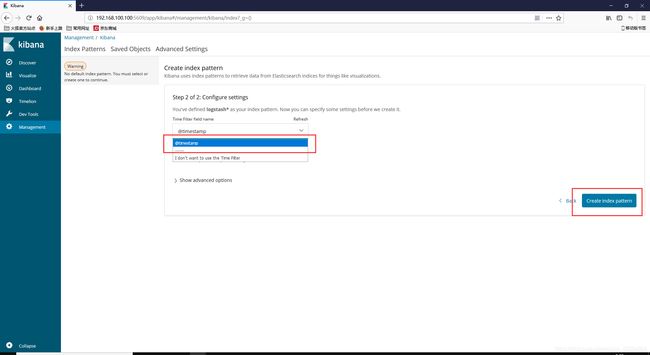

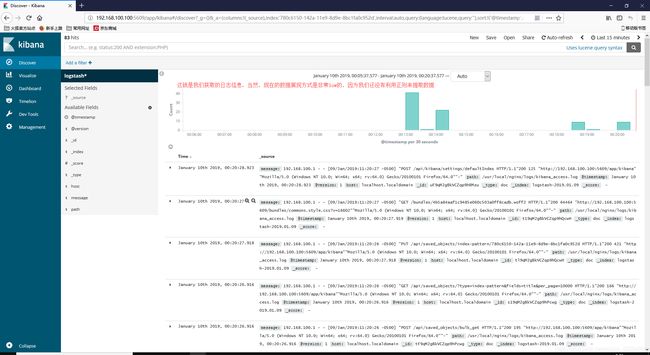

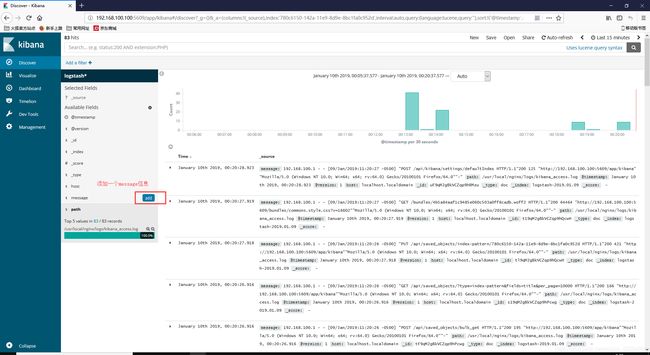

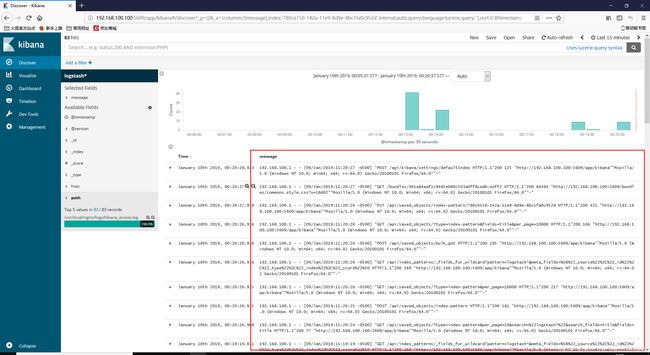

- Configure an index pattern in Kibana to display the collected Kibana log data

Select the fields to display

Trace the data in nginx's kibana_access.log and compare the results

[root@localhost ~]# tail -f /usr/local/nginx/logs/kibana_access.log

Using Logstash in detail

#Run the following command

[root@ELK ~]# /usr/local/logstash/bin/logstash -e ""

welcome #the text typed as input

Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties

[2019-01-09T20:32:36,851][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/usr/local/logstash/modules/fb_apache/configuration"}

[2019-01-09T20:32:36,894][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/usr/local/logstash/modules/netflow/configuration"}

[2019-01-09T20:32:39,478][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2019-01-09T20:32:40,873][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.2.3"}

[2019-01-09T20:32:41,811][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

[2019-01-09T20:32:44,847][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

The stdin plugin is now waiting for input:

[2019-01-09T20:32:45,170][INFO ][logstash.pipeline ] Pipeline started succesfully {:pipeline_id=>"main", :thread=>"#"}

[2019-01-09T20:32:45,356][INFO ][logstash.agent ] Pipelines running {:count=>1, :pipelines=>["main"]}

{

"@version" => "1",

"type" => "stdin",

"host" => "localhost",

"message" => "welcome",

"@timestamp" => 2019-01-10T01:32:45.302Z

}

^C[2019-01-09T20:32:58,826][WARN ][logstash.runner ] SIGINT received. Shutting down.

[2019-01-09T20:32:59,392][INFO ][logstash.pipeline ] Pipeline has terminated {:pipeline_id=>"main", :thread=>"#"}

As you can see, Logstash automatically added several fields to the event: the timestamp @timestamp, the version @version, the input type type, and the hostname host

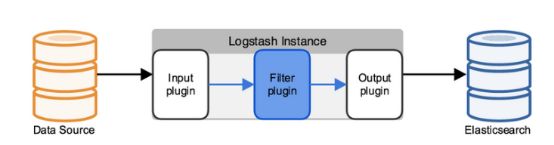

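- How Logstash works

Logstash collects, processes, and outputs logs as a pipeline. It is similar to a shell pipeline such as xxx|ccc|ddd, where the output of xxx is passed to ccc and then on to ddd.

A Logstash pipeline has three stages:

input -> filter (optional) -> output

Each stage has many plugins to work with, such as file, elasticsearch, and redis

Each stage can also use several plugins at once; for example, output can go to Elasticsearch and also to stdout to print on the console.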
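The shell-pipeline analogy can be made concrete with a toy sketch. This is only an analogy and not how Logstash is implemented internally; the three function names and the sample lines are assumptions made for illustration.

```shell
# Input stage: emit raw lines (hypothetical sample data standing in for a log file).
input_stage() {
    printf '%s\n' \
        'Aug 16 18:29:49 ELK systemd: Startup finished' \
        'Aug 16 18:30:02 ELK sshd: Accepted password'
}

# Filter stage: reshape each line by pulling the hostname (4th field) into a named field.
filter_stage() {
    awk '{ print "hostname=" $4 " message=" $0 }'
}

# Output stage: print to the terminal and append a copy to a file ($1, default /dev/null),
# loosely mirroring a pipeline that has two outputs.
output_stage() {
    tee -a "${1:-/dev/null}"
}

input_stage | filter_stage | output_stage
```

Swapping any one function for another leaves the other two untouched, which is the same property that lets Logstash plugins be combined freely.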

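This plugin-based organization makes Logstash easy to extend and customize

- Common command-line options

- -f: specifies the Logstash configuration file; Logstash is configured from that file

- -e: followed by a string that is used as the Logstash configuration (if it is "", stdin is used as the input and stdout as the output by default)

- -l: where to write the logs (by default they go to stdout and are printed on the console)

- -t: tests whether the configuration file is valid, then exits.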

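- Configuration file format

As described above, Logstash is made up of three parts: input, output, and an optional filter that is added only when needed. The standard configuration file layout is therefore:

(1) input {…}

(2) filter {…}

(3) output {…}

Each section can also contain several plugins. For example, to read from two log files, write: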

input {

file { path => "/var/log/messages" type => "syslog" }

file { path => "/var/log/apache/access.log" type => "apache" }

}

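Similarly, if a filter section contains several processing rules, they are applied one by one in order. Note, however, that some plugins are not thread-safe.

For example, if the same plugin is specified twice in a filter, the two instances are not guaranteed to run in exact order, so the official recommendation is to avoid using the same plugin more than once in a filter.

Testing log field extraction with Logstash regular expressions

Let's change the Logstash configuration file to test extracting data with a regex.

#Template Logstash configuration file for extracting fields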

[root@ELK config]# cat logstash.conf

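input {

stdin{} #read data from standard input

}

filter {

grok {

match => {

"message" => '(?<field_name>regular_expression).*'

}

}

}

output {

elasticsearch { #send events to Elasticsearch; this output can be used together with stdout{}

hosts => ["http://127.0.0.1:9200"]

}

stdout { #print events to the screen

codec => rubydebug #for regex extraction tests: prints the parsed result to the screen

}

}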

- Trial extraction of log fields

#Edit the Logstash configuration file to send the data to the database

[root@localhost ~]# vim /usr/local/logstash/config/logstash.conf

input {

stdin{}

}

filter {

grok {

match => {

"message" => '(?<mydate>[a-zA-Z]+ [0-9]+ [0-9:]+) (?<hostname>[a-zA-Z]+).*'

}

}

}

output {

elasticsearch {

hosts => ["http://127.0.0.1:9200"]

}

}

#Start Logstash interactively

[root@localhost ~]# /usr/local/logstash/bin/logstash -f /usr/local/logstash/config/logstash.conf

#Enter the following test line

Aug 16 18:29:49 ELK systemd: Startup finished in 789ms (kernel) + 1.465s (initrd) + 18.959s (userspace) = 21.214s.

- Send the extracted fields to Elasticsearch and display them in Kibana

#The Logstash configuration file is as follows

[root@localhost ~]# vim /usr/local/logstash/config/logstash.conf

input {

stdin{}

}

filter {

grok {

match => {

"message" => '(?<mydate>[a-zA-Z]+ [0-9]+ [0-9:]+) (?<hostname>[a-zA-Z]+).*'

}

}

}

output {

elasticsearch {

hosts => ["http://127.0.0.1:9200"]

}

stdout { #print to the screen on standard output

codec => rubydebug

}

}

#Start Logstash interactively

[root@localhost ~]# /usr/local/logstash/bin/logstash -f /usr/local/logstash/config/logstash.conf

#Enter the test line; the parsed event is printed after it

Aug 16 18:29:49 ELK systemd: Startup finished in 789ms (kernel) + 1.465s (initrd) + 18.959s (userspace) = 21.214s.

{

"mydate" => "Aug 16 18:29:49",

"@timestamp" => 2019-01-10T06:24:20.842Z,

"hostname" => "ELK",

"message" => "Aug 16 18:29:49 ELK systemd: Startup finished in 789ms (kernel) + 1.465s (initrd) + 18.959s (userspace) = 21.214s.",

"host" => "localhost",

"@version" => "1"

}
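Restarting Logstash for every regex change is slow, so it can help to try the pattern against a sample line first. The sketch below approximates the same two captures with sed and POSIX extended regular expressions. It is not grok itself: the function name `extract_syslog_fields` is an assumption, and the pattern is anchored with ^ here, which grok does not do by default.

```shell
# Approximate the two grok captures (mydate, hostname) for lines read on stdin.
# Lines that do not match are silently dropped.
extract_syslog_fields() {
    sed -nE 's/^([a-zA-Z]+ [0-9]+ [0-9:]+) ([a-zA-Z]+).*/mydate=\1 hostname=\2/p'
}

line='Aug 16 18:29:49 ELK systemd: Startup finished in 789ms (kernel) + 1.465s (initrd) + 18.959s (userspace) = 21.214s.'

printf '%s\n' "$line" | extract_syslog_fields
# prints: mydate=Aug 16 18:29:49 hostname=ELK

printf '%s\n' 'no leading date here' | extract_syslog_fields
# prints nothing, because the line does not match
```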

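Full analysis of nginx logs with ELK

If Logstash sends each log line to Elasticsearch unparsed, what Kibana displays is of little use, so the fields of interest must be extracted. For example, extracting the response code, the URL the user visited, or the response time all rely on regular expressions.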

#Template Logstash configuration file for extracting fields

input { #where the logs come from

file {

path => "/usr/local/nginx/logs/kibana_access.log"

}

}

filter { #extracts fields from the data

grok {

match => {

"message" => '(?<field_name>regular_expression).*'

}

}

}

output { #where the data is sent

elasticsearch {

hosts => ["http://127.0.0.1:9200"]

}

}

- Use a regex to extract the IP address from message in the Kibana access log

[root@localhost ~]# vim /usr/local/logstash/config/logstash.conf

input {

file {

path => "/usr/local/nginx/logs/kibana_access.log"

}

}

filter {

grok {

match => {

#the field name ip is an illustrative choice

"message" => '(?<ip>[0-9.]+) .*'

}

}

}

output {

elasticsearch {

hosts => ["http://127.0.0.1:9200"]

}

}

[root@localhost ~]# tail -1 /usr/local/nginx/logs/kibana_access.log

192.168.100.1 - - [10/Jan/2019:01:13:41 -0500] "PUT /api/saved_objects/index-pattern/780c6150-142a-11e9-8d9e-8bc1fa0c952d HTTP/1.1"200 430 "http://192.168.100.100:5609/app/kibana""Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0""-"

- Extract the IP address, status code, byte size, and URL from message in the Kibana access log

[root@localhost ~]# tail -1 /usr/local/nginx/logs/kibana_access.log

192.168.100.1 - - [10/Jan/2019:01:13:41 -0500] "PUT /api/saved_objects/index-pattern/780c6150-142a-11e9-8d9e-8bc1fa0c952d HTTP/1.1"200 430 "http://192.168.100.100:5609/app/kibana""Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0""-"

[root@localhost ~]# vim /usr/local/logstash/config/logstash.conf

input {

file {

path => "/usr/local/nginx/logs/kibana_access.log"

}

}

filter {

grok {

match => {

#the field names ip, status, bytes, and referer are illustrative choices

"message" => '(?<ip>[0-9.]+) .*HTTP/[0-9.]+"(?<status>[0-9]+) (?<bytes>[0-9]+)[ "]+(?<referer>[a-zA-Z]+://[0-9.]+:[0-9]+/[a-zA-Z/]+)".*'

}

}

}

output {

elasticsearch {

hosts => ["http://127.0.0.1:9200"]

}

}
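The access-log pattern can be checked against the sample line without Logstash. The sketch below uses sed with POSIX extended regular expressions as a stand-in for grok. The function name `extract_access_fields` and the output labels ip, status, bytes, and referer are assumptions chosen for illustration, and the pattern is anchored with ^ for clarity.

```shell
# Approximate the four captures of the access-log pattern for lines read on stdin.
# '#' is used as the sed delimiter because the pattern itself contains '/'.
extract_access_fields() {
    sed -nE 's#^([0-9.]+) .*HTTP/[0-9.]+"([0-9]+) ([0-9]+)[ "]+([a-zA-Z]+://[0-9.]+:[0-9]+/[a-zA-Z/]+)".*#ip=\1 status=\2 bytes=\3 referer=\4#p'
}

# The sample line from kibana_access.log shown above.
line='192.168.100.1 - - [10/Jan/2019:01:13:41 -0500] "PUT /api/saved_objects/index-pattern/780c6150-142a-11e9-8d9e-8bc1fa0c952d HTTP/1.1"200 430 "http://192.168.100.100:5609/app/kibana""Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0""-"'

printf '%s\n' "$line" | extract_access_fields
# prints: ip=192.168.100.1 status=200 bytes=430 referer=http://192.168.100.100:5609/app/kibana
```

The pattern relies on the log_format defined earlier, which writes the status code immediately after the closing quote of the request. A request with no referer is logged as "-" and would not match, so such lines are dropped by this sketch.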