Android Audio and Video Notes (1): The Audio Module

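This article is part of a series on Android audio and video. Jump to any part from here:

0. Android Audio and Video Notes

1. Android Audio and Video Notes (1): The Audio Module

2. Android Audio and Video Notes (2): Audio and Video Encoding and Decoding

3. Android Audio and Video Notes (3): The Image Module

4. Android Audio and Video Notes (4): Audio and Video Players

5. Android Audio and Video Notes (5): Wrapping the MediaSDK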

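Abstract: I cover the audio module in three parts: audio files, audio recording, and audio playback. The audio files part focuses on audio formats and encodings; the recording part introduces the Android APIs that can record audio; the playback part shows how to play audio files.

1. Audio Files

As the name suggests, an audio file is a file that stores audio. Common formats include PCM, WAV, MP3, OGG, AAC, and M4A; the formats differ mainly in how the audio is encoded.

Most of the audio we use every day starts out as PCM-encoded data. PCM stores the sampled signal without compression, so it preserves the audio at the highest fidelity and can serve practically any entertainment use.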

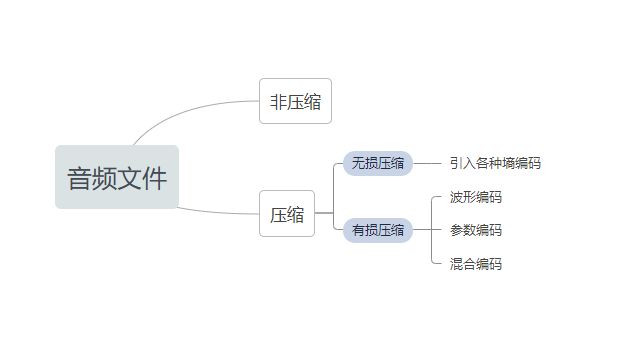

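However, the high fidelity of PCM comes at the cost of storage space, so sound quality and file size constrain each other. To balance the two, audio formats fall into two categories: uncompressed (for example WAV) and compressed (for example MP3).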
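To make the storage cost concrete, here is a minimal sketch that estimates the size of uncompressed PCM from its sample rate, channel count, and bit depth. The class and method names (`PcmSize`, `bytes`) are illustrative assumptions, not part of any Android API.

```java
/** Rough size estimate for uncompressed PCM audio (illustrative helper, not an Android API). */
final class PcmSize {

    /** Bytes needed for the given duration: sampleRate * channels * bytesPerSample * seconds. */
    static long bytes(int sampleRate, int channels, int bitsPerSample, int seconds) {
        return (long) sampleRate * channels * (bitsPerSample / 8) * seconds;
    }

    public static void main(String[] args) {
        // One minute of 44.1 kHz, stereo, 16-bit PCM
        long oneMinute = bytes(44100, 2, 16, 60);
        System.out.println(oneMinute + " bytes"); // 10584000 bytes, roughly 10 MB
    }
}
```

At roughly 10 MB per minute for CD-quality stereo, it is easy to see why compressed formats exist.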

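Audio compression uses various algorithms to greatly reduce the amount of audio data. Lossy compression does so by discarding content the human ear barely notices and by exploiting psychoacoustic effects, so the perceived quality stays close to the original. Compression comes in two kinds, lossy (for example AAC and M4A) and lossless (for example FLAC and APE), as shown in the figure: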

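Note: to repeat a point from the previous article, PCM is not an audio file format. It is the raw sound data itself, encoded as PCM, with no file header. Only after that data is processed by a format's compression and encoding algorithm and prefixed with that format's file header does it become an audio file in that format.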
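WAV is the simplest illustration of this point, because it needs no compression step: the file is a 44-byte RIFF header followed by the raw PCM bytes. The sketch below builds that canonical header; the class and method names (`WavHeader`, `build`, `toWav`) are assumptions made for the example.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

/** Builds the canonical 44-byte WAV header for raw PCM data (illustrative sketch). */
final class WavHeader {

    static byte[] build(int sampleRate, int channels, int bitsPerSample, int pcmLength) {
        int byteRate = sampleRate * channels * bitsPerSample / 8;
        short blockAlign = (short) (channels * bitsPerSample / 8);
        // All multi-byte fields in a WAV header are little-endian
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII));
        b.putInt(36 + pcmLength);              // size of everything after this field
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII));
        b.putInt(16);                          // size of the fmt chunk for PCM
        b.putShort((short) 1);                 // audio format 1 = uncompressed PCM
        b.putShort((short) channels);
        b.putInt(sampleRate);
        b.putInt(byteRate);
        b.putShort(blockAlign);
        b.putShort((short) bitsPerSample);
        b.put("data".getBytes(StandardCharsets.US_ASCII));
        b.putInt(pcmLength);                   // number of PCM bytes that follow
        return b.array();
    }

    /** Prepends the header to the PCM bytes, producing the contents of a .wav file. */
    static byte[] toWav(byte[] pcm, int sampleRate, int channels, int bitsPerSample) {
        byte[] header = build(sampleRate, channels, bitsPerSample, pcm.length);
        byte[] wav = new byte[header.length + pcm.length];
        System.arraycopy(header, 0, wav, 0, header.length);
        System.arraycopy(pcm, 0, wav, header.length, pcm.length);
        return wav;
    }
}
```

Writing the result of `toWav` to disk should give a file that ordinary players accept, assuming the parameters match how the PCM was recorded.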

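With that covered, the rest of this article uses a few of these formats to walk through the audio recording and playback features available on Android.

2. Audio Recording

Android offers four ways to record audio, and developers can pick one according to the use case:

- Launching the system recorder through an Intent

- Recording with MediaRecorder

- Recording with AudioRecord

- Recording with OpenSL ES

Recording audio on Android requires the record-audio permission and the file-write permission: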

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

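2.1 Launching the System Recorder Through an Intent

① Launch the system recorder through an Intent. When recording finishes, the uri of the saved audio file is returned in onActivityResult.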

private final static int REQUEST_RECORDER = 1;

private Uri uri;

// Start recording

public void startRecord(){

Intent intent = new Intent(MediaStore.Audio.Media.RECORD_SOUND_ACTION);

startActivityForResult(intent,REQUEST_RECORDER);

}

② Read the result in onActivityResult

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

if (resultCode == RESULT_OK && requestCode == REQUEST_RECORDER && data != null) {

// uri of the recorded file

uri = data.getData();

}

}

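2.2 Recording Audio with MediaRecorder

The MediaRecorder class can record both audio and video. It bundles capture, encoding, and compression, but it only supports a handful of output formats such as .aac, .amr, and .3gp; it cannot produce WAV or MP3. For example: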

private MediaRecorder mRecorder;

private boolean isRecording = false;

private String mFileName="text.aac";

/**

* Start recording

*/

private void startRecord() {

try {

// If a recording is in progress, stop it and release the MediaRecorder

if (isRecording && mRecorder != null) {

mRecorder.stop();

mRecorder.release();

mRecorder = null;

}

// Create the MediaRecorder

mRecorder = new MediaRecorder();

// Use the microphone as the audio source

mRecorder.setAudioSource(MediaRecorder.AudioSource.MIC);

// Set the output format to AAC (ADTS)

mRecorder.setOutputFormat(MediaRecorder.OutputFormat.AAC_ADTS);

// Set the output file; mFileName is the path the recording is written to

mRecorder.setOutputFile(mFileName);

// Set the audio encoder to AAC

mRecorder.setAudioEncoder(MediaRecorder.AudioEncoder.AAC);

// Prepare the recorder

mRecorder.prepare();

// Start recording

mRecorder.start();

isRecording = true;

} catch (IOException e) {

e.printStackTrace();

}

}

/**

* Stop recording

*/

private void stopRecord() {

if (isRecording && mRecorder != null) {

mRecorder.stop();

mRecorder.release();

mRecorder = null;

isRecording = false;

}

}

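2.3 Recording Audio with AudioRecord

AudioRecord outputs raw PCM data, so producing a directly playable file requires an encoding step. Its strength is that it exposes the raw audio stream as it is captured, which lets you process the data while recording: encoding, voice changing, mixing, and so on. For example:

// Recording state; volatile because it is written from another thread

private volatile boolean isRecording = false;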

/**

* Start recording

*/

private void startRecord(){

// Recording blocks, so run it on a worker thread

new Thread(){

@Override

public void run() {

// Audio input source

int audioSource = MediaRecorder.AudioSource.MIC;

// Sample rate in Hz

int sampleRate = 44100;

// Channel configuration

int channelConfig = AudioFormat.CHANNEL_IN_STEREO; // stereo

// Sample format (bit depth)

int audioFormat = AudioFormat.ENCODING_PCM_16BIT;

// Minimum buffer size for these parameters

int minBufferSize = AudioRecord.getMinBufferSize(sampleRate, channelConfig, audioFormat);

// Buffer that receives the recorded PCM data

byte[] buffer = new byte[minBufferSize];

// Create the recorder

AudioRecord audioRecord = new AudioRecord(audioSource, sampleRate, channelConfig, audioFormat, minBufferSize);

try {

// Start

audioRecord.startRecording();

isRecording = true;

while (isRecording) {

int readSize = audioRecord.read(buffer, 0, minBufferSize);

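// buffer now holds raw PCM data; process it here: mix, pitch-shift, denoise, encode, play back while recording, save, and so on

// readSize is the number of bytes actually read in this pass (negative on error)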

}

// Stop recording

audioRecord.stop();

// Release

audioRecord.release();

} catch (IllegalStateException e) {

e.printStackTrace();

}

}

}.start();

}

/**

* Stop recording

*/

private void stop() {

// Signal the recording thread to stop

isRecording = false;

}
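As an illustration of processing the PCM buffer inside the read loop above, here is a minimal plain-Java sketch that measures the RMS level of 16-bit little-endian PCM and applies a gain with clipping. The class and method names (`PcmLevel`, `rmsDb`, `applyGain`) are assumptions for this example; it is not the algorithm used in the OpenSL ES wrapper later in this article.

```java
/** Simple processing of 16-bit little-endian PCM buffers (illustrative sketch). */
final class PcmLevel {

    /** Decodes the sample at the given index from two little-endian bytes. */
    private static short sampleAt(byte[] buffer, int index) {
        int lo = buffer[2 * index] & 0xFF;   // low byte, unsigned
        int hi = buffer[2 * index + 1];      // high byte, keeps the sign
        return (short) ((hi << 8) | lo);
    }

    /** RMS level in dB relative to full scale; negative infinity for silence. */
    static double rmsDb(byte[] buffer, int length) {
        int samples = length / 2;
        if (samples == 0) return Double.NEGATIVE_INFINITY;
        double sumSquares = 0;
        for (int i = 0; i < samples; i++) {
            double v = sampleAt(buffer, i) / 32768.0;
            sumSquares += v * v;
        }
        double rms = Math.sqrt(sumSquares / samples);
        return rms == 0 ? Double.NEGATIVE_INFINITY : 20 * Math.log10(rms);
    }

    /** Multiplies every sample by gain in place, clipping to the 16-bit range. */
    static void applyGain(byte[] buffer, int length, double gain) {
        int samples = length / 2;
        for (int i = 0; i < samples; i++) {
            long scaled = Math.round(sampleAt(buffer, i) * gain);
            if (scaled > Short.MAX_VALUE) scaled = Short.MAX_VALUE;
            if (scaled < Short.MIN_VALUE) scaled = Short.MIN_VALUE;
            buffer[2 * i] = (byte) (scaled & 0xFF);
            buffer[2 * i + 1] = (byte) ((scaled >> 8) & 0xFF);
        }
    }
}
```

In the recording loop, these would be called with `buffer` and `readSize` after each successful read, for example to drive a level meter before the data is saved or encoded.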

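2.4 Recording Audio with OpenSL ES

This is the important one: the player SDK built later in this series uses OpenSL ES for all audio recording and playback.

OpenSL ES (Open Sound Library for Embedded Systems) is a royalty-free, cross-platform, hardware-accelerated audio API tuned for embedded systems. It gives native application developers on embedded mobile multimedia devices a standardized, high-performance, low-latency way to implement audio features, and it allows software and hardware audio capabilities to be deployed directly across platforms, which lowers implementation effort. In short, OpenSL ES is a free, cross-platform audio library for embedded systems.

Developing with OpenSL ES involves six main steps:

- Create the interface object

- Set up the output mix

- Create the recorder (or player)

- Set up the buffer queue and the callback function

- Set the play state

- Trigger the callback function

OpenSL ES can be tailored to cover almost every audio feature an Android app needs, which makes it very powerful. We implement its audio features one by one through NDK development.

First, to use the OpenSL ES API, include the OpenSL ES headers:

#include <SLES/OpenSLES.h>

#include <SLES/OpenSLES_Android.h>

Explaining concepts alone does not make this very approachable, so let's go straight to my wrapper code: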

OpenSLHelp.h

//

// Created by 86158 on 2019/8/22.

//

#ifndef YorkAUDIO_OPENSLHELP_H

#define YorkAUDIO_OPENSLHELP_H

#include

OpenSLHelp.cpp

//

// Created by 86158 on 2019/8/22.

//

#include "OpenSLHelp.h"

OpenSLHelp::OpenSLHelp() {

}

OpenSLHelp::~OpenSLHelp() {

}

void OpenSLHelp::openSLHelperInit() {

engineObject = NULL;

engineInterface = NULL;

recorderObject = NULL;

recorderInterface = NULL;

outputMixObject = NULL;

playerObject = NULL;

playInterface = NULL;

outputMixEnvironmentalReverb = NULL;

reverbSettings = SL_I3DL2_ENVIRONMENT_PRESET_STONECORRIDOR;

// Initialize OpenSL ES

slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);

(*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);

(*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineInterface);

}

void OpenSLHelp::playDestroy() {

if (playerObject != NULL) {

(*playerObject)->Destroy(playerObject);

playerObject = NULL;

playInterface = NULL;

pcmMutePlay = NULL;

pcmVolumePlay = NULL;

}

if (playerObject == NULL&&outputMixObject!=NULL){

(*outputMixObject)->Destroy(outputMixObject);

outputMixObject=NULL;

}

if (playerObject == NULL && recorderObject == NULL) {

if (queueInterface != NULL) {

queueInterface = NULL;

}

if (engineObject != NULL) {

(*engineObject)->Destroy(engineObject);

engineObject = NULL;

engineInterface = NULL;

}

}

}

void OpenSLHelp::recordDestroy() {

if (recorderObject != NULL) {

(*recorderObject)->Destroy(recorderObject);

recorderObject = NULL;

recorderInterface = NULL;

}

if (playerObject == NULL && recorderObject == NULL) {

if (queueInterface != NULL) {

queueInterface = NULL;

}

if (engineObject != NULL) {

(*engineObject)->Destroy(engineObject);

engineObject = NULL;

engineInterface = NULL;

}

}

}

// Channel mask

SLuint32 OpenSLHelp::getChannelMask(int numChannels) {

return numChannels > 1 ? SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT

: SL_SPEAKER_FRONT_CENTER;

}

// Bit depth

SLuint32 OpenSLHelp::getCurrentFormatForOpensles(int file_format) {

SLuint32 bit;

switch (file_format) {

case 8:

bit = SL_PCMSAMPLEFORMAT_FIXED_8;

break;

case 16:

bit = SL_PCMSAMPLEFORMAT_FIXED_16;

break;

case 24:

bit = SL_PCMSAMPLEFORMAT_FIXED_24;

break;

case 32:

bit = SL_PCMSAMPLEFORMAT_FIXED_32;

break;

default:

bit = SL_PCMSAMPLEFORMAT_FIXED_16;

}

return bit;

}

// Sample rate

SLuint32 OpenSLHelp::getCurrentSampleRateForOpensles(int sample_rate) {

SLuint32 rate;

switch (sample_rate) {

case 8000:

rate = SL_SAMPLINGRATE_8;

break;

case 11025:

rate = SL_SAMPLINGRATE_11_025;

break;

case 12000:

rate = SL_SAMPLINGRATE_12;

break;

case 16000:

rate = SL_SAMPLINGRATE_16;

break;

case 22050:

rate = SL_SAMPLINGRATE_22_05;

break;

case 24000:

rate = SL_SAMPLINGRATE_24;

break;

case 32000:

rate = SL_SAMPLINGRATE_32;

break;

case 44100:

rate = SL_SAMPLINGRATE_44_1;

break;

case 48000:

rate = SL_SAMPLINGRATE_48;

break;

case 64000:

rate = SL_SAMPLINGRATE_64;

break;

case 88200:

rate = SL_SAMPLINGRATE_88_2;

break;

case 96000:

rate = SL_SAMPLINGRATE_96;

break;

case 192000:

rate = SL_SAMPLINGRATE_192;

break;

default:

rate = SL_SAMPLINGRATE_44_1;

}

return rate;

}

How is it used? Here is the recording code:

YorkRecorder.cpp

//

// Created by 86158 on 2019/8/21.

//

#include "YorkRecorder.h"

YorkRecorder::YorkRecorder(YorkRecordstatus *recordstatus, OpenSLHelp *openSLHelp,

RecordCallJava *callJava, int samplingRate,

SLuint32 numChannels, int format) {

this->recordstatus = recordstatus;

this->openSLHelp = openSLHelp;

this->recordCallJava = callJava;

this->samplingRate = samplingRate;

this->numChannels = numChannels;

this->format = format;

recorderSize = samplingRate * numChannels * 120 / 1000; // sized to stay compatible with video recording

yorkJiangzao = new YorkJiangzao(); // ambient noise reduction

yorkRenSheng = new YorkRenSheng(); // voice enhancement

yorkLowCut = new YorkLowCut(); // low-cut filter

yorkGain = new YorkGain(); // gain adjustment

}

YorkRecorder::~YorkRecorder() {

}

void pcmBufferRecordCallBack(SLAndroidSimpleBufferQueueItf bf, void *context) {

YorkRecorder *yorkRecorder = (YorkRecorder *) context;

if (yorkRecorder != NULL) {

if (!yorkRecorder->recordstatus->recording) {

(*yorkRecorder->openSLHelp->recorderInterface)->SetRecordState(

yorkRecorder->openSLHelp->recorderInterface, SL_RECORDSTATE_STOPPED);

fclose(yorkRecorder->pcmFile);

} else {

// Noise reduction

if (yorkRecorder->jz_rate < 0) {

yorkRecorder->yorkJiangzao->doJiangzao(yorkRecorder->jz_rate,

yorkRecorder->buffer,

yorkRecorder->buffer,

yorkRecorder->recorderSize,

yorkRecorder->format);

}

// Voice enhancement

if (yorkRecorder->rs_rate != 0) {

yorkRecorder->yorkRenSheng->doRenSheng(yorkRecorder->rs_rate,

yorkRecorder->buffer,

yorkRecorder->buffer,

yorkRecorder->recorderSize,

yorkRecorder->format);

}

// Low cut

if (yorkRecorder->lc_rate != 0) {

yorkRecorder->yorkLowCut->doLowCut(yorkRecorder->lc_rate,

yorkRecorder->buffer,

yorkRecorder->buffer,

yorkRecorder->recorderSize,

yorkRecorder->format,

yorkRecorder->samplingRate);

}

// Gain adjustment

if (yorkRecorder->gain_rate != 1) {

yorkRecorder->yorkGain->setGain(yorkRecorder->gain_rate, yorkRecorder->buffer,

yorkRecorder->buffer,

yorkRecorder->recorderSize,

yorkRecorder->format);

}

// Deliver the data

yorkRecorder->recordCallJava->onCallRecordData(CHILD_THREAD, yorkRecorder->buffer,

yorkRecorder->recorderSize);

// Feed monitoring playback (play while recording) and the decibel meter

yorkRecorder->recordCallJava->onCallRecordPlaydata(CHILD_THREAD, yorkRecorder->buffer,

yorkRecorder->recorderSize,

yorkRecorder->format);

// Show elapsed time

if (yorkRecorder->recordstatus->recordingsave) {

yorkRecorder->recordCallJava->onCallRecordTime(CHILD_THREAD,

yorkRecorder->timer.getTimeShow());

}

// After consuming the data, call Enqueue to trigger the next callback

(*yorkRecorder->openSLHelp->queueInterface)->Enqueue(

yorkRecorder->openSLHelp->queueInterface, yorkRecorder->buffer,

yorkRecorder->recorderSize);

}

}

}

void YorkRecorder::recordOpenSLES() {

if (LOG_DEBUG) {

LOGD("InitRecord : -- sample_rate= %d ,channels= %d ,format= %d", samplingRate, numChannels, format);

}

buffer = (uint8_t *) av_malloc(recorderSize);

// Create the engine object

openSLHelp->openSLHelperInit();

// Configure the IO device (microphone)

openSLHelp->device = {SL_DATALOCATOR_IODEVICE, SL_IODEVICE_AUDIOINPUT,

SL_DEFAULTDEVICEID_AUDIOINPUT, NULL};

openSLHelp->source = {&openSLHelp->device, NULL};

// Configure the buffer queue

openSLHelp->android_queue = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};

// Recording format: PCM with the configured channel count, sample rate, and bit depth (for example 2 channels, 44100 Hz, 16 bit)

openSLHelp->formatPCM = {

SL_DATAFORMAT_PCM, numChannels,

openSLHelp->getCurrentSampleRateForOpensles(samplingRate),

openSLHelp->getCurrentFormatForOpensles(format),

openSLHelp->getCurrentFormatForOpensles(format),

openSLHelp->getChannelMask(numChannels),

SL_BYTEORDER_LITTLEENDIAN

};

openSLHelp->sink = {&openSLHelp->android_queue, &openSLHelp->formatPCM};

SLInterfaceID id[] = {SL_IID_ANDROIDSIMPLEBUFFERQUEUE};

SLboolean required[] = {SL_BOOLEAN_TRUE};

// Create the recorder

(*openSLHelp->engineInterface)->CreateAudioRecorder(

openSLHelp->engineInterface,

&openSLHelp->recorderObject,

&openSLHelp->source,

&openSLHelp->sink,

1,

id,

required);

(*openSLHelp->recorderObject)->Realize(openSLHelp->recorderObject, SL_BOOLEAN_FALSE);

(*openSLHelp->recorderObject)->GetInterface(openSLHelp->recorderObject, SL_IID_RECORD,

&openSLHelp->recorderInterface);

(*openSLHelp->recorderObject)->GetInterface(openSLHelp->recorderObject,

SL_IID_ANDROIDSIMPLEBUFFERQUEUE,

&openSLHelp->queueInterface);

(*openSLHelp->queueInterface)->Enqueue(openSLHelp->queueInterface, buffer, recorderSize);

(*openSLHelp->queueInterface)->RegisterCallback(openSLHelp->queueInterface,

pcmBufferRecordCallBack, this);

recordstatus->recording = true;

// Start recording

(*openSLHelp->recorderInterface)->SetRecordState(openSLHelp->recorderInterface,

SL_RECORDSTATE_RECORDING);

if (recordCallJava != NULL) {

recordCallJava->onCallRecordStart(CHILD_THREAD);

}

if (LOG_DEBUG) {

LOGD("Recorder started ...");

}

}

void *timeSpace(void *data) {

YorkRecorder *recorder = (YorkRecorder *) data;

while (recorder->recordstatus->recording) {

// Sleep on every pass so the loop does not spin while timing is paused

usleep(1000);

if (recorder->recordstatus->recordingsave) {

recorder->recordCallJava->onCallTimeSpace(CHILD_THREAD, recorder->timer.getTimeShow());

}

}

pthread_exit(&recorder->thread_timeSpace);

}

/**

* Start recording

*/

void YorkRecorder::startRecord() {

yorkJiangzao->initJiangzao(recorderSize, samplingRate, numChannels, format);

yorkRenSheng->initRenSheng();

recordOpenSLES();

pthread_create(&thread_timeSpace, NULL, timeSpace, this);

}

/**

* Pause recording

*/

void YorkRecorder::pauseRecord() {

if (openSLHelp != NULL && openSLHelp->recorderInterface != NULL) {

(*openSLHelp->recorderInterface)->SetRecordState(openSLHelp->recorderInterface,

SL_RECORDSTATE_PAUSED);

if (recordCallJava != NULL) {

recordCallJava->onCallRecordPause(CHILD_THREAD);

}

if (LOG_DEBUG) {

LOGD("Recorder paused ...");

}

}

}

/**

* Resume recording

*/

void YorkRecorder::resumeRecord() {

if (openSLHelp != NULL && openSLHelp->recorderInterface != NULL) {

(*openSLHelp->recorderInterface)->SetRecordState(openSLHelp->recorderInterface,

SL_RECORDSTATE_RECORDING);

if (recordCallJava != NULL) {

recordCallJava->onCallRecordStart(CHILD_THREAD);

}

if (LOG_DEBUG) {

LOGD("Recorder resumed ...");

}

}

}

/**

* Stop recording

*/

void YorkRecorder::stopRecord() {

recordstatus->recording = false;

if (yorkJiangzao != NULL) {

yorkJiangzao->destryJiangzao();

}

if (openSLHelp != NULL) {

SLuint32 state;

(*openSLHelp->recorderInterface)->GetRecordState(openSLHelp->recorderInterface, &state);

if (state == SL_RECORDSTATE_PAUSED || state == SL_RECORDSTATE_RECORDING) {

(*openSLHelp->recorderInterface)->SetRecordState(openSLHelp->recorderInterface,

SL_RECORDSTATE_STOPPED);

}

openSLHelp->recordDestroy();

if (LOG_DEBUG) {

LOGD("Recorder already stopped !");

}

}

if (recordCallJava != NULL) {

recordCallJava->onCallRecordStop(CHILD_THREAD);

recordCallJava=NULL;

}

}

void YorkRecorder::setJZ(int jz_rate) {

this->jz_rate = jz_rate;

}

void YorkRecorder::setGain(double gain_rate) {

this->gain_rate = gain_rate;

}

void YorkRecorder::setLC(int lc_rate) {

this->lc_rate = lc_rate;

}

void YorkRecorder::setRenShenSwitch(double rs_rate) {

this->rs_rate = rs_rate;

}

int YorkRecorder::getRecordStates() {

SLuint32 state = SL_RECORDSTATE_STOPPED; // defined value in case the interface is unavailable

int ret = -1;

if (openSLHelp != NULL && openSLHelp->recorderInterface != NULL) {

(*openSLHelp->recorderInterface)->GetRecordState(openSLHelp->recorderInterface,

&state);

}

if (state == SL_RECORDSTATE_RECORDING) {

ret = 0;

}

return ret;

}

void YorkRecorder::startRecordTime() {

recordstatus->recordingsave = true;

timer.Start();

}

void YorkRecorder::pauseRecordTime() {

recordstatus->recordingsave = false;

timer.Pause();

}

void YorkRecorder::resumeRecordTime() {

recordstatus->recordingsave = true;

timer.Start();

}

void YorkRecorder::stopRecordTime() {

if (recordCallJava != NULL) {

recordCallJava->onCallRecordResult(CHILD_THREAD, timer.getTimeShow());

}

recordstatus->recordingsave = false;

timer.Stop();

}

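This completes the OpenSL ES recording wrapper. OpenSL ES can also play audio, which the playback section covers in detail.

That concludes the overview of audio recording on Android.

3. Audio Playback

Android offers six ways to play audio, and developers can pick one according to the use case:

- Playing PCM audio with AudioTrack

- Playing audio with MediaPlayer

- Playing audio with SoundPool

- Playing .jet audio with JetPlayer

- Playing ringtones with Ringtone

- Playing PCM audio with OpenSL ES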

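3.1 Playing Audio with AudioTrack

AudioTrack is a fairly low-level playback API; MediaPlayerService uses AudioTrack internally.

AudioTrack plays a single stream of PCM data. Any audio decoded to PCM can be played with it, so compared with MediaPlayer it is leaner, more efficient, and more flexible.

① The two AudioTrack playback modes

- Static mode (MODE_STATIC): the data is handed to the receiver in one go. This is simple and efficient, since a single operation transfers everything, but it clearly cannot handle large amounts of audio, so it is typically used for ringtones, system alerts, and other sounds with a small memory footprint.

- Stream mode (MODE_STREAM): the data is fed to the receiver continuously. In principle it suits any playback scenario; it is the usual choice when the audio file is large or the data is produced in real time.

② AudioTrack playback example

Before playing, initialize the AudioTrack with the basic parameters of the audio data: sample rate, bit depth, and channel count. For example: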

/**

* Start playback

*/

private void startPlay(){

new Thread(new Runnable() {

@Override

public void run() {

// Get the minimum buffer size

int bufSize = AudioTrack.getMinBufferSize(44100, AudioFormat.CHANNEL_OUT_STEREO, AudioFormat.ENCODING_PCM_16BIT);

// Create the AudioTrack (the buffer must be at least the minimum size; twice the minimum is used here)

mAudioTrack = new AudioTrack(AudioManager.STREAM_MUSIC, 44100, AudioFormat.CHANNEL_OUT_STEREO,

AudioFormat.ENCODING_PCM_16BIT, bufSize * 2, AudioTrack.MODE_STREAM);

// Set the playback sample rate in Hz (must match the source for normal speed)

mAudioTrack.setPlaybackRate(44100);

mAudioTrack.play();

// Open the PCM resource as an input stream

InputStream is = getResources().openRawResource(R.raw.pcm_441_stereo_16);

byte[] buffer = new byte[bufSize*2] ;

int len ;

try {

while((len=is.read(buffer,0,buffer.length)) != -1){

// Write only the bytes actually read to the AudioTrack

mAudioTrack.write(buffer, 0, len);

}

is.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

}

/**

* Stop playback

*/

private void stopPlay(){

if (this.mAudioTrack != null) {

this.mAudioTrack.stop();

this.mAudioTrack.release();

this.mAudioTrack = null;

}

}

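That covers audio playback with AudioTrack.

3.2 Playing Audio with MediaPlayer

MediaPlayer supports audio formats such as AAC, AMR, FLAC, MP3, MIDI, OGG, and PCM, and it can also play online streaming resources.

When MediaPlayer plays, the framework layer creates the matching audio decoder and an AudioTrack, then passes the decoded PCM stream to the AudioTrack. The AudioTrack hands it to AudioFlinger for mixing, and only then does it reach the hardware. In that sense MediaPlayer contains an AudioTrack. The player wrapper covered later in this series follows the same logic.

Below is a wrapper around MediaPlayer playback. It covers setting the data source, play, pause, resume, stop, querying playback state, and seeking. The code: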

public class SampleMediaPlay implements OnPreparedListener, OnBufferingUpdateListener{

public MediaPlayer mediaPlayer; // media player

private Timer mTimer = new Timer(); // timer

private StateChangeListener mStateChangeListener;

private int presses=0;

private int totaltime=0;

private AudioManager mAudioManager;

public SampleMediaPlay(StateChangeListener mStateChangeListener,AudioManager audioManager) {

super();

this.mAudioManager=audioManager;

this.mStateChangeListener=mStateChangeListener;

try {

mediaPlayer = new MediaPlayer();

mediaPlayer.setAudioStreamType(AudioManager.STREAM_MUSIC); // set the audio stream type

mediaPlayer.setOnPreparedListener(this);

mediaPlayer.setOnBufferingUpdateListener(this);

} catch (Exception e) {

e.printStackTrace();

}

mTimer.schedule(timerTask, 0, 50);

}

private AudioManager.OnAudioFocusChangeListener mFocusChangeListener=new AudioManager.OnAudioFocusChangeListener() {

@Override

public void onAudioFocusChange(int focusChange) {

if (focusChange == AudioManager.AUDIOFOCUS_LOSS_TRANSIENT) {

Log.e("york","Audio focus lost transiently");

pause();

} else if (focusChange == AudioManager.AUDIOFOCUS_GAIN) {

Log.e("york","Audio focus regained");

play();

} else if (focusChange == AudioManager.AUDIOFOCUS_LOSS) {

Log.e("york","Audio focus lost for a long time");

pause();

} else if (focusChange == AudioManager.AUDIOFOCUS_REQUEST_GRANTED) {

Log.e("york","Audio focus granted");

}

}

};

TimerTask timerTask = new TimerTask() {

@Override

public void run() {

if (mediaPlayer == null){

return;

}else if (mediaPlayer.isPlaying()) {

handler.sendEmptyMessage(0); // send a message

}

}

};

Handler handler = new Handler() {

public void handleMessage(android.os.Message msg) {

if (mediaPlayer == null){

return;

}else if (mediaPlayer.isPlaying() ) {

mediaPlayer.getCurrentPosition();

mediaPlayer.getDuration();

}

};

};

// Set the data source

public void playUrl(String url) {

if (mediaPlayer != null) {

mAudioManager.requestAudioFocus(mFocusChangeListener,AudioManager.STREAM_MUSIC,AudioManager.AUDIOFOCUS_GAIN);

try {

mediaPlayer.setDataSource(url);

mediaPlayer.prepareAsync();

mStateChangeListener.onUpData();

} catch (Exception e) {

e.printStackTrace();

}

}

}

// Play

public void play() {

if (mediaPlayer != null) {

mAudioManager.requestAudioFocus(mFocusChangeListener,AudioManager.STREAM_MUSIC,AudioManager.AUDIOFOCUS_GAIN);

if(!mediaPlayer.isPlaying()){

mediaPlayer.start();

mStateChangeListener.onPlaying();

}

}

}

// Pause

public void pause() {

if(mediaPlayer != null ){

if(mediaPlayer.isPlaying()){

mediaPlayer.pause();

mStateChangeListener.onPauseing();

}

}

}

// Stop

public void stop() {

if (mediaPlayer != null) {

timerTask.cancel();

mTimer.cancel();

mTimer=null;

mAudioManager.abandonAudioFocus(mFocusChangeListener);

pause();

if(mediaPlayer != null ){

if(mediaPlayer.isPlaying()){

mediaPlayer.pause();

}

}

mediaPlayer.stop();

mediaPlayer.release();

mediaPlayer=null;

}

}

@Override

public void onPrepared(MediaPlayer mp) {

mAudioManager.requestAudioFocus(mFocusChangeListener,AudioManager.STREAM_MUSIC,AudioManager.AUDIOFOCUS_GAIN);

mp.start();

mStateChangeListener.onPlaying();

}

public int getCurrentPosition(){

if(mediaPlayer==null){

return 0;

}else if(!mediaPlayer.isPlaying()){

return presses;

}else{

presses= mediaPlayer.getCurrentPosition();

return presses;

}

}

public int getDuration(){

if(mediaPlayer==null){

return 0;

}else if(!mediaPlayer.isPlaying()){

return totaltime;

}else{

totaltime= mediaPlayer.getDuration();

return totaltime;

}

}

public void seekTo(int msec){

if (mediaPlayer != null) mediaPlayer.seekTo(msec);

}

@Override

public void onBufferingUpdate(MediaPlayer mp, int percent) {

}

}

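3.3 Playing Audio with SoundPool

SoundPool can play several short clips at the same time with a small resource footprint. It suits short, dense sounds such as key clicks and notification tones, and it is commonly used for sound effects in games. One caveat: the SoundPool constructor shown below has been deprecated since API level 21 in favor of SoundPool.Builder.

① SoundPool constructor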

/**

* maxStreams: the maximum number of simultaneous streams;

* streamType: the audio stream type;

* srcQuality: the sample-rate converter quality.

*/

new SoundPool(int maxStreams, int streamType, int srcQuality)

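The constructor above creates the SoundPool object.

② Loading audio into SoundPool

Once you have a SoundPool object, call one of its overloaded load methods to load sounds.

// Load the sound from the resource identified by resId.

int load(Context context, int resId, int priority)

// Load the sound from the file behind fd, starting at offset and spanning length bytes.

int load(FileDescriptor fd, long offset, long length, int priority)

// Load the sound from the file behind afd.

int load(AssetFileDescriptor afd, int priority)

// Load the sound from the file at path.

int load(String path, int priority)

Each of the four methods returns an ID for the loaded sound; the program plays the sound by that ID.

③ Playing a sound by ID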

/**

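* soundID: which sound to play;

* leftVolume, rightVolume: the left and right volume;

* priority: the stream priority; larger values mean higher priority;

* loop: loop mode; 0 plays once, -1 loops forever, any other value is the number of repeats;

* rate: the playback rate, from 0.5 to 2.0, where 1.0 is normal speed.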

*/

int play(int soundID, float leftVolume, float rightVolume, int priority, int loop, float rate)

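④ Releasing

Finally, release() frees all memory and native resources held by the SoundPool object; to free a single sound, call unload(int soundID).

That covers audio playback with SoundPool.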

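3.4 Playing Audio with JetPlayer

Android also supports JET playback. JET is an interactive music engine developed by SONiVOX, a member of the Open Handset Alliance (OHA). It has two parts: the JET player and the JET engine. JET is commonly used to control game sound effects and works with MIDI (Musical Instrument Digital Interface) data.

MIDI data consists of a set of musical instructions rather than actual audio; a sequence of these instructions is called a MIDI message. A JET file contains multiple JET segments, each segment contains multiple tracks, and each track is a sequence of MIDI messages.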
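To show what "instructions rather than audio" means in practice, here is a small sketch that encodes and decodes a standard MIDI Note On message, which is just three bytes: a status byte (command plus channel), a note number, and a velocity. The class and method names (`MidiNote`, `noteOn`, `channelOf`, `isNoteOn`) are assumptions for this example and are unrelated to the JetPlayer API.

```java
/** Encodes and decodes a 3-byte MIDI Note On message (illustrative sketch). */
final class MidiNote {

    private static final int NOTE_ON = 0x90; // command nibble for Note On

    /** Builds a Note On message for channel 0..15, note 0..127, velocity 0..127. */
    static byte[] noteOn(int channel, int note, int velocity) {
        return new byte[] {
            (byte) (NOTE_ON | (channel & 0x0F)), // status: command in the high nibble, channel in the low
            (byte) (note & 0x7F),                // data byte 1: which key
            (byte) (velocity & 0x7F)             // data byte 2: how hard it was struck
        };
    }

    /** Returns true when the status byte carries the Note On command. */
    static boolean isNoteOn(byte[] message) {
        return ((message[0] & 0xFF) & 0xF0) == NOTE_ON;
    }

    /** Extracts the channel number from the status byte. */
    static int channelOf(byte[] message) {
        return message[0] & 0x0F;
    }

    public static void main(String[] args) {
        byte[] middleC = noteOn(0, 60, 100); // note 60 is middle C
        System.out.println(isNoteOn(middleC) + " channel=" + channelOf(middleC));
    }
}
```

A whole melody is a timed list of such messages, which is why MIDI-based content is so much smaller than sampled audio.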

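Internally, JetPlayer keeps a queue of JET segments. Its main job is to add segments to the queue or clear it; beyond that, it controls whether the tracks of a segment are enabled. Note that JetPlayer is implemented as a singleton on Android: only one JetPlayer object exists in the whole system.

Commonly used JetPlayer methods:

getJetPlayer() // get the JetPlayer instance

clearQueue() // clear the queue

setEventListener() // set the JetPlayer.OnJetEventListener

loadJetFile() // load a JET file

queueJetSegment() // queue a JET segment

play() // play the queued segments

Here is an example of playing a .jet file: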

private void startPlay(){

// Get the JetPlayer instance

JetPlayer mJetPlayer = JetPlayer.getJetPlayer() ;

// Clear the playback queue

mJetPlayer.clearQueue() ;

// Attach the event listener

mJetPlayer.setEventListener(new JetPlayer.OnJetEventListener() {

// Counts pause updates so playback can be torn down after the second one

int playNum = 1 ;

@Override

public void onJetEvent(JetPlayer player, short segment, byte track, byte channel, byte controller, byte value) {

Log.i("york","----->onJetEvent") ;

}

@Override

public void onJetUserIdUpdate(JetPlayer player, int userId, int repeatCount) {

Log.i("york","----->onJetUserIdUpdate") ;

}

@Override

public void onJetNumQueuedSegmentUpdate(JetPlayer player, int nbSegments) {

Log.i("york","----->onJetNumQueuedSegmentUpdate") ;

}

@Override

public void onJetPauseUpdate(JetPlayer player, int paused) {

Log.i("york","----->onJetPauseUpdate") ;

if(playNum == 2){

playNum = -1 ;

// Close the jet file first, then release the player

player.closeJetFile() ;

player.release();

}else{

playNum++ ;

}

}

});

// Load the JET resource

mJetPlayer.loadJetFile(getResources().openRawResourceFd(R.raw.jetaudio)) ;

byte sSegmentID = 0 ;

// Queue the segments to play

mJetPlayer.queueJetSegment(0, 0, 0, 0, 0, sSegmentID);

mJetPlayer.queueJetSegment(1, 0, 1, 0, 0, sSegmentID);

// Start playback

mJetPlayer.play() ;

}

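3.5 Playing Audio with Ringtone

Ringtone offers a quick way to play ringtones, notifications, and similar sounds.

① Creating a Ringtone

The RingtoneManager class provides the methods that create a Ringtone:

// Get a Ringtone from its uri

static Ringtone getRingtone(Context context, Uri ringtoneUri)

// Get a Ringtone by its position in the cursor

Ringtone getRingtone(int position)

② The key class: RingtoneManager

// Two constructors

RingtoneManager(Activity activity)

RingtoneManager(Context context)

// Get the Uri of the default sound for a given type (ringtone, notification, alarm, and so on)

static Uri getDefaultUri(int type)

// Get a cursor over all system ringtones

Cursor getCursor()

// Get the Ringtone uri at a given cursor position

Uri getRingtoneUri(int position)

// Check whether the given Uri is a default ringtone

static boolean isDefault(Uri ringtoneUri)

// Get the sound type a default uri belongs to

static int getDefaultType(Uri defaultRingtoneUri)

// Set the given Uri as the default sound for the given type

static void setActualDefaultRingtoneUri(Context context, int type, Uri ringtoneUri)

③ Ringtone playback example

/**

* Play the default incoming-call ringtone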

**/

private void playRingtoneDefault(){

Uri uri = RingtoneManager.getDefaultUri(RingtoneManager.TYPE_RINGTONE) ;

Ringtone mRingtone = RingtoneManager.getRingtone(this,uri);

mRingtone.play();

}

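That covers audio playback with Ringtone.

3.6 Playing Audio with OpenSL ES

OpenSL ES was introduced earlier in this article.

Developing with OpenSL ES involves six main steps:

- Create the interface object

- Set up the output mix

- Create the recorder (or player)

- Set up the buffer queue and the callback function

- Set the play state

- Trigger the callback function

The playback code is listed below:

YorkAudio.cpp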

//

// Created by 86158 on 2019/8/19.

//

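#include

That is my summary of the Android audio module. If you spot shortcomings or have suggestions, leave a comment.

Previous: 0. Android Audio and Video Notes

Next: 2. Android Audio and Video Notes (2): Audio and Video Encoding and Decoding

Personal website: www.yorkyue.com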