Merging Convolutional and BN Layers in YOLO-v3

- Batch Normalization (the BN layer)

- BN formula

- Merging the convolutional and BN layers

- Partial code implementation

- Experimental results

- Full code

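Batch Normalization (the BN layer)

Stochastic gradient descent (SGD) is a simple and efficient way to train deep networks, but it has one drawback: hyperparameters such as the learning rate, parameter initialization, weight decay coefficient and dropout ratio have to be chosen by hand. These choices are critical to the training result, so a lot of time ends up being spent on tuning them. With a BN layer, the parameters no longer need such careful, gradual tuning (see the paper "Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift").

When training a neural network, a BN layer speeds up convergence and helps control overfitting. It is usually placed after the convolutional layer and before the activation layer. By normalizing the data, BN also effectively mitigates vanishing and exploding gradients. However, although BN helps during training, at inference time it adds extra per-layer computation, which slows the model down and takes up more memory or GPU memory. It is therefore worthwhile to merge the BN parameters into the convolutional layer, reducing computation and speeding up inference.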

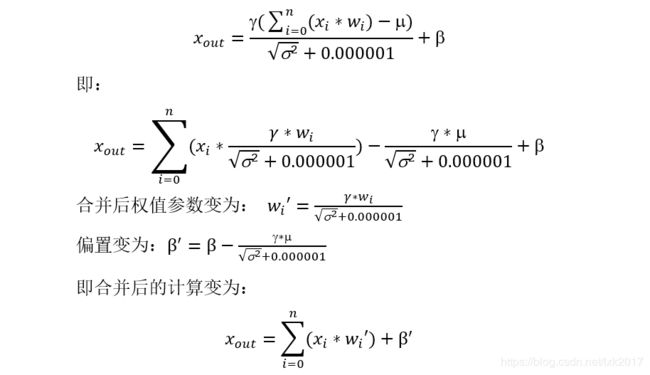

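BN formula

In yolo-v3, BN is computed as follows:

$$x_{out} = \gamma \cdot \frac{x_{conv} - \mu}{\sqrt{\sigma^2} + \varepsilon} + \beta$$

Here $x_{out}$ is the BN output and $x_{conv}$ is the output of the convolution that precedes BN. The remaining parameters are all stored in the .weights file: the scale $\gamma$ (`scales`), the bias $\beta$ (`biases`), the mean $\mu$ (`rolling_mean`) and the variance $\sigma^2$ (`rolling_variance`). $\varepsilon = 0.000001$ is the small constant used in the code to avoid division by zero.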

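Merging the convolutional and BN layers

Convolution + BN, with $x_{conv} = w * x$:

$$x_{out} = \gamma \cdot \frac{w * x - \mu}{\sqrt{\sigma^2} + \varepsilon} + \beta = w_{merged} * x + b_{merged}$$

$$w_{merged} = \frac{\gamma \cdot w}{\sqrt{\sigma^2} + \varepsilon}, \qquad b_{merged} = \beta - \frac{\gamma \cdot \mu}{\sqrt{\sigma^2} + \varepsilon}$$

After merging, the only parameters left in the .weights file are the weights w and the biases b, and the merged parameters have to be written to a new .weights file. Note: before running the inference code again, remember to change every batch_normalize=1 in the .cfg file to batch_normalize=0.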

Partial code implementation

Saving the merged parameters (code added to parser.c):

```c
// Save the convolutional weights with the BN parameters folded in
void save_convolutional_weights_nobn(layer l, FILE *fp)
{
    if(l.binary){
        //save_convolutional_weights_binary(l, fp);
        //return;
    }
#ifdef GPU
    if(gpu_index >= 0){
        pull_convolutional_layer(l);
    }
#endif
    int num = l.nweights;
    int per_filter = num / l.n;   // weights per filter: size*size*c/groups
    if (l.batch_normalize) {
        // Note: this overwrites l.weights and l.biases in place,
        // so it must run only once per loaded network.
        for (int j = 0; j < l.n; j++) {
            float factor = l.scales[j] / (sqrtf(l.rolling_variance[j]) + 0.000001f);
            l.biases[j] -= factor * l.rolling_mean[j];
            for (int k = 0; k < per_filter; k++) {
                l.weights[j*per_filter + k] *= factor;
            }
        }
    }
    // The merged file stores only biases and weights for each layer;
    // scales, rolling_mean and rolling_variance are no longer written.
    fwrite(l.biases, sizeof(float), l.n, fp);
    fwrite(l.weights, sizeof(float), num, fp);
}
```

Loading the modified .weights file at inference time (code added to parser.c):

```c
void load_convolutional_weights_nobn(layer l, FILE *fp)
{
    if(l.binary){
        //load_convolutional_weights_binary(l, fp);
        //return;
    }
    if(l.numload) l.n = l.numload;
    int num = l.c/l.groups*l.n*l.size*l.size;
    // Only biases and weights are stored in the merged file
    fread(l.biases, sizeof(float), l.n, fp);
    fread(l.weights, sizeof(float), num, fp);
    // Scales the weights of a layer with 3 input channels by 1/256
    if(l.c == 3) scal_cpu(num, 1./256, l.weights, 1);
    if (l.flipped) {
        transpose_matrix(l.weights, l.c*l.size*l.size, l.n);
    }
    if (l.binary) binarize_weights(l.weights, l.n, l.c*l.size*l.size, l.weights);
#ifdef GPU
    // Copy the loaded parameters to the GPU (mirrors the pull in the save function)
    if(gpu_index >= 0){
        push_convolutional_layer(l);
    }
#endif
}
```
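The save and load functions have to agree on the per-layer layout of the merged file: `l.n` biases followed by the layer's weights, all as raw floats. The round trip below sketches that layout in a self-contained form. `write_layer`, `read_layer` and `round_trip_ok` are hypothetical names, not darknet functions, and the return-value checks are an addition for illustration.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical helper: writes one layer as n biases followed by num weights.
   Returns 1 on success, 0 on a short write. */
static int write_layer(FILE *fp, const float *biases, int n,
                       const float *weights, int num)
{
    assert(fp != NULL);
    return fwrite(biases, sizeof(float), (size_t)n, fp) == (size_t)n &&
           fwrite(weights, sizeof(float), (size_t)num, fp) == (size_t)num;
}

/* Hypothetical helper: reads one layer back in the same order.
   Returns 1 on success, 0 if the file ends early. */
static int read_layer(FILE *fp, float *biases, int n, float *weights, int num)
{
    assert(fp != NULL);
    return fread(biases, sizeof(float), (size_t)n, fp) == (size_t)n &&
           fread(weights, sizeof(float), (size_t)num, fp) == (size_t)num;
}

/* Writes one small layer to a temporary file, reads it back and
   returns 1 if every value survived unchanged. */
static int round_trip_ok(void)
{
    const float biases[2] = {0.5f, -1.5f};
    const float weights[4] = {1.0f, 2.0f, 3.0f, 4.0f};
    float b[2] = {0}, w[4] = {0};
    FILE *fp = tmpfile();
    if (!fp) return 0;
    int ok = write_layer(fp, biases, 2, weights, 4);
    rewind(fp);
    ok = ok && read_layer(fp, b, 2, w, 4);
    fclose(fp);
    for (int i = 0; i < 2; ++i) ok = ok && b[i] == biases[i];
    for (int i = 0; i < 4; ++i) ok = ok && w[i] == weights[i];
    return ok;
}
```

Checking the counts returned by `fread` is a cheap way to notice a truncated file, or one written with a different layout than the loader expects. A loader that still expects the three BN arrays will consume the wrong bytes from a merged file.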

Adding the command-line option (in detector.c):

```c
// detector.c
// Add the combineBN branch to run_detector(int argc, char **argv)
void run_detector(int argc, char **argv)
{
    ......
    if(0==strcmp(argv[2], "test")) test_detector(datacfg, cfg, weights, filename, thresh, hier_thresh, outfile, fullscreen);
    else if(0==strcmp(argv[2], "train")) train_detector(datacfg, cfg, weights, gpus, ngpus, clear);
    else if(0==strcmp(argv[2], "valid")) validate_detector(datacfg, cfg, weights, outfile);
    else if(0==strcmp(argv[2], "valid2")) validate_detector_flip(datacfg, cfg, weights, outfile);
    else if(0==strcmp(argv[2], "recall")) validate_detector_recall(cfg, weights);
    else if(0==strcmp(argv[2], "demo")) {
        list *options = read_data_cfg(datacfg);
        int classes = option_find_int(options, "classes", 20);
        char *name_list = option_find_str(options, "names", "data/names.list");
        char **names = get_labels(name_list);
        demo(cfg, weights, thresh, cam_index, filename, names, classes, frame_skip, prefix, avg, hier_thresh, width, height, fps, fullscreen);
    }
    // Added: merge BN into the convolutional layers;
    // weightname is the path of the output .weights file
    else if(0==strcmp(argv[2], "combineBN")) test_detector_comBN(datacfg, cfg, weights, filename, weightname,thresh, hier_thresh, outfile, fullscreen);
}

// New function test_detector_comBN
void test_detector_comBN(char *datacfg, char *cfgfile, char *weightfile, char *filename,char *weightname ,float thresh, float hier_thresh, char *outfile, int fullscreen)
{
    list *options = read_data_cfg(datacfg);
    char *name_list = option_find_str(options, "names", "data/names.list");
    char **names = get_labels(name_list);
    image **alphabet = load_alphabet();
    network *net = load_network(cfgfile, weightfile, 0);
    // Save the parameters with BN merged into the convolutional layers
    save_weights_nobn(net, weightname);
}
```

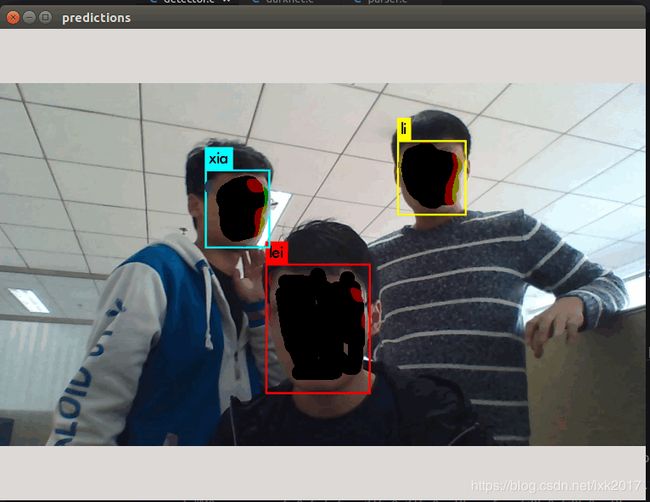

Experimental results

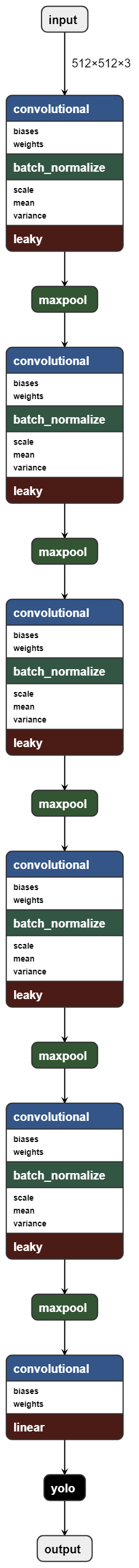

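I trained a model of my own, with the following structure:

Run the combineBN command:

```
./darknet detector combineBN cfg/2024.data cfg/2024-test.cfg 2024_140000.weights data/1.jpg save.weights
```

Here the .data, .cfg and .weights files are my own parameter files, and the merged weights are stored as save.weights.

Run inference with the merged weights:

```
./darknet detector test cfg/2024.data cfg/2024-test-nobn.cfg save.weights data/1.jpg
```

Results: the test was run with the weights and inputs quantized to 8 bits, on a laptop (i7-6700HQ CPU). Merging reduced the inference time by about 10.7%: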

| Before merging (ms) | After merging (ms) |
|---|---|
| 1001 | 894 |
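For reference, the quoted improvement follows directly from the two timings in the table. The speedup factor on the right is derived here from the same numbers and is not stated in the original measurements:

$$\frac{1001 - 894}{1001} = \frac{107}{1001} \approx 10.7\%, \qquad \frac{1001}{894} \approx 1.12$$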

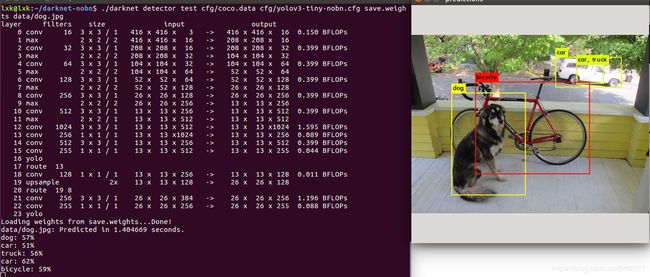

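Full code

The code is at https://github.com/XiaokangLei/darknet-nobn. The yolov3-tiny.weights and yolov3-tiny-nobn.cfg files used for testing are already included in the repository.

[Note!!] Remember to change every batch_normalize=1 in the .cfg file to batch_normalize=0.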
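As an illustration of that change, a convolutional section in the no-BN .cfg might look like the fragment below. The other values are typical of a first yolov3-tiny layer and are shown only as an example. Compare against your own .cfg and change nothing except the batch_normalize line.

```ini
[convolutional]
# was batch_normalize=1 in the original .cfg
batch_normalize=0
filters=16
size=3
stride=1
pad=1
activation=leaky
```

Every [convolutional] section that had batch_normalize=1 needs the same edit. Otherwise the loader still expects BN parameters that the merged file no longer contains.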

```
git clone https://github.com/XiaokangLei/darknet-nobn.git
cd darknet-nobn/
make all
./darknet detector combineBN cfg/coco.data cfg/yolov3-tiny.cfg yolov3-tiny.weights data/dog.jpg save.weights
./darknet detector test cfg/coco.data cfg/yolov3-tiny-nobn.cfg save.weights data/dog.jpg
```