Deconvolution and unpooling: an example from a paper

http://cvlab.postech.ac.kr/research/deconvnet/

https://github.com/HyeonwooNoh/caffe

http://cvlab.postech.ac.kr/research/deconvnet/model/DeconvNet/DeconvNet_inference_deploy.prototxt

Learning Deconvolution Network for Semantic Segmentation

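A deconvolution can also be implemented as resize + conv. Both come down to matrix multiplications, so the network can simply learn the weights.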
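As a minimal sketch of the resize + conv idea, the block below upsamples with nearest-neighbour interpolation and then applies an ordinary 3x3 convolution. The class name `ResizeConv2d`, the interpolation mode, and the kernel sizes are illustrative assumptions, not taken from the paper or the linked repositories. A `ConvTranspose2d` with kernel 2 and stride 2 is shown next to it only to compare output shapes.

```python
import torch
from torch import nn


class ResizeConv2d(nn.Module):
    """Hypothetical helper: upsample by interpolation, then apply a normal convolution."""

    def __init__(self, in_channels, out_channels, scale_factor=2):
        super().__init__()
        # Fixed (non-learned) resize step.
        self.resize = nn.Upsample(scale_factor=scale_factor, mode="nearest")
        # Learned step: a 3x3 convolution that keeps the spatial size.
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1)

    def forward(self, x):
        return self.conv(self.resize(x))


x = torch.randn(1, 16, 6, 6)

resize_conv = ResizeConv2d(16, 16)
deconv = nn.ConvTranspose2d(16, 16, kernel_size=2, stride=2)

resize_conv_shape = tuple(resize_conv(x).shape)
deconv_shape = tuple(deconv(x).shape)

# Both paths double the spatial resolution from 6x6 to 12x12.
print(resize_conv_shape, deconv_shape)
```

The two layers are not numerically equivalent; they are two learnable ways of reaching the same output resolution.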

PyTorch versions:

https://github.com/csgwon/pytorch-deconvnet

https://github.com/renkexinmay/SemanticSegmentation-FCN-DeconvNet

PyTorch functions used:

https://pytorch.org/docs/stable/nn.html?highlight=convtranspose#torch.nn.ConvTranspose2d

https://pytorch.org/docs/stable/nn.html?highlight=convtranspose#torch.nn.MaxUnpool2d

torch.nn.MaxPool2d(2, stride=2, return_indices=True),

torch.nn.MaxUnpool2d(2, stride=2),

Source code of the transposed convolution (CPU implementation):

pytorch-master/aten/src/ATen/native/NaiveConvolutionTranspose2d.cpp:

Tensor slow_conv_transpose2d_cpu(

const Tensor& input,

const Tensor& weight,

IntArrayRef kernel_size,

const Tensor& bias,

IntArrayRef stride,

IntArrayRef padding,

IntArrayRef output_padding,

IntArrayRef dilation) {

Tensor output = at::empty_like(input);

Tensor columns = at::empty_like(input);

Tensor ones = at::empty_like(input);

slow_conv_transpose2d_out_cpu_template(

output,

input,

weight,

kernel_size,

bias,

stride,

padding,

output_padding,

dilation,

columns,

ones);

return output;

}

This calls slow_conv_transpose2d_out_cpu_template:

void slow_conv_transpose2d_out_cpu_template(

Tensor& output,

const Tensor& input_,

const Tensor& weight_,

IntArrayRef kernel_size,

const Tensor& bias_,

IntArrayRef stride,

IntArrayRef padding,

IntArrayRef output_padding,

IntArrayRef dilation,

Tensor& columns_,

Tensor& ones_) {

TORCH_CHECK(

kernel_size.size() == 2,

"It is expected kernel_size equals to 2, but got size ",

kernel_size.size());

TORCH_CHECK(

dilation.size() == 2,

"It is expected dilation equals to 2, but got size ",

dilation.size());

TORCH_CHECK(

padding.size() == 2,

"It is expected padding equals to 2, but got size ",

padding.size());

TORCH_CHECK(

stride.size() == 2,

"It is expected stride equals to 2, but got size ",

stride.size());

TORCH_CHECK(

output_padding.size() == 2,

"It is expected stride equals to 2, but got size ",

output_padding.size());

Tensor columns = columns_;

Tensor ones = ones_;

int64_t kernel_height = kernel_size[0];

int64_t kernel_width = kernel_size[1];

int64_t dilation_height = dilation[0];

int64_t dilation_width = dilation[1];

int64_t pad_height = padding[0];

int64_t pad_width = padding[1];

int64_t stride_height = stride[0];

int64_t stride_width = stride[1];

int64_t output_padding_height = output_padding[0];

int64_t output_padding_width = output_padding[1];

slow_conv_transpose2d_shape_check(

input_,

Tensor(),

weight_,

bias_,

kernel_height,

kernel_width,

stride_height,

stride_width,

pad_height,

pad_width,

output_padding_height,

output_padding_width,

dilation_height,

dilation_width,

false);

int n_input_plane = weight_.size(0);

int n_output_plane = weight_.size(1);

Tensor input = input_.contiguous();

Tensor weight = weight_.contiguous();

TORCH_CHECK(columns.is_contiguous(), "columns needs to be contiguous");

Tensor bias = Tensor();

if (bias_.defined()) {

bias = bias_.contiguous();

TORCH_CHECK(ones.is_contiguous(), "ones needs to be contiguous");

}

bool is_batch = false;

if (input.dim() == 3) {

// Force batch

is_batch = true;

input.resize_({1, input.size(0), input.size(1), input.size(2)});

}

int64_t input_height = input.size(2);

int64_t input_width = input.size(3);

int64_t output_height = (input_height - 1) * stride_height - 2 * pad_height +

(dilation_height * (kernel_height - 1) + 1) + output_padding_height;

int64_t output_width = (input_width - 1) * stride_width - 2 * pad_width +

(dilation_width * (kernel_width - 1) + 1) + output_padding_width;

// Batch size + input planes

int64_t batch_size = input.size(0);

// Resize output

output.resize_({batch_size, n_output_plane, output_height, output_width});

// Resize temporary columns

columns.resize_({n_output_plane * kernel_width * kernel_height,

input_height * input_width});

columns.zero_();

// Define a buffer of ones, for bias accumulation

// Note: this buffer can be shared with other modules, it only ever gets

// increased, and always contains ones.

if (ones.dim() != 2 ||

ones.size(0) * ones.size(1) < output_height * output_width) {

// Resize plane and fill with ones...

ones.resize_({output_height, output_width});

ones.fill_(1);

}

AT_DISPATCH_FLOATING_TYPES_AND(at::ScalarType::Long,

input.scalar_type(), "slow_conv_transpose2d_out_cpu", [&] {

// For each elt in batch, do:

for (int elt = 0; elt < batch_size; elt++) {

// Helpers

Tensor input_n;

Tensor output_n;

// Matrix mulitply per output:

input_n = input.select(0, elt);

output_n = output.select(0, elt);

// M,N,K are dims of matrix A and B

// (see http://docs.nvidia.com/cuda/cublas/#cublas-lt-t-gt-gemm)

int64_t m = weight.size(1) * weight.size(2) * weight.size(3);

int64_t n = columns.size(1);

int64_t k = weight.size(0);

// Do GEMM (note: this is a bit confusing because gemm assumes

// column-major matrices)

THBlas_gemm(

'n',

't',

n,

m,

k,

1,

input_n.data_ptr(),

n,

weight.data_ptr(),

m,

0,

columns.data_ptr(),

n);

// Unpack columns back into input:

col2im(

columns.data_ptr(),

n_output_plane,

output_height,

output_width,

input_height,

input_width,

kernel_height,

kernel_width,

pad_height,

pad_width,

stride_height,

stride_width,

dilation_height,

dilation_width,

output_n.data_ptr());

// Do Bias after:

// M,N,K are dims of matrix A and B

// (see http://docs.nvidia.com/cuda/cublas/#cublas-lt-t-gt-gemm)

int64_t m_ = n_output_plane;

int64_t n_ = output_height * output_width;

int64_t k_ = 1;

// Do GEMM (note: this is a bit confusing because gemm assumes

// column-major matrices)

if (bias_.defined()) {

THBlas_gemm(

't',

'n',

n_,

m_,

k_,

1,

ones.data_ptr(),

k_,

bias.data_ptr(),

k_,

1,

output_n.data_ptr(),

n_);

}

}

// Resize output

if (is_batch) {

output.resize_({n_output_plane, output_height, output_width});

input.resize_({n_input_plane, input_height, input_width});

}

});

}

This in turn calls slow_conv_transpose2d_shape_check:

static inline void slow_conv_transpose2d_shape_check(

const Tensor& input,

const Tensor& grad_output,

const Tensor& weight,

const Tensor& bias,

int kernel_height,

int kernel_width,

int stride_height,

int stride_width,

int pad_height,

int pad_width,

int output_padding_height,

int output_padding_width,

int dilation_height,

int dilation_width,

bool weight_nullable) {

TORCH_CHECK(

kernel_width > 0 && kernel_height > 0,

"kernel size should be greater than zero, but got kernel_height: ",

kernel_height,

" kernel_width: ",

kernel_width);

TORCH_CHECK(

stride_width > 0 && stride_height > 0,

"stride should be greater than zero, but got stride_height: ",

stride_height,

" stride_width: ",

stride_width);

TORCH_CHECK(

dilation_width > 0 && dilation_height > 0,

"dilation should be greater than zero, but got dilation_height: ",

dilation_height,

", dilation_width: ",

dilation_width);

TORCH_CHECK(

(output_padding_width < stride_width ||

output_padding_width < dilation_width) &&

(output_padding_height < stride_height ||

output_padding_height < dilation_height),

"output padding must be smaller than either stride or dilation, but got output_padding_height: ",

output_padding_height,

" output_padding_width: ",

output_padding_width,

" stride_height: ",

stride_height,

" stride_width: ",

stride_width,

" dilation_height: ",

dilation_height,

" dilation_width: ",

dilation_width);

if (weight.defined()) {

TORCH_CHECK(

weight.numel() != 0 && (weight.dim() == 2 || weight.dim() == 4),

"non-empty 2D or 4D weight tensor expected, but got: ",

weight.sizes());

if (bias.defined()) {

check_dim_size(bias, 1, 0, weight.size(1));

}

} else if (!weight_nullable) {

AT_ERROR("weight tensor is expected to be non-nullable");

}

int ndim = input.dim();

int dimf = 0;

int dimh = 1;

int dimw = 2;

if (ndim == 4) {

dimf++;

dimh++;

dimw++;

}

TORCH_CHECK(

input.numel() != 0 && (ndim == 3 || ndim == 4),

"non-empty 3D or 4D input tensor expected but got a tensor with size ",

input.sizes());

int64_t input_height = input.size(dimh);

int64_t input_width = input.size(dimw);

int64_t output_height = (input_height - 1) * stride_height - 2 * pad_height +

(dilation_height * (kernel_height - 1) + 1) + output_padding_height;

int64_t output_width = (input_width - 1) * stride_width - 2 * pad_width +

(dilation_width * (kernel_width - 1) + 1) + output_padding_width;

if (output_width < 1 || output_height < 1) {

AT_ERROR(

"Given input size per channel: (",

input_height,

" x ",

input_width,

"). "

"Calculated output size per channel: (",

output_height,

" x ",

output_width,

"). Output size is too small");

}

if (weight.defined()) {

int64_t n_input_plane = weight.size(0);

check_dim_size(input, ndim, dimf, n_input_plane);

}

if (grad_output.defined()) {

if (weight.defined()) {

int64_t n_output_plane = weight.size(1);

check_dim_size(grad_output, ndim, dimf, n_output_plane);

} else if (bias.defined()) {

int64_t n_output_plane = bias.size(0);

check_dim_size(grad_output, ndim, dimf, n_output_plane);

}

check_dim_size(grad_output, ndim, dimh, output_height);

check_dim_size(grad_output, ndim, dimw, output_width);

}

}

There is one point here that I did not understand:

import torch

from torch import nn

input = torch.randn(1, 16, 12, 12)

downsample = nn.Conv2d(16, 16, 3, stride=2, padding=1)

upsample = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1)

h = downsample(input)

print(h.size())

output = upsample(h, output_size=input.size())

print(output.size())

Output of the example:

torch.Size([1, 16, 6, 6])

torch.Size([1, 16, 12, 12])

However:

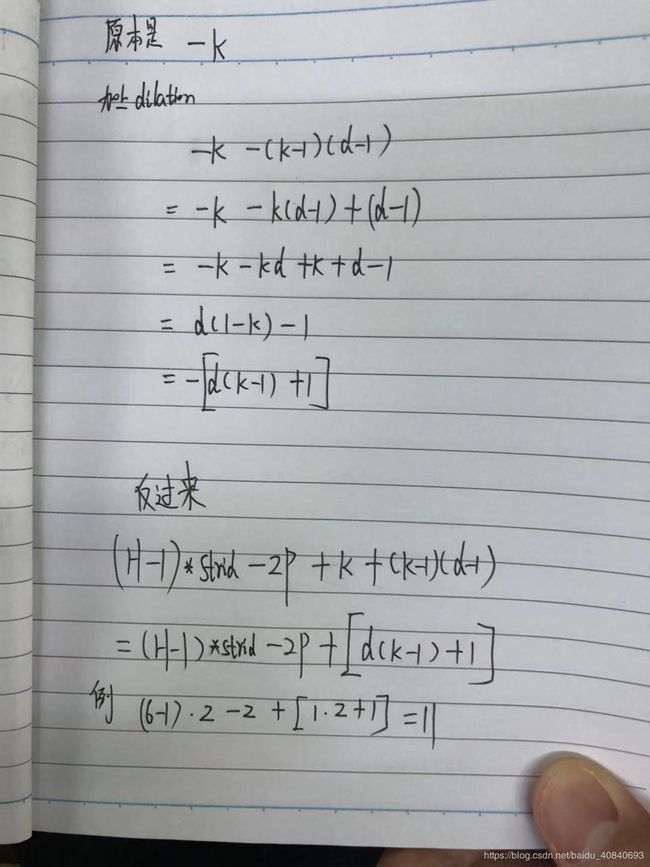

I could not get 12 out of this calculation. Can anyone explain?
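A likely explanation, sketched below: with `output_padding = 0`, the output-size formula used in the source above gives (6 - 1) * 2 - 2 * 1 + (1 * (3 - 1) + 1) = 11, not 12. A stride-2 convolution maps both an 11-wide and a 12-wide input to 6, so the inverse is ambiguous. Passing `output_size` to `forward` lets `ConvTranspose2d` pick the `output_padding` (here 1) that yields the requested size. The helper names `conv_out` and `conv_transpose_out` are made up for this illustration.

```python
import torch
from torch import nn


def conv_out(size, kernel, stride, padding):
    """Output size of an ordinary convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose_out(size, kernel, stride, padding, output_padding=0, dilation=1):
    """Same formula as in slow_conv_transpose2d_out_cpu_template."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel - 1) + 1 + output_padding)


# Both 11 and 12 are downsampled to 6 by Conv2d(kernel=3, stride=2, padding=1).
sizes_after_conv = (conv_out(11, 3, 2, 1), conv_out(12, 3, 2, 1))

base = conv_transpose_out(6, 3, 2, 1)                       # output_padding = 0
padded = conv_transpose_out(6, 3, 2, 1, output_padding=1)   # output_padding = 1

h = torch.randn(1, 16, 6, 6)
upsample = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1)

default_shape = tuple(upsample(h).shape)                           # no output_size given
requested_shape = tuple(upsample(h, output_size=(12, 12)).shape)   # output_padding inferred

# Setting output_padding explicitly in the constructor gives the same size.
explicit = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1, output_padding=1)
explicit_shape = tuple(explicit(h).shape)

print(sizes_after_conv, base, padded)
print(default_shape, requested_shape, explicit_shape)
```

So the formula itself is consistent; the missing term in the hand calculation is the `output_padding` that `output_size` implies.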

ConvTranspose2d details:

https://blog.csdn.net/qq_27261889/article/details/86304061

https://blog.csdn.net/qq_41368247/article/details/86626446

https://www.cnblogs.com/wanghui-garcia/p/10791778.html
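The C++ source quoted earlier computes the transposed convolution as one GEMM (weights times input, giving a `columns` buffer) followed by `col2im`, then adds the bias. The sketch below reproduces those steps in Python, using `torch.nn.functional.fold` in the role of `col2im`. The function name `conv_transpose2d_gemm` is hypothetical, and the sketch assumes square stride, padding and dilation and a 4D input; it is meant to illustrate the idea rather than mirror the C++ code line by line.

```python
import torch
import torch.nn.functional as F


def conv_transpose2d_gemm(x, weight, bias=None, stride=1, padding=0,
                          output_padding=0, dilation=1):
    """Transposed convolution as matrix multiply + col2im (fold)."""
    n, c_in, h, w = x.shape
    _, c_out, kh, kw = weight.shape  # weight layout: (C_in, C_out, kH, kW)

    # Output size, same formula as in the C++ template.
    h_out = (h - 1) * stride - 2 * padding + dilation * (kh - 1) + 1 + output_padding
    w_out = (w - 1) * stride - 2 * padding + dilation * (kw - 1) + 1 + output_padding

    # GEMM: (C_out*kH*kW, C_in) @ (N, C_in, H*W) -> (N, C_out*kH*kW, H*W)
    w_mat = weight.reshape(c_in, c_out * kh * kw)
    columns = w_mat.t() @ x.reshape(n, c_in, h * w)

    # col2im: scatter-add every column back into the output image.
    out = F.fold(columns, output_size=(h_out, w_out), kernel_size=(kh, kw),
                 dilation=dilation, padding=padding, stride=stride)

    if bias is not None:
        out = out + bias.view(1, -1, 1, 1)
    return out


torch.manual_seed(0)
x = torch.randn(2, 16, 6, 6, dtype=torch.float64)
weight = torch.randn(16, 8, 3, 3, dtype=torch.float64)
bias = torch.randn(8, dtype=torch.float64)

ours = conv_transpose2d_gemm(x, weight, bias, stride=2, padding=1, output_padding=1)
reference = F.conv_transpose2d(x, weight, bias, stride=2, padding=1, output_padding=1)

matches = torch.allclose(ours, reference)
print(tuple(ours.shape), matches)
```

Double precision is used here only so that the comparison against `F.conv_transpose2d` is not affected by rounding differences.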

Usage:

https://github.com/csgwon/pytorch-deconvnet/blob/master/models/vgg16_deconv.py

torch.nn.MaxPool2d(2, stride=2, return_indices=True),

torch.nn.Conv2d(64, 128, 3, padding=1),

.........

torch.nn.ConvTranspose2d(128, 64, 3, padding=1),

torch.nn.MaxUnpool2d(2, stride=2),
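The layers listed above mirror each other: the pooling layer records where each maximum came from, and the unpooling layer later puts values back at those positions. A minimal sketch of how the four layers fit together follows; the input size and the direct chaining are assumptions for illustration, and the linked vgg16_deconv.py wires up many more layers.

```python
import torch
from torch import nn

pool = nn.MaxPool2d(2, stride=2, return_indices=True)
conv = nn.Conv2d(64, 128, 3, padding=1)
deconv = nn.ConvTranspose2d(128, 64, 3, padding=1)
unpool = nn.MaxUnpool2d(2, stride=2)

x = torch.randn(1, 64, 8, 8)

# Encoder side: pooling halves the resolution and returns the argmax indices.
pooled, indices = pool(x)
features = conv(pooled)

# Decoder side: the transposed convolution maps 128 channels back to 64,
# then unpooling places each value at the position recorded by the pooling step.
restored = deconv(features)
unpooled = unpool(restored, indices, output_size=x.size())

pooled_shape = tuple(pooled.shape)
features_shape = tuple(features.shape)
restored_shape = tuple(restored.shape)
unpooled_shape = tuple(unpooled.shape)

# At most one non-zero entry per 2x2 window; everything else is filled with zeros.
nonzero_count = int((unpooled != 0).sum())

print(pooled_shape, features_shape, restored_shape, unpooled_shape)
```

Unpooling needs the `indices` tensor from the matching pooling layer, which is why `return_indices=True` is set on `MaxPool2d`.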

Visual explanation:

https://github.com/vdumoulin/conv_arithmetic/blob/master/README.md