哥伦比亚大学 自然语言处理 公开课 授课讲稿 翻译(四)

前言:心血来潮看了一个自然语言处理公开课,大牛柯林斯讲授的。觉得很好,就自己动手把它的讲稿翻译成中文。一方面,希望通过这个翻译过程,让自己更加理解大牛的讲授内容,锻炼自己翻译能力。另一方面,造福人类,hah。括号内容是我自己的辅助理解内容。

翻译的不准的地方,欢迎大家指正。

课程地址:https://www.coursera.org/course/nlangp

哥伦比亚大学 自然语言处理 公开课 授课讲稿 翻译(四):语言模型问题-2

导读:这节课柯老师会就一个语音识别的例子说明语言模型在自然语言处理应用中的重要性。然后,介绍一个非常简单的语言模型建模方法并指此建模方法的缺陷。

>> So, very soon, we will start to talk about techniques that solve precisely this problem, this problem of taking a training samples input and returning a function p as its output. But the first question to ask really is, you know, why on earth would we want to do this? At first sight, this seems like a rather strange problem to be considering. But actually, there's very strong motivation for considering it. So, there are two reasons I'll give for considering the language modeling problem. The first is that language models are actually useful in a very wide range of applications. So, speech recognition was really the first application of language models. And language models are critical to modern speech recognizers. Other examples are optical character recognition, handwriting recognition, another example we'll see later in the course is machine translation. So, in short, language models are actually useful in many applications. I'll come back to this point in more detail in a second but the other reason for studying language models is that the estimation techniques that we develop, later in this lecture, will be very useful for other problems in NLP. So, for example, we'll see problems such as part of speech tagging, and natural language pausing, and machine translation where the estimation techniques described are applied very directly.

额,我们即将开始讲一些解决这个问题的技术。(上一节中 语言模型问题:给较好的句子赋予高概率,较差的句子赋予低概率)这个问题就是用一些训练样本作为输入,返回函数p作为它的输出。然而,第一个疑问是我们究竟为什么要这么做?乍一看,这似乎的确是一个非常奇怪的事情。但事实上这个问题是非常值得去研究的。这里我指出为什么我们要研究这个问题的两个原因。第一个原因是由于语言模型在在很多自然语言处理应用中有着作用。额,语音识别是第一个用到语言模型的应用。语言模型对现代的从事语音识别的人至关重要。其他的例子包括光学字符识别、手写识别和我们课程会讲到的机器翻译。额,简单的说,语言模型在自然语言处理应用中非常有用。后面我会展开讲讲这一点。研究语言模型的另外一个原因是,后面课程会讲到我们拓展的(语言模型)估计方法,这些方法对处理其他自然语言处理问题中也十分有帮助。比如我们会在词性标注、自然语言句法分析和机器翻译中直接套刚才说的估计方法。

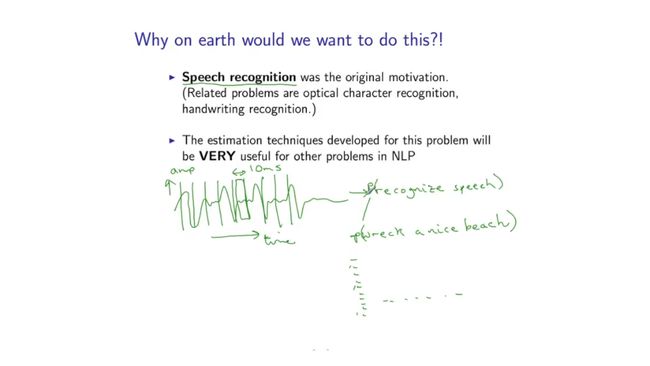

So, let me go back to this first issue and describe in a little more detail how language models are relevant to the problem of speech recognition. And this will be a fairly high level sketch, but hopefully you'll get the basic idea. So, the basic problem in speech recognition is as follows. As input, we have some acoustic recording. So, this is actually somebody speaking. On one axis we have time. On the other axis, we have the amplitude or energy. And in a speech recognizer, we typically go through some pre-processing steps, something like the following. We would typically split the sequence into relatively short time periods. These are often called frames. Each frame might be, for example, around 10 milliseconds long. And then for each frame, we might perform some kind of Fourier analysis, where we get energies of different frequencies within that frame. So, the details aren't too important, but this is the kind of pre-processing we might carry out. Having performed this pre-processing, the task is then to map this acoustic input to the words which were actually spoken. So, let's say, for the sake of example recognized speech was what was actually spoken in this case. The speech recognizer takes an acoustic,sequences input, and outputs a sentence or sequence of words as its output. Now in practice, there are often many possible alternative sentences which could have been spoken which are quite confusable.

好,现在我们回到第一个问题(语言模型的应用),稍微讲点语言模型如何与语音识别搭上关系。这里的介绍是从总体上把握(忽略技术细节),但还是希望大家能够知道他的基本思想。额,下面介绍下语音识别的基本问题。我们把语音记录作为输入,额,这(输入)是人们真实的交谈。(画面是一个语音信号图)它的一个轴(横轴)代表时间,另外一个轴(竖轴)代表振幅或能量。作为一个语音识别者,我们通常会做一些类似下面一样的预处理步骤。我们会将这些(连续的)序列分割成相对短的时间片段。这些(片段)通常称之为帧。每帧一般长为10毫秒。然后,对每帧我们进行某种傅里叶变换,从帧上我们可以得到不同频率的能量。额,处理细节不重要,但是这个预处理步骤还是我们要做的。做完这些预处理后,现在的任务就是把这些声波映射成我们真实交流的单词。为了便于举例我们(假设)这里说的是recognized speech单词。语音识别者取得这个声波序列输入(recognized speech),然后输出单词序列。但实际(处理)中很可能出现一些(和输入序列 比如recognized speech)发音容易混淆的句子。

So, another example sentence might be, wreck a nice beach. It's a famous example from the speech processing community. And the issue here is that these two sentences are quite similar from an acoustic point of view. And if you simply look at a measure of how compatible this sentence is with this acoustics versus this sentence, it's quite possible you might confuse these two sentences, and this is just one example sentence. In practice, there are many, many, many other possibilities which might have a reasonable degree of, of fit with the acoustic input and might be quite confusable with the true sentence recognized speech in this case. Now, if we have a language model, we can actually evaluate a probability, p, of each of these sentences. And a language model adds some very useful information to this whole process, which is the fact that this sentence, recognize speech, is probably more probably than the sentence wreck a nice beach. [laugh] So the, the, the language model is going to provide us additional information in terms of the likelihood or probability of different sentences in a language, and again, there are going to be many others down here with which some of them which might look acoustically like a, like a very good match to the input, but which are completely unlikely as sentences in English. So, in practice, modern speech recognizers use two sources of information. Firstly, they have some way of evaluating how well each of these sentences match the input from an acoustic point of view. But secondly, they also have a language model which gives you a, essentially a prior probability over the different sentences in the language. It can be very useful in getting rid of these kind of confusions.

额,另外一个可能的(输入)句子是wreck a nice beach(与 recognized speech 发音很像)。这是语音处理社区一个著名的例子。现在的问题是从声波角度上看(就是去看这两个句子的语音信号图)这两个句子非常相似。如果大家仅仅简单的关注着如何衡量这个声波和句子的兼容性,大家很可能被这两个句子弄晕(wreck a nice beach 与 recognized speech他们的语音信号图太像了)。而且这仅仅只是一个小例子,实际中在一个合理(误差)范围内有很多很多与输入声波相容的可能的句子,真正的句子(recognized speech)很容易和它们混淆。现在如果我们有一个语言模型,我们就可以评估每个句子的可能性p。语言模型可以为上面的处理过程加入很多有用信息:事实上句子recognize speech比句子wreck a nice beach更具有可能性。(recognize speech比wreck a nice beach更像一个句子)。语言模型会从概率上对语言中不同的句子(进行区分),提供额外信息给我们(帮助我们进行语音识别)。上面例子中有很多与输入(语音信号图)非常匹配但完全不可能出现的英文句子。实践中,当代语音识别者使用两种信息,一个是他们有很多种方法从声波方面评测句子与输入(声波)有多匹配。另外,他们也有语言模型提供语言中不同句子的先验概率,这个对摆脱容易混淆的情况很有帮助。

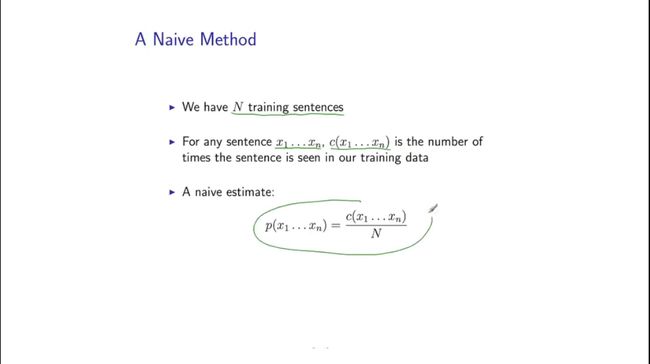

Okay, finally, let's talk about a kind of very naive method for language modeling, just to get us off the ground as a though experiment. So, say, we have N training sentences. Maybe a few million sentences from the New York, New York Times, for example. And for any sentence, X1 up to Xn, I'll just define c of X1 through Xn to be the number of times that, that sentence is seen in our training example, okay? A very simple estimate is then the following, where we define p to be simply c over N, okay? So, we simply count the number of times the sentences being seen and divide by the total number of sentences seen in our training corpus. And this is a language model, you can verify that p is always greater and equal to zero. And also if you sum over all sentences, p will sum to one. It's a perfectly well-formed language model. But it has some very, very clear deficiencies. Most importantly, it will assign probability 0 to any sentence not seen in our training sample. And we know that we're continuiusly seeing new sentences in a language. So, this model really has no ability to generalize to new sentences. So, the most important question in this lecture is essentially, how can we build models to improve upon this naive estimate and in particular, models which generalize well to new test sentences.

好的,最后,我们谈谈一种朴素方法进行语言模型估计,通过这个实验帮我们开始深入。我们有N个训练句子,比如可能是几百万来自纽约时报的句子。每个句子用下X1到Xn表示,我定义句子在训练语料中出现的次数为X1到Xn的c值。好的,接下来说一个简单的估计方法,在此我们定义p值为c除以N。我们简单的数数句子在训练语料中出现次数,然后除以训练语料的句子总数N,哈哈,这就是语言模型。你可以检验一下(语言模型两个条件),单个句子p值是大于等于0的并且如果你把所有句子p值相加,它的和为1。这是一个形式良好的语言模型。但是他有很多明显的缺陷。最大的缺陷是他们给很多训练语料中没有出现的句子赋予0概率。我们知道我们会不断的看到新的句子,但这个模型没有能力生成新的句子。所以本课最重要的问题是我们如何去在朴素评估方法基础上更好的建模,特别是如果建立在新测试句子上泛化能力好的模型。

<第四节完>

哥伦比亚大学 自然语言处理 公开课 授课讲稿 翻译(一):自然语言处理介绍-1

哥伦比亚大学 自然语言处理 公开课 授课讲稿 翻译(二):自然语言处理介绍-2

哥伦比亚大学 自然语言处理 公开课 授课讲稿 翻译(三):语言模型介绍-1