f-GAN: Training Generative Neural Samplers using Variational Divergence Minimization

f f f-GAN: Training Generative Neural Samplers using Variational Divergence Minimization

Paper:http://papers.nips.cc/paper/6066-f-gan-training-generative-neural-samplers-using-variational-divergence-minimization.pdf

Tips:Nips2016的一篇paper,主要研究GAN的object function的问题。

(阅读笔记)

1.Main idea

- 提出问题:GAN的效果很好能够生成一个与目标相似的分布,但是却无法计算出某个事件的似然或者边缘似然。but cannot be used for computing likelihoods or for marginalization.

- GAN做生成只是一个特例。We show that the generative-adversarial approach is a special case of an existing more general variational divergence estimation approach.

- 任意的目标函数(散度)都能用于GAN的训练。We show that any f f f- d i v e r g e n c e divergence divergence can be used for training generative neural samplers.

2.Intro

- 当有了生成模型 Q Q Q后,一般用于:

- 抽样:从模型 Q Q Q中抽样,可以得到重要的信息,如:做决策。

- 估计:从 P P P中得到独立同分布的样本 { x 1 , x 2 , . . . , x n } \{x_1,x_2,...,x_n\} {x1,x2,...,xn},然后可以找到 Q Q Q相似于 P P P分布。

- 似然估计:给定 x x x,估计是分布 Q Q Q的概率。

- 原始GAN是 J S \mathbf{JS} JS散度(Details中详述),如下所示:

D J S ( P ∥ Q ) = 1 2 D K L ( P ∥ 1 2 ( P + Q ) ) + 1 2 D K L ( Q ∥ 1 2 ( P + Q ) ) (1) \begin{aligned} D_{\mathbf{JS}}(P\|Q)=\frac{1}{2}D_{\mathbf{KL}}(P\| \frac{1}{2}(P+Q))+\frac{1}{2}D_{\mathbf{KL}}(Q\| \frac{1}{2}(P+Q)) \tag{1} \end{aligned} DJS(P∥Q)=21DKL(P∥21(P+Q))+21DKL(Q∥21(P+Q))(1)

3.Details

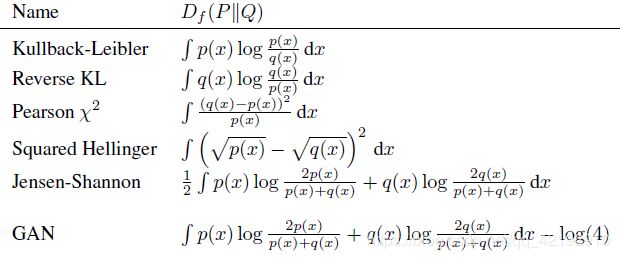

- f f f- d i v e r g e n c e divergence divergence用于衡量两个分布 P P P和 Q Q Q之间得差距的。其中 f ( ⋅ ) f(\cdot) f(⋅)要求:是下凸函数且 f ( 1 ) = 0 f(1)=0 f(1)=0,所以不同的 f ( ⋅ ) f(\cdot) f(⋅)也就用不同的距离散度形式:

D f ( P ∥ Q ) = ∫ X q ( x ) f ( p ( x ) q ( x ) ) d x (2) \begin{aligned} D_f(P\|Q)=\int_{\mathcal{X}}q(x)f(\frac{p(x)}{q(x)})\mathrm{d}x \tag{2} \end{aligned} Df(P∥Q)=∫Xq(x)f(q(x)p(x))dx(2)

其实很明显地,当 p ( x ) p(x) p(x)和 q ( x ) q(x) q(x)相等时, f ( ⋅ ) = 0 f(\cdot)=0 f(⋅)=0,即 D f ( P ∥ Q ) = 0 D_f(P\|Q)=0 Df(P∥Q)=0,之间距离就为0;又 f ( ⋅ ) f(\cdot) f(⋅)是下凸函数,也就是 f ( x 1 + x 2 + , , , + x n n ) ≤ f ( x 1 ) + f ( x 2 ) + . . . + f ( x n ) n → f ( E ( x ) ) ≤ E ( f ( x ) ) f(\frac{x_1+x_2+,,,+x_n}{n})\leq\frac{f(x_1)+f(x_2)+...+f(x_n)}{n}\rightarrow f(\mathbb{E}(x)) \leq \mathbb{E} (f(x)) f(nx1+x2+,,,+xn)≤nf(x1)+f(x2)+...+f(xn)→f(E(x))≤E(f(x)),于是:

E ( f ( x ) ) ≥ f ( E ( x ) ) → ∫ X q ( x ) f ( x ) d x ≥ f ( ∫ X q ( x ) x d x ) → ∫ X q ( x ) f ( p ( x ) q ( x ) ) d x ≥ f ( ∫ X q ( x ) p ( x ) q ( x ) d x ) = f ( ∫ X p ( x ) d x ) = f ( 1 ) = 0 (3) \begin{aligned} \mathbb{E} (f(x)) \geq f(\mathbb{E}(x)) \rightarrow \int_{\mathcal{X}}q(x)f(x)\mathrm{d}x & \geq f(\int_{\mathcal{X}}q(x)x\mathrm{d}x) \\ \rightarrow \int_{\mathcal{X}}q(x)f(\frac{p(x)}{q(x)})\mathrm{d}x & \geq f(\int_{\mathcal{X}}q(x)\frac{p(x)}{q(x)}\mathrm{d}x) \\ &= f(\int_{\mathcal{X}}p(x)\mathrm{d}x) \\ &=f(1) \\ &=0 \tag{3} \end{aligned} E(f(x))≥f(E(x))→∫Xq(x)f(x)dx→∫Xq(x)f(q(x)p(x))dx≥f(∫Xq(x)xdx)≥f(∫Xq(x)q(x)p(x)dx)=f(∫Xp(x)dx)=f(1)=0(3)

D f ( P ∥ Q ) D_f(P\|Q) Df(P∥Q)有下界且是其最小值0,即可用于衡量距离。 - f f f- d i v e r g e n c e divergence divergence中的函数 f ( ⋅ ) f(\cdot) f(⋅)有一共轭函数 f ∗ ( ⋅ ) f^{*}(\cdot) f∗(⋅);在当前自变量 t t t值的时候,取到 f ( ⋅ ) f(\cdot) f(⋅)定义域内所有 u u u,使得 { ⋅ } \{\cdot\} {⋅}中值最大的情况就是函数 f ∗ ( t ) f^*(t) f∗(t)的值,如下式所示:

f ∗ ( t ) = sup u ∈ d o m f { u t − f ( u ) } (4) \begin{aligned} f^*(t)=\sup_{u \in \mathrm{dom}_f} \{ut-f(u)\} \tag{4} \end{aligned} f∗(t)=u∈domfsup{ut−f(u)}(4)

f f f与 f ∗ f^* f∗可以互相转换,即 f ( u ) = sup t ∈ d o m f ∗ { t u − f ∗ ( t ) } f(u)=\sup_{t \in \mathrm{dom}_{f^{*}}} \{tu-f^*(t)\} f(u)=supt∈domf∗{tu−f∗(t)},所以有:

D f ( P ∥ Q ) = ∫ X q ( x ) f ( p ( x ) q ( x ) ) d x = ∫ X q ( x ) sup t ∈ d o m f ∗ { t p ( x ) q ( x ) − f ∗ ( t ) } d x ≥ ∫ X q ( x ) × ( T ( x ) p ( x ) q ( x ) − f ∗ ( T ( x ) ) ) d x = ∫ X T ( x ) p ( x ) − q ( x ) f ∗ ( T ( x ) ) d x = ∫ X T ( x ) p ( x ) d x − ∫ X q ( x ) f ∗ ( T ( x ) ) d x (5) \begin{aligned} D_f(P\|Q)&=\int_{\mathcal{X}}q(x)f(\frac{p(x)}{q(x)})\mathrm{d}x \\ &= \int_{\mathcal{X}} q(x) \sup_{t \in \mathrm{dom}_{f^{*}}} \{t\frac{p(x)}{q(x)}-f^*(t)\} \mathrm{d}x \\ & \geq \int_{\mathcal{X}} q(x) \times (T(x)\frac{p(x)}{q(x)}-f^*(T(x)))\mathrm{d}x \\ &= \int_{\mathcal{X}} T(x)p(x)-q(x)f^*(T(x))\mathrm{d}x \\ &= \int_{\mathcal{X}} T(x)p(x)\mathrm{d}x-\int_{\mathcal{X}}q(x)f^*(T(x))\mathrm{d}x \\ \tag{5} \end{aligned} Df(P∥Q)=∫Xq(x)f(q(x)p(x))dx=∫Xq(x)t∈domf∗sup{tq(x)p(x)−f∗(t)}dx≥∫Xq(x)×(T(x)q(x)p(x)−f∗(T(x)))dx=∫XT(x)p(x)−q(x)f∗(T(x))dx=∫XT(x)p(x)dx−∫Xq(x)f∗(T(x))dx(5)

由上式有,因为 D f ( P ∥ Q ) D_f(P\|Q) Df(P∥Q)要求取到所有的 t t t得到的函数最大值才是最终的结果,直接替换成另一函数 T ( x ) T(x) T(x)必然是更小的,即使 T ( x ) T(x) T(x)是从某一函数集 T \mathcal{T} T得到的最大的函数,所以找到一个很好的最大的 T ( x ) T(x) T(x)就可以去逼近 D f ( P ∥ Q ) D_f(P\|Q) Df(P∥Q);所以上式改写如下:

D f ( P ∥ Q ) ≥ sup T ∈ T { ∫ X T ( x ) p ( x ) d x − ∫ X q ( x ) f ∗ ( T ( x ) ) d x } = sup T ∈ T { E x ∼ P [ T ( x ) ] − E x ∼ Q [ f ∗ ( T ( x ) ) ] } (6) \begin{aligned} D_f(P\|Q) & \geq \sup_{T \in \mathcal{T}} \{ \int_{\mathcal{X}} T(x)p(x)\mathrm{d}x-\int_{\mathcal{X}}q(x)f^*(T(x))\mathrm{d}x \} \\ &=\sup_{T \in \mathcal{T}} \{ \mathbb{E}_{x \sim P}[T(x)] -\mathbb{E}_{x \sim Q}[f^*(T(x))]\} \tag{6} \end{aligned} Df(P∥Q)≥T∈Tsup{∫XT(x)p(x)dx−∫Xq(x)f∗(T(x))dx}=T∈Tsup{Ex∼P[T(x)]−Ex∼Q[f∗(T(x))]}(6)

同时, T ∗ ( x ) = f ′ ( p ( x ) q ( x ) ) T^*(x)=f'(\frac{p(x)}{q(x)}) T∗(x)=f′(q(x)p(x)),见https://arxiv.org/pdf/0809.0853.pdf中5.1节式子 ( 45 ) ( 46 ) (45)(46) (45)(46)。 - 用 f f f- d i v e r g e n c e divergence divergence作为目标函数来训练GAN;其中 P P P是真实分布, T ω T_{\omega} Tω是输入输出函数(给一输入找到最优的结果最大化 ( 6 ) (6) (6)式), Q θ Q_\theta Qθ是生成器(假的分布),如下所示:

F ( θ , ω ) = E x ∼ P [ T ω ( x ) ] − E x ∼ Q θ [ f ∗ ( T ω ( x ) ) ] (7) \begin{aligned} F(\theta,\omega)=\mathbb{E}_{x \sim P}[T_{\omega}(x)] -\mathbb{E}_{x \sim Q_{\theta}}[f^*(T_{\omega}(x))] \tag{7} \end{aligned} F(θ,ω)=Ex∼P[Tω(x)]−Ex∼Qθ[f∗(Tω(x))](7)

正如式子 ( 6 ) ( 7 ) (6)(7) (6)(7)所示,目标是两个分布最小化,即需要 D f ( P ∥ Q ) D_f(P\|Q) Df(P∥Q)最小化,但是需要找到最大化 ( 6 ) (6) (6)式得到最优 T ( x ) T(x) T(x)才能很好衡量 D f D_f Df,其次才对 D f D_f Df最小化。所以训练即关于 ω \omega ω最大化,关于 θ \theta θ最小化。 - 需要考虑 f ∗ f^* f∗的定义域,所以假设 T ω ( x ) = g f ( V ω ( x ) ) T_{\omega}(x)=g_f(V_{\omega}(x)) Tω(x)=gf(Vω(x)),其中 r a n g e V ω → R \mathbf{range}_{V_{\omega}}\rightarrow \mathbb{R} rangeVω→R;同时 r a n g e g f → d o m a i n f ∗ \mathbf{range}_{g_f} \rightarrow \mathbf{domain}_{f^*} rangegf→domainf∗, g f g_f gf激活函数的选择为单调递增函数:

F ( θ , ω ) = E x ∼ P [ g f ( V ω ( x ) ) ] + E x ∼ Q θ [ − f ∗ ( g f ( V ω ( x ) ) ) ] (8) \begin{aligned} F(\theta,\omega)=\mathbb{E}_{x \sim P}[g_f(V_{\omega}(x))] +\mathbb{E}_{x \sim Q_{\theta}}[-f^*(g_f(V_{\omega}(x)))] \tag{8} \end{aligned} F(θ,ω)=Ex∼P[gf(Vω(x))]+Ex∼Qθ[−f∗(gf(Vω(x)))](8)

原始GAN的目标函数如下所示:

F ( θ , ω ) = E x ∼ P [ log D ω ( x ) ] + E x ∼ Q θ [ log ( 1 − D ω ( x ) ) ] (9) \begin{aligned} F(\theta,\omega)=\mathbb{E}_{x \sim P}[\log D_{\omega}(x)] +\mathbb{E}_{x \sim Q_{\theta}}[\log (1-D_{\omega}(x))] \tag{9} \end{aligned} F(θ,ω)=Ex∼P[logDω(x)]+Ex∼Qθ[log(1−Dω(x))](9)

实际中其实找不到 D ω D_{\omega} Dω,只能尽量去接近。

所以参数更新即是,其中 f ( ⋅ ) = u log u − ( u + 1 ) log ( u + 1 ) f(\cdot)=u\log u-(u+1)\log (u+1) f(⋅)=ulogu−(u+1)log(u+1):

{ θ , ω } = arg min G max D D f ( P ∥ G θ ) = arg min G max D { E x ∼ P [ log D ω ( x ) ] + E x ∼ G θ [ log ( 1 − D ω ( x ) ) ] } → ∂ { E x ∼ P [ log D ω ( x ) ] + E x ∼ G θ [ log ( 1 − D ω ( x ) ) ] } ∂ D ω = ∂ { ∫ p ( x ) log D ω ( x ) d x + ∫ g θ ( x ) log ( 1 − D ω ( x ) ) d x } ∂ D ω ( x ) → p ( x ) 1 D ω ( x ) − g θ ( x ) 1 1 − D ω ( x ) = 0 → D ω ( x ) = p ( x ) p ( x ) + g θ ( x ) (10) \begin{aligned} \{\theta,\omega \} & =\arg \min_G \max_D D_f(P \|G_{\theta}) \\ &=\arg \min_G \max_D \{\mathbb{E}_{x \sim P}[\log D_{\omega}(x)] +\mathbb{E}_{x \sim G_{\theta}}[\log (1-D_{\omega}(x))] \} \\ & \rightarrow \frac{\partial \{\mathbb{E}_{x \sim P}[\log D_{\omega}(x)] +\mathbb{E}_{x \sim G_{\theta}}[\log (1-D_{\omega}(x))] \}}{\partial D_{\omega}} \\ &= \frac{\partial \{ \int p(x) \log D_{\omega}(x)\mathrm{d}x + \int g_{\theta}(x)\log (1-D_{\omega}(x))\mathrm{d}x \}}{\partial D_{\omega}(x)} \\ &\rightarrow p(x) \frac{1}{D_{\omega}(x)}-g_{\theta}(x) \frac{1}{1-D_{\omega}(x)}=0\\ &\rightarrow D_{\omega}(x)=\frac{p(x)}{p(x)+g_{\theta}(x)} \tag{10} \end{aligned} {θ,ω}=argGminDmaxDf(P∥Gθ)=argGminDmax{Ex∼P[logDω(x)]+Ex∼Gθ[log(1−Dω(x))]}→∂Dω∂{Ex∼P[logDω(x)]+Ex∼Gθ[log(1−Dω(x))]}=∂Dω(x)∂{∫p(x)logDω(x)dx+∫gθ(x)log(1−Dω(x))dx}→p(x)Dω(x)1−gθ(x)1−Dω(x)1=0→Dω(x)=p(x)+gθ(x)p(x)(10)

将上式子再带回目标函数:

E x ∼ P [ log p ( x ) p ( x ) + g θ ( x ) ] + E x ∼ G θ [ log ( 1 − p ( x ) p ( x ) + g θ ( x ) ) ] = ∫ p ( x ) log p ( x ) p ( x ) + g θ ( x ) d x + ∫ g θ ( x ) log ( 1 − p ( x ) p ( x ) + g θ ( x ) ) d x = ∫ p ( x ) log p ( x ) 2 p ( x ) + g θ ( x ) 2 d x + ∫ g θ ( x ) log ( 1 − p ( x ) 2 p ( x ) + g θ ( x ) 2 ) d x = ∫ p ( x ) log p ( x ) p ( x ) + g θ ( x ) 2 d x + ∫ g θ ( x ) log ( 1 − p ( x ) p ( x ) + g θ ( x ) 2 ) d x − 2 log 2 = K L ( P ∥ P + G θ 2 ) + K L ( G θ ∥ P + G θ 2 ) − 2 log 2 = J S ( P ∥ G θ ) − 2 log 2 (11) \begin{aligned} & \mathbb{E}_{x \sim P}[\log \frac{p(x)}{p(x)+g_{\theta}(x)}] +\mathbb{E}_{x \sim G_{\theta}}[\log (1-\frac{p(x)}{p(x)+g_{\theta}(x)})] \\ &=\int p(x)\log \frac{p(x)}{p(x)+g_{\theta}(x)}\mathrm{d}x +\int g_{\theta}(x) \log (1-\frac{p(x)}{p(x)+g_{\theta}(x)}) \mathrm{d}x \\ &=\int p(x)\log \frac{\frac{p(x)}{2}}{ \frac{p(x)+g_{\theta}(x)}{2}}\mathrm{d}x +\int g_{\theta}(x) \log (1-\frac{\frac{p(x)}{2}}{\frac{p(x)+g_{\theta}(x)}{2}}) \mathrm{d}x \\ &=\int p(x)\log \frac{p(x)}{ \frac{p(x)+g_{\theta}(x)}{2}}\mathrm{d}x +\int g_{\theta}(x) \log (1-\frac{p(x)}{\frac{p(x)+g_{\theta}(x)}{2}}) \mathrm{d}x-2\log 2 \\ &=\mathbf{KL}(P\| \frac{P+G_{\theta}}{2})+\mathbf{KL}(G_{\theta}\| \frac{P+G_{\theta}}{2})-2\log 2 \\ &=\mathbf{JS}(P\|G_{\theta})-2\log 2 \\ \tag{11} \end{aligned} Ex∼P[logp(x)+gθ(x)p(x)]+Ex∼Gθ[log(1−p(x)+gθ(x)p(x))]=∫p(x)logp(x)+gθ(x)p(x)dx+∫gθ(x)log(1−p(x)+gθ(x)p(x))dx=∫p(x)log2p(x)+gθ(x)2p(x)dx+∫gθ(x)log(1−2p(x)+gθ(x)2p(x))dx=∫p(x)log2p(x)+gθ(x)p(x)dx+∫gθ(x)log(1−2p(x)+gθ(x)p(x))dx−2log2=KL(P∥2P+Gθ)+KL(Gθ∥2P+Gθ)−2log2=JS(P∥Gθ)−2log2(11)

所以整体GAN训练过程就是固定G,找D;然后固定D,找G;如 ( 7 ) (7) (7)式所示。 - 用不同 f f f- d i v e r g e n c e divergence divergence作为目标函数就是不同的度量方式来衡量差距,GAN的由来可能是因为理解生成器和判别器打分形象,但是发现其实很多散度都可以用作差距。如下图所示: