OpenVINO Inference Engine: Reading the IR

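Contents

CNNNetReader API list

CNNNetReader constructor

CNNNetReaderImpl constructor

Structure of the .xml network topology file

CNNNetReader::ReadNetwork

CNNNetReaderImpl::ReadNetwork

Reading the .xml file

Getting the topology file version

Getting the parser

FormatParser::Parse: parsing the XML node data

Validating the network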

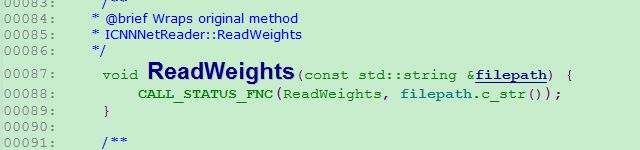

CNNNetReader::ReadWeights

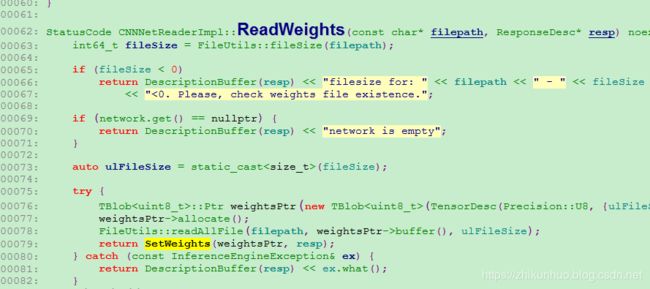

CNNNetReaderImpl::ReadWeights

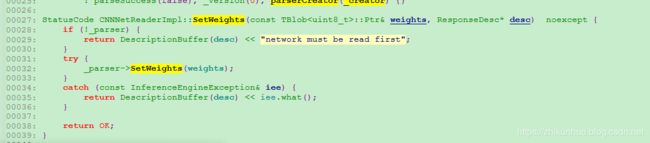

SetWeights

FormatParser::SetWeights

Summary

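The first step of inference with OpenVINO's Inference Engine is reading the IR (.xml and .bin) files. The network topology (.xml) and its weights (.bin) must be loaded into memory before any inference can happen. In any deep learning framework it is important to understand how the network is managed. A good way to understand how the network is organized is to start from how its topology is read, and OpenVINO is no exception.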

OpenVINO exposes its public IR-reading interface through the CNNNetReader class, declared in inference-engine\include\cpp\ie_cnn_net_reader.h.

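CNNNetReader API list

The CNNNetReader class provides the following public interface:

| Method | Description |
| --- | --- |
| `CNNNetReader()` | Default constructor of CNNNetReader |
| `void ReadNetwork(const std::string &filepath)` | Reads the .xml network topology file from a path |
| `void ReadNetwork(const void *model, size_t size)` | Reads the .xml network topology from a memory buffer |
| `void SetWeights(const TBlob<uint8_t>::Ptr &weights)` | Sets the weights |
| `void ReadWeights(const std::wstring &filepath)` | Reads the weights (.bin) file |
| `CNNNetwork getNetwork()` | Returns the CNNNetwork that has been read into memory |
| `bool isParseSuccess()` | Returns whether the network was parsed successfully |
| `std::string getDescription()` | Returns the description of the loaded network |
| `std::string getName()` | Returns the network name |
| `int getVersion()` | Returns the IR version |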

These APIs provide the basic operations on a network. Let's look at how they are implemented.

CNNNetReader constructor

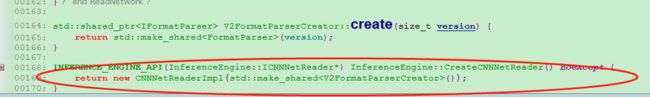

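The default CNNNetReader constructor is as follows:

This constructor shows a technique OpenVINO uses throughout. It calls InferenceEngine::CreateCNNNetReader to create an object that holds the actual implementation, which separates the interface from the concrete implementation:

The concrete implementation of CNNNetReader lives in the CNNNetReaderImpl class, and the CNNNetReaderImpl constructor is called to create a new instance.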

CNNNetReaderImpl constructor

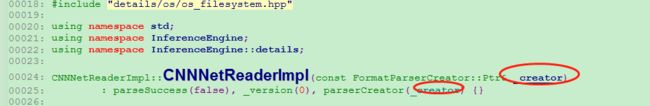

The CNNNetReaderImpl constructor is as follows:

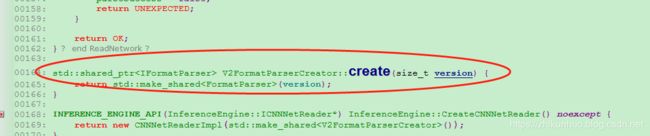

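The CNNNetReaderImpl constructor stores its _creator pointer argument in the parserCreator member. The CNNNetReader constructor passes a V2FormatParserCreator, so _creator holds the address of a V2FormatParserCreator object.

The create() method of V2FormatParserCreator returns a FormatParser.

The classes behind the CNNNetReader constructor are nested fairly deeply. The concrete implementation ends up in CNNNetReaderImpl, and the address of that object is stored in actual. The benefit of this design is that interface and implementation are separated, which reduces coupling. The drawback is just as obvious: the code becomes more complex.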
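The layering described above can be sketched in plain C++: a thin wrapper holding `actual`, an implementation class, and a creator that produces the parser. This is a minimal sketch under stated assumptions. Every name in it (`Reader`, `IReader`, `ReaderImpl`, `ParserCreator`, `V2ParserCreator`, `Parser`, `createReader`) is hypothetical. The classes only mirror the shape of CNNNetReader, ICNNNetReader, CNNNetReaderImpl, V2FormatParserCreator and FormatParser; this is not OpenVINO's code. The real interface also passes a ResponseDesc and returns a StatusCode rather than an int.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <string>

// Hypothetical parser object, playing the role of FormatParser.
struct Parser {
    explicit Parser(int version) : version(version) {}
    int version;
};

// Hypothetical abstract creator, playing the role of the parser creator.
struct ParserCreator {
    virtual ~ParserCreator() = default;
    virtual std::shared_ptr<Parser> create(int version) = 0;
};

// Hypothetical concrete creator, playing the role of V2FormatParserCreator.
struct V2ParserCreator : ParserCreator {
    std::shared_ptr<Parser> create(int version) override {
        return std::make_shared<Parser>(version);
    }
};

// Hypothetical abstract interface, playing the role of ICNNNetReader.
struct IReader {
    virtual ~IReader() = default;
    virtual int readNetwork(const std::string& xml) = 0;  // 0 means success
};

// Hypothetical implementation, playing the role of CNNNetReaderImpl:
// it only keeps the creator and asks it for a parser when reading.
class ReaderImpl : public IReader {
public:
    explicit ReaderImpl(std::shared_ptr<ParserCreator> creator)
        : parserCreator(std::move(creator)) {}
    int readNetwork(const std::string& xml) override {
        parser = parserCreator->create(2);  // the version would come from the XML
        return xml.empty() ? -1 : 0;
    }
private:
    std::shared_ptr<ParserCreator> parserCreator;
    std::shared_ptr<Parser> parser;
};

// Hypothetical factory, playing the role of InferenceEngine::CreateCNNNetReader.
std::shared_ptr<IReader> createReader() {
    return std::make_shared<ReaderImpl>(std::make_shared<V2ParserCreator>());
}

// Hypothetical user-facing wrapper, playing the role of CNNNetReader:
// every call is forwarded to the object stored in `actual`.
class Reader {
public:
    Reader() : actual(createReader()) {}
    void readNetwork(const std::string& xml) {
        if (actual->readNetwork(xml) != 0)
            throw std::runtime_error("ReadNetwork failed");
    }
private:
    std::shared_ptr<IReader> actual;
};
```

Callers only see `Reader`. Plugging in a different creator changes which parser is used without touching the wrapper. That is the decoupling, and the extra indirection, that the paragraph above describes.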

Structure of the .xml network topology file

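First, some background. An OpenVINO .xml network topology file is roughly structured as follows:

```xml
<net name="..." version="..." batch="...">
    <layers>
        <layer id="..." name="..." precision="..." type="...">
            <input>
                <port id="...">
                    <dim>...</dim>
                </port>
            </input>
            <output>
                <port id="...">
                    <dim>...</dim>
                </port>
            </output>
            <blobs>
                <weights offset="..." size="..."/>
                <biases offset="..." size="..."/>
            </blobs>
        </layer>
        ...
    </layers>
    <edges>
        <edge from-layer="..." from-port="..." to-layer="..." to-port="..."/>
        ...
    </edges>
    ...
</net>
```

The topology is organized hierarchically in XML:

- Each network is wrapped in a `<net>` element.
- All of the network's layers are listed inside `<layers>`, and each layer is described by its own `<layer>` element.
- After all the layers comes `<edges>`, whose `<edge>` elements describe how the layers are connected.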

Caffe describes the network topology in a prototxt file. OpenVINO instead uses an XML file, which makes the hierarchy more explicit.

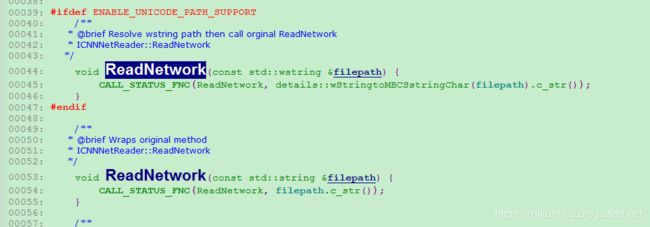

CNNNetReader::ReadNetwork

ReadNetwork() essentially loads the topology described above into memory, much like the init function in Caffe.

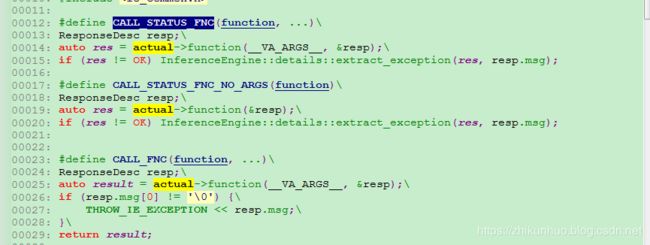

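The implementation is simple: it directly invokes the CALL_STATUS_FNC macro, which is implemented as follows:

The macro directly calls the corresponding function through the actual pointer. As the CNNNetReader constructor showed, actual points to a CNNNetReaderImpl object. This is how the interface is kept separate from the implementation.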
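A forwarding macro of this kind can be sketched as follows. `FORWARD_STATUS_FNC`, `StatusCode`, `ResponseDesc`, `ReaderImpl` and `Reader` are simplified, hypothetical stand-ins. On failure the sketch simply throws `std::runtime_error`. The real CALL_STATUS_FNC also turns a non-OK status into an exception, but its exact definition should be checked in the OpenVINO headers.

```cpp
#include <cassert>
#include <cstring>
#include <stdexcept>
#include <string>

// Hypothetical stand-ins for StatusCode / ResponseDesc.
enum StatusCode { OK = 0, GENERAL_ERROR = -1 };
struct ResponseDesc { char msg[256] = {}; };

// Hypothetical macro in the spirit of CALL_STATUS_FNC: call the same-named
// method on `actual`, append a ResponseDesc*, and throw on a non-OK status.
#define FORWARD_STATUS_FNC(function, ...)                      \
    ResponseDesc resp;                                         \
    StatusCode res = actual->function(__VA_ARGS__, &resp);     \
    if (res != OK) throw std::runtime_error(resp.msg);

// Hypothetical implementation object that reports errors through ResponseDesc.
struct ReaderImpl {
    StatusCode ReadNetwork(const std::string& path, ResponseDesc* resp) {
        if (path.empty()) {
            std::strncpy(resp->msg, "empty path", sizeof(resp->msg) - 1);
            return GENERAL_ERROR;
        }
        lastPath = path;
        return OK;
    }
    std::string lastPath;
};

// Hypothetical wrapper: each public method is a one-line forward to `actual`.
struct Reader {
    ReaderImpl* actual;
    void ReadNetwork(const std::string& path) { FORWARD_STATUS_FNC(ReadNetwork, path); }
};
```

With this shape, each wrapper method stays a one-liner, and all error handling lives in the macro.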

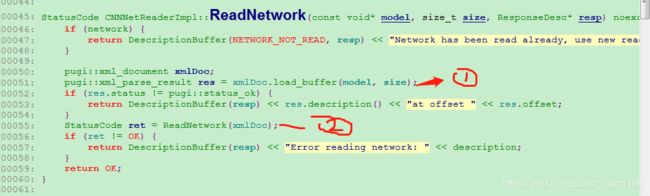

CNNNetReaderImpl::ReadNetwork

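CNNNetReaderImpl::ReadNetwork is the real implementation of ReadNetwork.

Reading the .xml file

This step is simple. It uses the third-party pugixml library to parse the .xml topology file into xmlDoc in memory.

Next, the ReadNetwork interface is called to extract the data from these XML nodes and organize it into the network format used later on.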

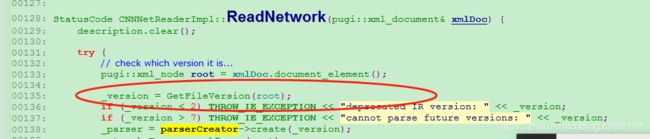

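Getting the topology file version

This step reads the version attribute of the net node. Newer IRs are usually version 2, while older OpenVINO releases used version 1.

GetFileVersion() is implemented as follows: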

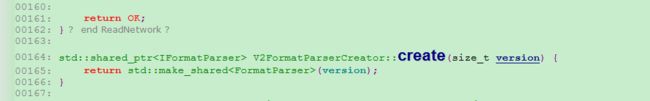
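The sketch below illustrates what reading the version amounts to: it pulls the `version` attribute out of the opening `<net>` tag. It is an assumption-laden stand-in. OpenVINO works on the pugixml DOM rather than raw text, and a regex is not a general XML parser. The name `getFileVersion` and the default of 0 for a missing attribute are hypothetical choices for this example.

```cpp
#include <cassert>
#include <regex>
#include <stdexcept>
#include <string>

// Hypothetical helper, NOT OpenVINO's GetFileVersion: finds the first <net ...>
// tag in the raw text and returns its integer `version` attribute, or
// `defVersion` when the attribute is absent. Throws if no <net> tag exists.
int getFileVersion(const std::string& xml, int defVersion = 0) {
    static const std::regex netTag(R"(<net\b([^>]*)>)");
    static const std::regex versionAttr(R"re(\bversion\s*=\s*"(\d+)")re");
    std::smatch tag;
    if (!std::regex_search(xml, tag, netTag))
        throw std::runtime_error("no <net> element found");
    const std::string attrs = tag[1].str();  // everything between "<net" and ">"
    std::smatch ver;
    if (!std::regex_search(attrs, ver, versionAttr))
        return defVersion;
    return std::stoi(ver[1].str());
}
```

For example, `getFileVersion("<net name=\"m\" version=\"2\">")` yields 2. The `version="1.0"` in an `<?xml ...?>` declaration is ignored because only the attributes of the `<net>` tag are searched.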

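Getting the parser

Getting the parser essentially means obtaining the class that does the parsing:

```cpp
_parser = parserCreator->create(_version);
```

From the constructor we know that parserCreator points to a V2FormatParserCreator object:

So the result is a FormatParser.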

FormatParser::Parse: parsing the XML node data

The generated parser is then used to parse the XML node data that was read:

```cpp
network = _parser->Parse(root);
```

The Parse method of FormatParser is as follows:

```cpp
CNNNetworkImplPtr FormatParser::Parse(pugi::xml_node& root) {
    _network.reset(new CNNNetworkImpl());  // create a CNNNetworkImpl that will hold all network data
    _network->setName(GetStrAttr(root, "name", ""));  // set the network name
    _defPrecision = Precision::FromStr(GetStrAttr(root, "precision", "UNSPECIFIED"));
    _network->setPrecision(_defPrecision);  // set the network precision

    // parse the input Data
    DataPtr inputData;

    // parse the graph layers
    auto allLayersNode = root.child("layers");  // the <layers> node that contains every <layer>
    std::vector<CNNLayer::Ptr> inputLayers;
    int nodeCnt = 0;
    std::map<int, CNNLayer::Ptr> layerById;
    bool identifyNetworkPrecision = _defPrecision == Precision::UNSPECIFIED;
    // visit every <layer> node and extract its data
    for (auto node = allLayersNode.child("layer"); !node.empty(); node = node.next_sibling("layer")) {
        LayerParseParameters lprms;
        ParseGenericParams(node, lprms);  // parse the <layer> node into a LayerParseParameters struct
        CNNLayer::Ptr layer = CreateLayer(node, lprms);  // create the matching layer object
        if (!layer) THROW_IE_EXCEPTION << "Don't know how to create Layer type: " << lprms.prms.type;

        layersParseInfo[layer->name] = lprms;  // keep each layer's parse info in layersParseInfo
        _network->addLayer(layer);  // add the layer to the network
        layerById[lprms.layerId] = layer;  // index the layer by its id in layerById

        if (equal(layer->type, "input")) {
            inputLayers.push_back(layer);  // remember input layers in inputLayers
        }

        if (identifyNetworkPrecision) {  // derive the network precision from the layers
            if (!_network->getPrecision()) {
                _network->setPrecision(lprms.prms.precision);
            }
            if (_network->getPrecision() != lprms.prms.precision) {
                _network->setPrecision(Precision::MIXED);
                identifyNetworkPrecision = false;
            }
        }

        // register every output port in layer->outData and in _portsToData
        for (int i = 0; i < lprms.outputPorts.size(); i++) {
            const auto &outPort = lprms.outputPorts[i];
            const auto outId = gen_id(lprms.layerId, outPort.portId);
            const std::string outName = lprms.outputPorts.size() == 1
                    ? lprms.prms.name
                    : lprms.prms.name + "." + std::to_string(i);
            DataPtr& ptr = _network->getData(outName.c_str());
            if (!ptr) {
                ptr.reset(new Data(outName, outPort.dims, outPort.precision, TensorDesc::getLayoutByDims(outPort.dims)));
                ptr->setDims(outPort.dims);
            }
            _portsToData[outId] = ptr;

            if (ptr->getCreatorLayer().lock())
                THROW_IE_EXCEPTION << "two layers set to the same output [" << outName << "], conflict at offset "
                                   << node.offset_debug();

            ptr->getCreatorLayer() = layer;
            layer->outData.push_back(ptr);
        }
        nodeCnt++;
    }

    // connect the edges
    pugi::xml_node edges = root.child("edges");  // connection information between layers

    FOREACH_CHILD(_ec, edges, "edge") {
        int fromLayer = GetIntAttr(_ec, "from-layer");
        int fromPort = GetIntAttr(_ec, "from-port");
        int toLayer = GetIntAttr(_ec, "to-layer");
        int toPort = GetIntAttr(_ec, "to-port");

        const auto dataId = gen_id(fromLayer, fromPort);
        auto targetLayer = layerById[toLayer];
        if (!targetLayer)
            THROW_IE_EXCEPTION << "Layer ID " << toLayer << " was not found while connecting edge at offset "
                               << _ec.offset_debug();

        SetLayerInput(*_network, dataId, targetLayer, toPort);  // set the target layer's input from this edge
    }

    auto keep_input_info = [&] (DataPtr &in_data) {
        InputInfo::Ptr info(new InputInfo());
        info->setInputData(in_data);
        Precision prc = info->getPrecision();

        // Convert precision into native format (keep element size)
        prc = prc == Precision::Q78 ? Precision::I16 :
              prc == Precision::FP16 ? Precision::FP32 :
              static_cast<Precision::ePrecision>(prc);

        info->setPrecision(prc);
        _network->setInputInfo(info);
    };

    // Keep all data from InputLayers
    for (auto inLayer : inputLayers) {
        if (inLayer->outData.size() != 1)
            THROW_IE_EXCEPTION << "Input layer must have 1 output. "
                                  "See documentation for details.";
        keep_input_info(inLayer->outData[0]);  // register the input layer's data as a network input (_inputData in CNNNetworkImpl)
    }

    // Keep all data which has no creator layer:
    // create Data objects for input ports that no edge feeds
    for (auto &kvp : _network->allLayers()) {
        const CNNLayer::Ptr& layer = kvp.second;
        auto pars_info = layersParseInfo[layer->name];

        if (layer->insData.empty())
            layer->insData.resize(pars_info.inputPorts.size());

        for (int i = 0; i < layer->insData.size(); i++) {
            if (!layer->insData[i].lock()) {
                std::string data_name = (layer->insData.size() == 1)
                        ? layer->name
                        : layer->name + "." + std::to_string(i);

                DataPtr data(new Data(data_name,
                                      pars_info.inputPorts[i].dims,
                                      pars_info.inputPorts[i].precision,
                                      TensorDesc::getLayoutByDims(pars_info.inputPorts[i].dims)));
                data->setDims(pars_info.inputPorts[i].dims);

                layer->insData[i] = data;
                data->getInputTo()[layer->name] = layer;

                const auto insId = gen_id(pars_info.layerId, pars_info.inputPorts[i].portId);
                _portsToData[insId] = data;

                keep_input_info(data);
            }
        }
    }

    auto statNode = root.child("statistics");
    ParseStatisticSection(statNode);  // handle the network <statistics> node

    if (!_network->allLayers().size())
        THROW_IE_EXCEPTION << "Incorrect model! Network doesn't contain layers.";

    size_t inputLayersNum(0);
    CaselessEq<std::string> cmp;
    for (const auto& kvp : _network->allLayers()) {
        const CNNLayer::Ptr& layer = kvp.second;
        if (cmp(layer->type, "Input") || cmp(layer->type, "Const"))
            inputLayersNum++;  // count Input and Const layers
    }

    if (!inputLayersNum && !cmp(root.name(), "body"))
        THROW_IE_EXCEPTION << "Incorrect model! Network doesn't contain input layers.";

    // check all input ports are occupied
    for (const auto& kvp : _network->allLayers()) {
        const CNNLayer::Ptr& layer = kvp.second;
        const LayerParseParameters& parseInfo = layersParseInfo[layer->name];
        size_t inSize = layer->insData.size();
        if (inSize != parseInfo.inputPorts.size())
            THROW_IE_EXCEPTION << "Layer " << layer->name << " does not have any edge connected to it";

        for (unsigned i = 0; i < inSize; i++) {
            if (!layer->insData[i].lock()) {
                THROW_IE_EXCEPTION << "Layer " << layer->name.c_str() << " input port "
                                   << parseInfo.inputPorts[i].portId << " is not connected to any data";
            }
        }
        layer->validateLayer();
    }

    // parse mean image
    ParsePreProcess(root);  // parse the pre-processing section
    _network->resolveOutput();

    // Set default output precision to FP32 (for back-compatibility)
    OutputsDataMap outputsInfo;
    _network->getOutputsInfo(outputsInfo);  // outputs that are neither FP32 nor I32 are switched to FP32
    for (auto outputInfo : outputsInfo) {
        if (outputInfo.second->getPrecision() != Precision::FP32 &&
            outputInfo.second->getPrecision() != Precision::I32) {
            outputInfo.second->setPrecision(Precision::FP32);
        }
    }

    return _network;  // the parsed topology now lives in CNNNetworkImpl; return it for later stages
}
```

The whole function is fairly complex and is the core of CNNNetwork management. For a detailed walkthrough of this parsing, see
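The core of Parse can be condensed into a small self-contained sketch with three parts:

- Create one Data object per output port, keyed by layer id and port id.
- Wire the edges through that map.
- Derive a MIXED network precision when the layers disagree.

All types and names here (`Layer`, `Data`, `Edge`, `Network`, `genId`, `buildNetwork`) are hypothetical simplifications of CNNLayer, Data, _portsToData and gen_id. Two choices are assumptions made for the example, not OpenVINO's exact behavior: the 32-bit packing in `genId`, and using the `to-port` value directly as the insData index.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <vector>

enum class Precision { UNSPECIFIED, FP32, FP16, MIXED };

struct Layer;

// Hypothetical simplified Data: one producer, any number of consumers.
struct Data {
    std::string name;
    std::weak_ptr<Layer> creator;
    std::map<std::string, std::weak_ptr<Layer>> inputTo;
};

// Hypothetical simplified layer: output port ids come from the .xml.
struct Layer {
    int id;
    std::string name;
    Precision precision;
    std::vector<int> outPortIds;
    std::vector<std::shared_ptr<Data>> outData;
    std::vector<std::weak_ptr<Data>> insData;
};

struct Edge { int fromLayer, fromPort, toLayer, toPort; };

struct Network {
    Precision precision = Precision::UNSPECIFIED;
    std::map<int, std::shared_ptr<Layer>> layerById;
    std::map<std::uint64_t, std::shared_ptr<Data>> portsToData;
};

// Pack (layer id, port id) into one map key, in the spirit of gen_id.
std::uint64_t genId(int layerId, int portId) {
    return (static_cast<std::uint64_t>(static_cast<std::uint32_t>(layerId)) << 32) |
           static_cast<std::uint32_t>(portId);
}

Network buildNetwork(const std::vector<std::shared_ptr<Layer>>& layers,
                     const std::vector<Edge>& edges) {
    Network net;
    bool identify = true;  // assume the <net> element gave no precision
    for (const auto& layer : layers) {
        net.layerById[layer->id] = layer;
        if (identify) {
            if (net.precision == Precision::UNSPECIFIED) net.precision = layer->precision;
            if (net.precision != layer->precision) {
                net.precision = Precision::MIXED;  // layers disagree
                identify = false;
            }
        }
        // One Data per output port; a single output keeps the layer's name.
        for (std::size_t i = 0; i < layer->outPortIds.size(); ++i) {
            auto data = std::make_shared<Data>();
            data->name = layer->outPortIds.size() == 1 ? layer->name
                                                        : layer->name + "." + std::to_string(i);
            data->creator = layer;
            layer->outData.push_back(data);
            net.portsToData[genId(layer->id, layer->outPortIds[i])] = data;
        }
    }
    // Each edge links a producer's output port to a consumer's input slot.
    for (const Edge& e : edges) {
        auto target = net.layerById.find(e.toLayer);
        if (target == net.layerById.end())
            throw std::runtime_error("Layer ID " + std::to_string(e.toLayer) + " was not found");
        auto data = net.portsToData.find(genId(e.fromLayer, e.fromPort));
        if (data == net.portsToData.end())
            throw std::runtime_error("edge refers to an unknown output port");
        auto& ins = target->second->insData;
        if (ins.size() <= static_cast<std::size_t>(e.toPort))
            ins.resize(static_cast<std::size_t>(e.toPort) + 1);
        ins[e.toPort] = data->second;
        data->second->inputTo[target->second->name] = target->second;
    }
    return net;
}
```

Keying outputs by (layer, port) is what lets the edge pass look up the producing Data in one map lookup. It never needs to search the layer list again.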

Validating the network

The network is then checked for validity:

```cpp
void CNNNetworkImpl::validate(int version) {
    std::set<std::string> layerNames;
    std::set<std::string> dataNames;

    InputsDataMap inputs;
    this->getInputsInfo(inputs);
    if (inputs.empty()) {
        THROW_IE_EXCEPTION << "No input layers";
    }

    bool res = CNNNetForestDFS(CNNNetGetAllInputLayers(*this), [&](CNNLayerPtr layer) {
        std::string layerName = layer->name;

        for (auto i : layer->insData) {
            auto data = i.lock();
            if (data) {
                auto inputTo = data->getInputTo();
                auto iter = inputTo.find(layerName);
                auto dataName = data->getName();
                if (iter == inputTo.end()) {
                    THROW_IE_EXCEPTION << "Data " << data->getName() << " which inserted into the layer "
                                       << layerName
                                       << " does not point at this layer";
                }
                if (!data->getCreatorLayer().lock()) {
                    THROW_IE_EXCEPTION << "Data " << dataName << " has no creator layer";
                }
            } else {
                THROW_IE_EXCEPTION << "Data which inserted into the layer " << layerName << " is nullptr";
            }
        }

        for (auto data : layer->outData) {
            auto inputTo = data->getInputTo();
            std::string dataName = data->getName();
            for (auto layerIter : inputTo) {
                CNNLayerPtr layerInData = layerIter.second;
                if (!layerInData) {
                    THROW_IE_EXCEPTION << "Layer which takes data " << dataName << " is nullptr";
                }
                auto insertedDatas = layerInData->insData;

                auto it = std::find_if(insertedDatas.begin(), insertedDatas.end(),
                                       [&](InferenceEngine::DataWeakPtr& d) {
                                           return d.lock() == data;
                                       });
                if (it == insertedDatas.end()) {
                    THROW_IE_EXCEPTION << "Layer " << layerInData->name << " which takes data " << dataName
                                       << " does not point at this data";
                }
            }
            auto dataNameSetPair = dataNames.insert(dataName);
            if (!dataNameSetPair.second) {
                THROW_IE_EXCEPTION << "Data name " << dataName << " is not unique";
            }
        }

        auto layerSetPair = layerNames.insert(layerName);
        if (!layerSetPair.second) {
            THROW_IE_EXCEPTION << "Layer name " << layerName << " is not unique";
        }
    }, false);

    std::string inputType = "Input";
    for (auto i : inputs) {
        CNNLayerPtr layer = i.second->getInputData()->getCreatorLayer().lock();
        if (layer && !equal(layer->type, inputType)) {
            THROW_IE_EXCEPTION << "Input layer " << layer->name
                               << " should have Input type but actually its type is " << layer->type;
        }
    }

    if (!res) {
        THROW_IE_EXCEPTION << "Sorting not possible, due to existed loop.";
    }
}
```

This code will be covered in detail separately later.
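validate() performs two kinds of checks:

- Unique names, enforced through the return value of `std::set::insert`.
- A depth-first walk from the inputs that fails when it finds a loop.

Both can be sketched over a plain adjacency list. This is a minimal sketch: `Graph`, `validateGraph` and `checkUniqueNames` are hypothetical. The white/gray/black DFS coloring is a standard cycle-detection technique chosen for the example, not necessarily how CNNNetForestDFS is written.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical graph: layer name -> names of the layers consuming its outputs.
using Graph = std::map<std::string, std::vector<std::string>>;

namespace {
enum class Mark { White, Gray, Black };  // unvisited / on current path / done

// Returns false if a back edge (a loop) is reachable from `node`.
bool dfs(const Graph& g, const std::string& node, std::map<std::string, Mark>& mark) {
    mark[node] = Mark::Gray;
    auto it = g.find(node);
    if (it != g.end()) {
        for (const auto& next : it->second) {
            Mark m = mark[next];  // missing entries default to White
            if (m == Mark::Gray) return false;
            if (m == Mark::White && !dfs(g, next, mark)) return false;
        }
    }
    mark[node] = Mark::Black;
    return true;
}
}  // namespace

// Walks the graph from every input (a forest DFS) and rejects loops.
void validateGraph(const Graph& g, const std::vector<std::string>& inputs) {
    if (inputs.empty()) throw std::runtime_error("No input layers");
    std::map<std::string, Mark> mark;
    for (const auto& in : inputs) {
        if (mark[in] == Mark::White && !dfs(g, in, mark))
            throw std::runtime_error("Sorting not possible, due to existed loop.");
    }
}

// Same idea as the layerNames/dataNames sets: insert().second is false
// when the name was already present.
void checkUniqueNames(const std::vector<std::string>& names) {
    std::set<std::string> seen;
    for (const auto& n : names)
        if (!seen.insert(n).second)
            throw std::runtime_error("Layer name " + n + " is not unique");
}
```

A gray node seen again means the walk has returned to a layer that is still on the current path, which is exactly the loop that makes a topological order impossible.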

CNNNetReader::ReadWeights

ReadWeights is the interface for reading the .bin file:

It ultimately calls CNNNetReaderImpl::ReadWeights.

CNNNetReaderImpl::ReadWeights

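CNNNetReaderImpl::ReadWeights() works roughly as follows:

1. It gets the size of the weights file.
2. It allocates a buffer of that size through the TBlob interface.
3. It reads the whole file into the weightsPtr buffer.
4. It calls SetWeights() to apply the weights.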
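In standard C++, the read step (query the size, allocate once, read everything) looks roughly like the sketch below. It rests on assumptions: `readWeightsFile` is hypothetical, and a `std::vector<uint8_t>` stands in for the `TBlob<uint8_t>` buffer that CNNNetReaderImpl allocates.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: reads a whole binary file into memory by querying its
// size, allocating once, and reading every byte.
std::vector<std::uint8_t> readWeightsFile(const std::string& path) {
    std::ifstream file(path, std::ios::binary | std::ios::ate);  // open positioned at the end
    if (!file) throw std::runtime_error("cannot open weights file: " + path);
    const std::streamsize size = static_cast<std::streamsize>(file.tellg());  // file size
    if (size < 0) throw std::runtime_error("cannot determine size of: " + path);
    file.seekg(0, std::ios::beg);
    std::vector<std::uint8_t> buffer(static_cast<std::size_t>(size));
    if (size > 0 && !file.read(reinterpret_cast<char*>(buffer.data()), size))
        throw std::runtime_error("failed to read weights file: " + path);
    return buffer;
}
```

Reading everything in one call keeps all weights in a single contiguous buffer. Each layer's weights and biases can then be addressed by offset and size.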

SetWeights

The SetWeights() function:

It finally calls the parser, a FormatParser, to set the weights.

FormatParser::SetWeights

FormatParser::SetWeights() does the final processing:

```cpp
void FormatParser::SetWeights(const TBlob<uint8_t>::Ptr& weights) {
    for (auto& kvp : _network->allLayers()) {
        auto fit = layersParseInfo.find(kvp.second->name);
        // todo: may check that earlier - while parsing...
        if (fit == layersParseInfo.end())
            THROW_IE_EXCEPTION << "Internal Error: ParseInfo for " << kvp.second->name << " are missing...";
        auto& lprms = fit->second;

        WeightableLayer* pWL = dynamic_cast<WeightableLayer*>(kvp.second.get());
        if (pWL != nullptr) {
            if (lprms.blobs.find("weights") != lprms.blobs.end()) {
                if (lprms.prms.type == "BinaryConvolution") {
                    auto segment = lprms.blobs["weights"];
                    if (segment.getEnd() > weights->size())
                        THROW_IE_EXCEPTION << "segment exceeds given buffer limits. Please, validate weights file";
                    size_t noOfElement = segment.size;
                    SizeVector w_dims({noOfElement});
                    typename TBlobProxy<uint8_t>::Ptr binBlob(
                        new TBlobProxy<uint8_t>(Precision::BIN, Layout::C, weights, segment.start, w_dims));

                    pWL->_weights = binBlob;
                } else {
                    pWL->_weights = GetBlobFromSegment(weights, lprms.blobs["weights"]);
                }
                pWL->blobs["weights"] = pWL->_weights;
            }
            if (lprms.blobs.find("biases") != lprms.blobs.end()) {
                pWL->_biases = GetBlobFromSegment(weights, lprms.blobs["biases"]);
                pWL->blobs["biases"] = pWL->_biases;
            }
        }
        auto pGL = kvp.second.get();
        if (pGL == nullptr) continue;
        for (auto s : lprms.blobs) {
            pGL->blobs[s.first] = GetBlobFromSegment(weights, s.second);
        }

        // Some layer can specify additional action to prepare weights
        if (fit->second.internalWeightSet)
            fit->second.internalWeightSet(weights);
    }

    for (auto &kvp : _preProcessSegments) {
        const std::string &inputName = kvp.first;
        auto &segments = kvp.second;
        auto inputInfo = _network->getInput(inputName);
        if (!inputInfo)
            THROW_IE_EXCEPTION << "Internal error: missing input name " << inputName;

        auto dims = inputInfo->getTensorDesc().getDims();
        size_t width = 0ul, height = 0ul;

        if (dims.size() == 3) {
            height = dims.at(1);
            width = dims.at(2);
        } else if (dims.size() == 4) {
            height = dims.at(2);
            width = dims.at(3);
        } else if (dims.size() == 5) {
            height = dims.at(3);
            width = dims.at(4);
        } else {
            THROW_IE_EXCEPTION << inputName << " has unsupported layout " << inputInfo->getTensorDesc().getLayout();
        }

        PreProcessInfo &pp = inputInfo->getPreProcess();

        for (size_t c = 0; c < segments.size(); c++) {
            if (segments[c].size == 0)
                continue;
            Blob::Ptr blob = GetBlobFromSegment(weights, segments[c]);
            blob->getTensorDesc().reshape({height, width}, Layout::HW);  // to fit input image sizes (summing it is an image)
            pp.setMeanImageForChannel(blob, c);
        }
    }
}
```

FormatParser::SetWeights will be covered in more detail later.
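Two small pieces of SetWeights are easy to isolate:

- Turning an (offset, size) segment into a blob, with a bounds check.
- Choosing the height and width of a mean image from the input dimensions.

In this sketch, `Segment`, `blobFromSegment` and `meanImageHW` are hypothetical. Copying into a new vector is a simplification: the code above wraps the shared weights buffer instead of copying it, as the BinaryConvolution branch does with TBlobProxy.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical (offset, size) pair recorded from a layer's <blobs> section.
struct Segment {
    std::size_t start = 0;
    std::size_t size = 0;
    std::size_t getEnd() const { return start + size; }
};

// Copies one segment out of the .bin buffer, rejecting segments that run
// past the end of the buffer (the same test as the BinaryConvolution branch).
std::vector<std::uint8_t> blobFromSegment(const std::vector<std::uint8_t>& weights, const Segment& seg) {
    if (seg.getEnd() > weights.size())
        throw std::runtime_error("segment exceeds given buffer limits. Please, validate weights file");
    return std::vector<std::uint8_t>(weights.begin() + static_cast<std::ptrdiff_t>(seg.start),
                                     weights.begin() + static_cast<std::ptrdiff_t>(seg.getEnd()));
}

// Picks (height, width) for a mean image the way the loop above does:
// the last two dimensions of a 3-, 4- or 5-D input shape.
std::pair<std::size_t, std::size_t> meanImageHW(const std::vector<std::size_t>& dims) {
    if (dims.size() < 3 || dims.size() > 5)
        throw std::runtime_error("unsupported layout");
    return {dims[dims.size() - 2], dims[dims.size() - 1]};
}
```

For a 4-D NCHW input such as {1, 3, 224, 224}, `meanImageHW` returns (224, 224), matching the `dims.at(2)` / `dims.at(3)` branch in SetWeights.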

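Summary

Reading both the network and the weights ultimately comes down to two main FormatParser functions, Parse and SetWeights, which parse the files. The parsed data is then managed uniformly through ICNNNetReader.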