OpenVINO InferenceEngine: FormatParser

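Contents

FormatParser::Parse

Creating the CNNNetwork class

Getting all layer nodes

Parsing each layer's data

Getting the layer id

Getting the layer type

Getting the layer name

Getting the layer output

Parsing the input

Parsing the weight information

Creating the layer

Getting the creator

CreateLayer

Creating a generic layer

Saving the layer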

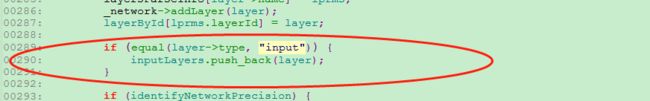

Saving the input layers

Setting the network precision

Setting the layer outputs

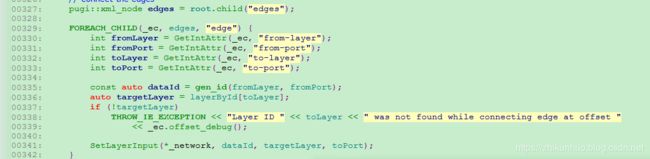

Setting inputs from the network's edge information

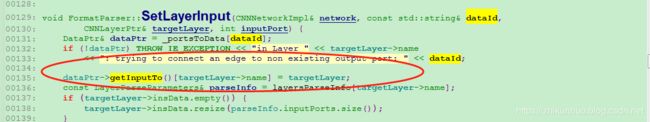

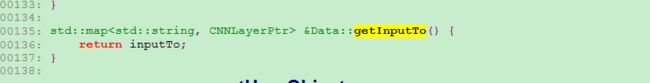

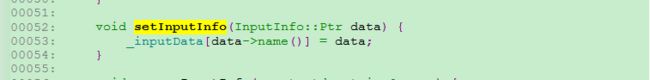

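SetLayerInput()

Setting the network inputs

Checking all Data of the network layers

Handling the statistics section

Counting the input layers

Validating each layer

Pre-processing

Extracting the network outputs

Setting the network output precision

Summary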

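The FormatParser class encapsulates IR file parsing: it parses an IR file into a CNNNetwork object. It sits at the lowest level of the library, so ordinary users do not need to care about the parsing process. My project required a deeper understanding of how CNNNetwork is managed, so I read through the parsing code along the way and wrote these notes.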

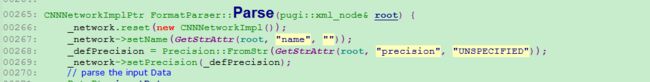

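FormatParser::Parse

FormatParser::Parse parses the network topology XML file. The flow is long, so it is examined step by step:

Creating the CNNNetwork class

A CNNNetwork object is created and stored in the _network member:

The name attribute of the net node in the topology file is read and used as the network name, and the network precision is configured from the precision given on the net node.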

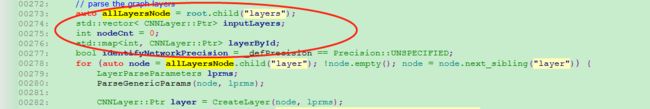

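Getting all layer nodes

All child nodes of the layers node, that is, all the layers, are fetched:

Once all the nodes have been obtained, each layer's data is parsed in turn.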

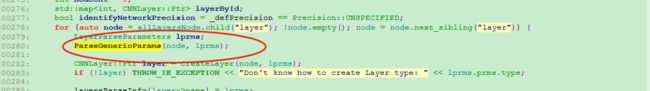

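Parsing each layer's data

Each layer's data is parsed and the result is stored in a LayerParseParameters variable, lprms:

ParseGenericParams is called to parse the layer node. The function is long but simple, and reading it is a good way to learn essentially every node that can appear under a layer node.

Getting the layer id

The layer id is parsed. It is unique per layer and usually follows the order in which the layers appear in the network structure, starting from 0:

Getting the layer type

The layer type is the type of each layer in the network:

The layer type is stored in the parameters. The layer types OpenVINO supports are listed in the Supported Framework Layers documentation, and they can also be seen in the code of the layer-creation step later on.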

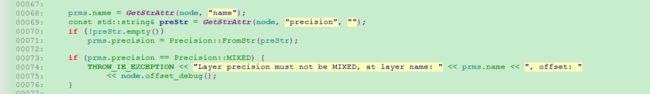

Getting the layer name

The layer name is read and the precision of each layer is set.

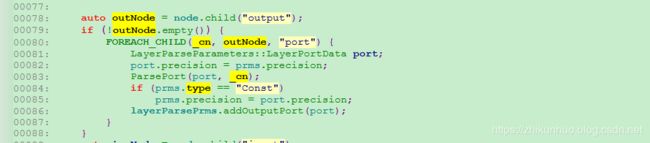

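Getting the layer output

The output parameters of each layer are read.

All child nodes under output are fetched. The FOREACH_CHILD macro expands as follows:

#define FOREACH_CHILD(c, p, tag) for (auto c = p.child(tag); !c.empty(); c = c.next_sibling(tag))

The macro is used to iterate over every node under output.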

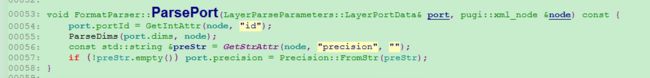

The ParsePort() function does the parsing:

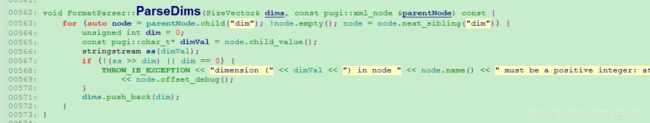

ParseDims() parses the dim tags:

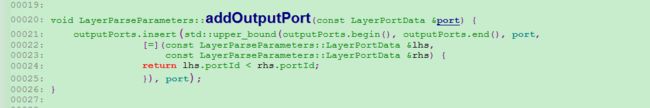

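The dim values are stored as a vector. The output information finally ends up in outputPorts.

Parsing the input

The information under the input node is extracted as the layer's input parameters and stored in inputPorts:

The processing mirrors that of output and is not described in detail.

Parsing the weight information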

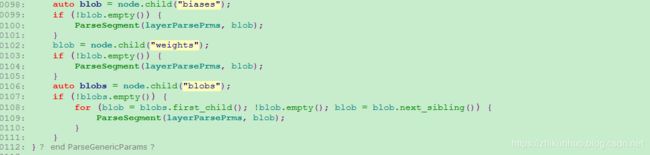

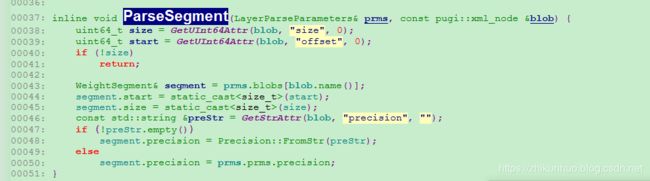

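The weights and biases of each layer are parsed:

As I understand it, there are two forms of representation; the first uses

That is the whole process of parsing and extracting a layer node's information; it is fairly simple.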

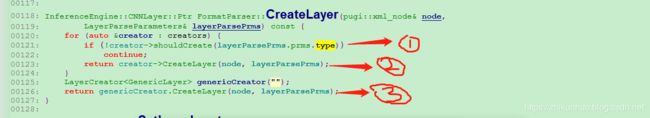

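Creating the layer

Once the layer parameters have been parsed, the layer is created from them by calling FormatParser::CreateLayer.

Creating a layer takes three main steps:

1: Go through the registered creators and check whether one of them supports this layer type.

2: If a creator supports the layer type, use it to create the layer.

3: If no creator supports it, create a generic layer (GenericLayer).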
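The three steps above can be sketched with plain standard-library C++. This is only an illustration under stated assumptions: Layer, Creator, shouldCreate, and createLayer are hypothetical stand-ins rather than the Inference Engine classes, and the type comparison here is a plain string equality.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for CNNLayer: only the type and a "generic" flag.
struct Layer {
    std::string type;
    bool generic;
};

// Hypothetical stand-in for a layer creator bound to one type name.
struct Creator {
    std::string type;
    explicit Creator(std::string t) : type(std::move(t)) {}
    // Step 1: does this creator support the requested type?
    bool shouldCreate(const std::string& t) const { return t == type; }
    // Step 2: build a layer of the supported type.
    std::shared_ptr<Layer> create() const {
        return std::make_shared<Layer>(Layer{type, false});
    }
};

// Walks the registered creators; falls back to a generic layer (step 3).
std::shared_ptr<Layer> createLayer(const std::vector<Creator>& creators,
                                   const std::string& type) {
    for (const auto& c : creators) {
        if (c.shouldCreate(type)) return c.create();
    }
    return std::make_shared<Layer>(Layer{type, true});
}
```

With creators for "Convolution" and "Pooling" registered, a request for "Pooling" yields a typed layer, while an unknown type such as "MyCustomOp" yields a generic one that still carries the requested type name.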

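Getting the creator

A layer creator is essentially a pointer to a creator object for the corresponding layer type. All the layer types are listed below: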

FormatParser::FormatParser(size_t version): _version(version) {

// there should be unique_ptr but it cant be used with initializer lists

creators = {

std::make_shared>("Power"),

std::make_shared>("Convolution"),

std::make_shared>("Deconvolution"),

std::make_shared>("DeformableConvolution"),

std::make_shared>("Pooling"),

std::make_shared>("InnerProduct"),

std::make_shared>("FullyConnected"),

std::make_shared>("LRN"),

std::make_shared>("Norm"),

std::make_shared>("Softmax"),

std::make_shared>("LogSoftmax"),

std::make_shared>("GRN"),

std::make_shared>("MVN"),

std::make_shared>("ReLU"),

std::make_shared>("Clamp"),

std::make_shared>("Split"),

std::make_shared>("Slice"),

std::make_shared>("Concat"),

std::make_shared>("Eltwise"),

std::make_shared>("Gemm"),

std::make_shared>("Pad"),

std::make_shared>("Gather"),

std::make_shared>("StridedSlice"),

std::make_shared>("ShuffleChannels"),

std::make_shared>("DepthToSpace"),

std::make_shared>("SpaceToDepth"),

std::make_shared>("SparseFillEmptyRows"),

std::make_shared>("ReverseSequence"),

std::make_shared>("Squeeze"),

std::make_shared>("Unsqueeze"),

std::make_shared>("Range"),

std::make_shared>("Broadcast"),

std::make_shared>("ScaleShift"),

std::make_shared>("PReLU"),

std::make_shared>("Crop"),

std::make_shared>("Reshape"),

std::make_shared>("Flatten"),

std::make_shared>("Tile"),

std::make_shared("Activation"),

std::make_shared>("BatchNormalization"),

std::make_shared("TensorIterator"),

std::make_shared>("LSTMCell"),

std::make_shared>("GRUCell"),

std::make_shared>("RNNCell"),

std::make_shared>("OneHot"),

std::make_shared>("RNNSequence"),

std::make_shared>("GRUSequence"),

std::make_shared>("LSTMSequence"),

std::make_shared>("BinaryConvolution"),

std::make_shared>("Select"),

std::make_shared>("Abs"),

std::make_shared>("Acos"),

std::make_shared>("Acosh"),

std::make_shared>("Asin"),

std::make_shared>("Asinh"),

std::make_shared>("Atan"),

std::make_shared>("Atanh"),

std::make_shared>("Ceil"),

std::make_shared>("Cos"),

std::make_shared>("Cosh"),

std::make_shared>("Erf"),

std::make_shared>("Floor"),

std::make_shared>("HardSigmoid"),

std::make_shared>("Log"),

std::make_shared>("Neg"),

std::make_shared>("Reciprocal"),

std::make_shared>("Selu"),

std::make_shared>("Sign"),

std::make_shared>("Sin"),

std::make_shared>("Sinh"),

std::make_shared>("Softplus"),

std::make_shared>("Softsign"),

std::make_shared>("Tan"),

std::make_shared>("ReduceAnd"),

std::make_shared>("ReduceL1"),

std::make_shared>("ReduceL2"),

std::make_shared>("ReduceLogSum"),

std::make_shared>("ReduceLogSumExp"),

std::make_shared>("ReduceMax"),

std::make_shared>("ReduceMean"),

std::make_shared>("ReduceMin"),

std::make_shared>("ReduceOr"),

std::make_shared>("ReduceProd"),

std::make_shared>("ReduceSum"),

std::make_shared>("ReduceSumSquare"),

std::make_shared>("GatherTree"),

std::make_shared>("TopK"),

std::make_shared>("Unique"),

std::make_shared>("NonMaxSuppression"),

std::make_shared>("ScatterUpdate")

};

creators.emplace_back(_version < 6 ? std::make_shared>("Quantize") :

std::make_shared>("FakeQuantize"));

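The code above lists every layer type OpenVINO supports. If the topology uses a type that is not in the list, a generic layer is created instead. All of these layer types inherit from CNNLayer. The pointer obtained is a LayerCreator pointer, and that class is responsible for producing a layer.

CreateLayer

Layer creation ultimately calls the CreateLayer method of the LayerCreator class. The code is as follows: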

CNNLayer::Ptr CreateLayer(pugi::xml_node& node, LayerParseParameters& layerParsePrms) override {

auto res = std::make_shared<LT>(layerParsePrms.prms);

if (res->type == "FakeQuantize")

res->type = "Quantize";

if (std::is_same<LT, FullyConnectedLayer>::value) {

layerChild[res->name] = {"fc", "fc_data", "data"};

} else if (std::is_same<LT, NormLayer>::value) {

layerChild[res->name] = {"lrn", "norm", "norm_data", "data"};

} else if (std::is_same<LT, CropLayer>::value) {

layerChild[res->name] = {"crop", "crop-data", "data"};

} else if (std::is_same<LT, BatchNormalizationLayer>::value) {

layerChild[res->name] = {"batch_norm", "batch_norm_data", "data"};

} else if ((std::is_same<LT, EltwiseLayer>::value)) {

layerChild[res->name] = {"elementwise", "elementwise_data", "data"};

} else {

layerChild[res->name] = {"data", tolower(res->type) + "_data", tolower(res->type)};

}

pugi::xml_node dn = GetChild(node, layerChild[res->name], false);

if (!dn.empty()) {

if (dn.child("crop").empty()) {

for (auto ait = dn.attributes_begin(); ait != dn.attributes_end(); ++ait) {

pugi::xml_attribute attr = *ait;

res->params.emplace(attr.name(), attr.value());

}

} else {

if (std::is_same<LT, CropLayer>::value) {

auto crop_res = std::dynamic_pointer_cast<CropLayer>(res);

if (!crop_res) {

THROW_IE_EXCEPTION << "Crop layer is nullptr";

}

std::string axisStr, offsetStr, dimStr;

FOREACH_CHILD(_cn, dn, "crop") {

int axis = GetIntAttr(_cn, "axis", 0);

crop_res->axis.push_back(axis);

axisStr += std::to_string(axis) + ",";

int offset = GetIntAttr(_cn, "offset", 0);

crop_res->offset.push_back(offset);

offsetStr += std::to_string(offset) + ",";

}

if (!axisStr.empty() && !offsetStr.empty() && !dimStr.empty()) {

res->params["axis"] = axisStr.substr(0, axisStr.size() - 1);

res->params["offset"] = offsetStr.substr(0, offsetStr.size() - 1);

}

}

}

}

return res;

}

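std::map<std::string, std::vector<std::string>> layerChild;

};

First an object of the corresponding layer type is created through a smart pointer, and then its parameters are saved. For ordinary layers the parameters usually live in a child tag such as data; for data, the attributes of the tag are stored as name/value pairs in the layer's params map.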

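Creating a generic layer

If the topology uses a special layer that OpenVINO does not support, which is usually treated as a custom layer, a generic layer is created for it. GenericLayer is essentially just a CNNLayer:

The creation still ends up calling the CreateLayer method of the LayerCreator class.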

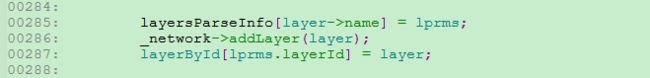

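Saving the layer

The created layer is added to the network, together with its lprms and layer id:

Saving the input layers

Input layers are additionally recorded in inputLayers:

Setting the network precision

If the network precision has not been set, the layer's precision is used as the network precision:

Setting the layer outputs

A guiding principle throughout the network handling is that data memory is requested at a layer's output, which avoids requesting the same memory twice.

This step sets the layer's outputs and allocates space for them: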

for (int i = 0; i < lprms.outputPorts.size(); i++) {

const auto &outPort = lprms.outputPorts[i];

const auto outId = gen_id(lprms.layerId, outPort.portId);

const std::string outName = lprms.outputPorts.size() == 1

? lprms.prms.name

: lprms.prms.name + "." + std::to_string(i);

DataPtr& ptr = _network->getData(outName.c_str());

if (!ptr) {

ptr.reset(new Data(outName, outPort.dims, outPort.precision, TensorDesc::getLayoutByDims(outPort.dims)));

ptr->setDims(outPort.dims);

}

_portsToData[outId] = ptr;

if (ptr->getCreatorLayer().lock())

THROW_IE_EXCEPTION << "two layers set to the same output [" << outName << "], conflict at offset "

<< node.offset_debug();

ptr->getCreatorLayer() = layer;

layer->outData.push_back(ptr);

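}

Each created output is recorded in _portsToData, keyed by the id that gen_id builds from the layer id and the port id.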
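The naming rule in the loop above is easy to miss: a layer with a single output reuses the layer name for its Data, while a layer with several outputs appends the output index. The sketch below isolates that rule. The helper names outputName and makePortKey are hypothetical, and the "<layerId>.<portId>" key format is an assumption about what gen_id produces, not something shown in the excerpt.

```cpp
#include <cstddef>
#include <string>

// Assumed key format for a (layer id, port id) pair: "<layerId>.<portId>".
std::string makePortKey(int layerId, int portId) {
    return std::to_string(layerId) + "." + std::to_string(portId);
}

// One output keeps the layer name; several outputs get ".<index>" appended.
std::string outputName(const std::string& layerName,
                       std::size_t outputCount,
                       std::size_t index) {
    return outputCount == 1 ? layerName
                            : layerName + "." + std::to_string(index);
}
```

For a layer named split with two outputs, the second output would be called split.1 under this rule.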

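Setting inputs from the network's edge information

Inputs are set from the edges section, which describes the network's connections:

Here from-layer is the id of the upstream layer, from-port identifies which output port of the upstream layer the data comes from, to-layer is the id of the current (downstream) layer, and to-port is the index of the input port on the current layer.

The CNNLayer is looked up from to-layer, and finally SetLayerInput is called to set each layer's input.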
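To make the edge handling concrete, here is a minimal sketch that binds each edge's destination port to the Data produced at its source port. Edge, portKey, and connectEdges are hypothetical names, Data objects are reduced to plain strings, and edges whose source port is unknown are silently skipped here, which is a simplification.

```cpp
#include <map>
#include <string>
#include <vector>

// One entry of the edges section.
struct Edge {
    int fromLayer, fromPort, toLayer, toPort;
};

// Hypothetical key builder for a (layer id, port id) pair.
std::string portKey(int layerId, int portId) {
    return std::to_string(layerId) + "." + std::to_string(portId);
}

// portsToData maps an output port key to the name of the Data it produces.
// For every edge, the consumer's input port is bound to that same Data.
std::map<std::string, std::string> connectEdges(
        std::map<std::string, std::string> portsToData,
        const std::vector<Edge>& edges) {
    for (const auto& e : edges) {
        auto it = portsToData.find(portKey(e.fromLayer, e.fromPort));
        if (it == portsToData.end()) continue;  // unknown producer: skipped in this sketch
        const std::string dataName = it->second;
        portsToData[portKey(e.toLayer, e.toPort)] = dataName;
    }
    return portsToData;
}
```

After wiring, both the producer's output port and the consumer's input port resolve to the same Data, which mirrors how the parser lets two layers share one Data object.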

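SetLayerInput()

This function mainly sets the input of each layer:

First, every layer that this Data feeds is recorded in the Data object's inputTo member, which maps to each CNNLayer that consumes the Data as input.

inputTo is defined as follows: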

std::map<std::string, CNNLayerPtr> inputTo;

dataPtr->getInputTo()[targetLayer->name] = targetLayer;

const LayerParseParameters& parseInfo = layersParseInfo[targetLayer->name];

if (targetLayer->insData.empty()) {

targetLayer->insData.resize(parseInfo.inputPorts.size());

}

for (unsigned i = 0; i < parseInfo.inputPorts.size(); i++) {

if (parseInfo.inputPorts[i].portId != inputPort) continue;

if (parseInfo.inputPorts[i].precision != dataPtr->getPrecision()) {

if (dataPtr->getPrecision() == Precision::UNSPECIFIED) {

dataPtr->setPrecision(parseInfo.inputPorts[i].precision);

} else {

// TODO: Make a correct exception

/*THROW_IE_EXCEPTION << "in Layer " << targetLayer->name

<< ": trying to connect an edge to mismatch precision of output port: "

<< dataPtr->getName();*/

}

}

if (!equal(parseInfo.inputPorts[i].dims, dataPtr->getDims()))

THROW_IE_EXCEPTION << "in Layer " << targetLayer->name

<< ": trying to connect an edge to mismatch dimensions of output port: "

<< dataPtr->getName()

<< " dims input: " << dumpVec(parseInfo.inputPorts[i].dims)

<< " dims output: " << dumpVec(dataPtr->getDims());

targetLayer->insData[i] = dataPtr;

const auto insId = gen_id(parseInfo.layerId, parseInfo.inputPorts[i].portId);

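_portsToData[insId] = dataPtr;

Then the layer's insData entry is pointed at this Data, and the Data is registered in _portsToData under the key of the input port.

Setting the network inputs

Finally, the network inputs are set: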

auto keep_input_info = [&] (DataPtr &in_data) {

InputInfo::Ptr info(new InputInfo());

info->setInputData(in_data);

Precision prc = info->getPrecision();

// Convert precision into native format (keep element size)

prc = prc == Precision::Q78 ? Precision::I16 :

prc == Precision::FP16 ? Precision::FP32 :

static_cast<Precision::ePrecision>(prc);

info->setPrecision(prc);

_network->setInputInfo(info);

};

// Keep all data from InputLayers

for (auto inLayer : inputLayers) {

if (inLayer->outData.size() != 1)

THROW_IE_EXCEPTION << "Input layer must have 1 output. "

"See documentation for details.";

keep_input_info(inLayer->outData[0]);

}

The outputs of the input layers are saved into _inputData:
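The precision conversion inside keep_input_info can be isolated as a small mapping. The sketch below uses a hypothetical Prec enum rather than InferenceEngine::Precision and shows only the two special cases that appear in the code above; every other value passes through unchanged.

```cpp
// Hypothetical stand-in for the precision enum; only a few values are listed.
enum class Prec { FP32, FP16, Q78, I16, U8 };

// Maps storage precisions to the precision used for the network input:
// Q78 becomes I16, FP16 becomes FP32, anything else is kept as it is.
Prec toNativeInputPrecision(Prec p) {
    switch (p) {
        case Prec::Q78:  return Prec::I16;
        case Prec::FP16: return Prec::FP32;
        default:         return p;
    }
}
```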

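Checking all Data of the network layers

Finally, every layer is checked to make sure all of its inputs have been created, to prevent failures later at run time. If the network is connected correctly this situation normally does not occur: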

for (auto &kvp : _network->allLayers()) {

const CNNLayer::Ptr& layer = kvp.second;

auto pars_info = layersParseInfo[layer->name];

if (layer->insData.empty())

layer->insData.resize(pars_info.inputPorts.size());

for (int i = 0; i < layer->insData.size(); i++) {

if (!layer->insData[i].lock()) {

std::string data_name = (layer->insData.size() == 1)

? layer->name

: layer->name + "." + std::to_string(i);

DataPtr data(new Data(data_name,

pars_info.inputPorts[i].dims,

pars_info.inputPorts[i].precision,

TensorDesc::getLayoutByDims(pars_info.inputPorts[i].dims)));

data->setDims(pars_info.inputPorts[i].dims);

layer->insData[i] = data;

data->getInputTo()[layer->name] = layer;

const auto insId = gen_id(pars_info.layerId, pars_info.inputPorts[i].portId);

_portsToData[insId] = data;

keep_input_info(data);

}

}

}
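The loop above can be reduced to the following sketch: every unconnected input slot receives a placeholder Data, named with the same single-versus-indexed rule used for outputs, and the placeholder is reported so it can be registered as a network input. LayerStub and fillMissingInputs are hypothetical names, and an empty string stands in for an expired insData pointer.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a layer: its name and the Data name on each input.
struct LayerStub {
    std::string name;
    std::vector<std::string> inputs;  // empty string means "not connected"
};

// Gives every unconnected input slot a placeholder Data name and returns the
// names that were created, so the caller can register them as network inputs.
std::vector<std::string> fillMissingInputs(LayerStub& layer) {
    std::vector<std::string> created;
    for (std::size_t i = 0; i < layer.inputs.size(); ++i) {
        if (!layer.inputs[i].empty()) continue;
        layer.inputs[i] = layer.inputs.size() == 1
                              ? layer.name
                              : layer.name + "." + std::to_string(i);
        created.push_back(layer.inputs[i]);
    }
    return created;
}
```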

Handling the statistics section

If the topology contains a statistics section, it is processed:

void FormatParser::ParseStatisticSection(const pugi::xml_node& statNode) {

auto splitParseCommas = [&](const string& s) ->vector<float> {

vector<float> res;

stringstream ss(s);

float val;

while (ss >> val) {

res.push_back(val);

if (ss.peek() == ',')

ss.ignore();

}

return res;

};

map<string, NetworkNodeStatsPtr> newNetNodesStats;

for (auto layer : statNode.children("layer")) {

NetworkNodeStatsPtr nodeStats = NetworkNodeStatsPtr(new NetworkNodeStats());

string name = layer.child("name").text().get();

newNetNodesStats[name] = nodeStats;

nodeStats->_minOutputs = splitParseCommas(layer.child("min").text().get());

nodeStats->_maxOutputs = splitParseCommas(layer.child("max").text().get());

}

ICNNNetworkStats *pstats = nullptr;

StatusCode s = _network->getStats(&pstats, nullptr);

if (s == StatusCode::OK && pstats) {

pstats->setNodesStats(newNetNodesStats);

}

}
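The splitParseCommas lambda is the only non-trivial logic in this function, so it is worth trying in isolation. The following is a standalone version of the same loop; the function name splitCommaFloats is made up for this sketch.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Reads floats separated by commas (optionally followed by whitespace).
// Parsing stops at the first token that cannot be read as a float.
std::vector<float> splitCommaFloats(const std::string& s) {
    std::vector<float> res;
    std::stringstream ss(s);
    float val;
    while (ss >> val) {
        res.push_back(val);
        if (ss.peek() == ',') ss.ignore();  // skip the separator
    }
    return res;
}
```

Given "0.5, 1.25,3" it returns three values, and an empty string gives an empty vector. This is how the min and max texts of each statistics entry become the _minOutputs and _maxOutputs vectors.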

Counting the input layers

The number of layers that act as inputs is counted:

size_t inputLayersNum(0);

CaselessEq<std::string> cmp;

for (const auto& kvp : _network->allLayers()) {

const CNNLayer::Ptr& layer = kvp.second;

if (cmp(layer->type, "Input") || cmp(layer->type, "Const"))

inputLayersNum++;

}

Validating each layer

Each layer is checked for validity to prevent errors later on:

if (!inputLayersNum && !cmp(root.name(), "body"))

THROW_IE_EXCEPTION << "Incorrect model! Network doesn't contain input layers.";

// check all input ports are occupied

for (const auto& kvp : _network->allLayers()) {

const CNNLayer::Ptr& layer = kvp.second;

const LayerParseParameters& parseInfo = layersParseInfo[layer->name];

size_t inSize = layer->insData.size();

if (inSize != parseInfo.inputPorts.size())

THROW_IE_EXCEPTION << "Layer " << layer->name << " does not have any edge connected to it";

for (unsigned i = 0; i < inSize; i++) {

if (!layer->insData[i].lock()) {

THROW_IE_EXCEPTION << "Layer " << layer->name.c_str() << " input port "

<< parseInfo.inputPorts[i].portId << " is not connected to any data";

}

}

layer->validateLayer();

}

Pre-processing

Pre-processing information in the topology is handled next; it is declared with the pre-process tag:

void FormatParser::ParsePreProcess(pugi::xml_node& root) {

/*

// in case of constant

// or

// in case of array - ref to the .bin file

*/

auto ppNode = root.child("pre-process");

if (ppNode.empty()) {

return;

}

// find out to what input this belongs to

std::string inputName;

InputInfo::Ptr preProcessInput;

inputName = GetStrAttr(ppNode, "reference-layer-name", "");

inputName = trim(inputName);

if (inputName.empty()) {

// fallback (old format), look for the picture in the inputs

InputsDataMap inputs;

_network->getInputsInfo(inputs);

if (inputs.empty()) THROW_IE_EXCEPTION << "network has no input";

for (auto i : inputs) {

if (i.second->getTensorDesc().getDims().size() == 4) {

preProcessInput = i.second;

break;

}

}

if (!preProcessInput) {

preProcessInput = inputs.begin()->second;

}

inputName = preProcessInput->name();

} else {

preProcessInput = _network->getInput(inputName);

if (!preProcessInput)

THROW_IE_EXCEPTION << "pre-process name ref '" << inputName << "' refers to un-existing input";

}

// dims vector without batch size

SizeVector inputDims = preProcessInput->getTensorDesc().getDims();

size_t noOfChannels = 0, width = 0, height = 0;

if (inputDims.size() < 2) {

THROW_IE_EXCEPTION << "network did not define input dimensions properly";

} else if (inputDims.size() == 2) { // NC

noOfChannels = inputDims[1];

width = inputDims[1];

height = inputDims[0];

} else if (inputDims.size() == 3) {

width = inputDims[2];

height = inputDims[1];

noOfChannels = inputDims[0];

} else if (inputDims.size() == 4) {

width = inputDims[3];

height = inputDims[2];

noOfChannels = inputDims[1];

} else if (inputDims.size() == 5) {

width = inputDims[4];

height = inputDims[3];

noOfChannels = inputDims[2];

}

PreProcessInfo &pp = preProcessInput->getPreProcess();

std::vector<WeightSegment> &segments = _preProcessSegments[inputName];

pp.init(noOfChannels);

segments.resize(noOfChannels);

auto meanSegmentPrecision = GetPrecisionAttr(ppNode, "mean-precision", Precision::UNSPECIFIED);

ResponseDesc resp;

InferenceEngine::PreProcessChannel::Ptr preProcessChannel;

int lastChanNo = -1;

std::unordered_set<int> idsForMeanValue;

std::unordered_set<int> idsForMeanImage;

FOREACH_CHILD(chan, ppNode, "channel") {

int chanNo = GetIntAttr(chan, "id", lastChanNo + 1);

if (chanNo >= static_cast<int>(noOfChannels) || chanNo < 0) {

THROW_IE_EXCEPTION << "Pre-process channel id invalid: " << chanNo;

}

lastChanNo = chanNo;

preProcessChannel = pp[chanNo];

WeightSegment& preProcessSegment = segments[chanNo];

auto meanNode = chan.child("mean");

if (!meanNode.empty()) {

if (!meanNode.attribute("value") && (!meanNode.attribute("size"))) {

THROW_IE_EXCEPTION << "mean should have at least one of the following attribute: value, size";

}

if (meanNode.attribute("value")) {

preProcessChannel->meanValue = GetFloatAttr(meanNode, "value");

idsForMeanValue.insert(chanNo);

}

if (meanNode.attribute("size")) {

idsForMeanImage.insert(chanNo);

preProcessSegment.size = static_cast<size_t>(GetIntAttr(meanNode, "size"));

preProcessSegment.start = static_cast<size_t>(GetIntAttr(meanNode, "offset"));

preProcessSegment.precision = meanSegmentPrecision;

if (width*height*meanSegmentPrecision.size() != preProcessSegment.size) {

THROW_IE_EXCEPTION << "mean blob size mismatch expected input, got: "

<< preProcessSegment.size << " extpecting " << width

<< " x " << height << " x " << meanSegmentPrecision.size();

}

if (!meanSegmentPrecision || meanSegmentPrecision == Precision::MIXED)

THROW_IE_EXCEPTION << "mean blob defined without specifying precision.";

}

}

auto scaleNode = chan.child("scale");

if (!scaleNode.empty() && scaleNode.attribute("value")) {

preProcessChannel->stdScale = GetFloatAttr(scaleNode, "value");

}

}

if (idsForMeanImage.size() == noOfChannels) {

pp.setVariant(MEAN_IMAGE);

} else if (idsForMeanValue.size() == noOfChannels) {

pp.setVariant(MEAN_VALUE);

} else if ((idsForMeanImage.size() == 0) && (idsForMeanValue.size() == 0)) {

pp.setVariant(NONE);

} else {

std::string validMeanValuesIds = "";

std::string validMeanImageIds = "";

for (auto id : idsForMeanValue) { validMeanValuesIds += std::to_string(id) + " "; }

for (auto id : idsForMeanImage) { validMeanImageIds += std::to_string(id) + " "; }

THROW_IE_EXCEPTION << "mean is not provided for all channels\n"

"Provided mean values for : " << validMeanValuesIds << "\n"

"Provided mean image for: " << validMeanImageIds;

}

}
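The final branch of ParsePreProcess enforces an all-or-nothing rule: either every channel has a mean image, or every channel has a mean value, or no channel has either. A minimal sketch of that decision follows. MeanVariant and chooseVariant are hypothetical names, and std::invalid_argument replaces THROW_IE_EXCEPTION.

```cpp
#include <cstddef>
#include <set>
#include <stdexcept>

enum class MeanVariant { None, MeanValue, MeanImage };

// meanValueIds / meanImageIds hold the channel ids for which a mean value or
// a mean image was declared. A partial declaration is rejected.
MeanVariant chooseVariant(const std::set<int>& meanValueIds,
                          const std::set<int>& meanImageIds,
                          std::size_t channels) {
    if (meanImageIds.size() == channels) return MeanVariant::MeanImage;
    if (meanValueIds.size() == channels) return MeanVariant::MeanValue;
    if (meanImageIds.empty() && meanValueIds.empty()) return MeanVariant::None;
    throw std::invalid_argument("mean is not provided for all channels");
}
```

For a three-channel input, declaring a mean value for channel 0 only is rejected, while declaring it for channels 0, 1 and 2 selects the mean-value variant.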

Extracting the network outputs

The network's output data is extracted by calling CNNNetworkImpl::resolveOutput:

void CNNNetworkImpl::resolveOutput() {

// check orphan nodes...

for (auto kvp : _data) {

if (!kvp.second->isInitialized())

THROW_IE_EXCEPTION << "data name [" << kvp.first << "] dimensions is not known";

// data nodes not going to any layer are basically graph output...

if (kvp.second->getInputTo().empty()) {

_outputData[kvp.first] = kvp.second;

}

}

}

If a Data object is not consumed as input by any layer, it is treated as a network output.
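The rule can be illustrated without any Inference Engine types. In the sketch below, the map from Data name to consumer layer names is a hypothetical stand-in for each Data object's inputTo, and findOutputs is a made-up helper name.

```cpp
#include <map>
#include <string>
#include <vector>

// consumers maps each Data name to the names of the layers that read it.
// Data with no consumers is reported as a network output.
std::vector<std::string> findOutputs(
        const std::map<std::string, std::vector<std::string>>& consumers) {
    std::vector<std::string> outputs;
    for (const auto& kv : consumers) {
        if (kv.second.empty()) outputs.push_back(kv.first);
    }
    return outputs;
}
```

In a chain input -> conv1 -> prob, only the Data named prob has no consumer, so it is the single network output.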

Setting the network output precision

The precision of the network outputs is set:

// Set default output precision to FP32 (for back-compatibility)

OutputsDataMap outputsInfo;

_network->getOutputsInfo(outputsInfo);

for (auto outputInfo : outputsInfo) {

if (outputInfo.second->getPrecision() != Precision::FP32 &&

outputInfo.second->getPrecision() != Precision::I32) {

outputInfo.second->setPrecision(Precision::FP32);

}

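}

Summary

The whole parsing process is tedious, but understanding it is important for understanding how OpenVINO represents a network. In the end the entire topology and its parameters are stored in _network. This walkthrough covers essentially all of the processing in the FormatParser class.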