Python Natural Language Processing (Jalaj Thanaki), study notes 02: Corpora and Datasets

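What is a corpus?

Why do we need a corpus?

Understanding corpus analysis

Types of data attributes

Different file formats for corpora

Resources for free corpora

Preparing a dataset for NLP applications

Web scraping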

What is a corpus?

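A corpus is a large collection of data, which can take the following forms:

- Text data, meaning written material

- Speech data, meaning spoken material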

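By the number of languages they contain, there are three types of corpus:

- Monolingual corpus: contains only one language

- Bilingual corpus: contains two languages

- Multilingual corpus: contains more than two languages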

Examples of well-known corpora:

- Google Books Ngram corpus

- Brown corpus

- American National Corpus

Why do we need a corpus?

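Creating a corpus involves the following challenges:

- Deciding what type of data we need to solve the problem statement

- Availability of the data

- Quality of the data

- Adequacy of the data in terms of quantity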

Understanding corpus analysis

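NLTK corpora come in four kinds of structure:

- Isolate corpus: a plain collection of texts with no categories (e.g. gutenberg, webtext)

- Categorized corpus: each text belongs to a category such as a genre (e.g. brown)

- Overlapping corpus: a text can belong to several categories at once (e.g. reuters)

- Temporal corpus: texts are organized by time period (e.g. inaugural)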
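To make the four structures concrete, here is a toy sketch in plain Python that needs no NLTK data. Every file ID, category and year in it is hypothetical; in NLTK itself this information comes from a corpus reader's fileids() method and, for categorized corpora, its categories() method.

```python
# Toy model of the four corpus structures (all file IDs are hypothetical).
# Each corpus maps a file ID to the set of categories that file belongs to.
isolated = {"text1.txt": set(), "text2.txt": set()}                # no categories at all
categorized = {"f1": {"news"}, "f2": {"fiction"}, "f3": {"news"}}  # one category per file
overlapping = {"f1": {"trade", "grain"}, "f2": {"grain"}}          # a file may have several
temporal = {"1789-A": {"1789"}, "2009-B": {"2009"}}                # category = time period


def fileids_for(corpus, category):
    """Return the sorted file IDs that belong to `category`."""
    return sorted(fid for fid, cats in corpus.items() if category in cats)


def is_overlapping(corpus):
    """True if at least one file belongs to more than one category."""
    return any(len(cats) > 1 for cats in corpus.values())


print(fileids_for(categorized, "news"))   # ['f1', 'f3']
print(fileids_for(overlapping, "grain"))  # ['f1', 'f2']
print(fileids_for(temporal, "2009"))      # ['2009-B']
print(is_overlapping(categorized), is_overlapping(overlapping))  # False True
```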

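import nltk

nltk.download('brown')      # download the corpora once if they are not installed yet

nltk.download('gutenberg')

from nltk.corpus import brown as cb

from nltk.corpus import gutenberg as cg

print(dir(cb)) # attributes of the LazyCorpusLoader object; the corpus itself is not loaded until first use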

['_LazyCorpusLoader__args', '_LazyCorpusLoader__kwargs', '_LazyCorpusLoader__load', '_LazyCorpusLoader__name', '_LazyCorpusLoader__reader_cls', '__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattr__', '__getattribute__', '__gt__', '__hash__', '__init__', '__le__', '__lt__', '__module__', '__name__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__unicode__', '__weakref__', '_unload', 'subdir', 'unicode_repr']

print(cb.categories()) # categories (genres) of the Brown corpus

['adventure', 'belles_lettres', 'editorial', 'fiction', 'government', 'hobbies', 'humor', 'learned', 'lore', 'mystery', 'news', 'religion', 'reviews', 'romance', 'science_fiction']

print(cb.fileids()) # IDs of the files in the Brown corpus

['ca01', 'ca02', 'ca03', 'ca04', 'ca05', 'ca06', 'ca07', 'ca08', 'ca09', 'ca10', 'ca11', 'ca12', 'ca13', 'ca14', 'ca15', 'ca16', 'ca17', 'ca18', 'ca19', 'ca20', 'ca21', 'ca22', 'ca23', 'ca24', 'ca25', 'ca26', 'ca27', 'ca28', 'ca29', 'ca30', 'ca31', 'ca32', 'ca33', 'ca34', 'ca35', 'ca36', 'ca37', 'ca38', 'ca39', 'ca40', 'ca41', 'ca42', 'ca43', 'ca44', 'cb01', 'cb02', 'cb03', 'cb04', 'cb05', 'cb06', 'cb07', 'cb08', 'cb09', 'cb10', 'cb11', 'cb12', 'cb13', 'cb14', 'cb15', 'cb16', 'cb17', 'cb18', 'cb19', 'cb20', 'cb21', 'cb22', 'cb23', 'cb24', 'cb25', 'cb26', 'cb27', 'cc01', 'cc02', 'cc03', 'cc04', 'cc05', 'cc06', 'cc07', 'cc08', 'cc09', 'cc10', 'cc11', 'cc12', 'cc13', 'cc14', 'cc15', 'cc16', 'cc17', 'cd01', 'cd02', 'cd03', 'cd04', 'cd05', 'cd06', 'cd07', 'cd08', 'cd09', 'cd10', 'cd11', 'cd12', 'cd13', 'cd14', 'cd15', 'cd16', 'cd17', 'ce01', 'ce02', 'ce03', 'ce04', 'ce05', 'ce06', 'ce07', 'ce08', 'ce09', 'ce10', 'ce11', 'ce12', 'ce13', 'ce14', 'ce15', 'ce16', 'ce17', 'ce18', 'ce19', 'ce20', 'ce21', 'ce22', 'ce23', 'ce24', 'ce25', 'ce26', 'ce27', 'ce28', 'ce29', 'ce30', 'ce31', 'ce32', 'ce33', 'ce34', 'ce35', 'ce36', 'cf01', 'cf02', 'cf03', 'cf04', 'cf05', 'cf06', 'cf07', 'cf08', 'cf09', 'cf10', 'cf11', 'cf12', 'cf13', 'cf14', 'cf15', 'cf16', 'cf17', 'cf18', 'cf19', 'cf20', 'cf21', 'cf22', 'cf23', 'cf24', 'cf25', 'cf26', 'cf27', 'cf28', 'cf29', 'cf30', 'cf31', 'cf32', 'cf33', 'cf34', 'cf35', 'cf36', 'cf37', 'cf38', 'cf39', 'cf40', 'cf41', 'cf42', 'cf43', 'cf44', 'cf45', 'cf46', 'cf47', 'cf48', 'cg01', 'cg02', 'cg03', 'cg04', 'cg05', 'cg06', 'cg07', 'cg08', 'cg09', 'cg10', 'cg11', 'cg12', 'cg13', 'cg14', 'cg15', 'cg16', 'cg17', 'cg18', 'cg19', 'cg20', 'cg21', 'cg22', 'cg23', 'cg24', 'cg25', 'cg26', 'cg27', 'cg28', 'cg29', 'cg30', 'cg31', 'cg32', 'cg33', 'cg34', 'cg35', 'cg36', 'cg37', 'cg38', 'cg39', 'cg40', 'cg41', 'cg42', 'cg43', 'cg44', 'cg45', 'cg46', 'cg47', 'cg48', 'cg49', 'cg50', 'cg51', 'cg52', 'cg53', 'cg54', 'cg55', 'cg56', 'cg57', 'cg58', 'cg59', 'cg60', 
'cg61', 'cg62', 'cg63', 'cg64', 'cg65', 'cg66', 'cg67', 'cg68', 'cg69', 'cg70', 'cg71', 'cg72', 'cg73', 'cg74', 'cg75', 'ch01', 'ch02', 'ch03', 'ch04', 'ch05', 'ch06', 'ch07', 'ch08', 'ch09', 'ch10', 'ch11', 'ch12', 'ch13', 'ch14', 'ch15', 'ch16', 'ch17', 'ch18', 'ch19', 'ch20', 'ch21', 'ch22', 'ch23', 'ch24', 'ch25', 'ch26', 'ch27', 'ch28', 'ch29', 'ch30', 'cj01', 'cj02', 'cj03', 'cj04', 'cj05', 'cj06', 'cj07', 'cj08', 'cj09', 'cj10', 'cj11', 'cj12', 'cj13', 'cj14', 'cj15', 'cj16', 'cj17', 'cj18', 'cj19', 'cj20', 'cj21', 'cj22', 'cj23', 'cj24', 'cj25', 'cj26', 'cj27', 'cj28', 'cj29', 'cj30', 'cj31', 'cj32', 'cj33', 'cj34', 'cj35', 'cj36', 'cj37', 'cj38', 'cj39', 'cj40', 'cj41', 'cj42', 'cj43', 'cj44', 'cj45', 'cj46', 'cj47', 'cj48', 'cj49', 'cj50', 'cj51', 'cj52', 'cj53', 'cj54', 'cj55', 'cj56', 'cj57', 'cj58', 'cj59', 'cj60', 'cj61', 'cj62', 'cj63', 'cj64', 'cj65', 'cj66', 'cj67', 'cj68', 'cj69', 'cj70', 'cj71', 'cj72', 'cj73', 'cj74', 'cj75', 'cj76', 'cj77', 'cj78', 'cj79', 'cj80', 'ck01', 'ck02', 'ck03', 'ck04', 'ck05', 'ck06', 'ck07', 'ck08', 'ck09', 'ck10', 'ck11', 'ck12', 'ck13', 'ck14', 'ck15', 'ck16', 'ck17', 'ck18', 'ck19', 'ck20', 'ck21', 'ck22', 'ck23', 'ck24', 'ck25', 'ck26', 'ck27', 'ck28', 'ck29', 'cl01', 'cl02', 'cl03', 'cl04', 'cl05', 'cl06', 'cl07', 'cl08', 'cl09', 'cl10', 'cl11', 'cl12', 'cl13', 'cl14', 'cl15', 'cl16', 'cl17', 'cl18', 'cl19', 'cl20', 'cl21', 'cl22', 'cl23', 'cl24', 'cm01', 'cm02', 'cm03', 'cm04', 'cm05', 'cm06', 'cn01', 'cn02', 'cn03', 'cn04', 'cn05', 'cn06', 'cn07', 'cn08', 'cn09', 'cn10', 'cn11', 'cn12', 'cn13', 'cn14', 'cn15', 'cn16', 'cn17', 'cn18', 'cn19', 'cn20', 'cn21', 'cn22', 'cn23', 'cn24', 'cn25', 'cn26', 'cn27', 'cn28', 'cn29', 'cp01', 'cp02', 'cp03', 'cp04', 'cp05', 'cp06', 'cp07', 'cp08', 'cp09', 'cp10', 'cp11', 'cp12', 'cp13', 'cp14', 'cp15', 'cp16', 'cp17', 'cp18', 'cp19', 'cp20', 'cp21', 'cp22', 'cp23', 'cp24', 'cp25', 'cp26', 'cp27', 'cp28', 'cp29', 'cr01', 'cr02', 'cr03', 'cr04', 'cr05', 'cr06', 'cr07', 'cr08', 
'cr09']

print(cb.words()[0:20]) # first 20 words of the Brown corpus

['The', 'Fulton', 'County', 'Grand', 'Jury', 'said', 'Friday', 'an', 'investigation', 'of', "Atlanta's", 'recent', 'primary', 'election', 'produced', '``', 'no', 'evidence', "''", 'that']

print(cb.words(categories='news')[10:30]) # 20 words of the "news" category, starting at index 10

["Atlanta's", 'recent', 'primary', 'election', 'produced', '``', 'no', 'evidence', "''", 'that', 'any', 'irregularities', 'took', 'place', '.', 'The', 'jury', 'further', 'said', 'in']

print(cb.words(fileids=['cg22'])) # words from the data file with ID "cg22"

['Does', 'our', 'society', 'have', 'a', 'runaway', ',', ...]

print(cb.tagged_words()[0:10]) # first 10 (word, POS tag) pairs

[('The', 'AT'), ('Fulton', 'NP-TL'), ('County', 'NN-TL'), ('Grand', 'JJ-TL'), ('Jury', 'NN-TL'), ('said', 'VBD'), ('Friday', 'NR'), ('an', 'AT'), ('investigation', 'NN'), ('of', 'IN')]

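raw_text = nltk.Text(cb.words('ca01')) # wrap the untagged words of file ca01 in an nltk.Text object

print(raw_text)

raw_text.concordance("jury") # list every occurrence of "jury" with its context; the first line reports the number of matches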

Displaying 18 of 18 matches:

The Fulton County Grand Jury said Friday an investigation of Atla

any irregularities took place . The jury further said in term-end presentment

nducted . The September-October term jury had been charged by Fulton Superior

f such reports was received '' , the jury said , `` considering the widespread

s and the size of this city '' . The jury said it did find that many of Georgi

ng and improving them '' . The grand jury commented on a number of other topic

s '' . Merger proposed However , the jury said it believes `` these two office

The City Purchasing Department , the jury said , `` is lacking in experienced

was also recommended by the outgoing jury . It urged that the next Legislature

e law may be effected '' . The grand jury took a swipe at the State Welfare De

general assistance program '' , the jury said , but the State Welfare Departm

burden '' on Fulton taxpayers . The jury also commented on the Fulton ordinar

d compensation . Wards protected The jury said it found the court `` has incor

om unmeritorious criticisms '' , the jury said . Regarding Atlanta's new multi

w multi-million-dollar airport , the jury recommended `` that when the new man

minate political influences '' . The jury did not elaborate , but it added tha

jail deputies On other matters , the jury recommended that : ( 1 ) Four additi

pension plan for city employes . The jury praised the administration and opera

raw_text.concordance("recent")

Displaying 1 of 1 matches:

riday an investigation of Atlanta's recent primary election produced `` no evi

raw_text.concordance("Music")

no matches
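Text.concordance() prints a key-word-in-context (KWIC) view of a word. NLTK matches case-insensitively, which is why the first "jury" line above shows "Grand Jury". The following is a simplified standard-library sketch of the same idea, not NLTK's implementation; the function name concordance and its width parameter are illustrative assumptions.

```python
def concordance(tokens, word, width=30):
    """Return one context line per case-insensitive match of `word`.

    Each line shows up to `width` characters of left context, the matched
    token, and up to `width` characters of right context.
    """
    target = word.lower()
    lines = []
    for i, tok in enumerate(tokens):
        if tok.lower() == target:
            # Join a window of neighbouring tokens, then trim to `width` chars.
            left = " ".join(tokens[max(0, i - width):i])[-width:]
            right = " ".join(tokens[i + 1:i + 1 + width])[:width]
            lines.append(f"{left:>{width}} {tok} {right}")
    return lines


text = "The Fulton County Grand Jury said Friday . The jury further said in"
for line in concordance(text.split(), "jury", width=20):
    print(line)
print(len(concordance(text.split(), "music")))  # 0, i.e. "no matches"
```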

# raw (tagged) text of Brown corpus file ca02

raw_content = cb.raw("ca02")

print(raw_content[0:500])

Austin/np-hl ,/,-hl Texas/np-hl

--/-- Committee/nn approval/nn of/in Gov./nn-tl Price/np Daniel's/np$ ``/`` abandoned/vbn property/nn ''/'' act/nn seemed/vbd certain/jj Thursday/nr despite/in the/at adamant/jj protests/nns of/in Texas/np bankers/nns ./.

Daniel/np personally/rb led/vbd the/at fight/nn for/in the/at measure/nn ,/, which/wdt he/pps had/hvd watered/vbn down/rp considerably/rb since/in its/pp$ rejection/nn by/in two/cd previous/jj Legislatures/nns-tl ,/, in/in a/at public/jj hear
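In the raw Brown text every token is stored as word/tag with lower-case tags, while cb.tagged_words() shows upper-case (word, tag) pairs. A minimal sketch of that conversion follows; parse_tagged is a hypothetical helper, not NLTK's reader, and it assumes tokens are separated by whitespace.

```python
def parse_tagged(raw, upper_tags=True):
    """Split Brown-style 'word/tag' tokens into (word, tag) pairs.

    rpartition('/') splits on the LAST slash only, so a word that itself
    contains a slash keeps it. Tags are upper-cased to mirror the output of
    cb.tagged_words(). Tokens without a slash are skipped.
    """
    pairs = []
    for token in raw.split():
        word, sep, tag = token.rpartition("/")
        if not sep:  # no tag attached to this token
            continue
        pairs.append((word, tag.upper() if upper_tags else tag))
    return pairs


sample = "Daniel/np personally/rb led/vbd the/at fight/nn ,/, Daniel's/np$"
print(parse_tagged(sample))
# [('Daniel', 'NP'), ('personally', 'RB'), ('led', 'VBD'), ('the', 'AT'),
#  ('fight', 'NN'), (',', ','), ("Daniel's", 'NP$')]
```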

print(cg.fileids()) # file IDs of the Gutenberg corpus

['austen-emma.txt', 'austen-persuasion.txt', 'austen-sense.txt', 'bible-kjv.txt', 'blake-poems.txt', 'bryant-stories.txt', 'burgess-busterbrown.txt', 'carroll-alice.txt', 'chesterton-ball.txt', 'chesterton-brown.txt', 'chesterton-thursday.txt', 'edgeworth-parents.txt', 'melville-moby_dick.txt', 'milton-paradise.txt', 'shakespeare-caesar.txt', 'shakespeare-hamlet.txt', 'shakespeare-macbeth.txt', 'whitman-leaves.txt']

raw_content_cg = cg.raw("burgess-busterbrown.txt")

print(raw_content_cg[0:500])

[The Adventures of Buster Bear by Thornton W. Burgess 1920]

I

BUSTER BEAR GOES FISHING

Buster Bear yawned as he lay on his comfortable bed of leaves and

watched the first early morning sunbeams creeping through the Green

Forest to chase out the Black Shadows. Once more he yawned, and slowly

got to his feet and shook himself. Then he walked over to a big

pine-tree, stood up on his hind legs, reached as high up on the trunk of

the tree as he could, and scratched the bark with his g

num_chars_cg = len(cg.raw("burgess-busterbrown.txt")) # number of characters

print(num_chars_cg)

84663

num_words = len(cg.words("burgess-busterbrown.txt")) # number of word tokens

print(num_words)

18963

num_sents = len(cg.sents("burgess-busterbrown.txt")) # number of sentences

print(num_sents)

1054
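The three counts above depend on how NLTK splits words and sentences. As a rough illustration of what is being counted, this sketch uses simple regular expressions instead of NLTK's tokenizers (an assumption made to keep it dependency-free), so on real text its numbers will not match cg.words() and cg.sents().

```python
import re


def text_stats(text):
    """Return (characters, word tokens, sentences) for a plain-text string.

    Words are runs of word characters or single punctuation marks; a sentence
    ends at '.', '!' or '?' followed by whitespace. Both rules are simplified
    stand-ins for NLTK's tokenizers.
    """
    num_chars = len(text)
    words = re.findall(r"\w+|[^\w\s]", text)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return num_chars, len(words), len(sentences)


sample = "Buster Bear yawned. Then he walked over to a big pine-tree!"
print(text_stats(sample))  # (59, 15, 2)
```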

Types of data attributes

## Exercise 1: count the words in the Brown corpus file with fileid cc12

num_words = len(cb.words(fileids=['cc12']))

print(num_words)

2342

## Exercise 2: create your own corpus file, load it with NLTK, and check the frequency distribution of the corpus

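# encoding="utf-8-sig" strips a UTF-8 byte order mark; the outputs below were produced without it (hence the '锘緼bout' token)

contents = open(r"C:\Users\Administrator\workspace\Jupyter\NLPython\Jalaj Thanaki.txt", encoding="utf-8-sig").read()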

print(len(contents))

481
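The frequency distribution further down contains the token '锘緼bout'. That pattern is typical of a UTF-8 file starting with a byte order mark (BOM) being decoded with a GBK default encoding, which is what open() uses on a Chinese-locale Windows system when no encoding is given. The sketch below shows the effect on made-up bytes; the string content is hypothetical.

```python
# A UTF-8 file saved with a byte order mark (BOM) starts with the bytes EF BB BF.
# These bytes are a made-up example of such a file's beginning.
raw_bytes = b"\xef\xbb\xbfAbout Jalaj"

print(repr(raw_bytes.decode("utf-8")))      # BOM kept as the character '\ufeff'
print(repr(raw_bytes.decode("utf-8-sig")))  # BOM stripped: 'About Jalaj'
# Decoding as GBK turns the BOM (and the following 'A') into Chinese characters.
print(repr(raw_bytes.decode("gbk", errors="replace")))

# When reading from disk, pass the encoding explicitly instead of relying on
# the platform default, e.g. open(path, encoding="utf-8-sig").
```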

# frequency distribution of a corpus (text1, Moby Dick, from the NLTK book data)

from nltk.book import *

fdist1 = FreqDist(text1)

print(fdist1)

print(fdist1.most_common(50))

*** Introductory Examples for the NLTK Book ***

Loading text1, ..., text9 and sent1, ..., sent9

Type the name of the text or sentence to view it.

Type: 'texts()' or 'sents()' to list the materials.

text1: Moby Dick by Herman Melville 1851

text2: Sense and Sensibility by Jane Austen 1811

text3: The Book of Genesis

text4: Inaugural Address Corpus

text5: Chat Corpus

text6: Monty Python and the Holy Grail

text7: Wall Street Journal

text8: Personals Corpus

text9: The Man Who Was Thursday by G . K . Chesterton 1908

[(',', 18713), ('the', 13721), ('.', 6862), ('of', 6536), ('and', 6024), ('a', 4569), ('to', 4542), (';', 4072), ('in', 3916), ('that', 2982), ("'", 2684), ('-', 2552), ('his', 2459), ('it', 2209), ('I', 2124), ('s', 1739), ('is', 1695), ('he', 1661), ('with', 1659), ('was', 1632), ('as', 1620), ('"', 1478), ('all', 1462), ('for', 1414), ('this', 1280), ('!', 1269), ('at', 1231), ('by', 1137), ('but', 1113), ('not', 1103), ('--', 1070), ('him', 1058), ('from', 1052), ('be', 1030), ('on', 1005), ('so', 918), ('whale', 906), ('one', 889), ('you', 841), ('had', 767), ('have', 760), ('there', 715), ('But', 705), ('or', 697), ('were', 680), ('now', 646), ('which', 640), ('?', 637), ('me', 627), ('like', 624)]

import nltk

from nltk import FreqDist

sentence='''This is my sentence. This is a test sentence.'''

tokens = nltk.word_tokenize(sentence)

fdist=FreqDist(tokens)

print(fdist)

data = nltk.word_tokenize(contents)

fdist=FreqDist(data)

print(fdist)

print(fdist.most_common(100))

[(',', 6), ('data', 6), ('.', 5), ('and', 4), ('a', 4), ('science', 3), ('to', 2), ('by', 2), ('is', 2), ('She', 2), ('related', 2), ('scientist', 2), ('learning', 2), ('the', 2), ('Jalaj', 2), ('Thanaki', 1), ('deal', 1), ('artificial', 1), ('place', 1), ('better', 1), ('social', 1), ('also', 1), ('with', 1), ('research', 1), ('processing', 1), ('analytics', 1), ('researcher', 1), ('being', 1), ('make', 1), ('activist', 1), ('big', 1), ('technologies', 1), ('practice', 1), ('锘緼bout', 1), ('profession', 1), ('intelligence', 1), ('language', 1), ('Author', 1), ('lies', 1), ('traveler', 1), ('deep', 1), ('problems', 1), ('nature-lover', 1), ('machine', 1), ('likes', 1), ('world', 1), ('Her', 1), ('Besides', 1), ('in', 1), ('using', 1), ('wants', 1), ('natural', 1), ('interest', 1)]
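nltk.FreqDist is a subclass of collections.Counter, so the counting itself can be reproduced with the standard library. In this sketch a regular expression stands in for nltk.word_tokenize (an assumption; the two agree on this simple sentence but not in general).

```python
import re
from collections import Counter

sentence = "This is my sentence. This is a test sentence."
# Stand-in for nltk.word_tokenize: runs of word characters or single punctuation marks.
tokens = re.findall(r"\w+|[^\w\s]", sentence)

fdist = Counter(tokens)
print(f"{len(fdist)} samples and {sum(fdist.values())} outcomes")  # 7 samples and 11 outcomes
print(fdist.most_common(3))  # [('This', 2), ('is', 2), ('sentence', 2)]
```

Ties in most_common() keep first-seen order, which is why "This" comes before "is" and "sentence".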

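Categorical or qualitative data attributes

- Ordinal data: categories with a meaningful order, e.g. ratings such as low, medium, high

- Nominal data: categories with no inherent order, e.g. language names or colors

Numeric or quantitative data attributes

- Continuous data: can take any value within a range, e.g. a temperature or a probability score

- Discrete data: countable, usually integer values, e.g. the number of words in a document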
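The distinction matters once attributes are turned into model features. A common approach, sketched here with hypothetical records and field names, maps ordinal values to ordered integers, one-hot encodes nominal values so that no false order is implied, and passes numeric values through unchanged.

```python
# Hypothetical records illustrating the four attribute types.
records = [
    {"rating": "low", "language": "en", "sentiment_score": 0.12, "num_words": 3},
    {"rating": "high", "language": "fr", "sentiment_score": 0.9, "num_words": 9},
    {"rating": "medium", "language": "en", "sentiment_score": 0.5, "num_words": 5},
]

# Ordinal: categories with a meaningful order -> ordered integers.
RATING_ORDER = {"low": 0, "medium": 1, "high": 2}

# Nominal: categories without order -> one-hot columns instead of a ranking.
languages = sorted({r["language"] for r in records})  # ['en', 'fr']


def encode(record):
    """Turn one record into a flat numeric feature list."""
    ordinal = RATING_ORDER[record["rating"]]
    one_hot = [1 if record["language"] == lang else 0 for lang in languages]
    # Continuous (sentiment_score) and discrete (num_words) values are already numeric.
    return [ordinal, *one_hot, record["sentiment_score"], record["num_words"]]


for r in records:
    print(encode(r))
```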

Different file formats for corpora

.txt

.csv

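.tsv: When building an NLP system, the .csv format may not be usable for storing feature files, because some feature values themselves contain commas, which breaks parsing and affects performance. In that case you can use any custom delimiter, such as a tab (\t).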
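The sketch below writes rows whose text field contains commas to tab-separated text with Python's csv module, then reads them back. The rows are hypothetical. A CSV writer that quotes fields would also survive embedded commas; the problem described above bites mainly when lines are split on commas naively, as the second print shows.

```python
import csv
import io

# Hypothetical feature rows: the text field itself contains commas.
rows = [
    ["id", "text", "label"],
    ["1", "Hello, world", "greeting"],
    ["2", "Buy now, pay later, save more", "spam"],
]

# Write the rows as TSV into an in-memory buffer (a file works the same way).
buffer = io.StringIO()
writer = csv.writer(buffer, delimiter="\t", lineterminator="\n")
writer.writerows(rows)
tsv_text = buffer.getvalue()
print(tsv_text)

# Naively splitting a comma-separated line breaks the text field apart...
print("1,Hello, world,greeting".split(","))  # ['1', 'Hello', ' world', 'greeting']
# ...while splitting on tabs keeps each field intact.
parsed = list(csv.reader(io.StringIO(tsv_text), delimiter="\t"))
print(parsed[1])  # ['1', 'Hello, world', 'greeting']
```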

.xml

.json

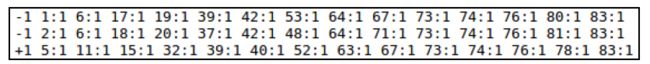

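LibSVM: a special file format for sparse data, in which each line holds a label followed by index:value pairs for the non-zero features only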
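In the LibSVM format the index:value pairs are written in ascending index order, and features whose value is zero are simply omitted. The helpers below are a minimal sketch of reading and writing one line; their names are hypothetical, and they ignore format extensions such as comments.

```python
def parse_libsvm_line(line):
    """Parse '<label> <index>:<value> ...' into (label, {index: value})."""
    parts = line.split()
    label = float(parts[0])
    features = {}
    for item in parts[1:]:
        index, value = item.split(":", 1)
        features[int(index)] = float(value)
    return label, features


def to_libsvm_line(label, features):
    """Inverse of parse_libsvm_line: ascending indices, zero values dropped."""
    pairs = " ".join(f"{i}:{v:g}" for i, v in sorted(features.items()) if v != 0)
    return f"{label:g} {pairs}".rstrip()


line = "1 3:0.5 10:2 27:1"
label, feats = parse_libsvm_line(line)
print(label, feats)                  # 1.0 {3: 0.5, 10: 2.0, 27: 1.0}
print(to_libsvm_line(label, feats))  # 1 3:0.5 10:2 27:1
```

Storing only non-zero entries is what makes the format compact for bag-of-words style vectors, where most of the vocabulary is absent from any given document.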

Custom formats

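Resources for free corpora

import nltk.corpus

dir(nltk.corpus) # in an interactive Python shell the result is echoed without print()

print(dir(nltk.corpus)) # nltk.corpus is loaded lazily here, so this lists only the attributes of NLTK's LazyModule wrapper (see the output), not the corpora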

['_LazyModule__lazymodule_globals', '_LazyModule__lazymodule_import', '_LazyModule__lazymodule_init', '_LazyModule__lazymodule_loaded', '_LazyModule__lazymodule_locals', '_LazyModule__lazymodule_name', '__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattr__', '__getattribute__', '__gt__', '__hash__', '__init__', '__le__', '__lt__', '__module__', '__name__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__']

Preparing a dataset for NLP applications

Selecting data: public datasets can be found, for example, at

https://github.com/caesar0301/awesome-public-datasets

https://www.kaggle.com/datasets

https://www.reddit.com/r/datasets/

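Preprocessing the data

- Formatting: convert the data into a format you are comfortable working with, e.g. JSON into CSV

- Cleaning: deal with missing or unwanted data, e.g. drop or fill in incomplete records

- Sampling: select a representative subset when the full dataset is larger than you need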
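The three preprocessing steps can be sketched on a tiny hypothetical dataset. The JSON records, the cleaning rules and the sample size are all assumptions for illustration; real cleaning rules depend on the data.

```python
import json
import random

# Hypothetical raw data, e.g. downloaded as JSON.
raw_json = """[
  {"id": 1, "text": "  Great movie!  ", "label": "pos"},
  {"id": 2, "text": "", "label": "neg"},
  {"id": 3, "text": "Terrible plot.", "label": null},
  {"id": 4, "text": "Loved   the acting", "label": "pos"},
  {"id": 5, "text": "Boring and slow", "label": "neg"}
]"""

# Formatting: convert the JSON into simple (text, label) rows.
rows = [(r["text"], r["label"]) for r in json.loads(raw_json)]

# Cleaning: drop rows with empty text or a missing label, and normalise whitespace.
clean = [(" ".join(t.split()), lab) for t, lab in rows if t.strip() and lab]

# Sampling: draw a reproducible random subset (fixed seed, arbitrary size).
rng = random.Random(42)
sample = rng.sample(clean, k=2)

print(clean)
print(sample)
```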

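Transforming the data: convert the preprocessed data into features that a machine learning algorithm can use (feature engineering)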
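One simple way to transform cleaned text into numbers is a bag-of-words count vector. This is one illustrative choice among many feature-engineering techniques; the documents below are hypothetical.

```python
from collections import Counter

docs = ["Great movie", "Loved the acting", "Boring and slow movie"]

# Build a sorted vocabulary from the (already preprocessed) documents.
vocab = sorted({w for d in docs for w in d.lower().split()})


def to_vector(doc):
    """Bag-of-words count vector aligned with `vocab`; unknown words are ignored."""
    counts = Counter(doc.lower().split())
    return [counts[w] for w in vocab]


print(vocab)  # ['acting', 'and', 'boring', 'great', 'loved', 'movie', 'slow', 'the']
for d in docs:
    print(to_vector(d))
```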

Web scraping

import requests

from bs4 import BeautifulSoup

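page = requests.get("https://www.baidu.com/", timeout=10) # give up instead of hanging if the server does not answer

page.raise_for_status() # raise an error for 4xx/5xx responses instead of parsing an error page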

soup = BeautifulSoup(page.content, 'html.parser')

print(soup.find_all('p')[0].get_text())

print(soup.find_all('p')[1].get_text())

关于百度 About Baidu

©2017 Baidu 使用百度前必读 意见反馈 京ICP证030173号
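Live pages change (the output above dates from 2017), so it can help to test the parsing step offline. The sketch below extracts the text of every <p> element with the standard library's html.parser instead of BeautifulSoup; the HTML snippet and the ParagraphExtractor class are hypothetical.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect the text of every <p> element, similar in spirit to
    soup.find_all('p') followed by get_text() in BeautifulSoup."""

    def __init__(self):
        super().__init__()
        self.paragraphs = []
        self._in_p = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._flush()  # an unclosed <p> ends when the next one starts
            self._in_p = True

    def handle_endtag(self, tag):
        if tag == "p":
            self._flush()

    def handle_data(self, data):
        if self._in_p:  # text inside nested tags such as <a> is kept too
            self._buffer.append(data)

    def _flush(self):
        if self._in_p:
            self.paragraphs.append("".join(self._buffer).strip())
        self._in_p = False
        self._buffer = []


html = """<html><body>
<p>About <a href="#">Baidu</a></p>
<div>not a paragraph</div>
<p>Second paragraph</p>
</body></html>"""

parser = ParagraphExtractor()
parser.feed(html)
print(parser.paragraphs)  # ['About Baidu', 'Second paragraph']
```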

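Acknowledgements

Python Natural Language Processing [1][2][3], by Jalaj Thanaki (India), is a new and highly practical book. To understand it more deeply, I extended parts of its content with further study and exercises, which I share here in the hope that they are useful. You are welcome to add me on WeChat (verification message: NLP) to study and discuss together, and corrections are welcome.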


References

[1] https://github.com/jalajthanaki

[2] Jalaj Thanaki, Python Natural Language Processing (Chinese edition, 《Python自然语言处理》), translated by Zhang Jinchao, Liu Shuman, et al., China Machine Press, 2018

[3] Jalaj Thanaki, Python Natural Language Processing, 2017