TensorFlow series: building the VGG16 network

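VGG16 is one of the classic convolutional neural network architectures. To learn TensorFlow syntax, I built VGG16 by hand with the `contrib.slim` module of TensorFlow 1.x, which keeps the code short and convenient.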

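VGG16 has 16 layers. I used to wonder how the 16 was counted: it is the number of convolutional layers plus fully connected layers. Pooling, batch normalization (BN) and dropout layers are usually not counted as layers.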

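VGG16 can be divided into 8 blocks (a block is one group of layers within the network):

block1: 2 conv + 1 max pool (64 filters, 3x3), 2 layers

block2: 2 conv + 1 max pool (128 filters, 3x3), 2 layers

block3: 3 conv + 1 max pool (256 filters, 3x3), 3 layers

block4: 3 conv + 1 max pool (512 filters, 3x3), 3 layers

block5: 3 conv + 1 max pool (512 filters, 3x3), 3 layers

block6: 1 fc + 1 dropout, 1 layer

block7: 1 fc + 1 dropout, 1 layer

block8: 1 fc, 1 layer

In total: 2+2+3+3+3+1+1+1 = 16 layers.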
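The counting rule can be checked with a few lines of plain Python. `VGG16_BLOCKS` and `count_weight_layers` are hypothetical names for this sketch: the list encodes the conv blocks from the table above, and only layers that own weights (conv and fc) are counted, while pooling and dropout are skipped.

```python
# Hypothetical encoding of the conv blocks: (block name, conv layers, filters)
VGG16_BLOCKS = [
    ("block1", 2, 64),
    ("block2", 2, 128),
    ("block3", 3, 256),
    ("block4", 3, 512),
    ("block5", 3, 512),
]
NUM_FC_LAYERS = 3  # fc6, fc7, fc8 (block6 to block8)


def count_weight_layers(blocks, num_fc):
    """Count only layers with weights: conv + fully connected.

    Pooling and dropout have no weights, so they are not counted.
    """
    conv_layers = sum(n_conv for _, n_conv, _ in blocks)
    return conv_layers + num_fc


conv_total = sum(n for _, n, _ in VGG16_BLOCKS)
print(conv_total, count_weight_layers(VGG16_BLOCKS, NUM_FC_LAYERS))  # 13 16
```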

===

block1:

```python
net = slim.conv2d(x, num_outputs=64, kernel_size=[3, 3], scope='conv1_1')
net = slim.conv2d(net, num_outputs=64, kernel_size=[3, 3], scope='conv1_2')
# net = slim.max_pool2d(net, kernel_size=[2, 2], stride=2, padding='VALID', scope='pool1')  # defaults: padding='VALID', stride=2
net = slim.max_pool2d(net, kernel_size=[2, 2], stride=2, scope='pool1')  # halves h and w
print('block1', net)  # [1, 112, 112, 64]
```

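The convolutions here use the `conv2d()` API from `contrib.slim`:

`slim.conv2d(inputs, num_outputs, kernel_size, stride, padding, scope)`

`inputs`: the input image or feature map, shape [batch, h, w, channels]

`num_outputs`: number of filters (output channels)

`kernel_size`: filter size, written as [3, 3] or simply 3 (3x3 is the most common choice)

`stride`: step size, default 1

`padding`: padding mode, default 'SAME'; with stride 1 the output keeps the input's h and w

There is also `activation_fn`, which defaults to `tf.nn.relu`, so every conv already includes a ReLU.

`normalizer_fn` defaults to None; set it (for example to `slim.batch_norm`) to add batch normalization.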
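TensorFlow computes the output length of a convolution or pooling along each spatial axis as `ceil(n / s)` for `'SAME'` and `ceil((n - k + 1) / s)` for `'VALID'` (n = input length, k = kernel size, s = stride). The helper `out_size` below is a hypothetical name wrapping those two formulas; it shows why the default 3x3 conv with stride 1 and `'SAME'` keeps a 224-pixel side at 224.

```python
import math


def out_size(n, k, s, padding):
    """Output length along one spatial axis, following TensorFlow's padding rules."""
    if padding == "SAME":
        return math.ceil(n / s)
    if padding == "VALID":
        return math.ceil((n - k + 1) / s)
    raise ValueError("padding must be 'SAME' or 'VALID'")


# slim.conv2d defaults: stride=1, padding='SAME' -> size is preserved
print(out_size(224, 3, 1, "SAME"))   # 224
# With stride 2, 'SAME' no longer preserves the size
print(out_size(224, 3, 2, "SAME"))   # 112
# 'VALID' with a 3x3 kernel loses one pixel on each side
print(out_size(224, 3, 1, "VALID"))  # 222
```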

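Pooling uses `slim.max_pool2d()`:

`slim.max_pool2d(inputs, kernel_size, stride, padding, scope)`

`inputs`: the input feature map

`kernel_size`: pooling window size, written as [2, 2] or simply 2 (VGG uses 2x2)

`stride`: step size, default 2, so pooling usually halves h and w. This cuts computation and, for the fully connected layers that follow, the number of parameters.

`padding`: padding mode, default 'VALID' (no padding)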
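A tiny pure-Python sketch of what a 2x2, stride-2, `'VALID'` max pool does to a single-channel feature map. `max_pool_2x2` is a hypothetical helper, not a slim function: it takes the maximum of each non-overlapping 2x2 window, so a 4x4 map becomes 2x2, and an odd trailing row or column is dropped, as with `'VALID'` padding.

```python
def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2 and 'VALID' padding on a 2-D list."""
    h, w = len(fmap) // 2, len(fmap[0]) // 2  # VALID: leftover rows/cols are dropped
    return [
        [
            max(fmap[2 * i][2 * j], fmap[2 * i][2 * j + 1],
                fmap[2 * i + 1][2 * j], fmap[2 * i + 1][2 * j + 1])
            for j in range(w)
        ]
        for i in range(h)
    ]


fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [6, 2, 3, 1],
]
print(max_pool_2x2(fmap))  # [[4, 5], [6, 7]]
```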

===

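block2, block3, block4 and block5 are built the same way as block1. The difference is the number of filters, which doubles from 64 to 128, 256 and 512, then stays at 512 in block5.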
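To see how the blocks fit together, the sketch below traces the output shape of each block for a 224x224x3 input and counts the trainable parameters (weights plus biases, no batch norm, `num_classes=1000`). It assumes 3x3 `'SAME'` convolutions and 2x2/2 pooling as described above, and treats fc6 to fc8 as the 7x7 and 1x1 convolutions used in the complete code; `trace_vgg16` is a hypothetical helper.

```python
BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]  # (conv layers, filters)


def trace_vgg16(size=224, in_ch=3, num_classes=1000):
    """Return per-block output shapes (H, W, C) and the total parameter count."""
    shapes, params, ch = [], 0, in_ch
    for n_conv, filters in BLOCKS:
        for _ in range(n_conv):
            params += 3 * 3 * ch * filters + filters  # 3x3 kernel + bias; 'SAME' keeps size
            ch = filters
        size //= 2  # 2x2 max pool, stride 2, 'VALID'
        shapes.append((size, size, ch))
    # fc6 as a 7x7 'VALID' conv, fc7 and fc8 as 1x1 convs (all with biases)
    for k, out in [(7, 4096), (1, 4096), (1, num_classes)]:
        params += k * k * ch * out + out
        ch = out
    return shapes, params


shapes, params = trace_vgg16()
print(shapes)  # [(112, 112, 64), (56, 56, 128), (28, 28, 256), (14, 14, 512), (7, 7, 512)]
print(params)  # 138357544
```

Of the roughly 138 million parameters, fc6 alone accounts for 102,764,544, which is why pooling the feature map down to 7x7 before it matters.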

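block6 is a fully connected layer, but in this VGG16 implementation it is written as a convolution instead.

The point is that the network can then accept input images of other sizes (an explanation I came across on Zhihu): on an input larger than 224x224, the fc layers written as convolutions output a spatial map of results instead of a single vector, with no change to the weights.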

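The code:

Written as a fully connected layer (the 4-D feature map has to be flattened first so the layer sees all 7x7x512 values; otherwise `fully_connected` is applied only along the last, channel dimension):

```python
net = slim.flatten(net)  # (1,7,7,512) -> (1,25088)
net = slim.fully_connected(net, 4096, scope='fc6')  # (1,25088) -> (1,4096)
```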

Written as a convolution:

```python
net = slim.conv2d(net, 4096, [7, 7], padding='VALID', scope='fc6')  # (1,7,7,512) -> (1,1,1,4096)
```
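Why the 7x7 `'VALID'` convolution can stand in for the fully connected layer: with a kernel as large as the input feature map, each output unit sees every input value exactly once, which is a dense layer over the flattened input. The NumPy sketch below checks this numerically with small stand-in sizes (7x7x8 -> 16 instead of 7x7x512 -> 4096). `conv_valid` and `fc_on_flattened` are hypothetical helpers written for this illustration, not slim functions. It also shows the "other input sizes" point: on a larger map the same weights slide and produce a grid of outputs.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W, C, OUT = 7, 7, 8, 16  # small stand-ins for 7x7x512 -> 4096


def fc_on_flattened(x, w_fc, b):
    """Flatten [H, W, C] and apply a dense layer (the flatten + fully_connected route)."""
    return x.reshape(-1) @ w_fc + b


def conv_valid(x, w_conv, b):
    """Naive stride-1 'VALID' conv of x [H, W, C] with kernel w_conv [kh, kw, C, OUT]."""
    kh, kw = w_conv.shape[:2]
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((oh, ow, w_conv.shape[3]))
    for i in range(oh):
        for j in range(ow):
            patch = x[i:i + kh, j:j + kw, :]
            # Sum over kh, kw and C for every output channel
            out[i, j] = np.tensordot(patch, w_conv, axes=([0, 1, 2], [0, 1, 2])) + b
    return out


x = rng.standard_normal((H, W, C))
w_conv = rng.standard_normal((H, W, C, OUT))
b = rng.standard_normal(OUT)

# The same weights viewed as a dense matrix: row-major flattening of [H, W, C] lines up
w_fc = w_conv.reshape(H * W * C, OUT)

y_conv = conv_valid(x, w_conv, b)   # shape (1, 1, OUT)
y_fc = fc_on_flattened(x, w_fc, b)  # shape (OUT,)
print(y_conv.shape, np.allclose(y_conv[0, 0], y_fc))  # (1, 1, 16) True

# A larger 9x9 input still works with the conv version and yields a 3x3 map
big = rng.standard_normal((9, 9, C))
print(conv_valid(big, w_conv, b).shape)  # (3, 3, 16)
```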

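Dropout layer. `keep_prob` is the probability of keeping each unit: this post uses 0.6 during training (the VGG paper uses a dropout ratio of 0.5), and at test time it must be 1. With `slim.dropout`, passing `is_training=False` already turns dropout off.

```python
net = slim.dropout(net, keep_prob=keep_prob, is_training=is_training, scope='dropout6')
```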
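A minimal sketch of inverted dropout, the scheme `tf.nn.dropout` documents: during training each unit is kept with probability `keep_prob` and the kept units are scaled by `1 / keep_prob`, so the expected activation is unchanged. At test time the input passes through untouched. The `dropout` function here is hypothetical plain Python, not the TensorFlow op.

```python
import random


def dropout(values, keep_prob, is_training, rng=random):
    """Inverted dropout on a list of floats."""
    if not is_training:
        return list(values)  # inference: identity, keep_prob plays no role
    # Keep with probability keep_prob and rescale, otherwise zero out
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)
acts = [1.0] * 10000
train_out = dropout(acts, keep_prob=0.6, is_training=True, rng=rng)
test_out = dropout(acts, keep_prob=0.6, is_training=False)

print(sum(train_out) / len(train_out))  # close to 1.0: the rescaling preserves the mean
print(test_out == acts)                 # True
```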

block7 and block8 are similar to block6.

===

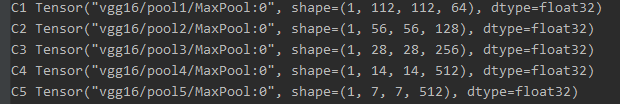

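`end_points` is a dictionary that stores the feature maps output by block1 to block5 (keys `C1` to `C5`).

They are meant for object detection (for example to build a feature pyramid; backbone features like these are used in Faster R-CNN, EAST and PSENet). If the task is classification, the final `net` is all you need.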
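Because every layer up to pool5 is a convolution or pooling, the `end_points` maps come out at strides 2, 4, 8, 16 and 32 relative to the input, whatever the input size; detection heads build on exactly this. The sketch below, with a hypothetical helper `end_point_shapes`, computes their sizes for a 600x800 image, assuming the 3x3 `'SAME'` convolutions and 2x2/2 `'VALID'` pooling used in this network. On such an input the 7x7 `'VALID'` fc6 would produce a 12x19 map rather than 1x1.

```python
CHANNELS = [64, 128, 256, 512, 512]  # filters of block1..block5


def end_point_shapes(h, w):
    """Shapes of C1..C5 for an h x w input (3x3 SAME convs, 2x2/2 VALID pools)."""
    shapes = {}
    for i, ch in enumerate(CHANNELS, start=1):
        h, w = h // 2, w // 2  # each block ends with a pool that halves H and W (floor)
        shapes["C%d" % i] = (h, w, ch)
    return shapes


shapes = end_point_shapes(600, 800)
for key, shape in shapes.items():
    print(key, shape)
# C1 (300, 400, 64)
# C2 (150, 200, 128)
# C3 (75, 100, 256)
# C4 (37, 50, 512)
# C5 (18, 25, 512)

h5, w5, _ = shapes["C5"]
print(h5 - 7 + 1, w5 - 7 + 1)  # 12 19: fc6 output size with a 7x7 'VALID' kernel
```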


The complete code:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @time: 2020/6/19 15:39
# Requires TensorFlow 1.x (tf.contrib is not available in TensorFlow 2.x).

import tensorflow as tf
from tensorflow.contrib import slim
import numpy as np


def vgg16(net,
          num_classes=1000,
          is_training=True,
          spatial_squeeze=False,
          scope='vgg16'):
    """
    Note: all fully connected layers have been converted to conv2d layers.
    To use the network in classification mode, resize the input to 224x224.

    Args:
    :param net: input images [batch_size, h, w, 3]
    :param num_classes: number of classes
    :param is_training: training mode or test mode
    :param spatial_squeeze: whether to squeeze out the size-1 spatial dimensions of the output
    :param scope: variable scope of the network
    :return: net (logits) and end_points (dict of block1-block5 feature maps)
    """
    keep_prob = 0.6 if is_training else 1.0  # 0.6 for training, 1 for testing

    # variable_scope (not name_scope) so the variables slim creates are prefixed with `scope`
    with tf.variable_scope(scope):
        # Feature maps of each block, stored in a dict for use by detection heads
        end_points = {}

        # block1 (slim.conv2d defaults: relu activation, stride=1, padding='SAME')
        net = slim.conv2d(net, num_outputs=64, kernel_size=[3, 3], scope='conv1_1')
        net = slim.conv2d(net, num_outputs=64, kernel_size=[3, 3], scope='conv1_2')
        # slim.max_pool2d defaults: stride=2, padding='VALID'
        net = slim.max_pool2d(net, kernel_size=[2, 2], stride=2, scope='pool1')  # h, w halved: [1,112,112,64]
        end_points['C1'] = net

        # block2
        net = slim.conv2d(net, num_outputs=128, kernel_size=[3, 3], scope='conv2_1')  # [1,112,112,128]
        net = slim.conv2d(net, num_outputs=128, kernel_size=[3, 3], scope='conv2_2')  # [1,112,112,128]
        net = slim.max_pool2d(net, [2, 2], stride=2, scope='pool2')  # [1,56,56,128]
        end_points['C2'] = net

        # block3
        net = slim.conv2d(net, num_outputs=256, kernel_size=[3, 3], scope='conv3_1')  # [1,56,56,256]
        net = slim.conv2d(net, num_outputs=256, kernel_size=[3, 3], scope='conv3_2')
        net = slim.conv2d(net, num_outputs=256, kernel_size=[3, 3], scope='conv3_3')
        net = slim.max_pool2d(net, [2, 2], stride=2, scope='pool3')  # [1,28,28,256]
        end_points['C3'] = net

        # block4
        net = slim.conv2d(net, num_outputs=512, kernel_size=[3, 3], scope='conv4_1')  # [1,28,28,512]
        net = slim.conv2d(net, num_outputs=512, kernel_size=[3, 3], scope='conv4_2')
        net = slim.conv2d(net, num_outputs=512, kernel_size=[3, 3], scope='conv4_3')
        net = slim.max_pool2d(net, [2, 2], stride=2, scope='pool4')  # [1,14,14,512]
        end_points['C4'] = net

        # block5
        net = slim.conv2d(net, num_outputs=512, kernel_size=[3, 3], scope='conv5_1')  # [1,14,14,512]
        net = slim.conv2d(net, num_outputs=512, kernel_size=[3, 3], scope='conv5_2')
        net = slim.conv2d(net, num_outputs=512, kernel_size=[3, 3], scope='conv5_3')
        net = slim.max_pool2d(net, [2, 2], stride=2, scope='pool5')  # [1,7,7,512]
        end_points['C5'] = net

        # fc6
        # net = slim.fully_connected(slim.flatten(net), 4096, scope='fc6')  # fully connected alternative
        net = slim.conv2d(net, 4096, [7, 7], padding='VALID', scope='fc6')  # (1,7,7,512) -> (1,1,1,4096)
        net = slim.dropout(net, keep_prob=keep_prob, is_training=is_training, scope='dropout6')

        # fc7
        net = slim.conv2d(net, 4096, [1, 1], scope='fc7')
        net = slim.dropout(net, keep_prob=keep_prob, is_training=is_training, scope='dropout7')

        # fc8: raw logits, no activation and no normalization
        net = slim.conv2d(net, num_classes, [1, 1], activation_fn=None, normalizer_fn=None, scope='fc8')
        if spatial_squeeze:
            net = tf.squeeze(net, [1, 2], name='fc8_squeezed')  # (1,1,1,num_classes) -> (1,num_classes)

    return net, end_points


if __name__ == '__main__':
    imgs = np.ones((224, 224, 3), dtype=np.float32)
    x = tf.placeholder(tf.float32, shape=[1, 224, 224, 3], name='input_images')
    net, end_points = vgg16(x, 10)
    for key in end_points:
        print(key, end_points[key])
    # sess = tf.InteractiveSession()
    # sess.run(tf.global_variables_initializer())
    # net_ = sess.run(net, feed_dict={x: [imgs]})
```