Deep Learning with Python (4): Cats vs. Dogs Classification

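This project uses convolutional neural networks. *Deep Learning with Python* solves it in two ways: building a convnet from scratch, and using the pretrained VGG16 network directly. Using VGG16 can itself be done in two ways: feature extraction and fine-tuning.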

1. Dataset overview

The dataset for this project can be downloaded from https://www.kaggle.com/c/dogs-vs-cats/data. It contains 25,000 images of cats and dogs, 12,500 per class.

2. Data preparation

Create a new, smaller dataset containing, for each class, 1,000 training images, 500 validation images and 500 test images.

Create a new folder, dogs_and_cats_small. Inside it, create 'train', 'validation' and 'test' subfolders; inside each of those, create 'dogs' and 'cats'; then copy the corresponding images into each folder.

```python
import os, shutil

original_dataset_dir = './dogs-vs-cats'  # location of the original data (files named cat.0.jpg, dog.0.jpg, ...)
base_dir = './dogs_and_cats_small'  # new location for the selected subset
os.mkdir(base_dir)  # create the new directory

# Create the 'train', 'validation' and 'test' folders inside it
train_dir = os.path.join(base_dir, 'train')
os.mkdir(train_dir)
validation_dir = os.path.join(base_dir, 'validation')
os.mkdir(validation_dir)
test_dir = os.path.join(base_dir, 'test')
os.mkdir(test_dir)

# Create 'cats' and 'dogs' folders inside 'train'
train_cats_dir = os.path.join(train_dir, 'cats')
os.mkdir(train_cats_dir)
train_dogs_dir = os.path.join(train_dir, 'dogs')
os.mkdir(train_dogs_dir)

# Create 'cats' and 'dogs' folders inside 'validation'
validation_cats_dir = os.path.join(validation_dir, 'cats')
os.mkdir(validation_cats_dir)
validation_dogs_dir = os.path.join(validation_dir, 'dogs')
os.mkdir(validation_dogs_dir)

# Create 'cats' and 'dogs' folders inside 'test'
test_cats_dir = os.path.join(test_dir, 'cats')
os.mkdir(test_cats_dir)
test_dogs_dir = os.path.join(test_dir, 'dogs')
os.mkdir(test_dogs_dir)

# Copy the first 1000 cat images (cat.0 to cat.999) to train_cats_dir
fnames = ['cat.{}.jpg'.format(i) for i in range(1000)]
for fname in fnames:
    src = os.path.join(original_dataset_dir, fname)  # source path
    dst = os.path.join(train_cats_dir, fname)  # destination path
    shutil.copyfile(src, dst)  # copy the file

# Copy cat images 1000 to 1499 to validation_cats_dir
fnames = ['cat.{}.jpg'.format(i) for i in range(1000, 1500)]
for fname in fnames:
    src = os.path.join(original_dataset_dir, fname)
    dst = os.path.join(validation_cats_dir, fname)
    shutil.copyfile(src, dst)

# Copy cat images 1500 to 1999 to test_cats_dir
fnames = ['cat.{}.jpg'.format(i) for i in range(1500, 2000)]
for fname in fnames:
    src = os.path.join(original_dataset_dir, fname)
    dst = os.path.join(test_cats_dir, fname)
    shutil.copyfile(src, dst)

# Copy the first 1000 dog images (dog.0 to dog.999) to train_dogs_dir
fnames = ['dog.{}.jpg'.format(i) for i in range(1000)]
for fname in fnames:
    src = os.path.join(original_dataset_dir, fname)
    dst = os.path.join(train_dogs_dir, fname)
    shutil.copyfile(src, dst)

# Copy dog images 1000 to 1499 to validation_dogs_dir
fnames = ['dog.{}.jpg'.format(i) for i in range(1000, 1500)]
for fname in fnames:
    src = os.path.join(original_dataset_dir, fname)
    dst = os.path.join(validation_dogs_dir, fname)
    shutil.copyfile(src, dst)

# Copy dog images 1500 to 1999 to test_dogs_dir
fnames = ['dog.{}.jpg'.format(i) for i in range(1500, 2000)]
for fname in fnames:
    src = os.path.join(original_dataset_dir, fname)
    dst = os.path.join(test_dogs_dir, fname)
    shutil.copyfile(src, dst)
```
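The same layout can also be built with a loop over splits and classes. This is a minimal sketch that assumes the Kaggle file names `cat.<i>.jpg` / `dog.<i>.jpg` used above. `make_small_dataset` and `SPLITS` are hypothetical names, and `os.makedirs(..., exist_ok=True)` lets the script be re-run without raising `FileExistsError`:

```python
import os
import shutil

# Hypothetical split table: image indices per split (same ranges as the code above)
SPLITS = {
    "train": range(0, 1000),
    "validation": range(1000, 1500),
    "test": range(1500, 2000),
}

def make_small_dataset(original_dir, base_dir):
    """Hypothetical helper: copy images into base_dir/<split>/<cats|dogs>/."""
    for split, indices in SPLITS.items():
        for animal in ("cat", "dog"):
            target = os.path.join(base_dir, split, animal + "s")
            # exist_ok=True: no error if the folder already exists
            os.makedirs(target, exist_ok=True)
            for i in indices:
                fname = "{}.{}.jpg".format(animal, i)
                shutil.copyfile(os.path.join(original_dir, fname),
                                os.path.join(target, fname))
```

Usage: `make_small_dataset('./dogs-vs-cats', './dogs_and_cats_small')`.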

3. Building a convnet from scratch

```python
import os
from keras import layers
from keras import models
from keras import optimizers
import matplotlib.pyplot as plt
from keras.preprocessing.image import ImageDataGenerator

base_dir = './dogs_and_cats_small'  # dataset directory
train_dir = os.path.join(base_dir, 'train')  # training set directory
validation_dir = os.path.join(base_dir, 'validation')  # validation set directory
test_dir = os.path.join(base_dir, 'test')  # test set directory

# Image preprocessing: rescale multiplies every pixel value by 1/255; the other arguments configure data augmentation
# rotation_range: range, in degrees, of random rotations
# width_shift_range, height_shift_range: range of random horizontal or vertical shifts (fraction of width or height)
# shear_range: range of random shear transformations
# zoom_range: range of random zooms
# horizontal_flip: randomly flip half of the images horizontally
# fill_mode: strategy for filling in newly created pixels (e.g. after a rotation or shift)
train_datagen = ImageDataGenerator(rescale=1./255, rotation_range=40, width_shift_range=0.2, height_shift_range=0.2,
                                   shear_range=0.2, zoom_range=0.2, horizontal_flip=True, fill_mode='nearest')
test_datagen = ImageDataGenerator(rescale=1./255)  # validation/test images are only rescaled by 1/255

# Read the images and resize them all to 150x150; class_mode='binary' because the loss is binary_crossentropy
train_generator = train_datagen.flow_from_directory(train_dir, target_size=(150, 150), batch_size=32,
                                                    class_mode='binary')
validation_generator = test_datagen.flow_from_directory(validation_dir, target_size=(150, 150), batch_size=32,
                                                        class_mode='binary')
```
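To get a feel for what two of these options do to a single image, here is a simplified NumPy illustration of `rescale=1./255` and `horizontal_flip=True`. It is not `ImageDataGenerator`'s actual implementation, and the function names are hypothetical:

```python
import numpy as np

def rescale(image):
    """Map uint8 pixel values in [0, 255] to floats in [0, 1], like rescale=1./255."""
    return image.astype("float32") * (1.0 / 255)

def random_horizontal_flip(image, rng):
    """Mirror an H x W x 3 image left-right with probability 0.5, like horizontal_flip=True."""
    if rng.random() < 0.5:
        return image[:, ::-1, :]  # reverse the width axis
    return image

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(150, 150, 3), dtype=np.uint8)  # stand-in for a real photo
out = random_horizontal_flip(rescale(img), rng)
```

Because the flip is random, roughly half of the training images the network sees in an epoch are mirrored, and it never sees exactly the same batch twice.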

```python
# Build the network
model = models.Sequential()
model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))
model.add(layers.MaxPooling2D(2, 2))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D(2, 2))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D(2, 2))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D(2, 2))
model.add(layers.Flatten())  # flatten the 3D feature maps into a 1D vector
model.add(layers.Dropout(0.5))  # during training, randomly set 50% of this layer's inputs to 0 to reduce overfitting
model.add(layers.Dense(512, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))
```
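As a sanity check on this architecture, the feature-map sizes and the parameter count can be worked out by hand. Each 3x3 `Conv2D` with the default 'valid' padding shrinks each side by 2, and each `MaxPooling2D(2, 2)` halves it, rounding down. The following stdlib-only sketch does that bookkeeping (`conv_params` and `summarize` are hypothetical helpers). Its totals should agree with `model.summary()`:

```python
def conv_params(k, c_in, c_out):
    """Weights of a k x k Conv2D: one kernel per (input, output) channel pair plus one bias per filter."""
    return k * k * c_in * c_out + c_out

def summarize(side=150, channels=3, filters=(32, 64, 128, 128), dense_units=512):
    total = 0
    for f in filters:
        total += conv_params(3, channels, f)
        side = (side - 2) // 2  # Conv2D 3x3 'valid' (-2), then MaxPooling2D(2, 2) (halve, round down)
        channels = f
    flat = side * side * channels               # length of the Flatten output
    total += flat * dense_units + dense_units   # Dense(512): weights + biases
    total += dense_units * 1 + 1                # Dense(1); Flatten, Dropout and pooling have no weights
    return side, channels, flat, total

print(summarize())  # (7, 128, 6272, 3453121)
```

Most of the roughly 3.45 million parameters sit in the first `Dense` layer (6272 x 512 weights), which is one reason the `Dropout` layer is placed right before it.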

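```python
# Compile the network
model.compile(loss='binary_crossentropy', optimizer=optimizers.RMSprop(learning_rate=1e-4), metrics=['acc'])

# Train the model. len(generator) is the number of batches in one pass over the data
# (63 for 2000 training images and 32 for 1000 validation images at batch_size=32),
# so each epoch covers every image once
history = model.fit(train_generator, steps_per_epoch=len(train_generator), epochs=100,
                    validation_data=validation_generator, validation_steps=len(validation_generator))

model.save('cats_and_dogs_small_2.h5')  # save the model
```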

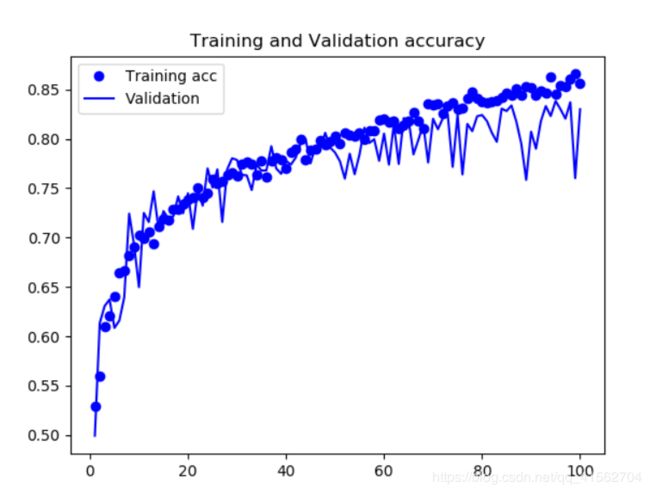

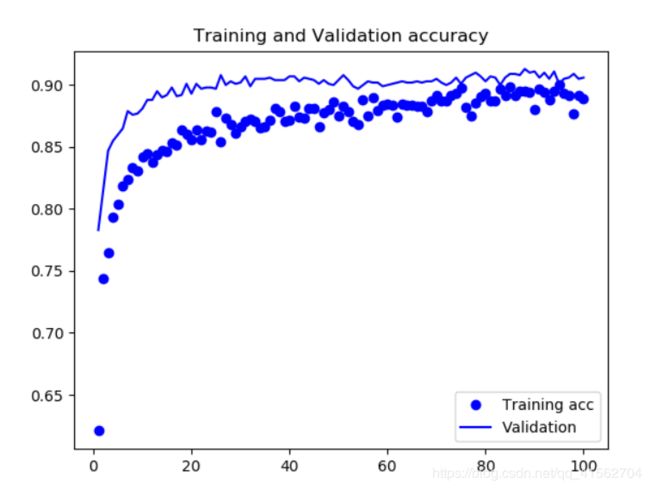

```python
# Plot the loss and accuracy curves
acc = history.history['acc']  # training accuracy
val_acc = history.history['val_acc']  # validation accuracy
loss = history.history['loss']  # training loss
val_loss = history.history['val_loss']  # validation loss
epochs = range(1, len(acc) + 1)  # x-axis: epoch numbers

# Accuracy plot
plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and Validation accuracy')
plt.legend()
plt.figure()
```

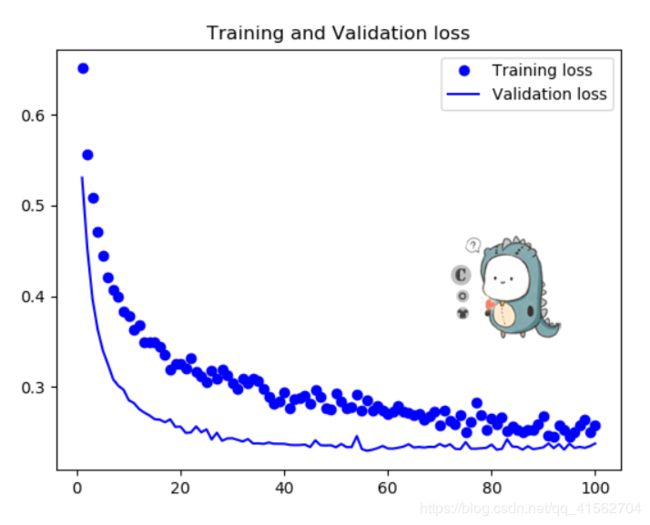

```python
# Loss plot
plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and Validation loss')
plt.legend()
plt.show()
```

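4. VGG16

4.1 Feature extraction (without data augmentation)

Run the convolutional base over the dataset once and save its output as NumPy arrays. Then build a new densely connected classifier that takes these arrays as input. This is fast and cheap, because the expensive conv base runs only once per image, but for the same reason it cannot use data augmentation.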
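Why is each feature array shaped `(4, 4, 512)` in the code below? VGG16's convolutions use 'same' padding, so only its five 2x2 max-pooling layers change the spatial size, and each one halves it, rounding down. The following sketch shows that arithmetic; `vgg16_feature_shape` is a hypothetical helper:

```python
def vgg16_feature_shape(side, pools=5, channels=512):
    """Output shape of VGG16's conv base (include_top=False) for a square side x side input."""
    for _ in range(pools):
        side //= 2  # each block ends with a 2x2 max pool: 150 -> 75 -> 37 -> 18 -> 9 -> 4
    return (side, side, channels)

shape = vgg16_feature_shape(150)
flat_dim = shape[0] * shape[1] * shape[2]
print(shape, flat_dim)  # (4, 4, 512) 8192
```

This is where the `4 * 4 * 512` used for flattening and for the classifier's `input_dim` comes from.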

Code walkthrough:

```python
import os
import numpy as np
from keras.applications import VGG16
from keras.preprocessing.image import ImageDataGenerator
from keras import models
from keras import layers
from keras import optimizers
import matplotlib.pyplot as plt

conv_base = VGG16(weights='imagenet', include_top=False, input_shape=(150, 150, 3))

base_dir = './dogs_and_cats_small'
train_dir = os.path.join(base_dir, 'train')  # training set directory
validation_dir = os.path.join(base_dir, 'validation')  # validation set directory
test_dir = os.path.join(base_dir, 'test')  # test set directory

datagen = ImageDataGenerator(rescale=1./255)  # rescale images by 1/255
batch_size = 20  # number of samples per batch

# Feature extraction
def extract_features(directory, sample_count):
    features = np.zeros(shape=(sample_count, 4, 4, 512))  # features (shape of the VGG16 conv-base output)
    labels = np.zeros(shape=(sample_count))  # image labels
    # Load the images, resizing them to 150x150
    generator = datagen.flow_from_directory(directory, target_size=(150, 150), batch_size=batch_size,
                                            class_mode='binary')
    # Extract and store features one batch of 20 images at a time
    i = 0
    for inputs_batch, labels_batch in generator:
        features_batch = conv_base.predict(inputs_batch)  # run the batch through VGG16 (inference only, no training)
        features[i * batch_size : (i + 1) * batch_size] = features_batch
        labels[i * batch_size : (i + 1) * batch_size] = labels_batch
        i += 1
        # The generator loops forever, so stop once every sample has been processed
        if i * batch_size >= sample_count:
            break
    return features, labels

train_features, train_labels = extract_features(train_dir, 2000)  # training set features
validation_features, validation_labels = extract_features(validation_dir, 1000)  # validation set features
test_features, test_labels = extract_features(test_dir, 1000)  # test set features

# Flatten the features: each sample's (4, 4, 512) tensor becomes a 1D vector
train_features = np.reshape(train_features, (2000, 4 * 4 * 512))
validation_features = np.reshape(validation_features, (1000, 4 * 4 * 512))
test_features = np.reshape(test_features, (1000, 4 * 4 * 512))

# Build the classifier
model = models.Sequential()
model.add(layers.Dense(256, activation='relu', input_dim=4 * 4 * 512))  # fully connected layer
model.add(layers.Dropout(0.5))  # dropout
model.add(layers.Dense(1, activation='sigmoid'))  # classification layer

# Compile the network
model.compile(optimizer=optimizers.RMSprop(learning_rate=2e-5), loss='binary_crossentropy', metrics=['acc'])

history = model.fit(train_features, train_labels, epochs=30, batch_size=20,
                    validation_data=(validation_features, validation_labels))  # train the network
```
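`test_features` and `test_labels` are computed above but never used. One way to score the classifier on them is to threshold its sigmoid outputs at 0.5. Here is a minimal NumPy sketch with a hypothetical `binary_accuracy` helper:

```python
import numpy as np

def binary_accuracy(probs, labels, threshold=0.5):
    """Hypothetical helper: fraction of samples whose thresholded sigmoid output equals the 0/1 label."""
    probs = np.asarray(probs, dtype="float32").ravel()  # model.predict returns shape (n, 1)
    labels = np.asarray(labels).ravel()
    preds = (probs > threshold).astype(labels.dtype)
    return float(np.mean(preds == labels))
```

For example, `binary_accuracy(model.predict(test_features), test_labels)`. Alternatively, `model.evaluate(test_features, test_labels)` returns the loss together with the `acc` metric and should report essentially the same accuracy.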

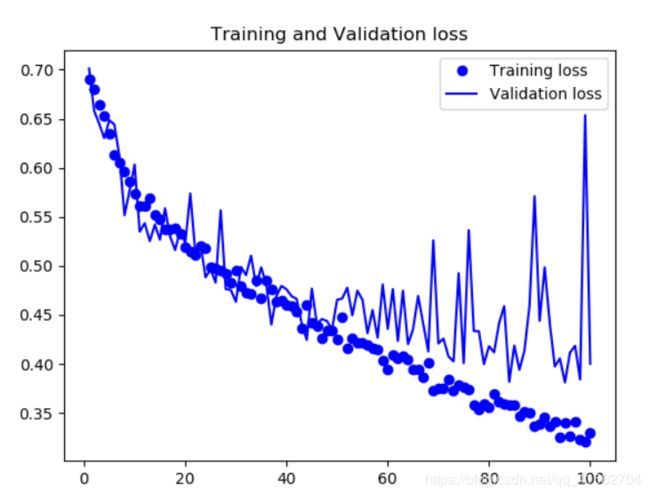

```python
# Plot the loss and accuracy curves
# Training accuracy, validation accuracy, training loss and validation loss
acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(1, len(acc) + 1)  # epoch numbers

# Training and validation accuracy: 'bo' draws blue dots, 'b' a solid blue line
plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and Validation accuracy')
plt.legend()
plt.figure()

# Training and validation loss
plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and Validation loss')
plt.legend()
plt.show()
```

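4.2 Feature extraction (with data augmentation)

Extend the conv base by adding Dense layers on top of it, then run the whole model end to end on the input data. Every image now passes through the conv base on every epoch, so this is far slower than 4.1, but it allows data augmentation. The conv base must be frozen before compiling so that its pretrained weights are not updated during training.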
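Freezing matters because of how many weights are involved. The following stdlib-only sketch counts parameters from VGG16's layer configuration: 13 3x3 conv layers, whose channel list is written out by hand and is worth checking against `conv_base.summary()`. It also counts the classifier added on top:

```python
# Output channels of VGG16's 13 3x3 conv layers (blocks 1 to 5)
VGG16_CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256,
                       512, 512, 512, 512, 512, 512]

def conv3x3_params(c_in, c_out):
    return 3 * 3 * c_in * c_out + c_out  # kernel weights + biases

def count_conv_base(channels, c_in=3):
    total = 0
    for c_out in channels:
        total += conv3x3_params(c_in, c_out)
        c_in = c_out
    return total

base = count_conv_base(VGG16_CONV_CHANNELS)
head = (4 * 4 * 512) * 256 + 256 + 256 * 1 + 1  # Dense(256) + Dense(1) on the flattened features
print("conv base:", base)                          # 14714688
print("trainable, base not frozen:", base + head)  # 16812353
print("trainable, base frozen:", head)             # 2097665
```

If the conv base is left trainable, the randomly initialized Dense layers send large gradient updates through all of those 14.7 million pretrained weights in the first epochs, which can wipe out the features VGG16 learned on ImageNet.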

Code walkthrough:

```python
from keras.applications import VGG16
from keras.preprocessing.image import ImageDataGenerator
from keras import models
from keras import layers
from keras import optimizers
import os
import numpy as np
import matplotlib.pyplot as plt

base_dir = './dogs_and_cats_small'
train_dir = os.path.join(base_dir, 'train')  # training set directory
validation_dir = os.path.join(base_dir, 'validation')  # validation set directory
test_dir = os.path.join(base_dir, 'test')  # test set directory

# Apply data augmentation to the training set and rescale images by 1/255
train_datagen = ImageDataGenerator(rescale=1./255,
                                   rotation_range=40,
                                   width_shift_range=0.2,
                                   height_shift_range=0.2,
                                   shear_range=0.2,
                                   zoom_range=0.2,
                                   horizontal_flip=True,
                                   fill_mode='nearest')
test_datagen = ImageDataGenerator(rescale=1./255)

# Load the datasets, resizing images to 150x150
train_generator = train_datagen.flow_from_directory(train_dir,
                                                    target_size=(150, 150),
                                                    batch_size=20,
                                                    class_mode='binary')
validation_generator = test_datagen.flow_from_directory(validation_dir,
                                                        target_size=(150, 150),
                                                        batch_size=20,
                                                        class_mode='binary')

# weights: weight checkpoint used to initialize the model; include_top: whether to include the densely connected classifier
conv_base = VGG16(weights='imagenet', include_top=False, input_shape=(150, 150, 3))  # load VGG16

# Build the network
model = models.Sequential()
model.add(conv_base)  # use VGG16 directly as part of the model
model.add(layers.Flatten())  # flatten the VGG16 output tensor
# As covered in an earlier post, Dense layers with relu activation work well when the input is a vector
# and the output is a single value
model.add(layers.Dense(256, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))  # final classification layer; sigmoid because this is a binary problem

# Freeze the conv base so that only the new Dense layers are trained (must be done before compiling)
conv_base.trainable = False

# Compile the network
model.compile(loss='binary_crossentropy', optimizer=optimizers.RMSprop(learning_rate=2e-5), metrics=['acc'])

history = model.fit(train_generator, steps_per_epoch=100, epochs=30,
                    validation_data=validation_generator, validation_steps=50)  # train the network
```

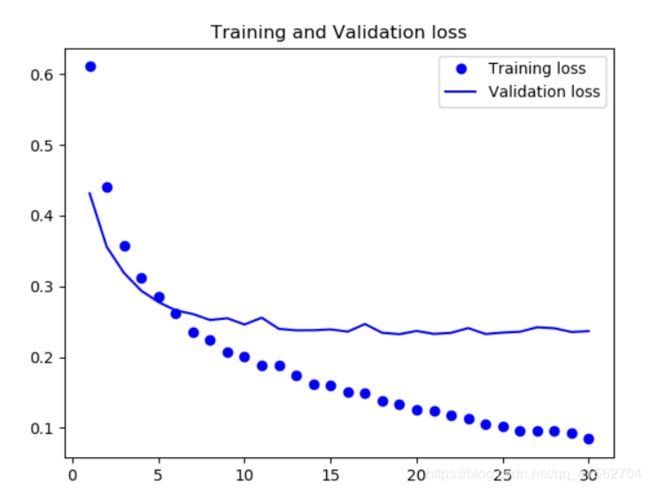

```python
# Plot the loss and accuracy curves
# Training accuracy, validation accuracy, training loss and validation loss
acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(1, len(acc) + 1)  # epoch numbers

# Training and validation accuracy: 'bo' draws blue dots, 'b' a solid blue line
plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and Validation accuracy')
plt.legend()
plt.figure()

# Training and validation loss
plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and Validation loss')
plt.legend()
plt.show()
```

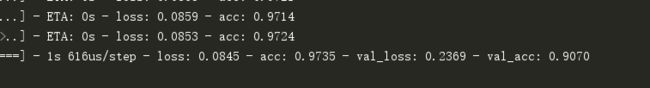

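4.3 Fine-tuning

"Unfreeze" the top few layers of VGG16 and train them jointly with the newly added part of the model.

Fine-tuning proceeds in five steps: (1) add a custom network on top of VGG16; (2) freeze the base network; (3) train the added part; (4) unfreeze some layers of the base network; (5) jointly train these unfrozen layers and the added part.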
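Step (4) comes down to choosing which layers are trainable. The following plain-Python sketch applies that selection rule to VGG16's layer names. `trainable_flags` is a hypothetical helper, and because the input layer's name varies between Keras versions, a placeholder name stands in for it. Every layer from `block5_conv1` onward is unfrozen, and everything before it stays frozen. The name comparison is exact, so a name that does not match (for example one with a stray leading space) leaves every layer frozen.

```python
# Layer names of VGG16(include_top=False); "input" is a placeholder for the input layer
VGG16_LAYERS = ["input", "block1_conv1", "block1_conv2", "block1_pool",
                "block2_conv1", "block2_conv2", "block2_pool",
                "block3_conv1", "block3_conv2", "block3_conv3", "block3_pool",
                "block4_conv1", "block4_conv2", "block4_conv3", "block4_pool",
                "block5_conv1", "block5_conv2", "block5_conv3", "block5_pool"]

def trainable_flags(layer_names, first_trainable="block5_conv1"):
    """Map each layer name to True (unfrozen) from first_trainable onward, else False."""
    flags = {}
    set_trainable = False
    for name in layer_names:
        if name == first_trainable:
            set_trainable = True  # this layer and every later one become trainable
        flags[name] = set_trainable
    return flags

flags = trainable_flags(VGG16_LAYERS)
print([n for n, t in flags.items() if t])
# ['block5_conv1', 'block5_conv2', 'block5_conv3', 'block5_pool']
```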

Code walkthrough:

```python
import os
import numpy as np
from keras.applications import VGG16
from keras.preprocessing.image import ImageDataGenerator
from keras import models
from keras import layers
from keras import optimizers
import matplotlib.pyplot as plt

base_dir = './dogs_and_cats_small'
train_dir = os.path.join(base_dir, 'train')  # training set directory
validation_dir = os.path.join(base_dir, 'validation')  # validation set directory
test_dir = os.path.join(base_dir, 'test')  # test set directory

# Apply data augmentation to the training set and rescale images by 1/255
train_datagen = ImageDataGenerator(rescale=1./255,
                                   rotation_range=40,
                                   width_shift_range=0.2,
                                   height_shift_range=0.2,
                                   shear_range=0.2,
                                   zoom_range=0.2,
                                   horizontal_flip=True,
                                   fill_mode='nearest')
test_datagen = ImageDataGenerator(rescale=1./255)

# Load the datasets, resizing images to 150x150
train_generator = train_datagen.flow_from_directory(train_dir,
                                                    target_size=(150, 150),
                                                    batch_size=20,
                                                    class_mode='binary')
validation_generator = test_datagen.flow_from_directory(validation_dir,
                                                        target_size=(150, 150),
                                                        batch_size=20,
                                                        class_mode='binary')

conv_base = VGG16(weights='imagenet', include_top=False, input_shape=(150, 150, 3))

# Step 1: add a custom classifier on top of VGG16
model = models.Sequential()
model.add(conv_base)  # use VGG16 directly as part of the model
model.add(layers.Flatten())  # flatten the VGG16 output tensor
# As covered in an earlier post, Dense layers with relu activation work well when the input is a vector
# and the output is a single value
model.add(layers.Dense(256, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))  # final classification layer; sigmoid because this is a binary problem

# Steps 2-3: freeze the conv base and train only the added layers
conv_base.trainable = False
model.compile(loss='binary_crossentropy', optimizer=optimizers.RMSprop(learning_rate=2e-5), metrics=['acc'])
model.fit(train_generator, steps_per_epoch=100, epochs=30,
          validation_data=validation_generator, validation_steps=50)

# Step 4: unfreeze every layer from block5_conv1 onward; earlier layers stay frozen
conv_base.trainable = True
set_trainable = False
for layer in conv_base.layers:
    if layer.name == 'block5_conv1':
        set_trainable = True
    layer.trainable = set_trainable

# Step 5: recompile (required after changing trainable) and jointly train the unfrozen layers
# and the classifier with a very low learning rate
model.compile(loss='binary_crossentropy', optimizer=optimizers.RMSprop(learning_rate=1e-5), metrics=['acc'])
history = model.fit(train_generator,
                    steps_per_epoch=100,
                    epochs=100,
                    validation_data=validation_generator,
                    validation_steps=50)
```

```python
# Plot the loss and accuracy curves
# Training accuracy, validation accuracy, training loss and validation loss
acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(1, len(acc) + 1)  # epoch numbers

# Training and validation accuracy: 'bo' draws blue dots, 'b' a solid blue line
plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and Validation accuracy')
plt.legend()
plt.figure()

# Training and validation loss
plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and Validation loss')
plt.legend()
plt.show()
```