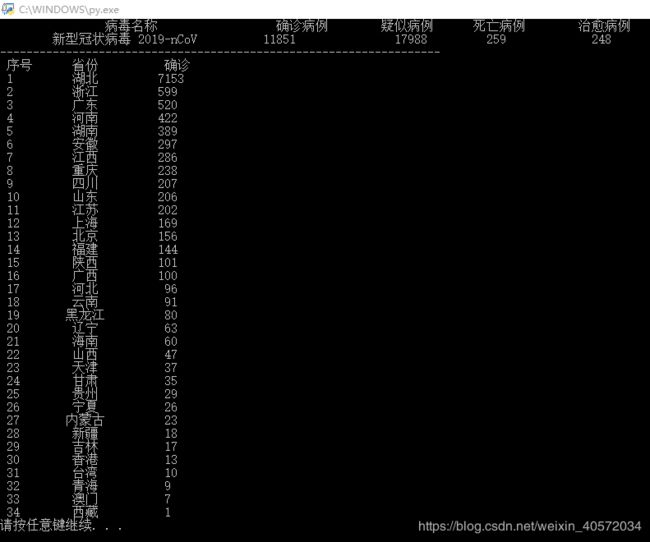

Epidemic Information Crawler

This only scrapes static web pages…

Center-aligning the output turned out to be really hard, so I gave up…
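Part of what makes centering hard is that `str.format` pads by character count, while a Chinese character usually takes two columns in a terminal. A minimal sketch of padding by display width instead, assuming a monospaced terminal that draws wide characters two columns wide (`display_width` and `center` are hypothetical helper names, not part of the scripts in this post):

```python
import unicodedata


def display_width(text):
    """Count terminal columns: wide and full-width characters take two."""
    return sum(2 if unicodedata.east_asian_width(ch) in ("W", "F") else 1
               for ch in text)


def center(text, width):
    """Center text within `width` columns, padding with ASCII spaces."""
    text = str(text)
    pad = max(width - display_width(text), 0)
    left = pad // 2
    return " " * left + text + " " * (pad - left)


# Both cells end up the same number of columns wide.
print(center("湖北", 10) + "|")
print(center(1234, 10) + "|")
```

Whether the columns really line up still depends on the font and terminal, so treat this as a starting point.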

The code is a bit of a jumble, but it will do.

Source URL

Code 1:

This version slices the page source so that what remains can be parsed as a list or a dict.

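    import json
    from os import system

    import requests
    from bs4 import BeautifulSoup


    def getHTMLText(url):
        """Fetch a page and return its text, or "" if the request fails."""
        try:
            head = {
                'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36'
            }
            r = requests.get(url, timeout=30, headers=head)
            r.raise_for_status()
            r.encoding = 'utf-8'
            return r.text
        except requests.RequestException:
            return ""


    def parsePage(ilt, html):
        """Print the summary row and append [province, confirmed] pairs to ilt."""
        try:
            soup = BeautifulSoup(html, 'html.parser')
            # Slice off the <script> tag and the JavaScript wrapper so that only
            # the JSON text is left. json.loads understands true/false/null and,
            # unlike eval, never executes downloaded text.
            total = json.loads(str(soup.find('script', id='getStatisticsService'))[69:-20])
            # chr(12288) is the full-width space, used as the fill character
            # for cells that contain Chinese text.
            tplt1 = "\t{0:{5}^12}\t{1:^8}\t{2:^8}\t{3:^4}\t{4:^4}"
            tplt2 = "\t{0:{5}^12}\t{1:{5}^14}\t{2:{5}^10}\t{3:{5}^6}\t{4:{5}^6}"
            # Header row: virus name, confirmed, suspected, deaths, cured.
            print(tplt1.format("病毒名称", "确诊病例", "疑似病例", "死亡病例", "治愈病例", chr(12288)))
            print(tplt2.format(total['virus'], total['confirmedCount'], total['suspectedCount'], total['deadCount'], total['curedCount'], chr(12288)))
            print("{0:-^80}".format(""))
            data = json.loads(str(soup.find('script', id='getAreaStat'))[51:-20])
            for temp in data:
                prov = temp['provinceShortName']
                conf = temp['confirmedCount']
                ilt.append([prov, conf])
        except (ValueError, KeyError, TypeError):
            # Empty download, or the page layout no longer matches the slices.
            print("")


    def printList(ilt):
        """Print one numbered row per province."""
        tplt = "{:^4}\t{:^8}\t{:^4}"
        # Header row: number, province, confirmed.
        print(tplt.format("序号", "省份", "确诊"))
        for count, g in enumerate(ilt, start=1):
            print(tplt.format(count, g[0], g[1]))


    def main():
        url = "https://ncov.dxy.cn/ncovh5/view/pneumonia_peopleapp"
        infoList = []
        # Both helpers handle their own errors, so no extra try/except here.
        html = getHTMLText(url)
        parsePage(infoList, html)
        printList(infoList)


    main()
    # Keeps the console window open on Windows; has no useful effect elsewhere.
    system('pause')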
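The fixed slice offsets in Code 1 stop working as soon as the page changes its wrapper by even one character. A minimal sketch of a less brittle way to pull out the data, assuming the script body has the form `try { window.NAME = <JSON>}catch(e){}` (inferred from the offsets above, not confirmed); `extract_json` is a hypothetical helper and the sample string is made-up placeholder data:

```python
import json
import re


def extract_json(text, name):
    """Parse the JSON value assigned to window.<name> inside text."""
    # Locate "window.<name> =" and start parsing right after it.
    m = re.search(r"window\." + re.escape(name) + r"\s*=\s*", text)
    if m is None:
        raise ValueError(f"no assignment to window.{name} found")
    # raw_decode parses one JSON value starting at the given index and
    # ignores whatever follows it (here: "}catch(e){}</script>").
    obj, _end = json.JSONDecoder().raw_decode(text, m.end())
    return obj


# Placeholder input, not real figures.
sample = ('<script id="getAreaStat">try { window.getAreaStat = '
          '[{"provinceShortName": "A", "confirmedCount": 1, "flag": false}]'
          '}catch(e){}</script>')
for row in extract_json(sample, "getAreaStat"):
    print(row["provinceShortName"], row["confirmedCount"])
```

In Code 1 this would be called with `str(soup.find('script', id='getAreaStat'))` in place of `sample`.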

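Code 2:

This version searches the page with regular expressions.

I did not know how to concatenate raw-string fragments, though, so the code that retrieves the summary statistics is very repetitive.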

    import json
    import re
    from os import system

    import requests
    from bs4 import BeautifulSoup


    def getHTMLText(url):
        """Fetch a page and return its text, or "" if the request fails."""
        try:
            head = {
                'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36'
            }
            r = requests.get(url, timeout=30, headers=head)
            r.raise_for_status()
            r.encoding = 'utf-8'
            return r.text
        except requests.RequestException:
            return ""


    def parsePage(total, ilt, html):
        """Fill total with the five summary values and ilt with [province, confirmed] pairs."""
        try:
            soup = BeautifulSoup(html, 'html.parser')
            data_temp = str(soup.find_all('script', id='getAreaStat'))
            data = re.findall(r'"provinceShortName":".*?","confirmedCount":\d+', data_temp)
            for a in data:
                # a looks like: "provinceShortName":"<name>","confirmedCount":<n>
                # json.loads strips the quotes from the name without using eval.
                prov = json.loads(a.split(',')[0].split(':')[1])
                conf = int(a.split(',')[1].split(':')[1])
                ilt.append([prov, conf])
            total_temp = str(soup.find_all('script', id='getStatisticsService'))
            total.append(json.loads(re.findall(r'"virus":".*?"', total_temp)[0].split(':')[1]))
            total.append(int(re.findall(r'"confirmedCount":\d+', total_temp)[0].split(':')[1]))
            total.append(int(re.findall(r'"suspectedCount":\d+', total_temp)[0].split(':')[1]))
            total.append(int(re.findall(r'"deadCount":\d+', total_temp)[0].split(':')[1]))
            total.append(int(re.findall(r'"curedCount":\d+', total_temp)[0].split(':')[1]))
        except (IndexError, ValueError):
            # A pattern found nothing, or a matched value could not be parsed.
            print("")


    def printList(tot, ilt):
        """Print the summary row (if complete), then one numbered row per province."""
        # chr(12288) is the full-width space, used as the fill character
        # for cells that contain Chinese text.
        tplt1 = "{0:{5}^12}\t{1:^8}\t{2:^8}\t{3:^4}\t{4:^4}"
        tplt2 = "{0:{5}^12}\t{1:{5}^14}\t{2:{5}^10}\t{3:{5}^6}\t{4:{5}^6}"
        # Skip the summary when parsing failed, instead of raising IndexError.
        if len(tot) >= 5:
            # Header row: virus name, confirmed, suspected, deaths, cured.
            print(tplt1.format("病毒名称", "确诊病例", "疑似病例", "死亡病例", "治愈病例", chr(12288)))
            print(tplt2.format(tot[0], tot[1], tot[2], tot[3], tot[4], chr(12288)))
            print("{0:-^80}".format(""))
        tplt = "{:^4}\t{:^8}\t{:^4}"
        # Header row: number, province, confirmed.
        print(tplt.format("序号", "省份", "确诊"))
        for count, g in enumerate(ilt, start=1):
            print(tplt.format(count, g[0], g[1]))


    def main():
        url = "https://ncov.dxy.cn/ncovh5/view/pneumonia_peopleapp"
        infoList = []
        Totalinfo = []
        # Both helpers handle their own errors, so no extra try/except here.
        html = getHTMLText(url)
        parsePage(Totalinfo, infoList, html)
        printList(Totalinfo, infoList)


    main()
    # Keeps the console window open on Windows; has no useful effect elsewhere.
    system('pause')
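On the repetition in Code 2: a raw string is only a way of writing a literal, so the resulting `str` concatenates with `+` like any other string. A minimal sketch of building one pattern per key in a loop (`find_counts` is a hypothetical helper, and the sample text is made-up placeholder data, not real figures):

```python
import re


def find_counts(text, keys):
    """Return {key: int} for every "key":<digits> pair found in text."""
    result = {}
    for key in keys:
        # Raw-string pieces and an ordinary string join with +; re.escape
        # guards against regex metacharacters in the key name.
        pattern = r'"' + re.escape(key) + r'"\s*:\s*(\d+)'
        match = re.search(pattern, text)
        if match:
            result[key] = int(match.group(1))
    return result


# Placeholder input, not real figures.
sample = '{"virus":"X","confirmedCount":12,"suspectedCount":3,"deadCount":1,"curedCount":4}'
keys = ["confirmedCount", "suspectedCount", "deadCount", "curedCount"]
print(find_counts(sample, keys))
```

The capture group `(\d+)` returns the number directly, so the `split(':')` step is no longer needed either.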