LeNet3D + tfrecords: a 3D convolution example with tf.nn.conv3d (volumetric image convolution for 3D medical images such as CT and fMRI)

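import numpy as np
import os

# Select the GPU and silence TensorFlow's C++ logging.
# Both variables must be set before TensorFlow is imported.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

# Remove TensorBoard logs left over from previous runs
# (-f avoids an error message when the directory does not exist).
os.system("rm -rf logs")

import tensorflow as tf  # TensorFlow 1.x API (tf.Session, queue runners)
import matplotlib.pyplot as plt
from PIL import Image
import multiprocessing

trainPath = '../tfrecords/train.tfrecords'
testPath = '../tfrecords/test.tfrecords'
valPath = '../tfrecords/val.tfrecords'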

def read_tfrecord(TFRecordPath):
    # Build the graph ops that read and decode one (image, label) example.
    # No session is needed here: the ops are only added to the default graph.
    feature = {
        'image': tf.FixedLenFeature([], tf.string),
        'label': tf.FixedLenFeature([], tf.int64),
        'person': tf.FixedLenFeature([], tf.int64),
    }
    filename_queue = tf.train.string_input_producer([TFRecordPath])
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(filename_queue)
    features = tf.parse_single_example(serialized_example, features = feature)
    # The volume is stored as raw float64 bytes; decode, cast, and restore
    # the [depth, height, width, channels] layout expected by tf.nn.conv3d.
    image = tf.decode_raw(features['image'], np.float64)
    image = tf.cast(image, tf.float32)
    image = tf.reshape(image, [31, 128, 128, 1])
    label = tf.cast(features['label'], tf.int32)
    return image, label
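
What `tf.decode_raw` followed by `tf.cast` and `tf.reshape` does to the `image` field can be reproduced offline with NumPy. The sketch below assumes the records were written by calling `tobytes()` on a float64 volume of shape (31, 128, 128); the writer script is not shown in this post, so `encode_volume` and `decode_volume` are hypothetical helpers, not part of the original code.

```python
import numpy as np

VOLUME_SHAPE = (31, 128, 128, 1)  # depth, height, width, channels, as in read_tfrecord


def encode_volume(volume):
    """Hypothetical writer side: serialize a volume as raw float64 bytes."""
    return np.asarray(volume, dtype=np.float64).tobytes()


def decode_volume(raw_bytes):
    """NumPy mirror of tf.decode_raw + tf.cast + tf.reshape in read_tfrecord."""
    flat = np.frombuffer(raw_bytes, dtype=np.float64)
    return flat.astype(np.float32).reshape(VOLUME_SHAPE)


volume = np.random.rand(31, 128, 128)
restored = decode_volume(encode_volume(volume))
print(restored.shape, restored.dtype)
```

The dtype passed to the decoder has to match the one used when writing. Reading the same float64 bytes as float32 would yield twice as many values, and the reshape to `[31, 128, 128, 1]` would then fail.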

def conv3d_layer(X, k, s, channels_in, channels_out, name = 'CONV'):
    with tf.name_scope(name):
        W = tf.Variable(tf.truncated_normal([k, k, k, channels_in, channels_out], stddev = 0.1))
        b = tf.Variable(tf.constant(0.01, shape = [channels_out]))
        conv = tf.nn.conv3d(X, W, strides = [1, s, s, s, 1], padding = 'SAME')
        result = tf.nn.relu(conv + b)
        tf.summary.histogram('weights', W)
        tf.summary.histogram('biases', b)
        tf.summary.histogram('activations', result)
        return result
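
To get a feel for how large each `conv3d_layer` is, the number of trainable parameters follows directly from the shapes of `W` and `b` above. The snippet below is a small sketch in plain Python; `conv3d_param_count` is a hypothetical helper, and the layer configurations are the ones passed to `conv3d_layer` in `Network`.

```python
def conv3d_param_count(k, channels_in, channels_out):
    """Weights of a k x k x k kernel plus one bias per output channel."""
    return k ** 3 * channels_in * channels_out + channels_out


# (k, channels_in, channels_out) for the three conv layers used in Network
layers = {"conv1": (3, 1, 12), "conv2": (3, 12, 48), "conv3": (3, 48, 24)}
counts = {name: conv3d_param_count(*cfg) for name, cfg in layers.items()}
print(counts)  # {'conv1': 336, 'conv2': 15600, 'conv3': 31128}
```

The cubic `k ** 3` term is what separates this from the 2D case, where a 3 x 3 kernel contributes 9 weights per channel pair instead of 27.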

def pool3d_layer(X, k, s, strr = 'SAME', pool_type = 'MAX'):
    if pool_type == 'MAX':
        result = tf.nn.max_pool3d(X,
                                  ksize = [1, k, k, k, 1],
                                  strides = [1, s, s, s, 1],
                                  padding = strr)
    else:
        result = tf.nn.avg_pool3d(X,
                                  ksize = [1, k, k, k, 1],
                                  strides = [1, s, s, s, 1],
                                  padding = strr)
    return result
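
With `'SAME'` padding, each spatial dimension shrinks to `ceil(size / stride)`, regardless of the kernel size. That rule is enough to derive the flattened size `3 * 11 * 11 * 24` that `Network` feeds into its first fully connected layer. The following is a minimal sketch in plain Python; `same_padding_out` and `trace_pooling` are hypothetical helper names, and the strides 3, 2, 2 are those of the three pooling layers in `Network` (the stride-1 convolutions leave the spatial size unchanged).

```python
import math


def same_padding_out(size, stride):
    """Output length of one dimension under 'SAME' padding."""
    return math.ceil(size / stride)


def trace_pooling(shape, strides):
    """Apply successive 'SAME' pooling strides to a (depth, height, width) shape."""
    shapes = [tuple(shape)]
    for s in strides:
        shape = tuple(same_padding_out(d, s) for d in shape)
        shapes.append(shape)
    return shapes


shapes = trace_pooling((31, 128, 128), strides=[3, 2, 2])
print(shapes)  # [(31, 128, 128), (11, 43, 43), (6, 22, 22), (3, 11, 11)]

depth, height, width = shapes[-1]
flat_size = depth * height * width * 24  # 24 output channels after conv3
print(flat_size)  # 8712
```

If the input volume size or any pooling stride changes, the reshape in `Network` has to be updated to match; computing it this way avoids hard-coding a stale value.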

def fc_layer(X, neurons_in, neurons_out, last = False, name = 'FC'):
    with tf.name_scope(name):
        W = tf.Variable(tf.truncated_normal([neurons_in, neurons_out], stddev = 0.1))
        b = tf.Variable(tf.constant(0.01, shape = [neurons_out]))
        tf.summary.histogram('weights', W)
        tf.summary.histogram('biases', b)
        if not last:
            result = tf.nn.relu(tf.matmul(X, W) + b)
        else:
            result = tf.matmul(X, W) + b
        tf.summary.histogram('activations', result)
        return result
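
The `last` flag in `fc_layer` matters because the final layer must output raw logits: `tf.nn.softmax_cross_entropy_with_logits` applies the softmax itself, and a ReLU in front of it would clip every negative score to zero. The toy example below illustrates the difference for a single input vector in plain Python; `dense_forward` and the numbers are made up for illustration.

```python
def dense_forward(x, W, b, last=False):
    """Affine map x @ W + b for one input vector, with ReLU unless last=True."""
    z = [sum(xi * W[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]
    return z if last else [max(v, 0.0) for v in z]


x = [1.0, -2.0]
W = [[1.0, -1.0],
     [0.5, 1.0]]
b = [0.01, 0.01]

hidden = dense_forward(x, W, b)             # negative pre-activation is clipped to 0
logits = dense_forward(x, W, b, last=True)  # negative score is preserved
print(hidden, logits)
```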

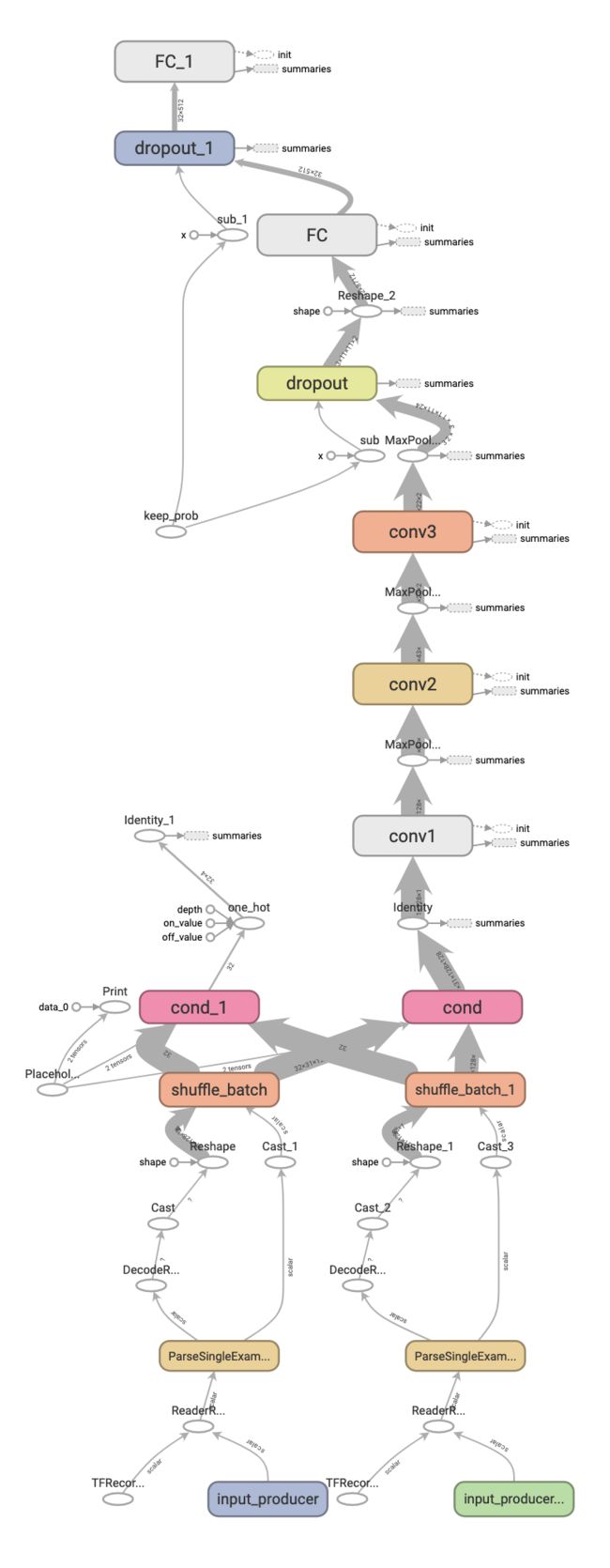

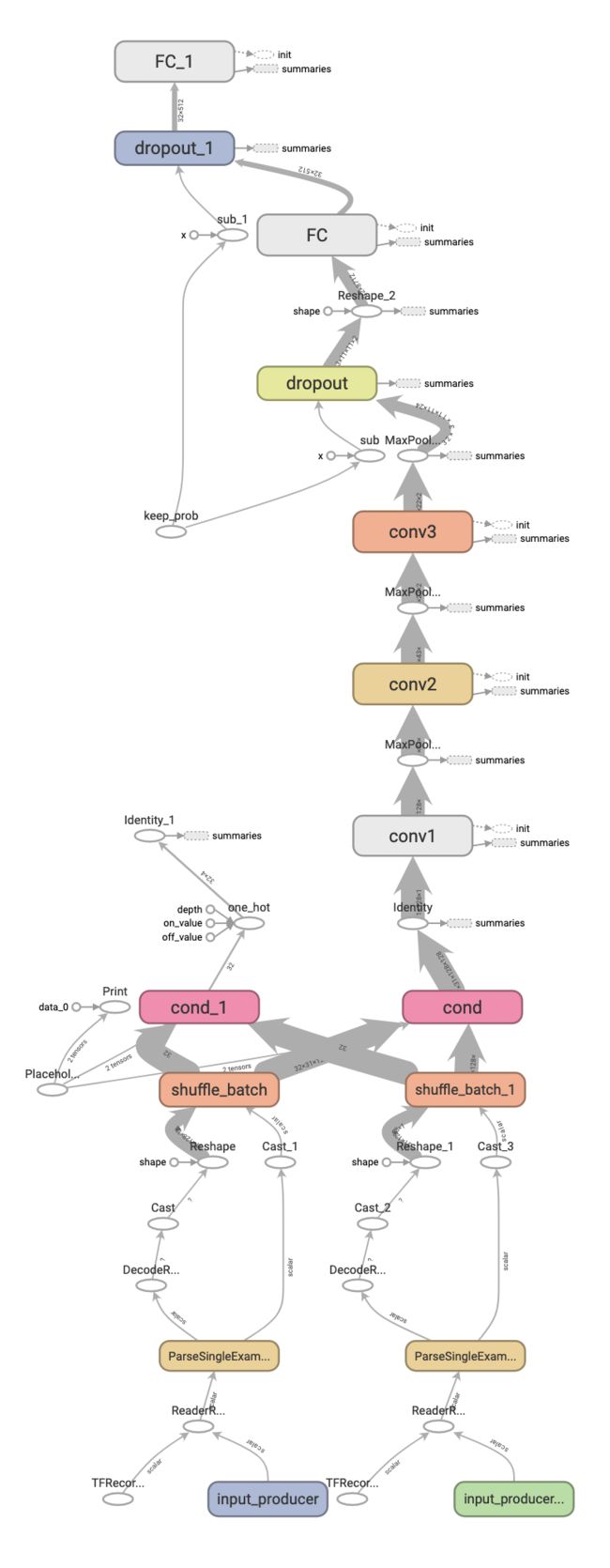

def Network(BatchSize, learning_rate):
    tf.reset_default_graph()
    with tf.Session() as sess:
        is_training = tf.placeholder(dtype = tf.bool, shape=())
        keep_prob = tf.placeholder('float32', name = 'keep_prob')
        judge = tf.Print(is_training, ['is_training:', is_training])

        image_train, label_train = read_tfrecord(trainPath)
        image_val, label_val = read_tfrecord(valPath)

        image_train_Batch, label_train_Batch = tf.train.shuffle_batch([image_train, label_train],
                                                                      batch_size = BatchSize,
                                                                      capacity = BatchSize*3 + 200,
                                                                      min_after_dequeue = BatchSize)
        image_val_Batch, label_val_Batch = tf.train.shuffle_batch([image_val, label_val],
                                                                  batch_size = BatchSize,
                                                                  capacity = BatchSize*3 + 200,
                                                                  min_after_dequeue = BatchSize)

        # Switch between the training and validation queues at run time.
        # Note: the batch ops are created outside the tf.cond branches, so both
        # queues are dequeued on every run, whichever branch is selected.
        image_Batch = tf.cond(is_training, lambda: image_train_Batch, lambda: image_val_Batch)
        label_Batch = tf.cond(is_training, lambda: label_train_Batch, lambda: label_val_Batch)
        label_Batch = tf.one_hot(label_Batch, depth = 4)

        X = tf.identity(image_Batch)
        y = tf.identity(label_Batch)

        # Three conv + max-pool stages; the convolutions use stride 1.
        conv1 = conv3d_layer(X, 3, 1, 1, 12, "conv1")
        pool1 = pool3d_layer(conv1, 3, 3, "SAME", "MAX")
        conv2 = conv3d_layer(pool1, 3, 1, 12, 48, 'conv2')
        pool2 = pool3d_layer(conv2, 2, 2, "SAME", "MAX")
        conv3 = conv3d_layer(pool2, 3, 1, 48, 24, 'conv3')
        pool3 = pool3d_layer(conv3, 2, 2, "SAME", "MAX")
        print(pool3.shape)  # (BatchSize, 3, 11, 11, 24)

        # Flatten, then two fully connected layers with dropout in between.
        drop1 = tf.nn.dropout(pool3, keep_prob)
        fc1 = fc_layer(tf.reshape(drop1, [-1, 3 * 11 * 11 * 24]), 3 * 11 * 11 * 24, 512)
        drop2 = tf.nn.dropout(fc1, keep_prob)
        y_result = fc_layer(drop2, 512, 4, True)  # logits for the 4 classes
        print(y_result.shape)

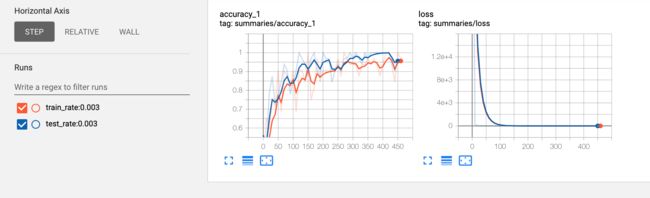

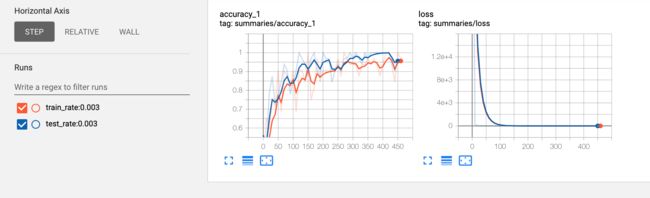

        with tf.name_scope('summaries'):
            cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits = y_result, labels = y))
            train_step = tf.train.AdamOptimizer(learning_rate).minimize(cross_entropy)
            correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_result, 1))
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, 'float'), name = 'accuracy')
            tf.summary.scalar("loss", cross_entropy)
            tf.summary.scalar("accuracy", accuracy)

        init_op = tf.group(tf.global_variables_initializer(), tf.local_variables_initializer())
        sess.run(init_op)

        # Start the queue-runner threads that fill the input queues.
        coord = tf.train.Coordinator()
        threads = tf.train.start_queue_runners(coord = coord)

        merge_summary = tf.summary.merge_all()
        summary_train_writer = tf.summary.FileWriter("./logs/train" + '_rate:' + str(learning_rate), sess.graph)
        summary_val_writer = tf.summary.FileWriter("./logs/test" + '_rate:' + str(learning_rate))

        try:
            batch_index = 0
            while not coord.should_stop():
                sess.run([train_step], feed_dict = {keep_prob: 0.5, is_training: True})

                if batch_index % 10 == 0:
                    # Evaluate with dropout disabled. train_step is deliberately not
                    # fetched here, so logging does not update the weights.
                    summary_train, acc_train, loss_train = sess.run([merge_summary, accuracy, cross_entropy], feed_dict = {keep_prob: 1.0, is_training: True})
                    summary_train_writer.add_summary(summary_train, batch_index)
                    print(str(batch_index) + ' train:' + ' ' + str(acc_train) + ' ' + str(loss_train))

                    summary_val, acc_val, loss_val = sess.run([merge_summary, accuracy, cross_entropy], feed_dict = {keep_prob: 1.0, is_training: False})
                    summary_val_writer.add_summary(summary_val, batch_index)
                    print(str(batch_index) + ' val: ' + ' ' + str(acc_val) + ' ' + str(loss_val))

                batch_index += 1
                if batch_index > 3000:
                    break
        except tf.errors.OutOfRangeError:
            print("OutofRangeError!")
        finally:
            print("Finish")
            coord.request_stop()
            coord.join(threads)
            # The enclosing with block closes the session.
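
The loss and accuracy logged during training can be checked by hand on a tiny batch. The sketch below recomputes, in plain Python, what `tf.nn.softmax_cross_entropy_with_logits` and the `argmax` comparison produce per example; the function names and the two example rows are made up for illustration, and the numerically stable log-sum-exp form is one common way to write it, not necessarily how TensorFlow implements it internally.

```python
import math


def softmax_cross_entropy(logits, one_hot):
    """Cross-entropy of softmax(logits) against a one-hot label, for one example."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return -sum(t * (v - log_sum) for v, t in zip(logits, one_hot))


def is_correct(logits, one_hot):
    """True when the highest logit is at the labelled class."""
    return logits.index(max(logits)) == one_hot.index(max(one_hot))


batch_logits = [[2.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
batch_labels = [[1, 0, 0, 0], [0, 0, 0, 1]]  # classes 0 and 3, one-hot with depth 4

losses = [softmax_cross_entropy(l, t) for l, t in zip(batch_logits, batch_labels)]
mean_loss = sum(losses) / len(losses)
accuracy = sum(is_correct(l, t) for l, t in zip(batch_logits, batch_labels)) / len(batch_logits)
print(losses, mean_loss, accuracy)
```

The second row has uniform logits, so its loss is `log(4)`, about 1.386. That is the value to expect from an untrained 4-class model, and a useful sanity check against the first few numbers printed by the training loop.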

def main():
    for rate in (0.003, 0.001):
        try:
            Network(32, rate)
        except KeyboardInterrupt:
            pass

if __name__ == '__main__':
    main()