Memory Management (7): Physical Memory Organization

Contents

- 1 Architecture

- 2 Memory Models

- 3 Sparse Memory

Linux version: 4.14.74

Hardware: ARMv8 (Cortex-A53)

1 Architecture

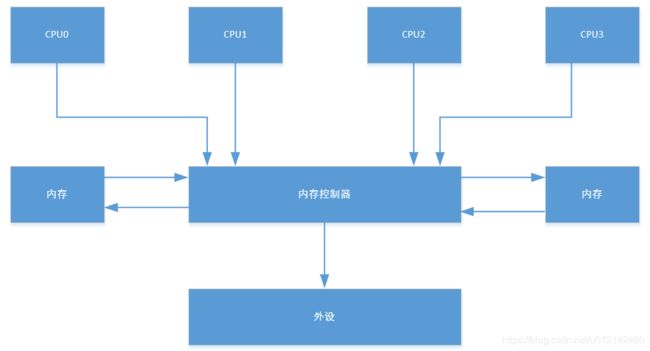

There are currently two architectures for multiprocessor systems:

1. Symmetric Multi-Processor (SMP), i.e. Uniform Memory Access (UMA). A UMA system has the following characteristics:

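- All hardware resources are shared: every processor can access all memory and peripherals in the system.

- All processors are peers.

- Memory is accessed through a single, uniform address space.

- Processors and memory are connected through a single internal bus.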

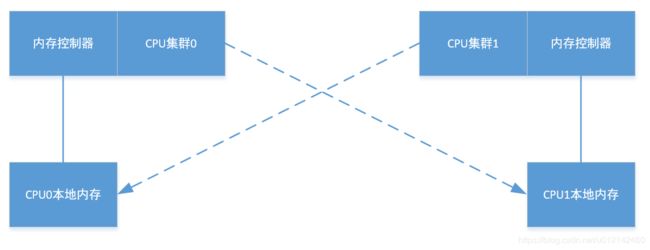

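2. Non-Uniform Memory Access (NUMA): memory is divided into multiple memory nodes, and the time needed to access a node depends on the distance between the processor and that node. Each processor has a local memory node, and accessing it is faster than accessing any other node. NUMA is the mainstream architecture for mid-range and high-end servers.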

2 Memory Models

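A memory model describes the layout of physical memory as seen by the processor. The kernel's memory management subsystem supports three memory models:

- Flat memory: the physical address space is contiguous, with no holes.

- Discontiguous memory: the physical address space contains holes, and this model handles them efficiently.

- Sparse memory: the physical address space may contain holes, and memory hot-plug is supported.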

The page frame concept

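Linux manages memory in units of pages. The page size depends on the hardware and on the kernel configuration; 4 KB is the most common. Physical memory is divided into pages, and the memory region represented by each page is called a page frame. Each page frame has a corresponding struct page. Page frames are numbered, and this number is the page frame number (PFN). If physical memory starts at address 0, the page frame with PFN 0 is the page starting at physical address 0. If physical memory starts at address x, the first PFN is (x >> PAGE_SHIFT).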

3 Sparse Memory

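My system uses the sparse memory model, so I will use it as the example. Since sparse memory was introduced, the discontiguous memory model has become much less important. Sparse memory is expected to replace discontiguous memory eventually, and that replacement is in progress.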

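In the sparse model, physical memory is divided into SECTIONs. With SECTION_SIZE_BITS = 30, as in the arm64 configuration used here, one SECTION is 1 GB. Memory inside a SECTION is contiguous, and the SECTION is the unit of memory hot-plug.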

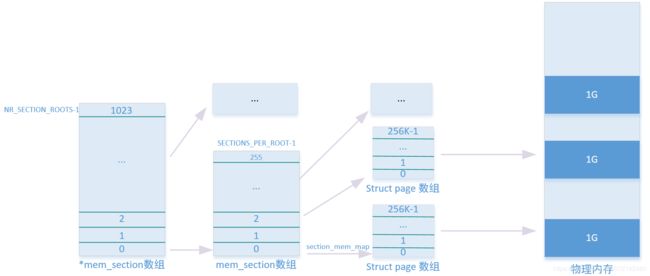

Linux uses a double pointer (a two-dimensional array) to describe sparse memory:

struct mem_section **mem_section;

struct mem_section {

unsigned long section_mem_map;

/* See declaration of similar field in struct zone */

unsigned long *pageblock_flags;

#ifdef CONFIG_PAGE_EXTENSION

/*

* If SPARSEMEM, pgdat doesn't have page_ext pointer. We use

* section. (see page_ext.h about this.)

*/

struct page_ext *page_ext;

unsigned long pad;

#endif

/*

* WARNING: mem_section must be a power-of-2 in size for the

* calculation and use of SECTION_ROOT_MASK to make sense.

*/

};

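The section_mem_map field of mem_section points to the struct page array that describes the 1 GB of memory belonging to that section. Its lowest bits are also reused to store flags, such as the present and online bits set later in memory_present().

So one mem_section describes one 1 GB section. Next, we look at the code to understand the sparse model in more detail.

The following macros are used throughout the sparse memory code: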

#define MAX_PHYSMEM_BITS 48 // maximum physical address width: 48 bits

#define SECTION_SIZE_BITS 30 // a SECTION is 1 GB (30 bits)

/* number of bits needed to index a SECTION (48 - 30 = 18) */

#define SECTIONS_SHIFT (MAX_PHYSMEM_BITS - SECTION_SIZE_BITS)

/* total number of SECTIONs: 2^18 = 256K */

#define NR_MEM_SECTIONS (1UL << SECTIONS_SHIFT)

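/* The elements of the two-level array are called root sections, and the entries

* that a root section pointer refers to are called sections. The system can have 1024 root sections,

* each holding 256 sections of 1 GB, covering the whole 48-bit (256 TB) physical address space */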

#define NR_SECTION_ROOTS DIV_ROUND_UP(NR_MEM_SECTIONS, SECTIONS_PER_ROOT) //1024

#define SECTIONS_PER_ROOT (PAGE_SIZE / sizeof (struct mem_section)) //256, with 4 KB pages and a 16-byte struct mem_section

/*pfn to/from section number (18) */

#define PFN_SECTION_SHIFT (SECTION_SIZE_BITS - PAGE_SHIFT)

/* number of pages in one section: 256K */

#define PAGES_PER_SECTION (1UL << PFN_SECTION_SHIFT)

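The basic layout of the sparse model is shown in the figure above. struct mem_section **mem_section describes all of memory and can cover at most 256 TB. It contains 1024 first-level pointers (*mem_section), and each of these is called a root section. One root section holds 256 mem_section entries and therefore covers 256 GB. One mem_section covers 1 GB, and its section_mem_map field points to an array of struct page. The kernel virtual address space has a dedicated region for storing these struct page arrays: the VMEMMAP region.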

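The page frame number (PFN) was introduced earlier. The following macros convert between a page and its PFN, and they are used very frequently: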

#define __pfn_to_page(pfn) (vmemmap + (pfn))

#define __page_to_pfn(page) (unsigned long)((page) - vmemmap)

#define vmemmap ((struct page *)VMEMMAP_START - (memstart_addr >> PAGE_SHIFT))

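In the sparse model with vmemmap, all struct page arrays are stored in the VMEMMAP region and laid out contiguously in virtual address space. Only the ranges belonging to present sections are actually backed by memory. This makes the PFN-to-page conversion simple: add the PFN as an index to vmemmap. vmemmap is VMEMMAP_START shifted back by memstart_addr >> PAGE_SHIFT, so the first PFN of RAM lands at VMEMMAP_START. The reverse conversion is similar. Subtracting two struct page * pointers gives the page's offset from vmemmap, counted in struct page elements, and that offset is the PFN.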

Converting a PFN to a section:

static inline struct mem_section *__nr_to_section(unsigned long nr)

{

#ifdef CONFIG_SPARSEMEM_EXTREME

if (!mem_section)

return NULL;

#endif

if (!mem_section[SECTION_NR_TO_ROOT(nr)])

return NULL;

return &mem_section[SECTION_NR_TO_ROOT(nr)][nr & SECTION_ROOT_MASK];

}

static inline unsigned long pfn_to_section_nr(unsigned long pfn)

{

return pfn >> PFN_SECTION_SHIFT;

}

static inline struct mem_section *__pfn_to_section(unsigned long pfn)

{

return __nr_to_section(pfn_to_section_nr(pfn));

}

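Given a PFN, pfn_to_section_nr() yields the section number. __nr_to_section() then uses that number to find the mem_section in struct mem_section **mem_section. SECTION_NR_TO_ROOT(nr) selects the root section, and nr & SECTION_ROOT_MASK selects the entry within it.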

void __init bootmem_init(void)

{

unsigned long min, max;

min = PFN_UP(memblock_start_of_DRAM());

max = PFN_DOWN(memblock_end_of_DRAM());

early_memtest(min << PAGE_SHIFT, max << PAGE_SHIFT);

max_pfn = max_low_pfn = max;

arm64_numa_init();

/*

* Sparsemem tries to allocate bootmem in memory_present(), so must be

* done after the fixed reservations.

*/

arm64_memory_present(); /*********1**********/

sparse_init(); /**********2*******/

zone_sizes_init(min, max);

memblock_dump_all();

}

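- arm64_memory_present(): allocates the mem_section two-level array and performs some basic initialization.

- sparse_init(): initializes sparse memory, including mapping the struct page arrays and initializing usemap_map.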

arm64_memory_present() mainly calls memory_present(), so let's look at that function:

/* Record a memory area against a node. */

void __init memory_present(int nid, unsigned long start, unsigned long end)

{

unsigned long pfn;

#ifdef CONFIG_SPARSEMEM_EXTREME

/*****************1**************/

if (unlikely(!mem_section)) {

unsigned long size, align;

size = sizeof(struct mem_section*) * NR_SECTION_ROOTS;

align = 1 << (INTERNODE_CACHE_SHIFT);

mem_section = memblock_virt_alloc(size, align);

}

#endif

start &= PAGE_SECTION_MASK;

mminit_validate_memmodel_limits(&start, &end);

/************2**************/

for (pfn = start; pfn < end; pfn += PAGES_PER_SECTION) {

/* Convert a page frame number to a section number. A section holds 256K

* pages, so shift right by PFN_SECTION_SHIFT (18 bits, i.e. 256K pages).

*/

/*********3*********/

unsigned long section = pfn_to_section_nr(pfn);

struct mem_section *ms;

/************4**********/

sparse_index_init(section, nid);

set_section_nid(section, nid);

/************5**********/

ms = __nr_to_section(section);

/**********6*************/

if (!ms->section_mem_map) {

ms->section_mem_map = sparse_encode_early_nid(nid) |

SECTION_IS_ONLINE;

section_mark_present(ms);

}

}

}

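(1) Runs only once, while mem_section is still NULL: allocates the first level of the **mem_section array (NR_SECTION_ROOTS pointers).

(2) Walks the range one section (1 GB) at a time; start and end are both PFNs.

(3) Gets the section number from the PFN.

(4) Allocates the root section that contains this section if it does not exist yet; one root section holds 256 sections (256 GB).

(5) Gets the mem_section from its section number.

(6) Performs some simple initialization: stores the node id and the SECTION_IS_ONLINE flag in section_mem_map, then marks the section present.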

The main job of arm64_memory_present() is to allocate space for the mem_section arrays and perform some simple initialization. Next, sparse_init() finishes initializing each 1 GB struct mem_section:

/*

* Allocate the accumulated non-linear sections, allocate a mem_map

* for each and record the physical to section mapping.

*/

void __init sparse_init(void)

{

unsigned long pnum;

struct page *map;

unsigned long *usemap;

unsigned long **usemap_map;

int size;

#ifdef CONFIG_SPARSEMEM_ALLOC_MEM_MAP_TOGETHER

int size2;

struct page **map_map;

#endif

/* see include/linux/mmzone.h 'struct mem_section' definition */

BUILD_BUG_ON(!is_power_of_2(sizeof(struct mem_section)));

/* Setup pageblock_order for HUGETLB_PAGE_SIZE_VARIABLE */

set_pageblock_order();

/*

* map is using big page (aka 2M in x86 64 bit)

* usemap is less one page (aka 24 bytes)

* so alloc 2M (with 2M align) and 24 bytes in turn will

* make next 2M slip to one more 2M later.

* then in big system, the memory will have a lot of holes...

* here try to allocate 2M pages continuously.

*

* powerpc need to call sparse_init_one_section right after each

* sparse_early_mem_map_alloc, so allocate usemap_map at first.

*/

/**********1*************/

size = sizeof(unsigned long *) * NR_MEM_SECTIONS;

usemap_map = memblock_virt_alloc(size, 0);

if (!usemap_map)

panic("can not allocate usemap_map\n");

alloc_usemap_and_memmap(sparse_early_usemaps_alloc_node,

(void *)usemap_map);

#ifdef CONFIG_SPARSEMEM_ALLOC_MEM_MAP_TOGETHER

size2 = sizeof(struct page *) * NR_MEM_SECTIONS;

map_map = memblock_virt_alloc(size2, 0);

if (!map_map)

panic("can not allocate map_map\n");

alloc_usemap_and_memmap(sparse_early_mem_maps_alloc_node,

(void *)map_map);

#endif

for_each_present_section_nr(0, pnum) {

usemap = usemap_map[pnum];

if (!usemap)

continue;

#ifdef CONFIG_SPARSEMEM_ALLOC_MEM_MAP_TOGETHER

map = map_map[pnum];

#else

/******************2**************/

map = sparse_early_mem_map_alloc(pnum);

#endif

if (!map)

continue;

/*******************3**************/

sparse_init_one_section(__nr_to_section(pnum), pnum, map,

usemap);

}

vmemmap_populate_print_last();

#ifdef CONFIG_SPARSEMEM_ALLOC_MEM_MAP_TOGETHER

memblock_free_early(__pa(map_map), size2);

#endif

memblock_free_early(__pa(usemap_map), size);

}

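1. Allocate a usemap for each section. The details of the usemap are not covered in this article.

2. Allocate memory for the struct page array and map it into the vmemmap region.

3. Initialize the section, i.e. set its section_mem_map field so that it points to the struct page array.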

The call chain that allocates the struct page arrays in step 2 is:

sparse_early_mem_map_alloc -> sparse_mem_map_populate -> vmemmap_populate

static struct page __init *sparse_early_mem_map_alloc(unsigned long pnum)

{

struct page *map;

struct mem_section *ms = __nr_to_section(pnum);

int nid = sparse_early_nid(ms);

map = sparse_mem_map_populate(pnum, nid);

if (map)

return map;

pr_err("%s: sparsemem memory map backing failed some memory will not be available\n",

__func__);

ms->section_mem_map = 0;

return NULL;

}

struct page * __meminit sparse_mem_map_populate(unsigned long pnum, int nid)

{

unsigned long start;

unsigned long end;

struct page *map;

/**************1***************/

map = pfn_to_page(pnum * PAGES_PER_SECTION);

start = (unsigned long)map;

/**********2****************/

end = (unsigned long)(map + PAGES_PER_SECTION);

/***************3****************/

if (vmemmap_populate(start, end, nid))

return NULL;

return map;

}

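- Get the virtual address, inside the vmemmap region, of the section's struct page array.

- end is the end address of this section's struct page array. A 1 GB section needs 256K struct page entries, and each struct page is 64 bytes, so 1 GB of memory needs 16 MB of vmemmap virtual space.

- Allocate memory for the struct page array and map it into the vmemmap region.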

int __meminit vmemmap_populate(unsigned long start, unsigned long end, int node)

{

unsigned long addr = start;

unsigned long next;

pgd_t *pgd;

pud_t *pud;

pmd_t *pmd;

/*****************1***************/

do {

next = pmd_addr_end(addr, end);

/************2*************/

pgd = vmemmap_pgd_populate(addr, node);

if (!pgd)

return -ENOMEM;

pud = vmemmap_pud_populate(pgd, addr, node);

if (!pud)

return -ENOMEM;

pmd = pmd_offset(pud, addr);

if (pmd_none(*pmd)) {

void *p = NULL;

/****************3************/

p = vmemmap_alloc_block_buf(PMD_SIZE, node);

if (!p)

return -ENOMEM;

set_pmd(pmd, __pmd(__pa(p) | PROT_SECT_NORMAL));

} else

vmemmap_verify((pte_t *)pmd, node, addr, next);

} while (addr = next, addr != end);

return 0;

}

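- vmemmap is mapped with 2 MB blocks, so a 16 MB region takes 8 mappings.

- Get the pgd, pud, and pmd in turn. The whole system uses a single set of kernel pgd entries, so no pgd needs to be allocated here. pud and pmd tables are allocated as needed, each as a page-sized block obtained through vmemmap_alloc_block.

- vmemmap_alloc_block_buf allocates a PMD_SIZE (2 MB) block, which is also ultimately done through vmemmap_alloc_block. The pmd entry is then filled in to map that block.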

Because the slab allocator is not yet available at this point, vmemmap_alloc_block allocates memory from memblock.