从零开始用node.js爬虫

现在互联网上python培训班广告铺天盖地,与之有着紧密联系的爬虫一词对于我这样一个新手来说也是耳熟能详。本学期学院开设了web编程课,第一次实验项目的主要内容也是爬虫,不过是用node.js来实现,这对于没有接触过JavaScript的我来说是又新奇又富有挑战呀。

本次实验的内容是新闻爬虫及爬取结果的查询网站。具体要求如下:

- 核心需求:

(1) 选取3-5个代表性的新闻网站(比如新浪新闻、网易新闻等,或者某个垂直领域权威性的网站比如经济领域的雪球财经、东方财富等,或者体育领域的腾讯体育、虎扑体育等等),建立爬虫,针对不同网站的新闻页面进行分析,爬取出编码、标题、作者、时间、关键词、摘要、内容、来源等结构化信息,存储在数据库中。

(2) 建立网站提供对爬取内容的分项全文搜索,给出所查关键词的时间热度分析。 - 技术要求:

(1) 必须采用Node.JS实现网络爬虫

(2) 必须采用Node.JS实现查询网站后端、HTML+JS实现前端(尽量不要使用任何前后端框架)。

首先大致了解了一下用node.js实现基础的爬虫之前要做的事情:

- 下载node.js

- 安装request包和cheerio包

request是一个node.js的模板库,可以轻松地完成http请求。

cheerio也是一个node.js的模板库,它可以从html的片段中构建DOM(文档对象模型)结构,然后提供像jQuery一样的css选择器查询。

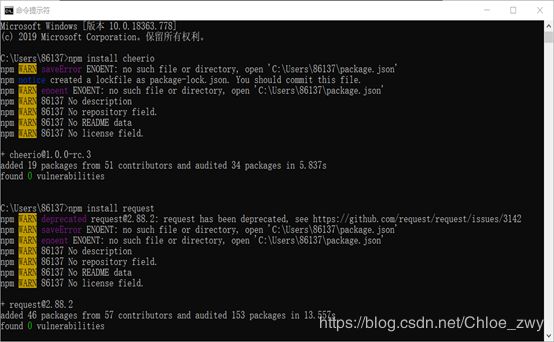

注意:cheerio和request不是Node.js自带的,需要npm install cheerio和npm install request进行第三方安装。

这里我是在Windows中的命令提示符里完成的:

看到这么多warn我以为出师未捷身先死了,但是在后续的代码运行中似乎并没有什么影响,于是就不在此纠结了,希望有空能回顾研究一下…

一、对项目示例1的学习及运用

然后就开始学习老师提供的项目示例1了:

这是基于node.js开始用Cheerio和Request做的一个最简单的爬虫代码示例,是以我校网站为例,爬:http://www.ecnu.edu.cn/e5/bc/c1950a255420/page/htm

代码如下:

var myRequest = require('request')

var myCheerio = require('cheerio')

var myURL = 'https://www.ecnu.edu.cn/e5/bc/c1950a255420/page.htm'

function request(url, callback) {//request module fetching url

var options = {

url: url, encoding: null, headers: null

}

myRequest(options, callback)

}

request(myURL, function (err, res, body) {

var html = body;

var $ = myCheerio.load(html, { decodeEntities: false });

console.log($.html());

//console.log("title: " + $('title').text());

//console.log("description: " + $('meta[name="description"]').eq(0).attr("content"));

})

作为一个从零开始的真·小白,刚看到这段代码的时候是懵比的,于是在跑代码之前先大概分析了一下这段代码:

第1&2行:引入Cheerio包和Request包;

第3行:把具体需要爬取的URL定义在myURL这个变量里面;

第4行:创建request函数;

调用request函数:把具体URL里的信息爬取出来,放到html的变量var $里面,然后用console.log把这个html打印出来。

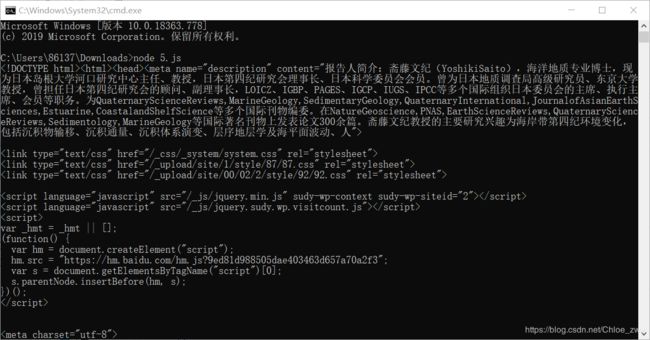

运行结果如下:

其中request包和cheerio包的具体作用:

request包直接把html页面爬下来,把具体的文档信息存储在本地变量里面

cheerio包是一个解析的作用,比如这段代码:var $ = myCheerio load(html,{ decodeEntities; false }); cheerio里把具体的报道的html页面存下来,放在 $ 变量里面,然后就可以用myCheerio包来做一个具体的文档里面信息的解析。

把这行代码

console.log($.html());

替换为:

console.log("title: " + $('title').text());

console.log("description: " + $('meta[name="description"]').eq(0).attr("content"));

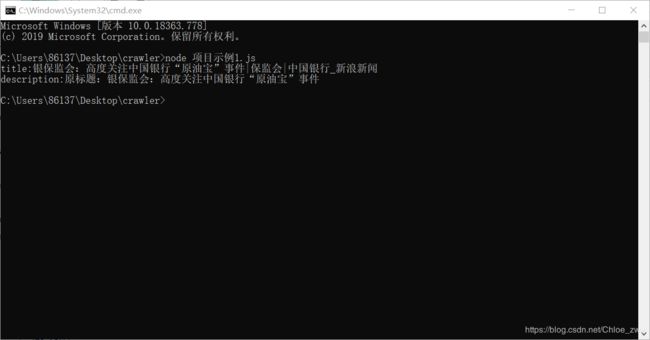

就可以看到cheerio包的作用了,修改后运行结果如下:

title和description这两个模块化信息就被摘录出来啦。

理解了老师的代码后就开始试着修改它,这段时间“原油宝”还蛮热门的,我就去中国新闻网上找了一篇新闻,将它的url替换示例中的url:

var myRequest = require('request')

var myCheerio = require('cheerio')

var myURL = 'https://news.sina.com.cn/c/2020-05-01/doc-iircuyvi0875852.shtml'

function request(url,callback){//request mmodule fetching url}

var options = {

url:url, encoding:null, headers:null

}

myRequest(options,callback)

}

request(myURL,function(err,res,body){

var html = body;

var $ = myCheerio.load(html,{decodeEntities:false});

//console.log($.html());

console.log("title:"+$('title').text());

console.log("description:"+$('meta[name="description"]').eq(0).attr("content"));

})

以上只是爬取单个页面的信息的最简单的例子,要满足本次实验的需求,还需丰富我们的代码。

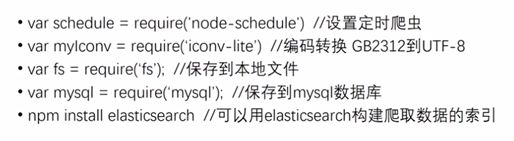

如下是一些类似request和cheerio的模板库,可以发挥巨大作用:

安装也需要:npm install ***

这些包的使用在后续的项目示例中有逐渐涉及。

二、对项目示例2的学习及运用

刚才简单地爬取了单个页面的信息,这个示例中老师向我们示范了如何爬取一个新闻网站种子页面中所包含的各新闻的信息。

整个流程分为五步:

- 读取种子页面

- 分析出种子页面里的所有新闻链接

- 爬取所有新闻链接的内容

- 分析新闻页面内容,解析出结构化数据

- 将结构化数据保存到本地文件

以中国新闻网为例:

可以分析出种子页面中所有新闻链接的格式,类似:

![]()

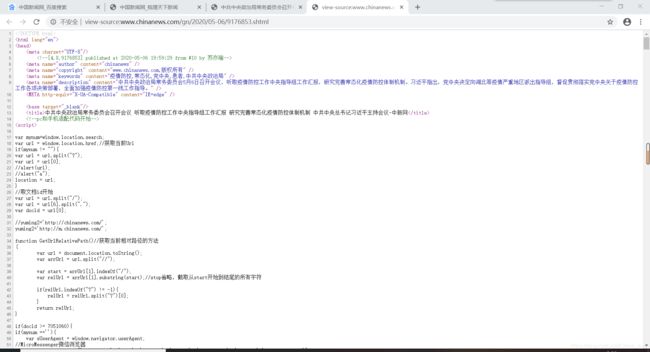

再通过已有的url获取到具体某一个新闻的页面,右击查看网页源代码分析新闻html页面内容:

可以分析出其中的encoding、author、keywords、description等等结构化信息,还有正文所在的那个div。

然后就可以开始写代码了。

这段代码中用到这么几个包:引入必须的模块,比如fs(等下存文件要用)、request(发http请求)、cheerio(类似jQuery获取到html里具体的element的内容)、iconv-lite(用来做转码)、date-utils(解析日期比较方便)。

剩下的代码好长就不作解释了…(我也解释不太清楚

var source_name = "中国新闻网";

var domain = 'http://www.chinanews.com/';

var myEncoding = "utf-8";

var seedURL = 'http://www.chinanews.com/';

var seedURL_format = "$('a')";

var keywords_format = " $('meta[name=\"keywords\"]').eq(0).attr(\"content\")";

var title_format = "$('title').text()";

var date_format = "$('#pubtime_baidu').text()";

var author_format = "$('#editor_baidu').text()";

var content_format = "$('.left_zw').text()";

var desc_format = " $('meta[name=\"description\"]').eq(0).attr(\"content\")";

var source_format = "$('#source_baidu').text()";

var url_reg = /\/(\d{4})\/(\d{2})-(\d{2})\/(\d{7}).shtml/;

var regExp = /((\d{4}|\d{2})(\-|\/|\.)\d{1,2}\3\d{1,2})|(\d{4}年\d{1,2}月\d{1,2}日)/

var fs = require('fs');

var myRequest = require('request')

var myCheerio = require('cheerio')

var myIconv = require('iconv-lite')

require('date-utils');

//防止网站屏蔽我们的爬虫

var headers = {

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.65 Safari/537.36'

}

//request模块异步fetch url

function request(url, callback) {

var options = {

url: url,

encoding: null,

//proxy: 'http://x.x.x.x:8080',

headers: headers,

timeout: 10000 //

}

myRequest(options, callback)

}

request(seedURL, function(err, res, body) { //读取种子页面

// try {

//用iconv转换编码

var html = myIconv.decode(body, myEncoding);

//console.log(html);

//准备用cheerio解析html

var $ = myCheerio.load(html, { decodeEntities: true });

// } catch (e) { console.log('读种子页面并转码出错:' + e) };

var seedurl_news;

try {

seedurl_news = eval(seedURL_format);

//console.log(seedurl_news);

} catch (e) { console.log('url列表所处的html块识别出错:' + e) };

seedurl_news.each(function(i, e) { //遍历种子页面里所有的a链接

var myURL = "";

try {

//得到具体新闻url

var href = "";

href = $(e).attr("href");

if (href.toLowerCase().indexOf('http://') >= 0) myURL = href; //http://开头的

else if (href.startsWith('//')) myURL = 'http:' + href; ////开头的

else myURL = seedURL.substr(0, seedURL.lastIndexOf('/') + 1) + href; //其他

} catch (e) { console.log('识别种子页面中的新闻链接出错:' + e) }

if (!url_reg.test(myURL)) return; //检验是否符合新闻url的正则表达式

//console.log(myURL);

newsGet(myURL); //读取新闻页面

});

});

function newsGet(myURL) { //读取新闻页面

request(myURL, function(err, res, body) { //读取新闻页面

//try {

var html_news = myIconv.decode(body, myEncoding); //用iconv转换编码

//console.log(html_news);

//准备用cheerio解析html_news

var $ = myCheerio.load(html_news, { decodeEntities: true });

myhtml = html_news;

//} catch (e) { console.log('读新闻页面并转码出错:' + e);};

console.log("转码读取成功:" + myURL);

//动态执行format字符串,构建json对象准备写入文件或数据库

var fetch = {};

fetch.title = "";

fetch.content = "";

fetch.publish_date = (new Date()).toFormat("YYYY-MM-DD");

//fetch.html = myhtml;

fetch.url = myURL;

fetch.source_name = source_name;

fetch.source_encoding = myEncoding; //编码

fetch.crawltime = new Date();

if (keywords_format == "") fetch.keywords = source_name; // eval(keywords_format); //没有关键词就用sourcename

else fetch.keywords = eval(keywords_format);

if (title_format == "") fetch.title = ""

else fetch.title = eval(title_format); //标题

if (date_format != "") fetch.publish_date = eval(date_format); //刊登日期

console.log('date: ' + fetch.publish_date);

fetch.publish_date = regExp.exec(fetch.publish_date)[0];

fetch.publish_date = fetch.publish_date.replace('年', '-')

fetch.publish_date = fetch.publish_date.replace('月', '-')

fetch.publish_date = fetch.publish_date.replace('日', '')

fetch.publish_date = new Date(fetch.publish_date).toFormat("YYYY-MM-DD");

if (author_format == "") fetch.author = source_name; //eval(author_format); //作者

else fetch.author = eval(author_format);

if (content_format == "") fetch.content = "";

else fetch.content = eval(content_format).replace("\r\n" + fetch.author, ""); //内容,是否要去掉作者信息自行决定

if (source_format == "") fetch.source = fetch.source_name;

else fetch.source = eval(source_format).replace("\r\n", ""); //来源

if (desc_format == "") fetch.desc = fetch.title;

else fetch.desc = eval(desc_format).replace("\r\n", ""); //摘要

var filename = source_name + "_" + (new Date()).toFormat("YYYY-MM-DD") +

"_" + myURL.substr(myURL.lastIndexOf('/') + 1) + ".json";

////存储json

fs.writeFileSync(filename, JSON.stringify(fetch));

});

}

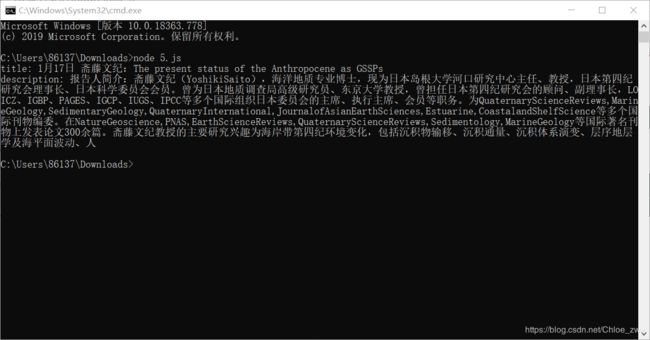

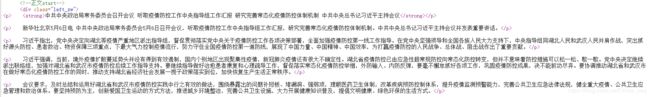

运行结果如下:

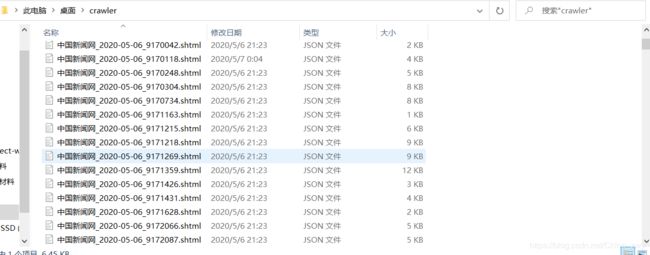

并且这时,该js文件所在的文件夹中多出了好多好多好多json文件:

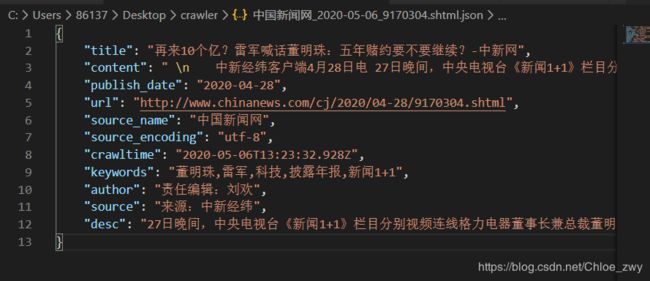

用vs code任意打开其中一个,并下载一个json插件美化一下它,效果如下:

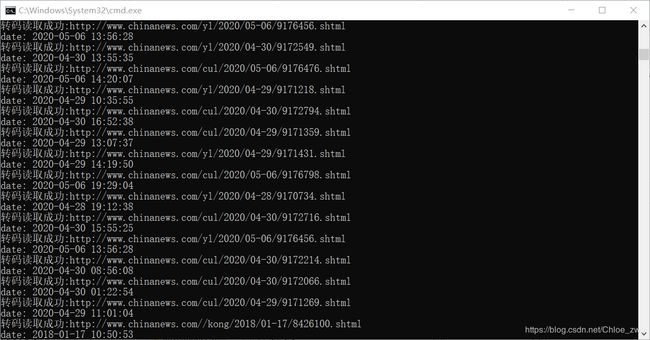

三、对项目示例3的学习及运用

3在2的基础上进行了以下扩展:

- 使用MySQL数据库存储爬取的信息

- 爬取新闻页面前先查询数据库,是否该url已爬取过

- 设置爬虫定时工作

首先要安装并配置MySQL,并且建立一个数据库crawl。

然后在刚才的代码中增加一个MySQL包。

var mysql = require('./mysql.js');

接着修改newsGet函数将数据写入数据库,同时保证相同的url不会重复写入数据库。

var fetchAddSql = 'INSERT INTO fetches(url,source_name,source_encoding,title,' +

'keywords,author,publish_date,crawltime,content) VALUES(?,?,?,?,?,?,?,?,?)';

var fetchAddSql_Params = [fetch.url, fetch.source_name, fetch.source_encoding,

fetch.title, fetch.keywords, fetch.author, fetch.publish_date,

fetch.crawltime.toFormat("YYYY-MM-DD HH24:MI:SS"), fetch.content

];

//执行sql,数据库中fetch表里的url属性是unique的,不会把重复的url内容写入数据库

mysql.query(fetchAddSql, fetchAddSql_Params, function(qerr, vals, fields) {

if (qerr) {

console.log(qerr);

}

}); //mysql写入

最后加入node-schedule包,设置定时爬虫。

var schedule = require('node-schedule');

//!定时执行

var rule = new schedule.RecurrenceRule();

var times = [0, 12]; //每天2次自动执行

var times2 = 5; //定义在第几分钟执行

rule.hour = times;

rule.minute = times2;

//定时执行httpGet()函数

schedule.scheduleJob(rule, function() {

seedget();

});

整个代码如下:

var fs = require('fs');

var myRequest = require('request');

var myCheerio = require('cheerio');

var myIconv = require('iconv-lite');

require('date-utils');

var mysql = require('./mysql.js');

var schedule = require('node-schedule');

var source_name = "中国新闻网";

var domain = 'http://www.chinanews.com/';

var myEncoding = "utf-8";

var seedURL = 'http://www.chinanews.com/';

var seedURL_format = "$('a')";

var keywords_format = " $('meta[name=\"keywords\"]').eq(0).attr(\"content\")";

var title_format = "$('title').text()";

var date_format = "$('#pubtime_baidu').text()";

var author_format = "$('#editor_baidu').text()";

var content_format = "$('.left_zw').text()";

var desc_format = " $('meta[name=\"description\"]').eq(0).attr(\"content\")";

var source_format = "$('#source_baidu').text()";

var url_reg = /\/(\d{4})\/(\d{2})-(\d{2})\/(\d{7}).shtml/;

var regExp = /((\d{4}|\d{2})(\-|\/|\.)\d{1,2}\3\d{1,2})|(\d{4}年\d{1,2}月\d{1,2}日)/

//防止网站屏蔽我们的爬虫

var headers = {

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.65 Safari/537.36'

}

//request模块异步fetch url

function request(url, callback) {

var options = {

url: url,

encoding: null,

//proxy: 'http://x.x.x.x:8080',

headers: headers,

timeout: 10000 //

}

myRequest(options, callback)

};

//!定时执行

var rule = new schedule.RecurrenceRule();

var times = [0, 12]; //每天2次自动执行

var times2 = 5; //定义在第几分钟执行

rule.hour = times;

rule.minute = times2;

//定时执行httpGet()函数

schedule.scheduleJob(rule, function() {

seedget();

});

function seedget() {

request(seedURL, function(err, res, body) { //读取种子页面

// try {

//用iconv转换编码

var html = myIconv.decode(body, myEncoding);

//console.log(html);

//准备用cheerio解析html

var $ = myCheerio.load(html, { decodeEntities: true });

// } catch (e) { console.log('读种子页面并转码出错:' + e) };

var seedurl_news;

try {

seedurl_news = eval(seedURL_format);

} catch (e) { console.log('url列表所处的html块识别出错:' + e) };

seedurl_news.each(function(i, e) { //遍历种子页面里所有的a链接

var myURL = "";

try {

//得到具体新闻url

var href = "";

href = $(e).attr("href");

if (href == undefined) return;

if (href.toLowerCase().indexOf('http://') >= 0) myURL = href; //http://开头的

else if (href.startsWith('//')) myURL = 'http:' + href; ////开头的

else myURL = seedURL.substr(0, seedURL.lastIndexOf('/') + 1) + href; //其他

} catch (e) { console.log('识别种子页面中的新闻链接出错:' + e) }

if (!url_reg.test(myURL)) return; //检验是否符合新闻url的正则表达式

//console.log(myURL);

var fetch_url_Sql = 'select url from fetches where url=?';

var fetch_url_Sql_Params = [myURL];

mysql.query(fetch_url_Sql, fetch_url_Sql_Params, function(qerr, vals, fields) {

if (vals.length > 0) {

console.log('URL duplicate!')

} else newsGet(myURL); //读取新闻页面

});

});

});

};

function newsGet(myURL) { //读取新闻页面

request(myURL, function(err, res, body) { //读取新闻页面

//try {

var html_news = myIconv.decode(body, myEncoding); //用iconv转换编码

//console.log(html_news);

//准备用cheerio解析html_news

var $ = myCheerio.load(html_news, { decodeEntities: true });

myhtml = html_news;

//} catch (e) { console.log('读新闻页面并转码出错:' + e);};

console.log("转码读取成功:" + myURL);

//动态执行format字符串,构建json对象准备写入文件或数据库

var fetch = {};

fetch.title = "";

fetch.content = "";

fetch.publish_date = (new Date()).toFormat("YYYY-MM-DD");

//fetch.html = myhtml;

fetch.url = myURL;

fetch.source_name = source_name;

fetch.source_encoding = myEncoding; //编码

fetch.crawltime = new Date();

if (keywords_format == "") fetch.keywords = source_name; // eval(keywords_format); //没有关键词就用sourcename

else fetch.keywords = eval(keywords_format);

if (title_format == "") fetch.title = ""

else fetch.title = eval(title_format); //标题

if (date_format != "") fetch.publish_date = eval(date_format); //刊登日期

console.log('date: ' + fetch.publish_date);

fetch.publish_date = regExp.exec(fetch.publish_date)[0];

fetch.publish_date = fetch.publish_date.replace('年', '-')

fetch.publish_date = fetch.publish_date.replace('月', '-')

fetch.publish_date = fetch.publish_date.replace('日', '')

fetch.publish_date = new Date(fetch.publish_date).toFormat("YYYY-MM-DD");

if (author_format == "") fetch.author = source_name; //eval(author_format); //作者

else fetch.author = eval(author_format);

if (content_format == "") fetch.content = "";

else fetch.content = eval(content_format).replace("\r\n" + fetch.author, ""); //内容,是否要去掉作者信息自行决定

if (source_format == "") fetch.source = fetch.source_name;

else fetch.source = eval(source_format).replace("\r\n", ""); //来源

if (desc_format == "") fetch.desc = fetch.title;

else fetch.desc = eval(desc_format).replace("\r\n", ""); //摘要

// var filename = source_name + "_" + (new Date()).toFormat("YYYY-MM-DD") +

// "_" + myURL.substr(myURL.lastIndexOf('/') + 1) + ".json";

// ////存储json

// fs.writeFileSync(filename, JSON.stringify(fetch));

var fetchAddSql = 'INSERT INTO fetches(url,source_name,source_encoding,title,' +

'keywords,author,publish_date,crawltime,content) VALUES(?,?,?,?,?,?,?,?,?)';

var fetchAddSql_Params = [fetch.url, fetch.source_name, fetch.source_encoding,

fetch.title, fetch.keywords, fetch.author, fetch.publish_date,

fetch.crawltime.toFormat("YYYY-MM-DD HH24:MI:SS"), fetch.content

];

//执行sql,数据库中fetch表里的url属性是unique的,不会把重复的url内容写入数据库

mysql.query(fetchAddSql, fetchAddSql_Params, function(qerr, vals, fields) {

if (qerr) {

console.log(qerr);

}

}); //mysql写入

});

}

至此爬虫的事情完成得差不多了,接下来的任务是建立网站提供对爬取内容的分项全文搜索,给出所查关键词的时间热度分析,分为四步:

- 用MySQL查询已爬取的数据

- 用网页发送请求到后端查询

- 用express构建网站访问MySQL

- 用表格显示查询结果

用MySQL查询已爬取的数据:

var mysql = require('./mysql.js');

var title = '新冠';

var select_Sql = "select title,author,publish_date from fetches where title like '%" + title + "%'";

mysql.query(select_Sql, function(qerr, vals, fields) {

console.log(vals);

});

搭建前端:

var http = require('http');

var fs = require('fs');

var url = require('url');

var mysql = require('./mysql.js');

http.createServer(function(request, response) {

var pathname = url.parse(request.url).pathname;

var params = url.parse(request.url, true).query;

fs.readFile(pathname.substr(1), function(err, data) {

response.writeHead(200, { 'Content-Type': 'text/html; charset=utf-8' });

if ((params.title === undefined) && (data !== undefined))

response.write(data.toString());

else {

response.write(JSON.stringify(params));

var select_Sql = "select title,author,publish_date from fetches where title like '%" +

params.title + "%'";

mysql.query(select_Sql, function(qerr, vals, fields) {

console.log(vals);

});

}

response.end();

});

}).listen(8080);

console.log('Server running at http://127.0.0.1:8080/');

用express构建网站访问MySQL:

var express = require('express');

var mysql = require('./mysql.js')

var app = express();

//app.use(express.static('public'));

app.get('/7.03.html', function(req, res) {

res.sendFile(__dirname + "/" + "7.03.html");

})

app.get('/7.04.html', function(req, res) {

res.sendFile(__dirname + "/" + "7.04.html");

})

app.get('/process_get', function(req, res) {

res.writeHead(200, { 'Content-Type': 'text/html;charset=utf-8' }); //设置res编码为utf-8

//sql字符串和参数

var fetchSql = "select url,source_name,title,author,publish_date from fetches where title like '%" +

req.query.title + "%'";

mysql.query(fetchSql, function(err, result, fields) {

console.log(result);

res.end(JSON.stringify(result));

});

})

var server = app.listen(8080, function() {

console.log("访问地址为 http://127.0.0.1:8080/7.03.html")

})

最后将查询结果以表格形式输出,这里有一些问题,等解决了再回来补。

第一次做爬虫觉得还是挺有意思的,虽然这个过程中困难很多,但是收获也很多。目前前端还没搭建成功,而这次实验的ddl也到了,这篇博客现在是有点虎头蛇尾,但是接下来我会继续完善的,希望能自己写一个漂漂亮亮的页面吧!