package com.itheima.spider.httpclient;

import org.apache.http.Header;

import org.apache.http.HttpEntity;

import org.apache.http.client.entity.UrlEncodedFormEntity;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.client.methods.HttpPost;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.message.BasicNameValuePair;

import org.apache.http.util.EntityUtils;

import java.io.IOException;

import java.util.ArrayList;

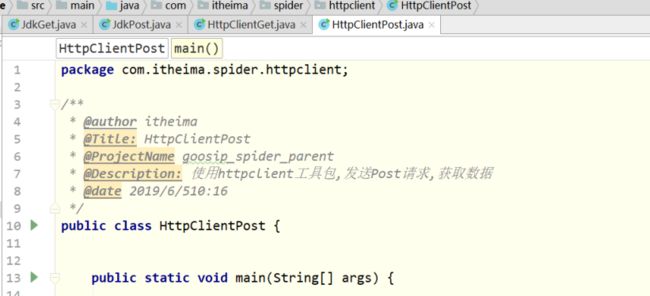

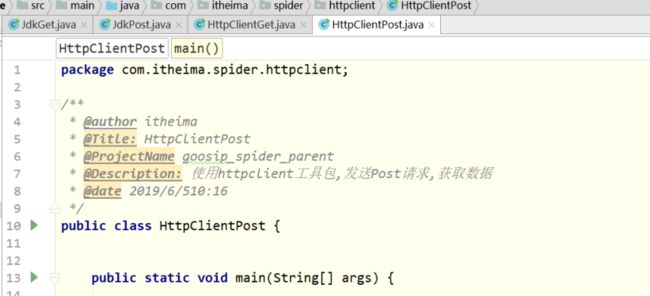

public class HttpClientPost {

public static void main(String[] args) throws IOException {

// 1. Determine the URL

String indexUrl = "http://www.itcast.cn";

// 2. Send the request and get the data

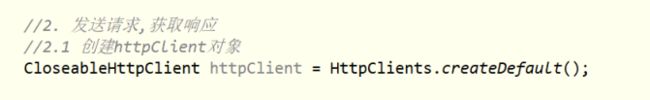

// 2.1 Create the HttpClient object

CloseableHttpClient httpClient = HttpClients.createDefault();

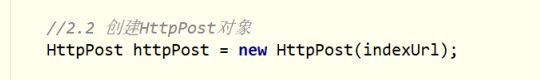

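// 2.2 Create the HttpPost object from the URL

HttpPost httpPost = new HttpPost(indexUrl);

// 2.2.1 Set the request header

httpPost.setHeader("User-Agent","Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3676.400 QQBrowser/10.4.3505.400");

// 2.2.2 Set the request parameters

// First build the list of key-value pairs that holds the request parameters

// (1) Create a list whose element type is a key-value pair

ArrayList<BasicNameValuePair> basicNameValuePairs = new ArrayList<>();

// (2) Add the data to the list

basicNameValuePairs.add(new BasicNameValuePair("txtUser","黑马"));

basicNameValuePairs.add(new BasicNameValuePair("txtPass","123456"));

basicNameValuePairs.add(new BasicNameValuePair("city","北京"));

basicNameValuePairs.add(new BasicNameValuePair("birthday","1980-01-01"));

basicNameValuePairs.add(new BasicNameValuePair("sex","1"));

// (3) Wrap the list of form data in an entity for the request body.

// UTF-8 is passed explicitly because the one-argument constructor falls back to ISO-8859-1, which cannot represent the Chinese values.

UrlEncodedFormEntity formEntity = new UrlEncodedFormEntity(basicNameValuePairs, "UTF-8");

// (4) Set the entity as the request body

httpPost.setEntity(formEntity);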

// 2.3 Send the request and get the response

CloseableHttpResponse response = httpClient.execute(httpPost);

// 2.4 Parse the data out of the response

// 2.4.1 Get the status code and check whether the request succeeded

int statusCode = response.getStatusLine().getStatusCode();

if(statusCode == 200){

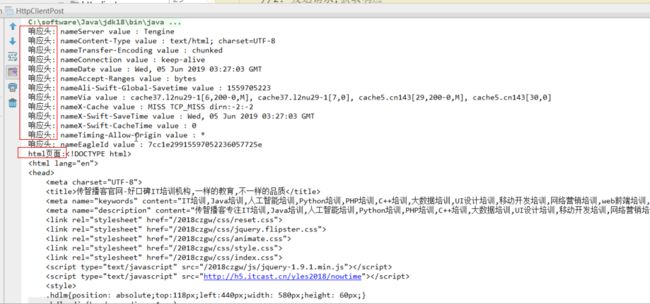

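// Print the response headers (HttpClient 4.x exposes them through getAllHeaders())

Header[] headers = response.getAllHeaders();

for (Header header : headers) {

System.out.println("Response header: name: " + header.getName() + ", value: " + header.getValue());

}

// 2.4.2 Get the response body

HttpEntity entity = response.getEntity();

// Read the page content from the response body and print it

String html = EntityUtils.toString(entity, "utf-8");

System.out.println(html);

}

// 2.5 Release resources: close the response first, then the client

response.close();

httpClient.close();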

}

}

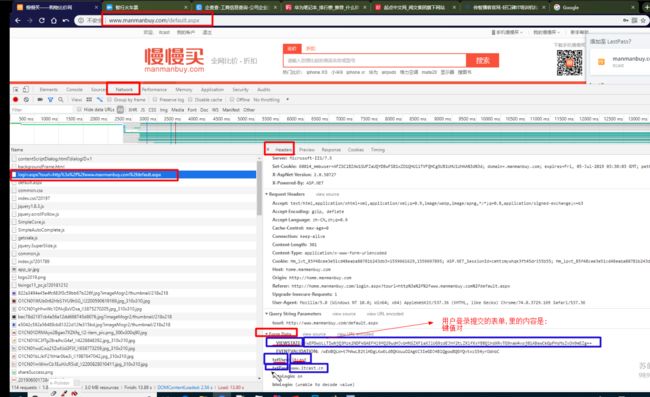

1. Determine the URL to crawl

// 1. Determine the URL

String indexUrl = "http://www.itcast.cn";

2. Send the request and get the data

2.1 Create the HttpClient object

// 2.1 Create the HttpClient object

CloseableHttpClient httpClient = HttpClients.createDefault();

2.2 Create the HttpPost object

// 2.2 Create the HttpPost object

HttpPost httpPost = new HttpPost(indexUrl);

Set the request header and the request body

// 2.2.1 Set the request header

httpPost.setHeader("User-Agent","Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3676.400 QQBrowser/10.4.3505.400");
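For comparison, the same "POST request with a User-Agent header" can be assembled with the JDK's own `java.net.http` API. This is only a sketch under assumptions: it needs Java 11 or later, it swaps in the JDK client instead of Apache HttpClient, the class name `JdkPostRequestSketch` is made up for illustration, and it only builds the request without sending it.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: builds (but does not send) a form POST with the JDK client API.
class JdkPostRequestSketch {

    static HttpRequest build(String url, String userAgent, String formBody) {
        return HttpRequest.newBuilder(URI.create(url))
                // Same idea as httpPost.setHeader("User-Agent", ...)
                .header("User-Agent", userAgent)
                // A form body is sent as application/x-www-form-urlencoded
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formBody, StandardCharsets.UTF_8))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("http://www.itcast.cn", "Mozilla/5.0", "txtPass=123456&sex=1");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(request.headers().map());
    }
}
```

Inspecting the built request like this is a cheap way to confirm that the header and method are what you expect before any network traffic happens.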

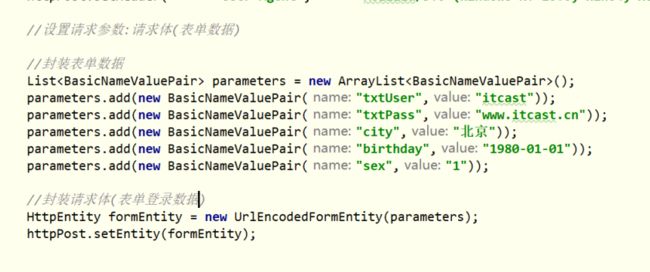

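Set the request parameters: the request body (the form data, listed at the bottom of the request details)

The login name and password used to log in, plus the other user information, are sent as key-value pairs.

Wrap the list holding the form data in an entity, then set that entity as the request body.

// First build the list of key-value pairs that holds the request parameters

// (1) Create a list whose element type is a key-value pair

ArrayList<BasicNameValuePair> basicNameValuePairs = new ArrayList<>();

// (2) Add the data to the list; the values come from the form data found in the page source

basicNameValuePairs.add(new BasicNameValuePair("txtUser","黑马"));

basicNameValuePairs.add(new BasicNameValuePair("txtPass","123456"));

basicNameValuePairs.add(new BasicNameValuePair("city","北京"));

basicNameValuePairs.add(new BasicNameValuePair("birthday","1980-01-01"));

basicNameValuePairs.add(new BasicNameValuePair("sex","1"));

// (3) Wrap the list of form data in a form entity.

// UTF-8 is passed explicitly because the one-argument constructor falls back to ISO-8859-1, which cannot represent the Chinese values.

UrlEncodedFormEntity formEntity = new UrlEncodedFormEntity(basicNameValuePairs, "UTF-8");

// (4) Set the entity as the request body

httpPost.setEntity(formEntity);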
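To get a feel for what such a form entity roughly puts on the wire, the sketch below builds an `application/x-www-form-urlencoded` body using only JDK classes. It is an approximation, not HttpClient's own code: `FormBodySketch` and `encode` are hypothetical names, and UTF-8 is assumed as the charset. The user name is written with Unicode escapes so the file compiles under any source encoding.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper, not part of HttpClient: joins percent-encoded key=value pairs with '&'.
class FormBodySketch {

    static String encode(Map<String, String> fields) {
        StringJoiner body = new StringJoiner("&");
        try {
            for (Map.Entry<String, String> field : fields.entrySet()) {
                body.add(URLEncoder.encode(field.getKey(), "UTF-8")
                        + "=" + URLEncoder.encode(field.getValue(), "UTF-8"));
            }
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is guaranteed to exist on every JVM, so this should never happen.
            throw new IllegalStateException(e);
        }
        return body.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the insertion order, like the list of pairs above.
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("txtUser", "\u9ed1\u9a6c"); // the two-character user name from the form data
        fields.put("txtPass", "123456");
        fields.put("birthday", "1980-01-01");
        System.out.println(encode(fields));
    }
}
```

Each non-ASCII character becomes a run of `%XX` escapes of its UTF-8 bytes, which is why the charset chosen for the entity matters for Chinese form values.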

2.3 Send the request and get the response

// 2.3 Send the request and get the response

CloseableHttpResponse response = httpClient.execute(httpPost);

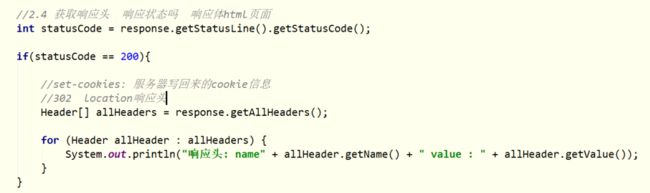

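2.4 Get the status code, the response headers, and the response body

// 2.4 Parse the data out of the response

// 2.4.1 Get the status code and check whether the request succeeded

int statusCode = response.getStatusLine().getStatusCode();

if(statusCode == 200){

// Print the response headers (HttpClient 4.x exposes them through getAllHeaders())

Header[] headers = response.getAllHeaders();

for (Header header : headers) {

System.out.println("Response header: name: " + header.getName() + ", value: " + header.getValue());

}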
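The check above accepts only status code 200. A form POST may also come back with another 2xx code or with a 3xx redirect (a login form, for example, often redirects after submission), so it can help to classify the code by range. The following is a minimal sketch using plain JDK code; `StatusCheckSketch`, `isSuccess`, and `category` are hypothetical names.

```java
// Hypothetical helper: classifies HTTP status codes by their hundreds range.
class StatusCheckSketch {

    // Any 2xx code counts as success, not just 200.
    static boolean isSuccess(int statusCode) {
        return statusCode >= 200 && statusCode < 300;
    }

    static String category(int statusCode) {
        switch (statusCode / 100) {
            case 1: return "informational";
            case 2: return "success";
            case 3: return "redirection";
            case 4: return "client error";
            case 5: return "server error";
            default: return "unknown";
        }
    }

    public static void main(String[] args) {
        int[] samples = {200, 204, 302, 404, 500};
        for (int code : samples) {
            System.out.println(code + " -> " + category(code) + ", success=" + isSuccess(code));
        }
    }
}
```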

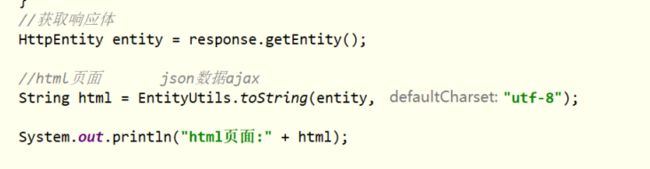

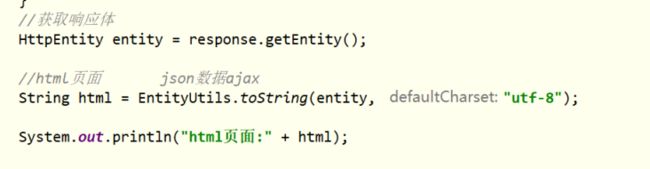

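Get the response body

The response body contains the web page, and the HTML is printed as a string. If the body is JSON (for example, from an AJAX endpoint), it has to be parsed separately.

// 2.4.2 Get the response body

HttpEntity entity = response.getEntity();

// Read the page content from the response body and print it

String html = EntityUtils.toString(entity, "utf-8");

System.out.println(html);

}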
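The charset argument matters because the body arrives as bytes and has to be decoded into a string. The sketch below, using only the JDK, shows what happens when UTF-8 bytes are decoded with the wrong charset; `CharsetDecodeSketch` and `decode` are hypothetical names, and the sample text is written with Unicode escapes so the file compiles under any source encoding.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: decodes raw body bytes with a given charset.
class CharsetDecodeSketch {

    static String decode(byte[] body, Charset charset) {
        return new String(body, charset);
    }

    public static void main(String[] args) {
        String original = "\u9ed1\u9a6c"; // two Chinese characters
        byte[] body = original.getBytes(StandardCharsets.UTF_8); // 3 bytes per character

        String correct = decode(body, StandardCharsets.UTF_8);
        String garbled = decode(body, StandardCharsets.ISO_8859_1);

        System.out.println("UTF-8 round trip intact: " + correct.equals(original));
        // ISO-8859-1 maps every byte to one character, so 6 bytes become 6 wrong characters.
        System.out.println("ISO-8859-1 length: " + garbled.length());
    }
}
```

If the printed page shows garbled Chinese text, a mismatch between the page's real encoding and the charset used for decoding is a likely cause.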

2.5 Release resources

// 2.5 Release resources: close the response first, then the client

response.close();

httpClient.close();
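Closing by hand is skipped whenever an exception is thrown earlier in the method. Since `CloseableHttpClient` and `CloseableHttpResponse` both implement `Closeable`, they can be declared in a try-with-resources statement instead. The sketch below demonstrates the closing behavior with stand-in resources so that it runs without HttpClient on the classpath; `CloseOrderSketch` and `NamedResource` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Stand-in demo: shows that try-with-resources closes resources in reverse order,
// even when the body throws.
class CloseOrderSketch {

    static final class NamedResource implements AutoCloseable {
        private final String name;
        private final List<String> log;

        NamedResource(String name, List<String> log) {
            this.name = name;
            this.log = log;
        }

        @Override
        public void close() {
            log.add("closed " + name);
        }
    }

    static List<String> run(boolean fail) {
        List<String> log = new ArrayList<>();
        // "client" plays the role of the HTTP client, "response" the role of the response.
        try (NamedResource client = new NamedResource("client", log);
             NamedResource response = new NamedResource("response", log)) {
            log.add("reading body");
            if (fail) {
                throw new IllegalStateException("simulated failure");
            }
        } catch (IllegalStateException e) {
            // Both resources are already closed by the time the catch block runs.
            log.add("caught " + e.getMessage());
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run(false));
        System.out.println(run(true));
    }
}
```

The response is closed before the client in both runs, which matches the order one would choose when closing by hand.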

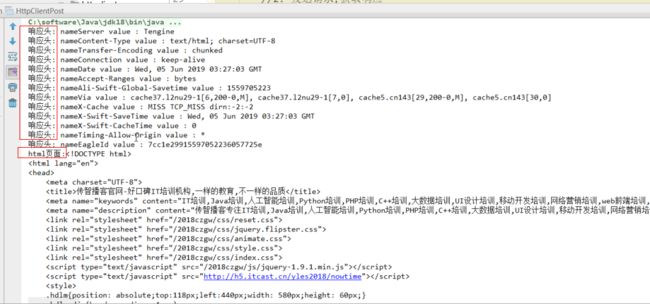

Printed result:

What the request parameters look like
