LDA推导 + python代码

参考blog:https://www.cnblogs.com/simon-c/p/5023726.html 只有代码和注释

https://blog.csdn.net/feilong_csdn/article/details/60964027 LDA线性判别原理解析<数学推导>

https://www.cnblogs.com/viviancc/p/4133630.html 这个推导的很详细了

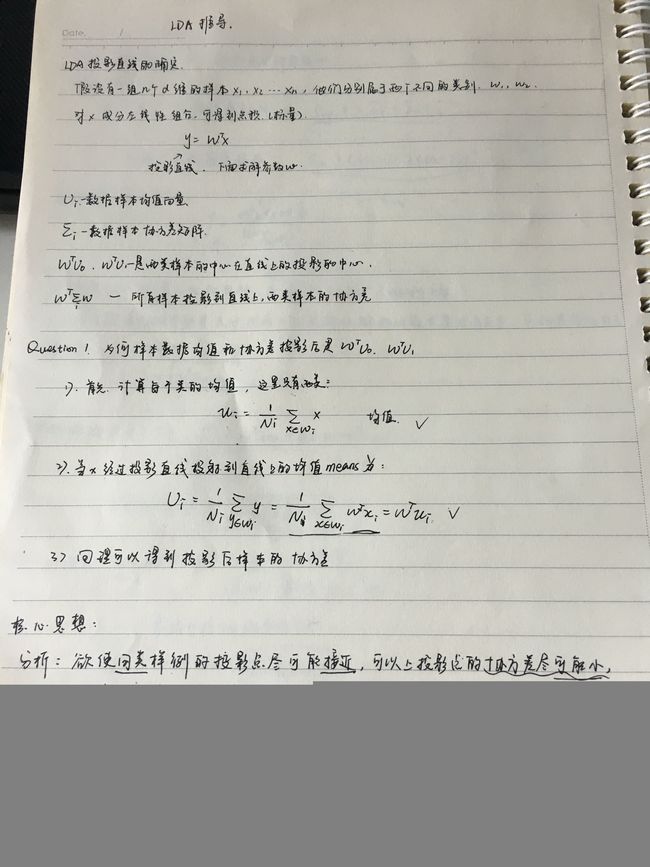

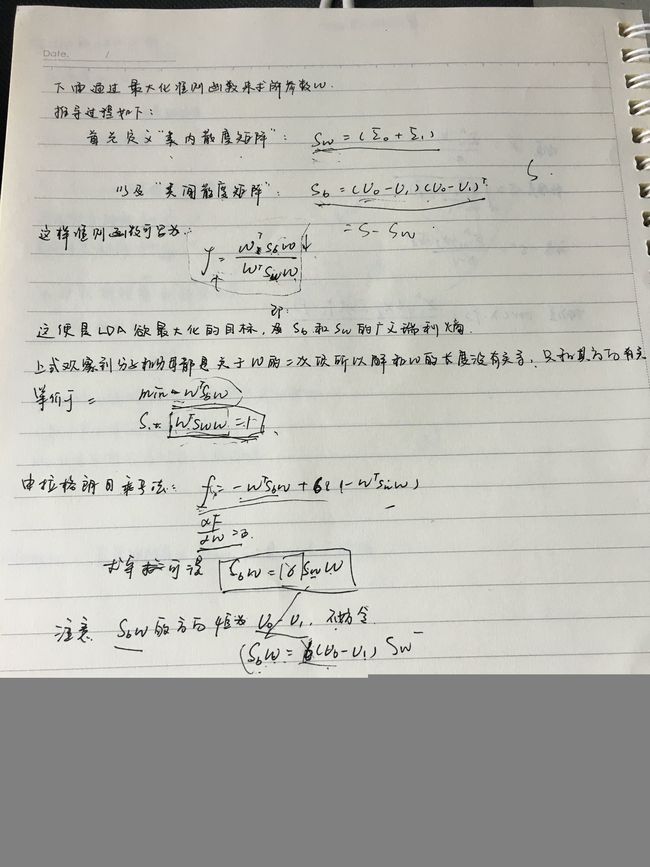

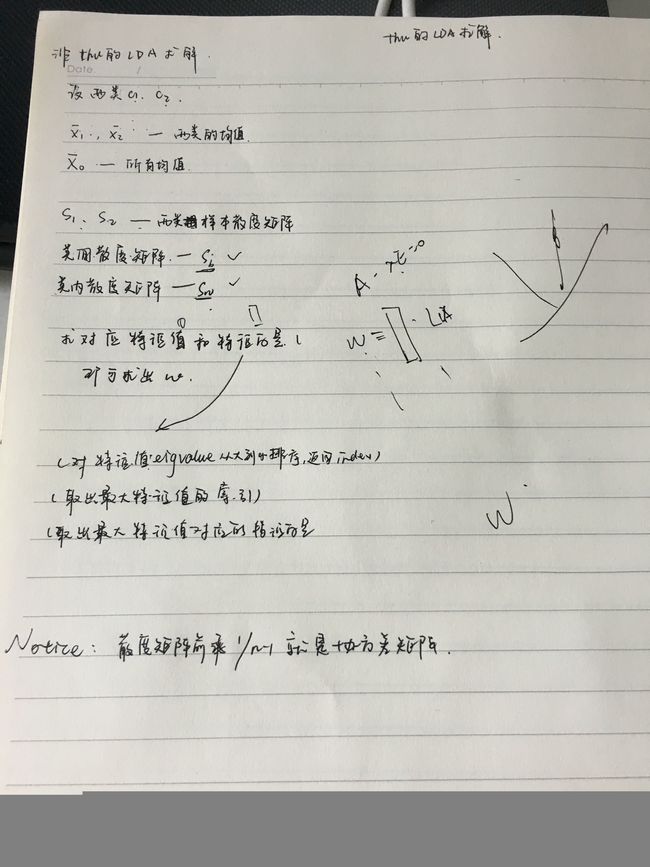

LDA的原理是,将带上标签的数据(点),通过投影的方法投影到维度更低的空间中,使得投影后的点形成按类别区分的情形,即相同类别的点,在投影后的空间中距离较近,不同类别的点,在投影后的空间中距离较远。

要说明LDA,首先得弄明白线性分类器(Linear Classifier):因为LDA是一种线性分类器。对于K-分类的一个分类问题,会有K个线性函数 y=wx+b

get_ipython().magic('matplotlib inline')

import matplotlib.pyplot as plt

import numpy as np

from __future__ import print_function

from scipy import linalg

from sklearn.utils.multiclass import unique_labels

from sklearn.utils import check_array, check_X_y

from sklearn.utils.validation import check_is_fitted

__all__=['LinearDiscriminantAnalysis']

# In[427]:

#总共两个类 0 1

# 计算类内的均值

def _class_means(X,y):

means = []

# classes:[0,1]

classes = np.unique(y)

for group in classes:

# print(X)

Xg = X[y==group,:]

# print(y)

# print(Xg)

means.append(Xg.mean(0))

return np.asarray(means)

#协方差

def _cov(x):

# print("x:",x)

s = np.cov(x, rowvar=0, bias=1)

#print("s:",s)

return s

#类内的协方差

def _class_cov(X,y):

classes = np.unique(y)

covs = []

for group in classes:

# print(X)

Xg = X[y == group,:]

# print(Xg)

# print("_cov(Xg):",_cov(Xg))

covs.append(np.atleast_2d(_cov(Xg)))

#print("covs:",covs)

#print("np.:",np.average(covs, axis=0))

return np.average(covs, axis=0)

# In[428]:

class LinearDiscriminantAnalysis:

def __init__(self, n_components=None, within_between_ratio=10.0, nearest_neighbor_ratio=1.2):

self.n_components = n_components

self.within_between_ratio = within_between_ratio

self.nearest_neighbor_ratio = nearest_neighbor_ratio

# print(self.n_components)

def _solve_eigen(self,X,y):

#print("y1=",y)

#print("x1=",x)

self.means_ = _class_means(X,y)

#print("y2=",y)

self.covariance_ = _class_cov(X,y)

#print(self.means_)

#Sw:类内的散度矩阵

#Sb:类间的散度矩阵

#St:总体散度矩阵

Sw = self.covariance_ #within scatter

St = _cov(X) #total scatter

Sb = St - Sw #between scatter

# print(Sw)

# print(St)

#linalg.eigh() 计算矩阵特征值和特征向量

evals, evecs = linalg.eigh(Sb, Sw)

#排序特征向量

evecs = evecs[:, np.argsort(evals)[::-1]] #sort eigenvectors

self.scalings_ = np.asarray(evecs)

#适合局部成对的LDA训练

def fit(self, X, y):

#asarray将元组列表转换成矩阵

#reshape(-1)将y矩阵转置

X, y = check_X_y(np.asarray(X), np.asarray(y.reshape(-1)), ensure_min_samples=2)

self.classes_ = unique_labels(y)

#得到类的最大数

#self.classes_ = [0,1]

if self.n_components is None:

self.n_components = len(self.classes_) - 1

else:

self.n_components = min(len(self.classes_) - 1, self.n_components)

# print(self.classes_)

self._solve_eigen(np.asarray(X), np.asarray(y))

return self

def transform(self, X):

check_is_fitted(self, ['scalings_'], all_or_any=any)

X = check_array(X)

# print(X)

#np.dot 矩阵乘

X_new = np.dot(X, self.scalings_)

#print(self.scalings_)

print(X_new)

return X_new[:, :self.n_components]

# In[429]:

if __name__ == '__main__':

samples =200

dim = 6

lda_dim =3

data = np.random.random((samples, dim))

label = np.random.randint(0,2,size=(samples,1))

# label1 = np.random.random_integers(0,2,size=(samples,1))

# print("label:",label)

# print("label1:",label1)

# print(data)

lda = LinearDiscriminantAnalysis(lda_dim)

lda.fit(data, label)

#print(data)

lda_data = lda.transform(data)

# print ("data = ")

# print(data)

#print ("y = ")

# print(y)

# print(y.reshape(-1))

# print ("lda_data = ")

print(lda_data)

plt.scatter(data[:,0],data[:,1],c=label)

# In[409]:

get_ipython().magic('matplotlib inline')

import matplotlib.pyplot as plt

import numpy as np

x = np.arange(20)

y = x**2

plt.plot(x,y)

x=np.linspace(0,1,10) y=np.sinx plt.scatter(x,y)