Original article. Please credit the source when reposting. Thanks for your cooperation.

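After the drawing pass covered in the previous article, the application layer has its DisplayList ready. Next comes rendering. Unlike software drawing, Android hardware acceleration renders on a separate native thread, RenderThread, whereas software drawing does both the drawing and the rendering on the UI thread.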

Let's continue the analysis by going back to ThreadedRenderer's draw:

frameworks/base/core/java/android/view/ThreadedRenderer.java

void draw(View view, AttachInfo attachInfo, DrawCallbacks callbacks,

FrameDrawingCallback frameDrawingCallback) {

...

updateRootDisplayList(view, callbacks); // build the DisplayList

...

int syncResult = nSyncAndDrawFrame(mNativeProxy, frameInfo, frameInfo.length); // render the view

...

}

The previous article already covered updateRootDisplayList, so next we look at nSyncAndDrawFrame. It is a native method, which maps to the following in the native layer:

frameworks/base/core/jni/android_view_ThreadedRenderer.cpp

static int android_view_ThreadedRenderer_syncAndDrawFrame(JNIEnv* env, jobject clazz,

jlong proxyPtr, jlongArray frameInfo, jint frameInfoSize) {

LOG_ALWAYS_FATAL_IF(frameInfoSize != UI_THREAD_FRAME_INFO_SIZE,

"Mismatched size expectations, given %d expected %d",

frameInfoSize, UI_THREAD_FRAME_INFO_SIZE);

RenderProxy* proxy = reinterpret_cast<RenderProxy*>(proxyPtr);

env->GetLongArrayRegion(frameInfo, 0, frameInfoSize, proxy->frameInfo());

return proxy->syncAndDrawFrame();

}
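The proxyPtr argument above is just the address of the native RenderProxy, carried through Java as a 64-bit integer. Here is a minimal sketch of that round trip. It assumes a hypothetical Proxy struct and uses plain int64_t in place of jlong so that it compiles without the JNI headers; toHandle, fromHandle and the free function syncAndDrawFrame are illustrative names, not hwui's.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical native object; stands in for RenderProxy.
struct Proxy {
    int frames = 0;
    int syncAndDrawFrame() { return ++frames; }
};

// Native pointer -> opaque 64-bit handle that the managed side can store.
int64_t toHandle(Proxy* proxy) {
    return static_cast<int64_t>(reinterpret_cast<intptr_t>(proxy));
}

// Opaque handle -> typed native pointer.
Proxy* fromHandle(int64_t handle) {
    return reinterpret_cast<Proxy*>(static_cast<intptr_t>(handle));
}

// Shape of a native entry point: recover the object, then delegate to it.
int syncAndDrawFrame(int64_t handle) {
    Proxy* proxy = fromHandle(handle);
    assert(proxy != nullptr);
    return proxy->syncAndDrawFrame();
}
```

The managed side only ever stores and passes the integer; the cast back to a typed pointer happens in native code.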

android_view_ThreadedRenderer_syncAndDrawFrame then calls syncAndDrawFrame on the RenderProxy:

frameworks/base/libs/hwui/renderthread/RenderProxy.cpp

int RenderProxy::syncAndDrawFrame() {

return mDrawFrameTask.drawFrame();

}

This hands the work to a task, DrawFrameTask, which performs drawFrame:

frameworks/base/libs/hwui/renderthread/DrawFrameTask.cpp

int DrawFrameTask::drawFrame() {

LOG_ALWAYS_FATAL_IF(!mContext, "Cannot drawFrame with no CanvasContext!");

mSyncResult = SyncResult::OK;

mSyncQueued = systemTime(CLOCK_MONOTONIC);

postAndWait();

return mSyncResult;

}

void DrawFrameTask::postAndWait() {

AutoMutex _lock(mLock);

mRenderThread->queue().post([this]() { run(); });

mSignal.wait(mLock);

}

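RenderThread is one big loop; drawing operations are posted to it in the form of RenderTasks and completed there. The corresponding run method therefore holds the core rendering logic: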

void DrawFrameTask::run() {

ATRACE_NAME("DrawFrame"); // corresponds to the DrawFrame label in systrace

bool canUnblockUiThread;

bool canDrawThisFrame;

{

TreeInfo info(TreeInfo::MODE_FULL, *mContext);

canUnblockUiThread = syncFrameState(info); // sync the view data

canDrawThisFrame = info.out.canDrawThisFrame;

...

// Draw: submit the OpenGL commands to the GPU

if (CC_LIKELY(canDrawThisFrame)) {

context->draw(); // CanvasContext draws

} else {

// wait on fences so tasks don't overlap next frame

context->waitOnFences();

}

...

}

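The DrawFrameTask is inserted into RenderThread, and the UI thread blocks while RenderThread synchronizes the data that the application packaged during the drawing stage. If the sync succeeds (syncFrameState returns true), the UI thread is woken up right away; otherwise it stays blocked until the frame has been drawn. Only after the sync finishes does RenderThread start the GPU rendering work.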

So a DrawFrameTask breaks down into two main parts:

1) syncFrameState: synchronizes the UI data from the main thread to the render thread

2) CanvasContext::draw: draws
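The two phases and the blocking handoff can be sketched with standard C++ threads. This is an illustration under assumptions, not hwui code: FrameTask, its event strings and the canUnblockAfterSync flag (standing in for the value syncFrameState returns) are hypothetical, and a fresh std::thread stands in for posting to the long-lived RenderThread queue.

```cpp
#include <algorithm>
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <string>
#include <thread>
#include <vector>

// Hypothetical stand-in for DrawFrameTask; not the hwui class.
class FrameTask {
public:
    // Runs on the "UI thread": starts the render work, then blocks until
    // the render side unblocks it. Returns the ordered event log.
    std::vector<std::string> drawFrame(bool canUnblockAfterSync) {
        std::unique_lock<std::mutex> lock(mLock);
        mEvents.clear();
        mUnblocked = false;
        std::thread renderThread([this, canUnblockAfterSync] { run(canUnblockAfterSync); });
        // wait() releases mLock while sleeping, so the render side can proceed.
        mSignal.wait(lock, [this] { return mUnblocked; });
        assert(mUnblocked);
        mEvents.push_back("ui:resumed");
        lock.unlock();
        renderThread.join();
        return mEvents;
    }

private:
    // Runs on the "render thread": sync first, then draw.
    void run(bool canUnblockAfterSync) {
        record("rt:sync");
        if (canUnblockAfterSync) unblockUiThread();   // UI may continue during the draw
        record("rt:draw");
        if (!canUnblockAfterSync) unblockUiThread();  // UI had to wait for the draw
    }

    void record(const char* event) {
        std::lock_guard<std::mutex> guard(mLock);
        mEvents.push_back(event);
    }

    void unblockUiThread() {
        std::lock_guard<std::mutex> guard(mLock);
        mUnblocked = true;
        mSignal.notify_one();
    }

    std::mutex mLock;
    std::condition_variable mSignal;
    bool mUnblocked = false;
    std::vector<std::string> mEvents;
};

// Position of an event in the log, or -1 if it is absent.
int indexOf(const std::vector<std::string>& events, const std::string& event) {
    auto it = std::find(events.begin(), events.end(), event);
    return it == events.end() ? -1 : static_cast<int>(it - events.begin());
}
```

With canUnblockAfterSync set to true, "ui:resumed" is only guaranteed to come after "rt:sync"; its order relative to "rt:draw" depends on scheduling. With false, it always comes last.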

First, syncFrameState:

bool DrawFrameTask::syncFrameState(TreeInfo& info) {

ATRACE_CALL(); // corresponds to the syncFrameState label in systrace

...

// sync the DisplayListOp tree

mContext->prepareTree(info, mFrameInfo, mSyncQueued, mTargetNode);

...

return info.prepareTextures;

}

The main synchronization work happens in CanvasContext's prepareTree:

frameworks/base/libs/hwui/renderthread/CanvasContext.cpp

void CanvasContext::prepareTree(TreeInfo& info, int64_t* uiFrameInfo,

int64_t syncQueued, RenderNode* target) {

...

mCurrentFrameInfo->importUiThreadInfo(uiFrameInfo); // copies the data via memcpy

mCurrentFrameInfo->set(FrameInfoIndex::SyncQueued) = syncQueued;

mCurrentFrameInfo->markSyncStart();

...

mRenderPipeline->onPrepareTree();

for (const sp<RenderNode>& node : mRenderNodes) {

info.mode = (node.get() == target ? TreeInfo::MODE_FULL : TreeInfo::MODE_RT_ONLY);

// each root node (mRootRenderNode) recursively walks all of its nodes and runs prepareTree

node->prepareTree(info);

GL_CHECKPOINT(MODERATE);

}

...

}

Put simply, this step synchronizes the complete set of DisplayListOps that the application layer prepared earlier onto the native RenderThread.

frameworks/base/libs/hwui/RenderNode.cpp

void RenderNode::prepareTree(TreeInfo& info) {

ATRACE_CALL();

LOG_ALWAYS_FATAL_IF(!info.damageAccumulator, "DamageAccumulator missing");

MarkAndSweepRemoved observer(&info);

// The OpenGL renderer reserves the stencil buffer for overdraw debugging. Functors

// will need to be drawn in a layer.

bool functorsNeedLayer = Properties::debugOverdraw && !Properties::isSkiaEnabled();

prepareTreeImpl(observer, info, functorsNeedLayer);

}

void RenderNode::prepareTreeImpl(TreeObserver& observer, TreeInfo& info, bool functorsNeedLayer) {

info.damageAccumulator->pushTransform(this);

if (info.mode == TreeInfo::MODE_FULL) {

pushStagingPropertiesChanges(info); // sync the properties

}

...

prepareLayer(info, animatorDirtyMask);

if (info.mode == TreeInfo::MODE_FULL) {

pushStagingDisplayListChanges(observer, info); // sync the DisplayListOps

}

if (mDisplayList) {

info.out.hasFunctors |= mDisplayList->hasFunctor();

bool isDirty = mDisplayList->prepareListAndChildren(

observer, info, childFunctorsNeedLayer,

[](RenderNode* child, TreeObserver& observer, TreeInfo& info,

bool functorsNeedLayer) {

child->prepareTreeImpl(observer, info, functorsNeedLayer); // recurse

});

if (isDirty) {

damageSelf(info);

}

}

pushLayerUpdate(info);

info.damageAccumulator->popTransform();

}

Here pushStagingDisplayListChanges takes the DisplayList stashed by setStagingDisplayList and assigns it to the RenderNode's mDisplayList (the mDisplayListData of older releases).

RenderNode::prepareTree() walks the DisplayList tree and calls prepareTreeImpl() recursively on each child node. If a node is a render layer, RenderNode::pushLayerUpdate() records the layer's update in the LayerUpdateQueue.
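The staging-then-sync idea can be shown in a few lines. The sketch below is a simplification under assumptions: Node, Mode, setStaging and the free function prepareTree are hypothetical names, display lists are reduced to vectors of strings, and properties, layers and damage tracking are left out. It only shows that staged data becomes visible to the render side during a full-mode traversal, node by node, recursively.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Mirrors TreeInfo::MODE_FULL / MODE_RT_ONLY in spirit only.
enum class Mode { Full, RtOnly };

struct Node {
    explicit Node(std::string n) : name(std::move(n)) {}

    // UI-thread side: stash a freshly recorded list; the render side does not see it yet.
    void setStaging(std::vector<std::string> newOps) {
        staging = std::move(newOps);
        needsSync = true;
    }

    std::string name;
    std::vector<std::string> staging;  // written while recording
    std::vector<std::string> ops;      // read while rendering
    std::vector<Node*> children;       // non-owning, for brevity
    bool needsSync = false;
};

// Render-thread side: push staged lists into place, then recurse into children.
// Returns how many nodes were synced during this traversal.
int prepareTree(Node& node, Mode mode) {
    int synced = 0;
    if (mode == Mode::Full && node.needsSync) {
        node.ops = std::move(node.staging);
        node.staging.clear();
        node.needsSync = false;
        ++synced;
    }
    for (Node* child : node.children) {
        assert(child != nullptr);
        synced += prepareTree(*child, mode);
    }
    return synced;
}
```

Keeping two copies per node is what lets recording and rendering run on different threads: the only point where both sides touch the same data is the sync, during which the UI thread is blocked.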

Back in the task's run(), the next step is CanvasContext::draw:

void CanvasContext::draw() {

...

Frame frame = mRenderPipeline->getFrame();

SkRect windowDirty = computeDirtyRect(frame, &dirty);

bool drew = mRenderPipeline->draw(frame, windowDirty, dirty, mLightGeometry, &mLayerUpdateQueue,

mContentDrawBounds, mOpaque, mWideColorGamut, mLightInfo, mRenderNodes, &(profiler()));

waitOnFences();

bool requireSwap = false;

bool didSwap = mRenderPipeline->swapBuffers(frame, drew, windowDirty, mCurrentFrameInfo,

&requireSwap);

...

}

Here mRenderPipeline carries out three core steps: getFrame, draw and swapBuffers. Let's look at each in turn:

(Here mRenderPipeline is the OpenGL pipeline: frameworks/base/libs/hwui/renderthread/OpenGLPipeline.cpp)

getFrame: this mainly amounts to dequeueBuffer

Frame OpenGLPipeline::getFrame() {

LOG_ALWAYS_FATAL_IF(mEglSurface == EGL_NO_SURFACE,

"drawRenderNode called on a context with no surface!");

return mEglManager.beginFrame(mEglSurface);

}

Frame EglManager::beginFrame(EGLSurface surface) {

LOG_ALWAYS_FATAL_IF(surface == EGL_NO_SURFACE, "Tried to beginFrame on EGL_NO_SURFACE!");

makeCurrent(surface);

Frame frame;

frame.mSurface = surface;

eglQuerySurface(mEglDisplay, surface, EGL_WIDTH, &frame.mWidth);

eglQuerySurface(mEglDisplay, surface, EGL_HEIGHT, &frame.mHeight);

frame.mBufferAge = queryBufferAge(surface);

eglBeginFrame(mEglDisplay, surface);

return frame;

}

Now the makeCurrent method it calls:

bool EglManager::makeCurrent(EGLSurface surface, EGLint* errOut) {

...

if (!eglMakeCurrent(mEglDisplay, surface, surface, mEglContext)) {

if (errOut) {

*errOut = eglGetError();

ALOGW("Failed to make current on surface %p, error=%s", (void*)surface,

egl_error_str(*errOut));

} else {

LOG_ALWAYS_FATAL("Failed to make current on surface %p, error=%s", (void*)surface,

eglErrorString());

}

}

mCurrentSurface = surface;

if (Properties::disableVsync) {

eglSwapInterval(mEglDisplay, 0);

}

return true;

}

Next, eglMakeCurrent, which clearly receives the EGLSurface:

frameworks/native/opengl/libagl/egl.cpp

EGLBoolean eglMakeCurrent( EGLDisplay dpy, EGLSurface draw,

EGLSurface read, EGLContext ctx)

{

ogles_context_t* gl = (ogles_context_t*)ctx;

if (makeCurrent(gl) == 0) {

if (ctx) {

egl_context_t* c = egl_context_t::context(ctx);

egl_surface_t* d = (egl_surface_t*)draw;

egl_surface_t* r = (egl_surface_t*)read;

...

if (d) {

// dequeueBuffer-related logic

if (d->connect() == EGL_FALSE) {

return EGL_FALSE;

}

d->ctx = ctx;

d->bindDrawSurface(gl); // bind the Surface

}

...

return setError(EGL_BAD_ACCESS, EGL_FALSE);

}

Continue into d->connect().

d is an egl_surface_t. Looking further down for the implementation of connect,

we find that it is overridden by egl_window_surface_v2_t: struct egl_window_surface_v2_t : public egl_surface_t

So let's look at egl_window_surface_v2_t's connect:

EGLBoolean egl_window_surface_v2_t::connect()

{

// dequeue a buffer

int fenceFd = -1;

if (nativeWindow->dequeueBuffer(nativeWindow, &buffer,

&fenceFd) != NO_ERROR) {

return setError(EGL_BAD_ALLOC, EGL_FALSE);

}

// wait for the buffer

sp<Fence> fence(new Fence(fenceFd));

...

return EGL_TRUE;

}

nativeWindow here is the Surface:

frameworks/native/libs/gui/Surface.cpp

Surface::Surface(

const sp<IGraphicBufferProducer>& bufferProducer,

bool controlledByApp)

... {

...

ANativeWindow::dequeueBuffer = hook_dequeueBuffer;

...

}

int Surface::hook_dequeueBuffer(ANativeWindow* window,

ANativeWindowBuffer** buffer, int* fenceFd) {

Surface* c = getSelf(window);

return c->dequeueBuffer(buffer, fenceFd);

}

int Surface::dequeueBuffer(android_native_buffer_t** buffer, int* fenceFd) {

ATRACE_CALL(); // this is the label that shows up in systrace

ALOGV("Surface::dequeueBuffer");

...

FrameEventHistoryDelta frameTimestamps;

status_t result = mGraphicBufferProducer->dequeueBuffer(&buf, &fence, reqWidth, reqHeight,

reqFormat, reqUsage, &mBufferAge,

enableFrameTimestamps ? &frameTimestamps

: nullptr);

... // if reallocation is needed, call requestBuffer to request a buffer

if ((result & IGraphicBufferProducer::BUFFER_NEEDS_REALLOCATION) || gbuf == nullptr) {

// request the buffer through the GraphicBufferProducer

result = mGraphicBufferProducer->requestBuffer(buf, &gbuf);

}

...

}

draw: recursively issue OpenGL commands and submit them to the GPU for drawing

bool OpenGLPipeline::draw(const Frame& frame, const SkRect& screenDirty, const SkRect& dirty,

const FrameBuilder::LightGeometry& lightGeometry,

LayerUpdateQueue* layerUpdateQueue, const Rect& contentDrawBounds,

bool opaque, bool wideColorGamut,

const BakedOpRenderer::LightInfo& lightInfo,

const std::vector<sp<RenderNode>>& renderNodes,

FrameInfoVisualizer* profiler) {

mEglManager.damageFrame(frame, dirty);

bool drew = false;

auto& caches = Caches::getInstance();

FrameBuilder frameBuilder(dirty, frame.width(), frame.height(), lightGeometry, caches);

frameBuilder.deferLayers(*layerUpdateQueue);

layerUpdateQueue->clear();

frameBuilder.deferRenderNodeScene(renderNodes, contentDrawBounds);

BakedOpRenderer renderer(caches, mRenderThread.renderState(), opaque, wideColorGamut,

lightInfo);

frameBuilder.replayBakedOps(renderer);

ProfileRenderer profileRenderer(renderer);

profiler->draw(profileRenderer);

drew = renderer.didDraw();

// post frame cleanup

caches.clearGarbage();

caches.pathCache.trim();

caches.tessellationCache.trim();

#if DEBUG_MEMORY_USAGE

caches.dumpMemoryUsage();

#else

if (CC_UNLIKELY(Properties::debugLevel & kDebugMemory)) {

caches.dumpMemoryUsage();

}

#endif

return drew;

}

This logic is very complex, so rather than trace the code, here is a brief outline:

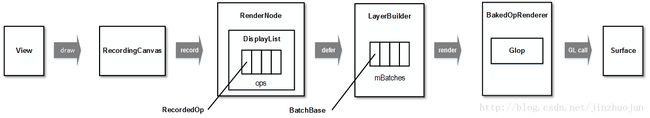

The OpenGLPipeline::draw process

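- defer: reorganizes the data structures. Each RenderNode maps to a LayerBuilder; the RecordedOps inside its DisplayList, grouped in chunks, are rewrapped as BakedOpState and stored in Batches, which are indexed by two tables according to whether they can be merged: mMergingBatchLookup and mBatchLookup.

- render: converts each Op into the corresponding OpenGL commands, which are buffered in the local GL command buffer.

- GL call: submits the OpenGL commands to the GPU for execution.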
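To make the defer step concrete, here is a much-reduced sketch of batching with a merge lookup table. It is an illustration under stated assumptions, not the FrameBuilder/LayerBuilder implementation: Op, Batch, deferOps and the use of a negative mergeId to mean "cannot be merged" are all hypothetical, and the overlap and ordering checks that real batching needs before reordering draws are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical recorded op: a kind plus a merge id (negative = not mergeable).
struct Op {
    std::string kind;
    int mergeId;
};

// A batch is replayed as one draw call in this simplified model.
struct Batch {
    std::string kind;
    int mergeId;
    std::vector<Op> ops;
};

// Groups mergeable ops with the same (kind, mergeId) into a single batch;
// every non-mergeable op gets a batch of its own.
std::vector<Batch> deferOps(const std::vector<Op>& ops) {
    std::vector<Batch> batches;
    // Plays the role of a merging lookup table: key -> index into batches.
    std::map<std::pair<std::string, int>, std::size_t> mergingLookup;
    for (const Op& op : ops) {
        if (op.mergeId >= 0) {
            const auto key = std::make_pair(op.kind, op.mergeId);
            auto it = mergingLookup.find(key);
            if (it != mergingLookup.end()) {
                assert(it->second < batches.size());
                batches[it->second].ops.push_back(op);
                continue;
            }
            mergingLookup[key] = batches.size();
        }
        batches.push_back(Batch{op.kind, op.mergeId, {op}});
    }
    return batches;
}
```

In this model the number of draw calls equals the number of batches, so six recorded ops that fall into three batches cost three draw calls instead of six.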

swapBuffers: hands the finished frame to SurfaceFlinger for composition.

bool OpenGLPipeline::swapBuffers(const Frame& frame, bool drew, const SkRect& screenDirty,

FrameInfo* currentFrameInfo, bool* requireSwap) {

GL_CHECKPOINT(LOW);

// Even if we decided to cancel the frame, from the perspective of jank

// metrics the frame was swapped at this point

currentFrameInfo->markSwapBuffers();

*requireSwap = drew || mEglManager.damageRequiresSwap();

if (*requireSwap && (CC_UNLIKELY(!mEglManager.swapBuffers(frame, screenDirty)))) {

return false;

}

return *requireSwap;

}

Next, EglManager's swapBuffers:

frameworks/base/libs/hwui/renderthread/EglManager.cpp

bool EglManager::swapBuffers(const Frame& frame, const SkRect& screenDirty) {

...

eglSwapBuffersWithDamageKHR(mEglDisplay, frame.mSurface, rects, screenDirty.isEmpty() ? 0 : 1);

...

return false;

}

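The eglSwapBuffersWithDamageKHR call corresponds to the eglSwapBuffersWithDamageKHR label in systrace. Its main job is queueBuffer. We won't trace it in detail, since the approach is the same as for dequeueBuffer; here is the final call site: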

frameworks/native/opengl/libagl/egl.cpp

EGLBoolean egl_window_surface_v2_t::swapBuffers()

{

...

nativeWindow->queueBuffer(nativeWindow, buffer, -1);

buffer = 0;

// dequeue a new buffer

int fenceFd = -1;

if (nativeWindow->dequeueBuffer(nativeWindow, &buffer, &fenceFd) == NO_ERROR) {

sp<Fence> fence(new Fence(fenceFd));

// fence->wait

if (fence->wait(Fence::TIMEOUT_NEVER)) {

nativeWindow->cancelBuffer(nativeWindow, buffer, fenceFd);

return setError(EGL_BAD_ALLOC, EGL_FALSE);

}

...

}

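Here the Buffer holding the finished drawing data is first put into the BufferQueue via queueBuffer, and SurfaceFlinger is notified to acquire that Buffer from the BufferQueue for composition. Then dequeueBuffer requests another Buffer to handle the next request. This is the classic producer-consumer pattern, covered many times in the earlier graphics-system articles; if you are interested, see the summary article of that series, "Android Graphics System Series Summary".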
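The producer-consumer cycle just described can be modeled as a small state machine over buffer slots. The sketch below is deliberately single-threaded and hypothetical: TinyBufferQueue is not the real BufferQueue class, it returns -1 where the real dequeueBuffer would normally wait, and it ignores fences and buffer allocation. The four slot states do follow the FREE, DEQUEUED, QUEUED and ACQUIRED states that BufferQueue uses.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

enum class SlotState { Free, Dequeued, Queued, Acquired };

// Hypothetical, single-threaded model of a buffer queue with a fixed slot count.
class TinyBufferQueue {
public:
    explicit TinyBufferQueue(int slotCount) : mStates(slotCount, SlotState::Free) {
        for (int slot = 0; slot < slotCount; ++slot) mFree.push_back(slot);
    }

    // Producer: take a free slot to draw into, or -1 if none is free.
    int dequeueBuffer() {
        if (mFree.empty()) return -1;
        int slot = mFree.front();
        mFree.pop_front();
        mStates[slot] = SlotState::Dequeued;
        return slot;
    }

    // Producer: hand a filled slot over to the consumer side.
    void queueBuffer(int slot) {
        assert(stateOf(slot) == SlotState::Dequeued);
        mStates[slot] = SlotState::Queued;
        mQueued.push_back(slot);
    }

    // Consumer: take the oldest queued slot for composition, or -1 if none.
    int acquireBuffer() {
        if (mQueued.empty()) return -1;
        int slot = mQueued.front();
        mQueued.pop_front();
        mStates[slot] = SlotState::Acquired;
        return slot;
    }

    // Consumer: return a slot so that the producer can reuse it.
    void releaseBuffer(int slot) {
        assert(stateOf(slot) == SlotState::Acquired);
        mStates[slot] = SlotState::Free;
        mFree.push_back(slot);
    }

    SlotState stateOf(int slot) const { return mStates.at(static_cast<std::size_t>(slot)); }

private:
    std::vector<SlotState> mStates;
    std::deque<int> mFree;
    std::deque<int> mQueued;
};
```

In this picture the app's render thread is the producer calling dequeueBuffer and queueBuffer, and SurfaceFlinger is the consumer calling acquireBuffer and releaseBuffer.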

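What follows is SurfaceFlinger's composition work, for which you can refer to an earlier article in the graphics-system series:

Android Graphics System (10): SurfaceFlinger Startup and the Layer Composition and Display Process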

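Note:

The earlier graphics-system articles are not based on Android 9.0. They are summaries from my early study, focused on getting the ideas and the flow straight.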

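Finally, here is a brief flow chart summarizing the whole hardware-accelerated drawing and rendering process; as we all know, a picture is worth a thousand words: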

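Drawing and rendering are the core of hardware acceleration. The flow chart covers only the core call flow; to truly master this material you still need to spend a lot of time in the source studying the details. This article is only a starting point. Questions and discussion are welcome, and if you found the article useful, please give it a like.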

To close, a few advantages of the hardware acceleration design:

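The DisplayList is introduced as a set of "intermediate commands" between drawing commands and GL commands. It acts as a buffer: the commands are converted into GL commands only when drawing is actually needed. A View only has to adjust the RenderNode and RenderProperties for its dirty region and then update the DisplayList, which avoids repeated drawing and repeated data organization.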

Batching and merging drawing operations reduces the number of GL draw calls, which in turn reduces render state switches and improves performance.

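Moving rendering work to RenderThread further frees up the UI thread. At the same time, graphics computation that the CPU is poor at is converted into GPU-specific instructions and executed by the GPU, which further improves rendering efficiency.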
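The first advantage, recording once and replaying many times, can be illustrated with a small sketch. Everything here is hypothetical: RecordingNode, the string-based DisplayList alias and the "gl:" prefix only stand in for a View's RenderNode, its recorded ops and their translation into GL commands.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

using DisplayList = std::vector<std::string>;

// Hypothetical node that caches recorded ops between frames.
class RecordingNode {
public:
    explicit RecordingNode(std::function<void(DisplayList&)> onDraw)
        : mOnDraw(std::move(onDraw)) {
        assert(mOnDraw != nullptr);
    }

    // Marks the cached list as stale, as an invalidated View would.
    void invalidate() { mDirty = true; }

    // Re-records only when dirty. Returns true if onDraw actually ran.
    bool updateDisplayList() {
        if (!mDirty) return false;
        mDisplayList.clear();
        mOnDraw(mDisplayList);
        mDirty = false;
        ++mRecordCount;
        return true;
    }

    // "Replay": translate the cached ops into backend commands for one frame.
    std::vector<std::string> replay() const {
        std::vector<std::string> commands;
        for (const std::string& op : mDisplayList) commands.push_back("gl:" + op);
        return commands;
    }

    int recordCount() const { return mRecordCount; }

private:
    std::function<void(DisplayList&)> mOnDraw;
    DisplayList mDisplayList;
    bool mDirty = true;
    int mRecordCount = 0;
};
```

A node that has not been invalidated can be replayed for any number of frames without its drawing callback running again; only an invalidate() forces a new recording.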

I also recommend two good articles that I consulted:

https://www.jianshu.com/p/dd800800145b

https://blog.csdn.net/jinzhuojun/article/details/54234354