Sensor Fusion: SFND_3D_Object_Tracking Source Code Walkthrough (Part 4)

camFusion_Student.cpp

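This part maps the lidar 3D point cloud onto the camera image. For the underlying theory, see this blog post:

Visual Fusion: Camera Calibration and Lidar Point Cloud Projection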

#include

double threshold = dist_mean*1.5;

for (auto it = kptMatches_roi.begin(); it != kptMatches_roi.end(); ++it)

{

float dist = cv::norm(kptsCurr.at(it->trainIdx).pt - kptsPrev.at(it->queryIdx).pt);

if (dist< threshold)

boundingBox_c.kptMatches.push_back(*it);

}

std::cout<<"curr_bbx_matches_size: "<<boundingBox_c.kptMatches.size()<<std::endl;

}
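The tail of the function above keeps only the matches whose keypoint displacement is below 1.5 times dist_mean. How dist_mean is computed is not shown in this excerpt. Assuming it is the mean displacement over all matches in the ROI, the filter can be sketched without OpenCV as follows (keepBelowMeanFactor is a hypothetical helper name, not part of the project):

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: returns the indices of the displacements that are
// strictly below factor * mean(dists). An empty input yields an empty result.
std::vector<std::size_t> keepBelowMeanFactor(const std::vector<double> &dists,
                                             double factor = 1.5)
{
    assert(factor > 0.0);
    std::vector<std::size_t> kept;
    if (dists.empty())
        return kept;

    // Assumption: dist_mean in the excerpt is the arithmetic mean.
    const double mean =
        std::accumulate(dists.begin(), dists.end(), 0.0) / dists.size();
    const double threshold = mean * factor;

    for (std::size_t i = 0; i < dists.size(); ++i)
    {
        if (dists[i] < threshold)
            kept.push_back(i);
    }
    return kept;
}
```

Because a single large outlier also raises the mean, this filter is fairly lenient; a median-based threshold would be a stricter alternative.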

// Compute time-to-collision (TTC) from keypoint correspondences in successive images

void computeTTCCamera(std::vector<cv::KeyPoint> &kptsPrev, std::vector<cv::KeyPoint> &kptsCurr,

std::vector<cv::DMatch> kptMatches, double frameRate, double &TTC, cv::Mat *visImg)

{

std::vector<double> distRatios; // distance ratios between all keypoint pairs in the current and previous frames

// Visit every unordered pair of matches once (it2 always starts after it1)

for (auto it1 = kptMatches.begin(); it1 != kptMatches.end(); ++it1)

{

cv::KeyPoint kpOuterCurr = kptsCurr.at(it1->trainIdx);

cv::KeyPoint kpOuterPrev = kptsPrev.at(it1->queryIdx);

for (auto it2 = it1 + 1; it2 != kptMatches.end(); ++it2)

{

double minDist = 100.0; // min. required distance

cv::KeyPoint kpInnerCurr = kptsCurr.at(it2->trainIdx);

cv::KeyPoint kpInnerPrev = kptsPrev.at(it2->queryIdx);

// Compute the distances and the distance ratio

double distCurr = cv::norm(kpOuterCurr.pt - kpInnerCurr.pt);

double distPrev = cv::norm(kpOuterPrev.pt - kpInnerPrev.pt);

if (distPrev > std::numeric_limits<double>::epsilon() && distCurr >= minDist)

{ // avoid division by zero

double distRatio = distCurr / distPrev;

distRatios.push_back(distRatio);

}

}

}

// Only continue if the list of distance ratios is not empty

if (distRatios.size() == 0)

{

TTC = NAN;

return;

}

std::sort(distRatios.begin(), distRatios.end());

long medIndex = floor(distRatios.size() / 2.0);

double medDistRatio = distRatios.size() % 2 == 0 ? (distRatios[medIndex - 1] + distRatios[medIndex]) / 2.0 : distRatios[medIndex]; // use the median distance ratio to suppress the influence of outliers

double dT = 1 / frameRate;

TTC = -dT / (1 - medDistRatio);

}
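The last line of computeTTCCamera can be derived from a pinhole camera model under a constant-velocity assumption. The symbols below are introduced here for illustration and do not appear in the code: $h_0$ and $h_1$ are the image distances between the same pair of keypoints in the previous and current frame (so $h_1/h_0$ plays the role of medDistRatio), $d_0$ and $d_1$ are the corresponding distances to the preceding vehicle, $H$ is the physical distance between the two points, $f$ is the focal length, $v_0$ is the closing speed, and $\Delta t$ is dT.

$$
h_0 = \frac{fH}{d_0}, \qquad h_1 = \frac{fH}{d_1} \quad\Rightarrow\quad \frac{h_1}{h_0} = \frac{d_0}{d_1}
$$

$$
d_1 = d_0 - v_0\,\Delta t \quad\Rightarrow\quad TTC = \frac{d_1}{v_0} = \frac{-\Delta t}{1 - h_1/h_0}
$$

If the ratio is exactly 1, the denominator vanishes and the TTC is infinite. A ratio below 1 gives a negative TTC, which means the gap is growing.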

void computeTTCLidar(std::vector<LidarPoint> &lidarPointsPrev,

std::vector<LidarPoint> &lidarPointsCurr, double frameRate, double &TTC)

{

int lane_wide = 4;

// Only consider lidar points within the ego lane

std::vector<float> ppx;

std::vector<float> pcx;

for(auto it = lidarPointsPrev.begin(); it != lidarPointsPrev.end(); ++it)

{

if(std::fabs(it->y) < lane_wide / 2.0) ppx.push_back(it->x);

}

for(auto it = lidarPointsCurr.begin(); it != lidarPointsCurr.end(); ++it)

{

if(std::fabs(it->y) < lane_wide / 2.0) pcx.push_back(it->x);

}

float min_px = 0.0f, min_cx = 0.0f; // accumulators for the mean x distance (despite the "min" in the names)

int p_size = ppx.size();

int c_size = pcx.size();

if(p_size > 0 && c_size > 0)

{

for(int i=0; i<p_size; i++)

{

min_px += ppx[i];

}

for(int j=0; j<c_size; j++)

{

min_cx += pcx[j];

}

}

else

{

TTC = NAN;

return;

}

min_px = min_px /p_size;

min_cx = min_cx /c_size;

std::cout<<"lidar_min_px:"<<min_px<<std::endl;

std::cout<<"lidar_min_cx:"<<min_cx<<std::endl;

float dt = 1/frameRate;

TTC = min_cx * dt / (min_px - min_cx);

}
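computeTTCLidar averages the forward distances of the in-lane points in each frame and then applies the constant-velocity formula TTC = d1 * dt / (d0 - d1). A minimal, OpenCV-free sketch of that calculation is shown below. The helper names meanDistance and ttcConstantVelocity are hypothetical, and returning NaN when the distance does not change is a choice made for this sketch rather than something the function above does.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <numeric>
#include <vector>

// Hypothetical helper: mean of the forward (x) distances; NaN for an empty set.
double meanDistance(const std::vector<double> &xs)
{
    if (xs.empty())
        return std::numeric_limits<double>::quiet_NaN();
    return std::accumulate(xs.begin(), xs.end(), 0.0) / xs.size();
}

// Hypothetical helper: constant-velocity TTC from two range estimates.
// dPrev and dCurr are distances in metres, frameRate is in Hz.
double ttcConstantVelocity(double dPrev, double dCurr, double frameRate)
{
    assert(frameRate > 0.0);
    const double dT = 1.0 / frameRate;
    const double closing = dPrev - dCurr; // positive when the gap shrinks

    // Sketch-specific choice: report NaN instead of dividing by zero.
    if (std::isnan(closing) ||
        std::fabs(closing) < std::numeric_limits<double>::epsilon())
        return std::numeric_limits<double>::quiet_NaN();

    return dCurr * dT / closing;
}
```

For example, mean distances of 8.0 m and 7.9 m at 10 Hz give a TTC of roughly 7.9 s. Using the mean makes the estimate less sensitive to a single stray point than using the closest point would be.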

void matchBoundingBoxes(std::vector<cv::DMatch> &matches, std::map<int, int> &bbBestMatches, DataFrame &prevFrame, DataFrame &currFrame)

{

// Note: after calling cv::DescriptorMatcher::match, each DMatch

// contains two keypoint indices, queryIdx and trainIdx, assigned according to the order of the image arguments.

// https://docs.opencv.org/4.1.0/db/d39/classcv_1_1DescriptorMatcher.html#a0f046f47b68ec7074391e1e85c750cba

// prevFrame.keypoints is indexed by queryIdx

// currFrame.keypoints is indexed by trainIdx

int p = prevFrame.boundingBoxes.size();

int c = currFrame.boundingBoxes.size();

std::vector<std::vector<int>> pt_counts(p, std::vector<int>(c, 0)); // votes per (previous box, current box) pair

for (auto it = matches.begin(); it != matches.end(); ++it)

{

cv::KeyPoint query = prevFrame.keypoints[it->queryIdx];

auto query_pt = cv::Point(query.pt.x, query.pt.y);

bool query_found = false;

cv::KeyPoint train = currFrame.keypoints[it->trainIdx];

auto train_pt = cv::Point(train.pt.x, train.pt.y);

bool train_found = false;

std::vector<int> query_id, train_id;

for (int i = 0; i < p; i++)

{

if (prevFrame.boundingBoxes[i].roi.contains(query_pt))

{

query_found = true;

query_id.push_back(i);

}

}

for (int i = 0; i < c; i++)

{

if (currFrame.boundingBoxes[i].roi.contains(train_pt))

{

train_found= true;

train_id.push_back(i);

}

}

if (query_found && train_found)

{

for (auto id_prev: query_id)

for (auto id_curr: train_id)

pt_counts[id_prev][id_curr] += 1;

}

}

for (int i = 0; i < p; i++)

{

int max_count = 0;

int id_max = 0;

for (int j = 0; j < c; j++)

if (pt_counts[i][j] > max_count)

{

max_count = pt_counts[i][j];

id_max = j;

}

if (max_count > 0) bbBestMatches[i] = id_max; // skip previous boxes that received no votes

}

}
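The voting scheme in matchBoundingBoxes can be illustrated without OpenCV. The sketch below uses plain structs in place of cv::Rect and cv::DMatch; Box, PointPair and voteBoxMatches are hypothetical names introduced for this example. The containment test is half-open, which is how cv::Rect::contains behaves, and previous boxes that receive no votes are left out of the result.

```cpp
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical stand-in for cv::Rect with a half-open containment test.
struct Box
{
    int x, y, w, h;
    bool contains(int px, int py) const
    {
        return px >= x && px < x + w && py >= y && py < y + h;
    }
};

// Hypothetical stand-in for a keypoint match: pixel position in each frame.
struct PointPair
{
    int prevX, prevY, currX, currY;
};

// For every previous box, pick the current box that shares the most matches.
std::map<int, int> voteBoxMatches(const std::vector<Box> &prevBoxes,
                                  const std::vector<Box> &currBoxes,
                                  const std::vector<PointPair> &pairs)
{
    std::vector<std::vector<int>> votes(
        prevBoxes.size(), std::vector<int>(currBoxes.size(), 0));

    for (const PointPair &m : pairs)
        for (std::size_t i = 0; i < prevBoxes.size(); ++i)
            if (prevBoxes[i].contains(m.prevX, m.prevY))
                for (std::size_t j = 0; j < currBoxes.size(); ++j)
                    if (currBoxes[j].contains(m.currX, m.currY))
                        ++votes[i][j];

    std::map<int, int> best;
    for (std::size_t i = 0; i < prevBoxes.size(); ++i)
    {
        int bestJ = -1, bestCount = 0;
        for (std::size_t j = 0; j < currBoxes.size(); ++j)
            if (votes[i][j] > bestCount)
            {
                bestCount = votes[i][j];
                bestJ = static_cast<int>(j);
            }
        if (bestJ >= 0)
            best[static_cast<int>(i)] = bestJ; // only boxes with at least one vote
    }
    return best;
}
```

A keypoint that lies in several overlapping boxes votes for every combination, as in the original loop, so heavily overlapping boxes can still be confused with each other.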

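This section explains how to map a top view of the lidar point cloud (horizontal coordinates only) onto a 2D image. It covers filtering out the ground portion of the point cloud and a simple mapping between data in different coordinate ranges. The focus is on the method; the code is for reference only and can be adapted to your own use case.

The lidar data used here comes from the KITTI dataset and was captured with a Velodyne HDL-64E lidar, which operates at 10 Hz and captures roughly 100,000 points per cycle. For more information, see the sensor setup section of the KITTI dataset documentation.

In the KITTI dataset, this lidar point cloud data is already synchronized with a forward-facing camera. The lidar data used here was captured in a scene similar to the one shown in the image below.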

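Implementation

1. Load the lidar point cloud data from a data file taken from the KITTI dataset.

2. Initialize an image of a specific pixel size (1000*2000, width by height).

3. Based on the range of the lidar point cloud to display and the pixel range of the image, map the horizontal coordinates of each lidar point to a specific pixel. The lidar world coordinate system is right-handed: the x axis points in the driving direction and the y axis points to the left. In the image coordinate system, the origin is the top-left corner, and x and y are the column index and row index respectively.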

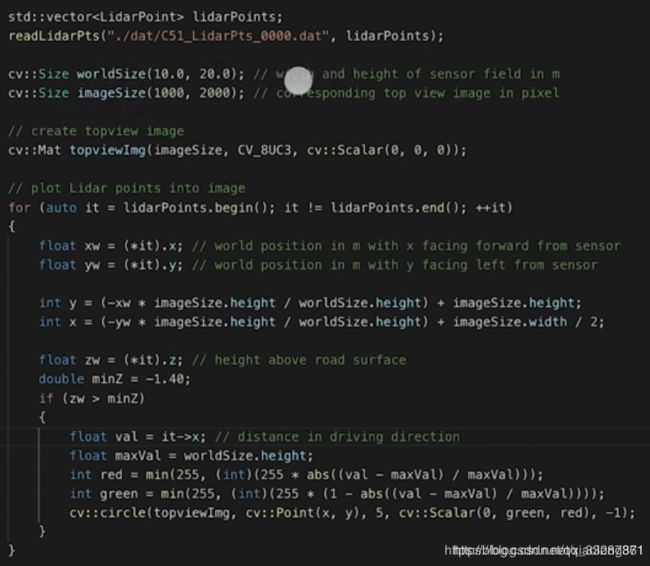

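See the following code for the details:

// Reference definition: CvSize cvSize( int width, int height );

cv::Size worldSize(10.0,20.0); // extent of the lidar point cloud to display, in world coordinates

cv::Size imageSize(1000.0,2000.0); // pixel size of the output image (same aspect ratio as the world extent)

...

float xw=(*it).x; // longitudinal (forward) coordinate of the lidar point in world coordinates

float yw=(*it).y; // lateral coordinate of the lidar point in world coordinates (positive to the left of the vehicle)

// x, y are the point's coordinates in the image: x is the column index and y is the row index.

// (Adjust accordingly if your coordinate systems are related differently.)

int x = (-yw * imageSize.height / worldSize.height) + imageSize.width / 2;

int y = (-xw * imageSize.height / worldSize.height) + imageSize.height;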

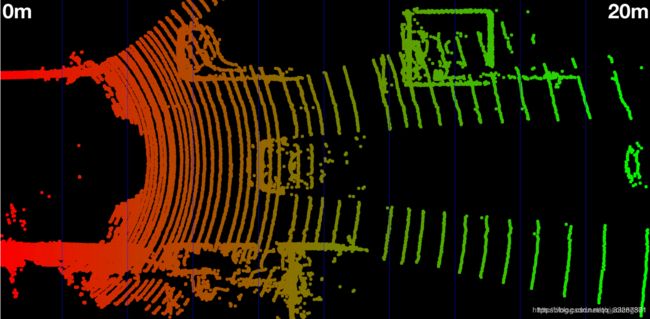
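The mapping above can be wrapped in a small standalone function to make it easy to check by hand. This is a sketch that uses plain integers instead of cv::Size; Pixel and worldToImage are hypothetical names. Like the snippet above, it uses a single scale factor (image height over world height) for both axes, which assumes the image and the world extent share the same aspect ratio.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical pixel type: x is the column index, y is the row index.
struct Pixel
{
    int x;
    int y;
};

// Maps a lidar point (xw forward, yw to the left, in metres) to a pixel.
// worldHeight is the forward range to display, in metres.
Pixel worldToImage(double xw, double yw, double worldHeight,
                   int imageWidth, int imageHeight)
{
    assert(worldHeight > 0.0);
    const double scale = imageHeight / worldHeight; // pixels per metre

    Pixel p;
    // Lateral offset: the vehicle sits on the middle column, left is negative x.
    p.x = static_cast<int>(std::floor(-yw * scale + imageWidth / 2));
    // Forward distance: the vehicle sits on the bottom row, forward is up.
    p.y = static_cast<int>(std::floor(-xw * scale + imageHeight));
    return p;
}
```

With a 20 m forward range and a 1000 by 2000 image, the sensor origin lands at column 500 on the bottom edge (row 2000, just outside the image), and a point 10 m ahead and 5 m to the left lands at column 0, row 1000. Callers still need to discard pixels that fall outside the image.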

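The result after the conversion is shown in the image below:

4. Depending on the lidar's mounting height above the ground, set a suitable threshold variable, double minZ = -1.40. Only lidar points above this threshold go through the mapping described above, which filters ground returns out of the point cloud. The final mapped image looks as follows: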


Reference code

Reference code for displaying the lidar point cloud top view as an image:
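The original listing is not reproduced here. As a rough, OpenCV-free sketch of steps 3 and 4 (not the author's code), the function below drops points at or below minZ, maps the remaining points to pixels, and counts hits per pixel in a row-major buffer. LidarPt and rasterizeTopView are hypothetical names; an OpenCV version would draw into a cv::Mat instead of counting.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical point type: x forward, y to the left, z up, all in metres.
struct LidarPt
{
    double x, y, z;
};

// Returns a row-major imageWidth * imageHeight buffer of hit counts.
std::vector<int> rasterizeTopView(const std::vector<LidarPt> &points,
                                  double worldHeight, int imageWidth,
                                  int imageHeight, double minZ)
{
    assert(worldHeight > 0.0 && imageWidth > 0 && imageHeight > 0);
    std::vector<int> counts(
        static_cast<std::size_t>(imageWidth) * imageHeight, 0);
    const double scale = imageHeight / worldHeight; // pixels per metre

    for (const LidarPt &p : points)
    {
        if (p.z <= minZ)
            continue; // treat as a ground return and skip it

        const int col = static_cast<int>(std::floor(-p.y * scale + imageWidth / 2));
        const int row = static_cast<int>(std::floor(-p.x * scale + imageHeight));
        if (col < 0 || col >= imageWidth || row < 0 || row >= imageHeight)
            continue; // outside the displayed range

        ++counts[static_cast<std::size_t>(row) * imageWidth + col];
    }
    return counts;
}
```

A fixed height threshold only works on roughly flat roads with a known sensor height; on slopes, a fitted ground plane would be a more robust way to remove ground points.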