Introductory Parallel Computing Examples (Reposted)

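First, CUDA programming, which takes three steps: copy the data from host memory to device (GPU) memory, run the computation on the GPU, and copy the result from device memory back to host memory.

CUDA C bubble sort example (the kernel uses the odd-even transposition variant of bubble sort, in which each phase compares disjoint neighbouring pairs in parallel):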

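#include "cuda_runtime.h"
#include "device_launch_parameters.h"
#include <stdio.h>
#include <stdlib.h>

#define N 400 // array length; the kernel runs one block of N threads, so N must not exceed the per-block thread limit

void random_ints(int *);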

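__global__ void myKernel(int *d_a)
{
    __shared__ int s_a[N]; // shared-memory copy of the array
    int tid = threadIdx.x;
    s_a[tid] = d_a[tid]; // each thread loads its own element into shared memory
    __syncthreads(); // wait until the whole array has been loaded
    for (int i = 1; i <= N; i++) { // at most N phases are needed to sort N elements
        if (i % 2 == 1 && (2 * tid + 1) < N) { // odd phase: compare pairs (0,1), (2,3), ...
            if (s_a[2 * tid] > s_a[2 * tid + 1]) {
                int temp = s_a[2 * tid];
                s_a[2 * tid] = s_a[2 * tid + 1];
                s_a[2 * tid + 1] = temp;
            }
        }
        __syncthreads(); // all threads reach this barrier, whether or not they swapped
        if (i % 2 == 0 && (2 * tid + 2) < N) { // even phase: compare pairs (1,2), (3,4), ...
            if (s_a[2 * tid + 1] > s_a[2 * tid + 2]) {
                int temp = s_a[2 * tid + 1];
                s_a[2 * tid + 1] = s_a[2 * tid + 2];
                s_a[2 * tid + 2] = temp;
            }
        }
        __syncthreads(); // finish this phase before the next one starts
    }
    d_a[tid] = s_a[tid]; // write the sorted result back to global memory
}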

int main()
{
    // Declare the host and device pointers
    int *a, *d_a;
    int size = N * sizeof(int);
    // Allocate host memory
    a = (int *)malloc(size);
    // Fill the array to be sorted
    random_ints(a);
    // Allocate device memory
    cudaMalloc((void **)&d_a, size);
    // Copy the data from host to device
    cudaMemcpy(d_a, a, size, cudaMemcpyHostToDevice);
    // Launch the kernel: one block of N threads
    myKernel<<<1, N>>>(d_a);
    // Copy the data from device to host (this call waits for the kernel to finish)
    cudaMemcpy(a, d_a, size, cudaMemcpyDeviceToHost);
    // Print the sorted result
    for (int i = 0; i < N; i++) {
        printf("%d ", a[i]);
    }
    printf("\n");
    // Release memory
    free(a);
    cudaFree(d_a);
    return 0;
}

void random_ints(int *a)
{
    if (!a) { // guard against a null pointer
        return;
    }
    for (int i = 0; i < N; i++) {
        a[i] = rand() % N; // random integer in the range 0 to N-1
    }
}
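To make the phase structure of the kernel easier to follow, here is a minimal serial sketch of the same odd-even transposition scheme in plain C. The function names `compare_swap` and `odd_even_sort` are illustrative and do not appear in the original program. Within one phase the inner loop touches disjoint pairs, which is why the GPU version can hand each pair to a separate thread.

```c
#include <assert.h>
#include <stddef.h>

/* Swap a[i] and a[i + 1] if they are out of order. */
static void compare_swap(int *a, size_t i)
{
    if (a[i] > a[i + 1]) {
        int tmp = a[i];
        a[i] = a[i + 1];
        a[i + 1] = tmp;
    }
}

/* Serial odd-even transposition sort (hypothetical helper).
 * Runs n phases, mirroring the kernel's loop from 1 to N. */
void odd_even_sort(int *a, size_t n)
{
    assert(a != NULL || n == 0);
    for (size_t phase = 1; phase <= n; phase++) {
        /* Odd phases start at index 0: pairs (0,1), (2,3), ...
         * Even phases start at index 1: pairs (1,2), (3,4), ... */
        size_t first = (phase % 2 == 1) ? 0 : 1;
        /* The pairs in one phase do not overlap, so these
         * iterations are independent of each other. */
        for (size_t i = first; i + 1 < n; i += 2) {
            compare_swap(a, i);
        }
    }
}
```

Calling `odd_even_sort(a, n)` on an `int` array sorts it in ascending order in place. The serial version does O(n^2) comparisons; the kernel does the same comparisons but runs each phase's pairs concurrently.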

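Next, OpenMP: in the simplest case it is enough to add a single directive (#pragma omp parallel for) in front of the time-consuming loop, provided the loop iterations are independent of one another.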
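As a small illustration of the "one added line" idea, the sketch below parallelizes an element-wise loop. The function `scale_add` and its parameters are made up for this example. When the file is compiled without OpenMP support the pragma is simply ignored and the loop runs serially, so the result is the same either way.

```c
#include <assert.h>
#include <stddef.h>

/* Computes y[i] = alpha * x[i] + y[i] for i in [0, n).
 * scale_add is a hypothetical helper, not from the original post. */
void scale_add(int n, float alpha, const float *x, float *y)
{
    assert(n >= 0 && x != NULL && y != NULL);
    /* The single added line: split the iterations across threads.
     * Each iteration writes a different y[i], so no two threads
     * touch the same element. */
    #pragma omp parallel for
    for (int i = 0; i < n; i++) {
        y[i] = alpha * x[i] + y[i];
    }
}
```

With GCC or Clang, OpenMP is typically enabled with the `-fopenmp` flag. Loops that accumulate into a shared variable need more than the bare directive, for example a `reduction` clause as in the pi program later in this post.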

Finally, MPI: six basic functions are enough to write a complete program.

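MPI initialization: MPI_Init enters the MPI environment and performs all initialization work.

int MPI_Init(int *argc, char ***argv)

MPI termination: MPI_Finalize leaves the MPI environment.

int MPI_Finalize(void)

Getting the process rank: MPI_Comm_rank returns the rank of the calling process within the given communicator, which lets a process distinguish itself from the others.

int MPI_Comm_rank(MPI_Comm comm, int *rank)

Getting the number of processes in a communicator: MPI_Comm_size returns the number of processes in the given communicator, so that each process can determine its share of the work.

int MPI_Comm_size(MPI_Comm comm, int *size)

Sending a message: MPI_Send sends a message to the destination process.

int MPI_Send(const void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

Receiving a message: MPI_Recv receives a message from the specified source process.

int MPI_Recv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)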

Pi calculation examples:
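All three programs below approximate the same integral with the midpoint rule. The derivation can be written out as follows, where n stands for the number of steps (num_steps, N, or n in the code) and Δx for the variable step:

```latex
\pi \;=\; 4\arctan(1) \;=\; \int_0^1 \frac{4}{1+x^2}\,dx
\;\approx\; \Delta x \sum_{i=0}^{n-1} \frac{4}{1+x_i^2},
\qquad x_i = (i + 0.5)\,\Delta x,\quad \Delta x = \frac{1}{n}
```

Each term of the sum depends only on its own index i, so the terms can be computed in any order and on any processor; the only shared work is adding the partial sums together at the end.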

【OpenMP_PI】

#include <omp.h>
#include <stdio.h>
#include <time.h>

#define NUM_THREADS 8

double seriel_pi();
double parallel_pi();

static long num_steps = 1000000000;
double step;

void main()

{

clock_t start; float time; double pi;

start = clock();

pi=seriel_pi();

time = (float)(clock() - start);

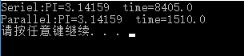

printf("Seriel:PI=%.5f time=%.1f\n", pi,time);

start = clock();

pi = parallel_pi();

time = (float)(clock() - start);

printf("Parallel:PI=%.5f time=%.1f\n", pi, time);

}

double parallel_pi() {
    int i; double x, pi, sum = 0.0;
    step = 1.0 / (double)num_steps;
    omp_set_num_threads(NUM_THREADS);
    // x is private to each thread; the per-thread sums are combined with +
#pragma omp parallel for private(x) reduction(+:sum)
    for (i = 0; i < num_steps; i++) {
        x = (i + 0.5) * step; // midpoint of step i
        sum += 4.0 / (1.0 + x * x);
    }
    pi = sum * step;
    return pi;
}

double seriel_pi() {
    double x, pi, sum = 0.0;
    step = 1.0 / (double)num_steps;
    for (int i = 0; i < num_steps; i++) {
        x = (i + 0.5) * step;
        sum = sum + 4.0 / (1.0 + x * x);
    }
    pi = step * sum;
    return pi;
}

【Cuda_PI】

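#include "cuda_runtime.h"
#include "device_launch_parameters.h"
#include <stdio.h>
#include <stdlib.h>
#include <time.h>

double seriel_pi();

#define B 128 // number of blocks (a power of two, as the reduction in reducePI2 requires)
#define T 512 // threads per block (a power of two, as the reduction in reducePI1 requires)
#define N 1000000000 // number of integration steps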

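__global__ void reducePI1(double *d_sum) {
    int gid = threadIdx.x + blockIdx.x * blockDim.x; // global thread index
    __shared__ double s_sum[T]; // one slot per thread in the block
    double x, local = 0.0; // per-thread partial sum
    while (gid < N) { // there are fewer threads than steps, so each thread accumulates several steps
        x = (gid + 0.5) / N; // midpoint of the current step
        local += 4.0 / (1.0 + x * x); // function value at x
        gid += blockDim.x * gridDim.x; // advance by the total number of threads
    }
    s_sum[threadIdx.x] = local;
    // Synchronize once, after the loop: threads can run different numbers of
    // iterations, so a barrier inside the loop would not be reached by all of them
    __syncthreads();
    for (int i = (blockDim.x >> 1); i > 0; i >>= 1) { // reduce into s_sum[0]
        if (threadIdx.x < i) {
            s_sum[threadIdx.x] += s_sum[threadIdx.x + i];
        }
        __syncthreads();
    }
    if (threadIdx.x == 0) {
        d_sum[blockIdx.x] = s_sum[0]; // each block stores its own partial sum
    }
}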

__global__ void reducePI2(double *d_sum) {
    int id = threadIdx.x;
    __shared__ double s_pi[B];
    s_pi[id] = d_sum[id]; // copy the per-block sums into shared memory
    __syncthreads();
    for (int i = (blockDim.x >> 1); i > 0; i >>= 1) { // reduce into s_pi[0]
        if (id < i) {
            s_pi[id] += s_pi[id + i];
        }
        __syncthreads();
    }
    if (id == 0) {
        d_sum[0] = s_pi[0] / N; // multiply the total by the step width 1/N
    }
}

int main()
{
    // Declare variables
    double *pi, *d_sum;
    clock_t start; float time; // elapsed time in seconds
    // Allocate host memory
    pi = (double *)malloc(sizeof(double));
    // Allocate device memory: one partial sum per block
    cudaMalloc((void **)&d_sum, B * sizeof(double));
    // Serial computation
    start = clock();
    pi[0] = seriel_pi();
    time = (float)(clock() - start) / CLOCKS_PER_SEC;
    printf("Serial:PI=%.5f time=%.3f s\n", pi[0], time);
    // Parallel computation
    start = clock();
    // Launch the kernels: per-block sums first, then the final reduction
    reducePI1<<<B, T>>>(d_sum);
    reducePI2<<<1, B>>>(d_sum);
    // Copy the result from device to host (this call waits for both kernels to finish)
    cudaMemcpy(pi, d_sum, sizeof(double), cudaMemcpyDeviceToHost);
    time = (float)(clock() - start) / CLOCKS_PER_SEC;
    printf("Parallel:PI=%.5f time=%.3f s\n", pi[0], time);
    // Release memory
    free(pi);
    cudaFree(d_sum);
    return 0;
}

double seriel_pi() {
    double x, pi, step, sum = 0.0;
    step = 1.0 / (double)N;
    for (int i = 0; i < N; i++) {
        x = (i + 0.5) * step;
        sum = sum + 4.0 / (1.0 + x * x);
    }
    pi = step * sum;
    return pi;
}
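Both kernels above end with the same shared-memory reduction loop, in which the stride is halved every round. A minimal serial sketch of that pattern in plain C may help; the function name `tree_reduce` is illustrative and not part of the original program, and like the kernels it assumes the length is a power of two.

```c
#include <assert.h>
#include <stddef.h>

/* Sums buf[0..n-1] in place with a halving stride and returns the
 * total, which ends up in buf[0]. n must be a power of two.
 * tree_reduce is a hypothetical helper for illustration. */
double tree_reduce(double *buf, size_t n)
{
    assert(buf != NULL && n > 0 && (n & (n - 1)) == 0);
    for (size_t stride = n >> 1; stride > 0; stride >>= 1) {
        /* On the GPU, each id below stride is handled by its own
         * thread, and __syncthreads() separates the rounds. */
        for (size_t id = 0; id < stride; id++) {
            buf[id] += buf[id + stride];
        }
    }
    return buf[0];
}
```

For 8 elements this takes 3 rounds (strides 4, 2, 1) instead of 7 sequential additions; in general the parallel version needs log2(n) rounds. If the length were not a power of two, some elements would never be added, which is why B and T are chosen as 128 and 512.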

【MPI_PI】

#include <stdio.h>
#include "mpi.h"
#include <math.h>

#pragma comment (lib, "msmpi.lib") // links against MS-MPI; this pragma is specific to Microsoft Visual C++

int main(int argc, char *argv[]) {
    int my_rank, num_procs;
    int i, n = 100000000;
    double sum = 0.0, step, x, mypi, pi;
    double start = 0.0, stop = 0.0;
    MPI_Init(&argc, &argv); // initialize the MPI environment
    MPI_Comm_size(MPI_COMM_WORLD, &num_procs); // number of processes running in parallel
    MPI_Comm_rank(MPI_COMM_WORLD, &my_rank); // rank of this process among all processes
    start = MPI_Wtime();
    step = 1.0 / n;
    for (i = my_rank; i < n; i += num_procs) { // process r handles steps r, r + num_procs, r + 2*num_procs, ...
        x = step * ((double)i + 0.5);
        sum += 4.0 / (1.0 + x * x);
    }
    mypi = step * sum;
    // Reduce onto process 0: add up (MPI_SUM) the mypi computed by every process and store the total in pi
    MPI_Reduce(&mypi, &pi, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);
    if (my_rank == 0) {
        stop = MPI_Wtime(); // stop the timer before printing
        printf("PI is %.20f\n", pi);
        printf("Time: %f\n", stop - start);
        fflush(stdout);
    }
    MPI_Finalize();
    return 0;
}
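The work split used by the MPI program (process r takes steps r, r + num_procs, r + 2*num_procs, and so on) can be checked without an MPI installation. The sketch below is a serial simulation in plain C, not MPI code: `partial_pi` and `simulated_reduce` are hypothetical names, and the loop over ranks merely stands in for what MPI_Reduce with MPI_SUM does across real processes.

```c
#include <assert.h>

/* Partial result that the process with the given rank would compute:
 * it handles steps rank, rank + num_procs, rank + 2*num_procs, ...
 * (hypothetical helper, mirrors the loop in the MPI program). */
double partial_pi(int rank, int num_procs, int n)
{
    assert(n > 0 && num_procs > 0 && rank >= 0 && rank < num_procs);
    double step = 1.0 / n;
    double sum = 0.0;
    for (int i = rank; i < n; i += num_procs) {
        double x = step * ((double)i + 0.5);
        sum += 4.0 / (1.0 + x * x);
    }
    return step * sum;
}

/* Serial stand-in for MPI_Reduce with MPI_SUM: adds the partial
 * results of all ranks, one after another. */
double simulated_reduce(int num_procs, int n)
{
    double pi = 0.0;
    for (int rank = 0; rank < num_procs; rank++) {
        pi += partial_pi(rank, num_procs, n);
    }
    return pi;
}
```

Every step index from 0 to n-1 belongs to exactly one rank, so the partial results always add up to the full midpoint sum, whatever the number of processes. The totals for different process counts can differ in the last few digits because floating-point addition is performed in a different order.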