ROS: Motion Detection with Hikvision and OpenCV

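Main function:

Read the video stream from a Hikvision video server, process it with OpenCV, and draw a red box around the contour of each moving object in the frame.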
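As a rough illustration of the idea (not the author's implementation, which relies on OpenCV), the sketch below detects motion by differencing two grayscale frames and computes the bounding box of the pixels that changed. The names `Box` and `motion_box`, the flat row-major frame layout, and the threshold parameter are assumptions made for this example only.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Bounding box of the changed region; corners are inclusive pixel coordinates.
struct Box {
    bool found;
    int x0, y0, x1, y1;
};

// Compare two grayscale frames (row-major, one byte per pixel) and return the
// smallest box that contains every pixel whose absolute difference exceeds
// `threshold`. `found` is false when no pixel changed enough.
Box motion_box(const std::vector<std::uint8_t>& prev,
               const std::vector<std::uint8_t>& cur,
               int width, int height, int threshold)
{
    assert(width > 0 && height > 0);
    assert(prev.size() == static_cast<std::size_t>(width) * height);
    assert(cur.size() == prev.size());

    Box box = {false, 0, 0, 0, 0};
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            const int diff = std::abs(static_cast<int>(cur[i]) - static_cast<int>(prev[i]));
            if (diff <= threshold) {
                continue;  // pixel did not change enough
            }
            if (!box.found) {
                box.found = true;
                box.x0 = box.x1 = x;
                box.y0 = box.y1 = y;
            } else {
                box.x0 = std::min(box.x0, x);
                box.x1 = std::max(box.x1, x);
                box.y1 = std::max(box.y1, y);  // rows are scanned top to bottom, so y0 is already minimal
            }
        }
    }
    return box;
}
```

A single box around all changed pixels is the simplest case; boxing each moving object separately requires grouping the changed pixels into connected regions first.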

I. Download the Hikvision SDK that matches your computer

Hikvision official website

II. Configure the environment

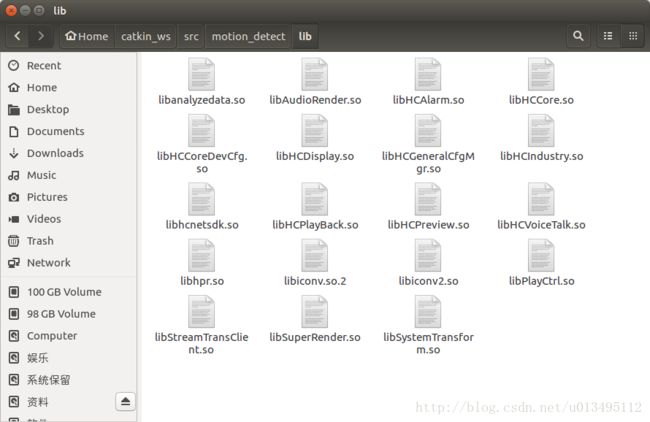

1. Install the library files provided by Hikvision into /usr/lib and /lib

Steps:

sudo chmod 777 lib/*

sudo cp lib/* /usr/lib/

sudo cp lib/* /lib/

cd /usr/lib

sudo ldconfig

cd /lib/

sudo ldconfig

III. Code

1. motion_detection.cpp

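// The header names and global declarations marked "assumed" are inferred from how the code below uses them; the definition of update_mhi() is not part of this listing.

#include <cstdio> // printf (assumed)

#include <ctime> // time (assumed)

#include <unistd.h> // sleep (assumed)

#include <opencv2/opencv.hpp> // OpenCV (assumed)

#include "HCNetSDK.h" // NET_DVR_* network SDK (assumed)

#include "LinuxPlayM4.h" // PlayM4_* playback library (assumed)

using namespace cv; // waitKey() is called unqualified below (assumed)

LONG nPort = -1; // playback library port, -1 until one is acquired (assumed)

HWND hWnd = 0; // no display window (assumed)

// Convert a planar YV12 frame into a packed 3-channel image

void yv12toYUV(char *outYuv, char *inYv12, int width, int height, int widthStep)

{

int col, row;

unsigned int Y, U, V;

int tmp;

int idx;

for (row=0; row<height; row++)

{

idx=row*widthStep;

int rowptr=row*width;

for (col=0; col<width; col++)

{

//int colhalf=col>>1;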

tmp = (row/2)*(width/2)+(col/2);

Y=(unsigned int) inYv12[row*width+col];

U=(unsigned int) inYv12[width*height+width*height/4+tmp];

V=(unsigned int) inYv12[width*height+tmp];

outYuv[idx+col*3] = Y;

outYuv[idx+col*3+1] = U;

outYuv[idx+col*3+2] = V;

}

}

}
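The index arithmetic in yv12toYUV follows the YV12 memory layout: a full-resolution Y plane, then a quarter-size V plane, then a quarter-size U plane, with each chroma sample shared by a 2x2 block of pixels. The sketch below restates that arithmetic as a standalone function so it can be checked on a tiny frame. `Yv12Offsets` and `yv12_offsets` are names invented for this example, and even frame dimensions are assumed.

```cpp
#include <cassert>
#include <cstddef>

// Byte offsets of the Y, U and V samples of one pixel inside a YV12 buffer.
struct Yv12Offsets {
    std::size_t y, u, v;
};

// YV12 layout: [Y plane: width*height][V plane: width*height/4][U plane: width*height/4].
Yv12Offsets yv12_offsets(int width, int height, int row, int col)
{
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    assert(row >= 0 && row < height && col >= 0 && col < width);

    const std::size_t w = static_cast<std::size_t>(width);
    const std::size_t ySize = w * static_cast<std::size_t>(height);
    // Index of the shared chroma sample for the 2x2 block containing (row, col).
    const std::size_t chroma = static_cast<std::size_t>(row / 2) * (w / 2)
                             + static_cast<std::size_t>(col / 2);

    Yv12Offsets o;
    o.y = static_cast<std::size_t>(row) * w + static_cast<std::size_t>(col);
    o.v = ySize + chroma;              // V plane comes first in YV12
    o.u = ySize + ySize / 4 + chroma;  // U plane follows the V plane
    return o;
}
```

For a 4x2 frame the buffer holds 12 bytes (8 Y, 2 V, 2 U), and the pixel at row 1, column 3 reads Y from offset 7, V from offset 9 and U from offset 11.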

// Decode callback: video arrives as YUV data (YV12), audio as PCM data

void CALLBACK DecCBFun(int nPort,char * pBuf,int nSize,FRAME_INFO * pFrameInfo, int nReserved1,int nReserved2)

{

long lFrameType = pFrameInfo->nType;

if(lFrameType ==T_YV12) //YV12

{

IplImage* pImgYCrCb = cvCreateImage(cvSize(pFrameInfo->nWidth,pFrameInfo->nHeight), IPL_DEPTH_8U, 3);// 3-channel buffer for the packed YUV image

yv12toYUV(pImgYCrCb->imageData, pBuf, pFrameInfo->nWidth,pFrameInfo->nHeight,pImgYCrCb->widthStep);// unpack the planar YV12 frame into it

// Allocate the output images

IplImage* pImg = cvCreateImage(cvSize(pFrameInfo->nWidth,pFrameInfo->nHeight), IPL_DEPTH_8U, 3);

IplImage* motion = cvCreateImage(cvSize(pFrameInfo->nWidth,pFrameInfo->nHeight), IPL_DEPTH_8U, 1);

cvCvtColor(pImgYCrCb,pImg,CV_YCrCb2RGB);

update_mhi( pImg, motion, 60 );//detect motion

cvShowImage( "Motion", pImg );

cvWaitKey(1);

cvReleaseImage(&pImgYCrCb);

cvReleaseImage(&pImg);

cvReleaseImage(&motion); // motion is allocated for every frame above and must be released as well

}

if(lFrameType ==T_AUDIO16)

{

//PCM

}

}

// Real-time stream callback

void CALLBACK fRealDataCallBack(LONG lRealHandle,DWORD dwDataType,BYTE *pBuffer,DWORD dwBufSize,void *pUser)

{

DWORD dRet;

switch (dwDataType)

{

case NET_DVR_SYSHEAD: // system header

if (!PlayM4_GetPort(&nPort)) // get an unused port number from the playback library

{

break;

}

if(dwBufSize > 0)

{

if (!PlayM4_OpenStream(nPort,pBuffer,dwBufSize,1024*1024))

{

dRet=PlayM4_GetLastError(nPort);

break;

}

// Set the decode callback: decode only, no display

if (!PlayM4_SetDecCallBack(nPort,DecCBFun))

{

dRet=PlayM4_GetLastError(nPort);

break;

}

// Start video decoding

if (!PlayM4_Play(nPort,hWnd))

{

dRet=PlayM4_GetLastError(nPort);

break;

}

// Start audio decoding; requires a composite (audio + video) stream

if (!PlayM4_PlaySoundShare(nPort))

{

dRet=PlayM4_GetLastError(nPort);

break;

}

}

break;

case NET_DVR_STREAMDATA: // stream data (composite stream, or separate video/audio)

if (dwBufSize > 0 && nPort != -1)

{

BOOL inData=PlayM4_InputData(nPort,pBuffer,dwBufSize);

while (!inData)

{

printf("PlayM4_InputData failed \n");

usleep(10 * 1000); // wait 10 ms before retrying; waitKey(0) would block until a key is pressed

inData=PlayM4_InputData(nPort,pBuffer,dwBufSize);

}

}

break;

}

}
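The NET_DVR_STREAMDATA branch above retries PlayM4_InputData until the call succeeds, which can stall the callback indefinitely if the decoder never accepts the data. A bounded variant of that pattern might look like the sketch below. `retry_until` is a hypothetical helper written for this example, not part of the Hikvision SDK, and the callable stands in for the SDK call.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

// Call `attempt` up to `maxAttempts` times, pausing between failed tries.
// Returns true as soon as one attempt succeeds, false if all of them fail.
bool retry_until(const std::function<bool()>& attempt,
                 int maxAttempts,
                 std::chrono::milliseconds pause)
{
    assert(maxAttempts > 0);
    for (int i = 0; i < maxAttempts; ++i) {
        if (attempt()) {
            return true;
        }
        if (i + 1 < maxAttempts) {
            std::this_thread::sleep_for(pause);  // give the consumer time to drain its buffer
        }
    }
    return false;
}
```

In the stream callback, the callable could be a lambda wrapping PlayM4_InputData(nPort, pBuffer, dwBufSize); when `retry_until` returns false, the packet would be dropped rather than blocking the SDK thread.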

void CALLBACK g_ExceptionCallBack(DWORD dwType, LONG lUserID, LONG lHandle, void *pUser)

{

char tempbuf[256] = {0};

switch(dwType)

{

case EXCEPTION_RECONNECT: // reconnecting during preview

printf("----------reconnect--------%ld\n", time(NULL));

break;

default:

break;

}

}

int main(int argc,char ** argv) {

//---------------------------------------

// Initialize the SDK

NET_DVR_Init();

// Set the connection timeout and the reconnection interval

NET_DVR_SetConnectTime(2000, 1);

NET_DVR_SetReconnect(10000, true);

// Log in to the device

LONG lUserID;

NET_DVR_DEVICEINFO_V30 struDeviceInfo;

lUserID = NET_DVR_Login_V30("192.168.1.111", 8000, "admin", "12345", &struDeviceInfo);

if (lUserID < 0)

{

printf("Login error, %d\n", NET_DVR_GetLastError());

NET_DVR_Cleanup();

return HPR_ERROR;

} else

printf("Login success!\n");

//---------------------------------------

// Set the exception message callback

NET_DVR_SetExceptionCallBack_V30(0, NULL,g_ExceptionCallBack, NULL);

//---------------------------------------

// Start the preview and register the stream data callback

NET_DVR_CLIENTINFO ClientInfo;

ClientInfo.lChannel = 1; // device channel number

ClientInfo.hPlayWnd = hWnd; // null window handle: the device SDK only fetches the stream and does not decode it

ClientInfo.lLinkMode = 0; //Main Stream

ClientInfo.sMultiCastIP = NULL;

LONG lRealPlayHandle;

lRealPlayHandle = NET_DVR_RealPlay_V30(lUserID,&ClientInfo,fRealDataCallBack,NULL,FALSE);

if (lRealPlayHandle<0)

{

printf("pyd1---NET_DVR_RealPlay_V30 error %d\n", NET_DVR_GetLastError());

return HPR_ERROR;

}

cvNamedWindow("Motion",CV_WINDOW_NORMAL);//could resize the window

sleep(-1);

//---------------------------------------

// Stop the preview

cvDestroyWindow( "Motion" );

if(!NET_DVR_StopRealPlay(lRealPlayHandle))

{

printf("NET_DVR_StopRealPlay error! Error number: %d\n", NET_DVR_GetLastError());

return HPR_ERROR;

}

printf("close preview\n");

// Log out the user

NET_DVR_Logout(lUserID);

NET_DVR_Cleanup();

return 0;

}

2. CMakeLists.txt

cmake_minimum_required(VERSION 2.8.3)

project(motion_detection)

if(COMMAND cmake_policy)

cmake_policy(SET CMP0003 NEW)

endif(COMMAND cmake_policy)

find_package(catkin REQUIRED COMPONENTS

cv_bridge

image_transport

roscpp

sensor_msgs

)

find_package( OpenCV REQUIRED )

catkin_package(

INCLUDE_DIRS include

LIBRARIES motion_detection

CATKIN_DEPENDS cv_bridge image_transport roscpp sensor_msgs

# DEPENDS system_lib

)

include_directories(

${catkin_INCLUDE_DIRS}

include

)

add_executable(motion_detection src/motion_detection.cpp)

target_link_libraries(motion_detection

${catkin_LIBRARIES}

${OpenCV_LIBS}

libPlayCtrl.so

libAudioRender.so

libSuperRender.so

libhcnetsdk.so

)

3. Build

mkdir build

cd build

cmake ..

make

4. Run

./motion_detection