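import os
import re
from math import log

import matplotlib.pyplot as plt
import numpy as np
import xlrd  # xlrd 2.x reads only legacy .xls files; for .xlsx pin xlrd==1.2.0 or switch to openpyxl

xpath = "/Volumes/DISK1/微博总语料库/"  # root folder of the Weibo corpus
xtype = "xlsx"                          # extension of the files to read
start_name = 'yug'                      # only files whose name starts with this prefix are read

typedata = []   # matching file paths, filled by collect_xls
name = []       # every file path found by read_xls
raw_data = []
file_path = []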

def collect_xls(list_collect, type1):
    # Collect every file of type `type1` from a (possibly nested) list
    for each_element in list_collect:
        if isinstance(each_element, list):
            collect_xls(each_element, type1)
        elif (each_element.endswith(type1)
              and each_element not in typedata
              and each_element.split('/')[-1].startswith(start_name)):
            typedata.insert(0, each_element)
    return typedata

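collect_xls flattens an arbitrarily nested list recursively and accumulates matches in the module-level typedata, so every call adds to the same list. A minimal sketch of the same filter as a generator that keeps no global state; iter_matching and the sample paths are hypothetical, and os.path.basename stands in for split('/')[-1]:

```python
import os


def iter_matching(items, ext, prefix):
    """Yield paths from a possibly nested list that end with `ext` and whose
    file name starts with `prefix`, skipping duplicates (hypothetical helper)."""
    seen = set()

    def walk(seq):
        for item in seq:
            if isinstance(item, list):
                # Recurse into nested lists, as collect_xls does.
                yield from walk(item)
            elif (item.endswith(ext)
                  and os.path.basename(item).startswith(prefix)
                  and item not in seen):
                seen.add(item)
                yield item

    yield from walk(items)


paths = ["/data/yug_01.xlsx", ["/data/eng_01.xlsx", "/data/yug_02.xlsx"], "/data/yug_01.xlsx"]
print(list(iter_matching(paths, "xlsx", "yug")))
# ['/data/yug_01.xlsx', '/data/yug_02.xlsx']
```

Unlike collect_xls, which inserts at index 0, this keeps the input order; the sentence counts in main() do not depend on file order.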
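# Read the xls files in every folder under `path`
def read_xls(path, type2):
    # Walk the folder tree. os.walk() yields three values per folder: its path,
    # its subfolders and its files; joining file[0] with each name in file[2]
    # gives the full path of a file.
    for file in os.walk(path):
        for each_list in file[2]:
            file_path = os.path.join(file[0], each_list)
            name.insert(0, file_path)
    all_xls = collect_xls(name, type2)
    print('all:', all_xls)
    # Return every matching path; main() reads the data from each file
    return all_xls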

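read_xls gathers every file path under xpath into the global name list and then filters it with collect_xls. Assuming only the extension and the file-name prefix matter, both steps can be folded into a single os.walk pass. find_files is a hypothetical name, and the temporary directory exists only for the demonstration:

```python
import os
import tempfile


def find_files(root, ext, prefix):
    """Return sorted paths under `root` whose file name ends with `ext` and
    starts with `prefix` (hypothetical helper; keeps no global state)."""
    matches = []
    # os.walk yields one (dirpath, dirnames, filenames) triple per directory.
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            if filename.endswith(ext) and filename.startswith(prefix):
                # os.path.join adds a separator only where one is needed.
                matches.append(os.path.join(dirpath, filename))
    return sorted(matches)


# Usage: build a throwaway tree and search it.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "sub"))
    for rel in ("yug_a.xlsx", os.path.join("sub", "yug_b.xlsx"), "eng_c.xlsx"):
        open(os.path.join(root, rel), "w").close()
    found = sorted(os.path.relpath(p, root) for p in find_files(root, "xlsx", "yug"))

print(found)
```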
def contains_emoji(content):
    # True if any character of `content` lies in a common emoji block:
    # emoticons, misc symbols and pictographs, transport and map, flags area
    if not content:
        return False
    for ch in content:
        if ("\U0001F600" <= ch <= "\U0001F64F"
                or "\U0001F300" <= ch <= "\U0001F5FF"
                or "\U0001F680" <= ch <= "\U0001F6FF"
                or "\U0001F1E0" <= ch <= "\U0001F1FF"):
            return True
    return False

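Comparing a whole string with a code-point bound is lexicographic, so only its leading characters decide the result; testing the code point of each character is the reliable form of this check. A sketch with the same four ranges (has_emoji and EMOJI_RANGES are hypothetical names):

```python
# The four code-point ranges used by contains_emoji.
EMOJI_RANGES = [
    (0x1F600, 0x1F64F),  # emoticons
    (0x1F300, 0x1F5FF),  # miscellaneous symbols and pictographs
    (0x1F680, 0x1F6FF),  # transport and map symbols
    (0x1F1E0, 0x1F1FF),  # flag / regional-indicator area
]


def has_emoji(text):
    """True if any character of `text` falls in one of EMOJI_RANGES."""
    return any(lo <= ord(ch) <= hi for ch in text for lo, hi in EMOJI_RANGES)


# A whole-string comparison only looks at the leading characters:
print("\U0001F600" <= "ok\U0001F600" <= "\U0001F64F")  # False: 'o' sorts first
print(has_emoji("ok\U0001F600"))                       # True
print(has_emoji("hello"))                              # False
```

In main() the tokens come from `re.findall(r"[\w’]+", ...)`, and these pictographs are not word characters, so in that pipeline the emoji test acts mostly as a safeguard.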
zh_pattern = re.compile(u'[\u4e00-\u9fa5]+')

def contain_zh(word):
    # True if `word` contains at least one Chinese character (U+4E00 to U+9FA5)
    return zh_pattern.search(word) is not None

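contain_zh is one of the filters main() applies to each token before counting sentence lengths. The class `[\u4e00-\u9fa5]` covers most of the basic CJK Unified Ideographs block (U+4E00 to U+9FFF) but none of the extension blocks, while Arabic-script Uyghur words consist of `\w` characters and pass through. A minimal sketch of the filter-and-count step on plain strings, leaving out the long-token splitting and the Excel input; kept_tokens and length_histogram are hypothetical names:

```python
import re

TOKEN = re.compile(r"[\w’]+")        # tokenizer used in main()
ZH = re.compile("[\u4e00-\u9fa5]")   # same character class as zh_pattern
DIGIT = re.compile("[0-9]")          # tokens with ASCII digits are dropped


def kept_tokens(sentence):
    """Tokens with no Chinese character and no digit (hypothetical helper)."""
    return [t for t in TOKEN.findall(sentence)
            if not ZH.search(t) and not DIGIT.search(t)]


def length_histogram(sentences, limit=50):
    """counts[n] = number of sentences keeping exactly n tokens (0 < n < limit);
    sentences keeping `limit` or more tokens are returned separately."""
    counts = [0] * limit
    too_long = []
    for sentence in sentences:
        n = len(kept_tokens(sentence))
        if n >= limit:
            too_long.append(sentence)
        elif n > 0:
            counts[n] += 1
    return counts, too_long


print(kept_tokens("今天 weather 很好 2021 ok"))  # ['weather', 'ok']
counts, too_long = length_histogram(["hello world", "你好", "a b", "x " * 60])
print(counts[2], counts[0], len(too_long))       # 2 0 1
```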
def cutLongNameFun(s):
    '''
    Turn longWords into "long word": logs contain many long function names,
    such as WbxMeeting_VerifyMeetingIsExist. Splitting them into small words,
    "wbx meeting verify meeting is exist", makes them more meaningful.
    Capitalization is not used: main() lowercases the token first, and the
    split points come from words-by-frequency.txt, a word list ordered from
    most to least frequent.
    '''
    # Build a cost dictionary, assuming Zipf's law and cost = -math.log(probability).
    # To handle special tokens, add them directly to the word file.
    # The file is re-read on every call; hoist this block out if calls are frequent.
    with open("/Users/chenqiuchang/Downloads/words-by-frequency.txt", encoding="utf-8") as f:
        words = f.read().split()
    wordcost = dict((k, log((i+1)*log(len(words)))) for i, k in enumerate(words))
    maxword = max(len(x) for x in words)

    def infer_spaces(s):
        '''Uses dynamic programming to infer the location of spaces in a string without spaces.'''

        # Find the best match for the i first characters, assuming cost has
        # been built for the i-1 first characters.
        # Returns a pair (match_cost, match_length).
        def best_match(i):
            candidates = enumerate(reversed(cost[max(0, i-maxword):i]))
            return min((c + wordcost.get(s[i-k-1:i], 9e999), k+1) for k, c in candidates)

        # Build the cost array.
        cost = [0]
        for i in range(1, len(s)+1):
            c, k = best_match(i)
            cost.append(c)

        # Backtrack to recover the minimal-cost string.
        out = []
        i = len(s)
        while i > 0:
            c, k = best_match(i)
            assert c == cost[i]
            out.append(s[i-k:i])
            i -= k

        return " ".join(reversed(out))

    result = infer_spaces(s)
    print("==============", result)
    return result

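infer_spaces is a dynamic program: cost[i] is the cheapest split of the first i characters, each candidate last word s[i-k:i] adds its dictionary cost, and backtracking recovers the chosen words. The sketch below repeats that recurrence with an in-memory word list so it runs without words-by-frequency.txt; make_segmenter and the five-word vocabulary are made-up examples, and float("inf") replaces 9e999, which Python already evaluates to infinity:

```python
from math import log


def make_segmenter(words):
    """Build a segmenter from `words`, ordered from most to least frequent.

    The word at rank i (0-based) costs log((i + 1) * log(N)), the same
    Zipf-style cost as cutLongNameFun; N is the vocabulary size.
    """
    wordcost = {w: log((i + 1) * log(len(words))) for i, w in enumerate(words)}
    maxword = max(len(w) for w in words)

    def segment(s):
        # cost[i] = minimal total cost of splitting the prefix s[:i].
        cost = [0.0]

        def best_match(i):
            # Try every last word s[i-k-1:i] of length k + 1 <= maxword.
            candidates = enumerate(reversed(cost[max(0, i - maxword):i]))
            return min((c + wordcost.get(s[i - k - 1:i], float("inf")), k + 1)
                       for k, c in candidates)

        for i in range(1, len(s) + 1):
            cost.append(best_match(i)[0])

        # Backtrack from the end of the string to recover the chosen words.
        out, i = [], len(s)
        while i > 0:
            _, k = best_match(i)
            out.append(s[i - k:i])
            i -= k
        return " ".join(reversed(out))

    return segment


seg = make_segmenter(["is", "meeting", "verify", "exist", "wbx"])
print(seg("wbxmeetingverifymeetingisexist"))
# wbx meeting verify meeting is exist
```

With a full frequency list a string can usually be split in several ways; the lowest total cost wins, so frequent (low-rank) words are preferred.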
def main():

all_xls = read_xls(xpath, xtype)

print('all_xls:', all_xls)

print("Victory")

arr = np.zeros((50), dtype=np.int)

print('空数组:', arr)

all_len = 0

large_len_arr = []

zh_arr = []

eg_arr = []

after_split_arr = []

cnt = 0

# 获取文档对象

for name_i in all_xls:

print('name:', name_i)

data = xlrd.open_workbook(name_i)

table = data.sheets()[0]

cols = table.col_values(2)

# 输出段落编号及段落内容

for i in range(len(cols)):

cnt += 1

print("第" + str(i) + "段的内容是:" + cols[i])

res = re.findall(r"[\w’]+", cols[i])

print('分隔特殊符号:', res)

temp = []

for j in res:

if not contain_zh(j) and not contains_emoji(j) and not re.compile('[0-9]+').findall(j):

temp.insert(0, j)

elif len(j) >= 30 and not contain_zh(j) and not contains_emoji(j) and not re.compile('[0-9]+').findall(j):

temp.insert(0, cutLongNameFun(j.lower()).split(" "))

print('处理后的temp:', temp)

if len(temp) >= 50:

large_len_arr.insert(0, cols[i])

continue

if len(temp) > 0:

arr[len(temp)] += 1

print('总共查找:', cnt, '个句子')

#print('包含中文的单词有:', zh_arr)

#print('去掉中文之后的字符串:', eg_arr)

print('长度超过50的句子有:', len(large_len_arr), "句\n==>", large_len_arr)

for key in range(50):

print('arr=', arr[key])

name_list = [1] * 50

for i in range(50):

name_list[i] = i

num_list = arr

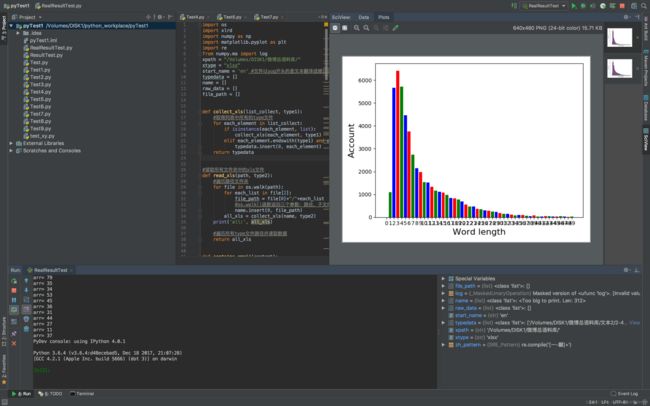

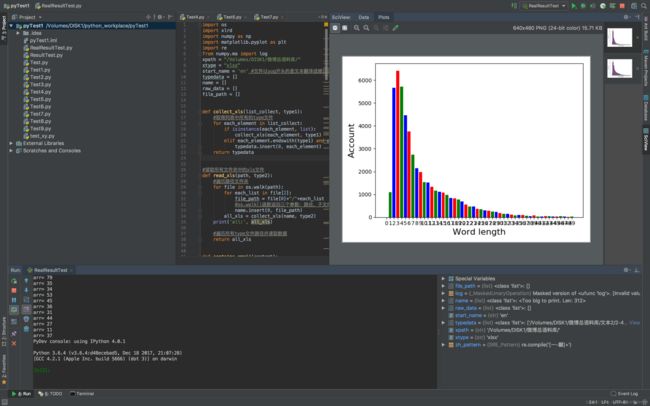

plt.bar(range(len(num_list)), num_list, color='rgb', tick_label=name_list)

#plt.grid(True)

plt.xlabel('Word length', fontsize=16)

plt.ylabel('Account', fontsize=16)

plt.show()

return

if __name__ == "__main__":

main()

维语的统计结果:

英语的统计结果: