Deploying and running a TensorFlow Lite model on the Jetson Nano

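On the device side we use C++ rather than Python as the development language. The overall flow is to cross-compile the executables on a PC and then deploy and run them directly on the Jetson Nano; compiling on the Jetson Nano itself is not considered here. Cross-compiling is also closer to the usual embedded software development workflow (even though both the Jetson Nano and the Raspberry Pi ship with a full OS on which you could download and build the code...).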

1. Cross-compile the tflite static library on the PC

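First, pull the TensorFlow source code from GitHub onto the PC, then use the cross-compilation toolchain inside the source tree to build the TensorFlow Lite static libraries.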

For the detailed steps, see the official guide: https://tensorflow.google.cn/lite/guide/build_arm64

# Install the cross-compilation toolchain

sudo apt-get install crossbuild-essential-arm64

# Download the TensorFlow source code

git clone https://github.com/tensorflow/tensorflow.git

# Enter the lite make directory

cd tensorflow/tensorflow/lite/tools/make

# Run the script that downloads the dependencies tflite needs

./download_dependencies.sh

# Build

./build_aarch64_lib.sh

This produces the TensorFlow Lite static libraries (benchmark-lib.a and libtensorflow-lite.a) in the tensorflow/lite/tools/make/gen/linux_aarch64/lib directory.
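
As a quick sanity check after the build, the sketch below confirms that both static libraries exist and are non-empty. The function name check_tflite_libs is a hypothetical helper introduced here for illustration; it is not part of TensorFlow.

```shell
# Hypothetical helper (not part of TensorFlow): verify that the two static
# libraries named in this step exist and are non-empty in the given directory.
check_tflite_libs() {
  tflite_lib_dir="$1"
  for tflite_lib in libtensorflow-lite.a benchmark-lib.a; do
    if [ ! -s "$tflite_lib_dir/$tflite_lib" ]; then
      echo "missing or empty: $tflite_lib_dir/$tflite_lib" >&2
      return 1
    fi
  done
  echo "ok: static libraries found in $tflite_lib_dir"
}

# Example, run from the TensorFlow source root:
# check_tflite_libs tensorflow/lite/tools/make/gen/linux_aarch64/lib
```

If the check fails, the build output is the first place to look, since a compile error in any source file prevents the archives from being created.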

2. Build the label_image example

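In the previous step we built the tflite static library locally. In this step we build the label_image example that ships with the TensorFlow source. Its source code is in the directory:

tensorflow/lite/examples/label_image

When the tflite static library was built earlier, the minimal example under tensorflow/lite/examples/ was compiled, but label_image was not, so we need to modify

tensorflow/lite/tools/make/Makefile as follows (the label_image build rules are added alongside the corresponding minimal ones):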

diff --git a/tensorflow/lite/tools/make/Makefile b/tensorflow/lite/tools/make/Makefile

index ad3832f996..c32091594f 100644

--- a/tensorflow/lite/tools/make/Makefile

+++ b/tensorflow/lite/tools/make/Makefile

@@ -97,6 +97,12 @@ BENCHMARK_PERF_OPTIONS_BINARY_NAME := benchmark_model_performance_options

MINIMAL_SRCS := \

tensorflow/lite/examples/minimal/minimal.cc

+# galaxyzwj

+LABEL_IMAGE_SRCS := \

+ tensorflow/lite/examples/label_image/label_image.cc \

+ tensorflow/lite/examples/label_image/bitmap_helpers.cc \

+ tensorflow/lite/tools/evaluation/utils.cc

+

# What sources we want to compile, must be kept in sync with the main Bazel

# build files.

@@ -161,7 +167,8 @@ $(wildcard tensorflow/lite/*/*/*/*/*/*tool.cc) \

$(wildcard tensorflow/lite/kernels/*test_main.cc) \

$(wildcard tensorflow/lite/kernels/*test_util*.cc) \

tensorflow/lite/tflite_with_xnnpack.cc \

-$(MINIMAL_SRCS)

+$(MINIMAL_SRCS) \

+$(LABEL_IMAGE_SRCS)

BUILD_WITH_MMAP ?= true

ifeq ($(BUILD_TYPE),micro)

@@ -257,7 +264,8 @@ ALL_SRCS := \

$(PROFILER_SUMMARIZER_SRCS) \

$(TF_LITE_CC_SRCS) \

$(BENCHMARK_LIB_SRCS) \

- $(CMD_LINE_TOOLS_SRCS)

+ $(CMD_LINE_TOOLS_SRCS) \

+ $(LABEL_IMAGE_SRCS)

# Where compiled objects are stored.

TARGET_OUT_DIR ?= $(TARGET)_$(TARGET_ARCH)

@@ -271,6 +279,7 @@ BENCHMARK_LIB := $(LIBDIR)$(BENCHMARK_LIB_NAME)

BENCHMARK_BINARY := $(BINDIR)$(BENCHMARK_BINARY_NAME)

BENCHMARK_PERF_OPTIONS_BINARY := $(BINDIR)$(BENCHMARK_PERF_OPTIONS_BINARY_NAME)

MINIMAL_BINARY := $(BINDIR)minimal

+LABEL_IMAGE_BINARY := $(BINDIR)label_image

CXX := $(CC_PREFIX)${TARGET_TOOLCHAIN_PREFIX}g++

CC := $(CC_PREFIX)${TARGET_TOOLCHAIN_PREFIX}gcc

@@ -279,6 +288,9 @@ AR := $(CC_PREFIX)${TARGET_TOOLCHAIN_PREFIX}ar

MINIMAL_OBJS := $(addprefix $(OBJDIR), \

$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(MINIMAL_SRCS))))

+LABEL_IMAGE_OBJS := $(addprefix $(OBJDIR), \

+$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(LABEL_IMAGE_SRCS))))

+

LIB_OBJS := $(addprefix $(OBJDIR), \

$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(patsubst %.cpp,%.o,$(TF_LITE_CC_SRCS)))))

@@ -309,7 +321,7 @@ $(OBJDIR)%.o: %.cpp

$(CXX) $(CXXFLAGS) $(INCLUDES) -c $< -o $@

# The target that's compiled if there's no command-line arguments.

-all: $(LIB_PATH) $(MINIMAL_BINARY) $(BENCHMARK_BINARY) $(BENCHMARK_PERF_OPTIONS_BINARY)

+all: $(LIB_PATH) $(MINIMAL_BINARY) $(BENCHMARK_BINARY) $(BENCHMARK_PERF_OPTIONS_BINARY) $(LABEL_IMAGE_BINARY)

# The target that's compiled for micro-controllers

micro: $(LIB_PATH)

@@ -353,6 +365,14 @@ $(BENCHMARK_PERF_OPTIONS_BINARY) : $(BENCHMARK_PERF_OPTIONS_OBJ) $(BENCHMARK_LIB

benchmark: $(BENCHMARK_BINARY) $(BENCHMARK_PERF_OPTIONS_BINARY)

+$(LABEL_IMAGE_BINARY): $(LABEL_IMAGE_OBJS) $(LIB_PATH)

+ @mkdir -p $(dir $@)

+ $(CXX) $(CXXFLAGS) $(INCLUDES) \

+ -o $(LABEL_IMAGE_BINARY) $(LABEL_IMAGE_OBJS) \

+ $(LIBFLAGS) $(LIB_PATH) $(LDFLAGS) $(LIBS)

+

+label_image: $(LABEL_IMAGE_BINARY)

+

libdir:

@echo $(LIBDIR)

After rerunning the build with the modified Makefile, the label_image executable is generated in tensorflow/lite/tools/make/gen/linux_aarch64/bin.

We scp this binary to the Jetson Nano, together with the file tensorflow/lite/examples/label_image/testdata/grace_hopper.bmp.
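
The copy step can be sketched roughly as follows. The user name, host name, and target directory are placeholders assumed for illustration (they do not come from the original setup), and copy_to_nano is a hypothetical wrapper around the standard scp command. Setting DRY_RUN=1 only prints the command instead of running it.

```shell
# Assumed placeholders: replace with your own Jetson Nano login and directory.
NANO_USER="${NANO_USER:-nano}"
NANO_HOST="${NANO_HOST:-jetson-nano.local}"
# Relative remote paths are resolved against the remote home directory.
# The directory must already exist on the Jetson Nano.
NANO_DIR="${NANO_DIR:-tflite_demo}"

# Hypothetical wrapper: copy the given local files to the Jetson Nano.
copy_to_nano() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Only print the command that would be executed.
    echo scp "$@" "$NANO_USER@$NANO_HOST:$NANO_DIR/"
  else
    scp "$@" "$NANO_USER@$NANO_HOST:$NANO_DIR/"
  fi
}

# Example, run from the TensorFlow source root on the PC:
# copy_to_nano \
#   tensorflow/lite/tools/make/gen/linux_aarch64/bin/label_image \
#   tensorflow/lite/examples/label_image/testdata/grace_hopper.bmp
```

The same helper can be reused later for the model and label files, since it accepts any number of local paths.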

3. Run the tflite model on the Jetson Nano

First, download the tflite model file from:

https://storage.googleapis.com/download.tensorflow.org/models/tflite/mobilenet_v1_224_android_quant_2017_11_08.zip

After unzipping, transfer the model file mobilenet_quant_v1_224.tflite and the label file labels.txt to the Jetson Nano.

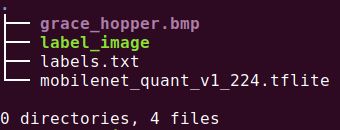

So the files we need are the following:

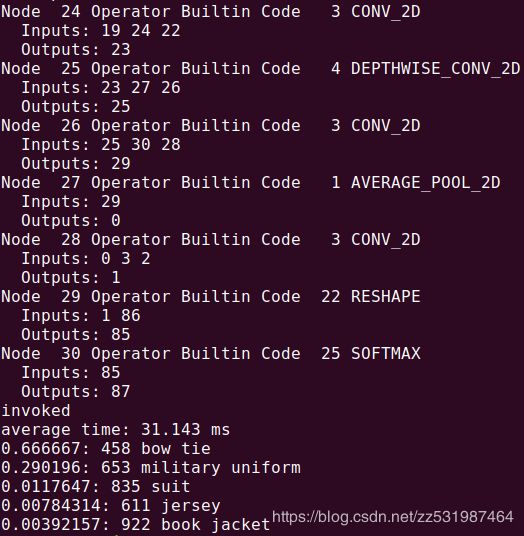
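
Before running the example, it can help to confirm on the Jetson Nano that all four files are in place and that the binary is executable. The sketch below assumes the files sit together in one directory; check_demo_files is a hypothetical helper name introduced here.

```shell
# Hypothetical helper: check that the files this walkthrough needs are present
# in one directory (default: the current directory).
check_demo_files() {
  demo_dir="${1:-.}"
  for demo_file in label_image mobilenet_quant_v1_224.tflite labels.txt grace_hopper.bmp; do
    if [ ! -f "$demo_dir/$demo_file" ]; then
      echo "missing: $demo_dir/$demo_file" >&2
      return 1
    fi
  done
  # Depending on how the binary was transferred, the executable bit can be lost.
  if [ ! -x "$demo_dir/label_image" ]; then
    echo "not executable: $demo_dir/label_image (try: chmod +x label_image)" >&2
    return 1
  fi
  echo "ok: all demo files present in $demo_dir"
}

# Example, run on the Jetson Nano in the directory holding the files:
# check_demo_files .
```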

Run the following command to recognize the objects in grace_hopper.bmp:

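./label_image -v 1 -m ./mobilenet_quant_v1_224.tflite -i ./grace_hopper.bmp -l ./labels.txt

The printed output is shown below. It includes the structure of the model being used, the objects that were finally recognized, and the time taken.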