Reading a file from HDFS and storing its records in HBase with the Java API

The file is read from HDFS, and its data looks like the following:

computer,xuzheng,54,52,86,91,42

computer,huangbo,85,42,96,38

english,zhaobenshan,54,52,86,91,42,85,75

english,liuyifei,85,41,75,21,85,96,14

algorithm,liuyifei,75,85,62,48,54,96,15

Meaning of the fields: course name, student name, then the scores from several exams.
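To make the record layout concrete, here is a minimal plain-Java sketch that splits one such line into its parts. The class name ScoreRecord and its fields are hypothetical and are not part of the job shown in this post; the sketch only assumes the comma-separated layout described above.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** One input line: course, student name, then any number of exam scores. */
final class ScoreRecord {
    final String course;
    final String name;
    final List<Integer> scores;

    private ScoreRecord(String course, String name, List<Integer> scores) {
        this.course = course;
        this.name = name;
        this.scores = Collections.unmodifiableList(scores);
    }

    /** Parses "course,name,score[,score...]" and rejects lines with fewer than three fields. */
    static ScoreRecord parse(String line) {
        String[] fields = line.trim().split(",");
        if (fields.length < 3) {
            throw new IllegalArgumentException("expected course,name,score[,score...]: " + line);
        }
        List<Integer> scores = new ArrayList<>();
        for (int i = 2; i < fields.length; i++) {
            scores.add(Integer.parseInt(fields[i].trim()));
        }
        return new ScoreRecord(fields[0].trim(), fields[1].trim(), scores);
    }

    public static void main(String[] args) {
        ScoreRecord r = ScoreRecord.parse("computer,huangbo,85,42,96,38");
        // prints: computer / huangbo / [85, 42, 96, 38]
        System.out.println(r.course + " / " + r.name + " / " + r.scores);
    }
}
```

Note that the number of scores varies from line to line, so the parser keeps them in a list instead of assuming a fixed column count.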

First, the main class extends Configured and implements Tool:

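import java.io.IOException;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.mapreduce.TableReducer;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class ReadFromHdfsToHbase extends Configured implements Tool{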

private static final String ZK_CONNECT="hadoop02:2199,hadoop03:2199";

private static final String TABLE_NAME="Student_Score";

public static void main(String[] args) throws Exception {

int run=ToolRunner.run(new ReadFromHdfsToHbase(), args);

System.exit(run);

}

@Override

public int run(String[] arg0) throws Exception {

// Build the configuration

Configuration conf=HBaseConfiguration.create();

/**

* Approach

* 1. Set up the connections to HBase and HDFS (starting with the ZooKeeper quorum)

* */

conf.set("hbase.zookeeper.quorum",ZK_CONNECT);

System.setProperty("HADOOP_USER_NAME", "root");

conf.addResource("config/core-site.xml");

conf.addResource("config/hdfs-site.xml");

conf.set("fs.defaultFS", "hdfs://myha01/");

/**

* Create a table with a column family named chengji

* Get the admin object

* */

HBaseAdmin haAdmin=new HBaseAdmin(conf);

HTableDescriptor htd=new HTableDescriptor(TABLE_NAME);

HColumnDescriptor family=new HColumnDescriptor("chengji".getBytes());

// Add the column family chengji to the table descriptor

htd.addFamily(family);

// Check whether the table already exists

if(haAdmin.tableExists(TABLE_NAME)){// if the table exists, drop it first

haAdmin.disableTable(TABLE_NAME);

haAdmin.deleteTable(TABLE_NAME);

}

haAdmin.createTable(htd);

haAdmin.close(); // release the admin connection once the table is created

//-----------

// Job setup

Job job=Job.getInstance(conf);

job.setJarByClass(ReadFromHdfsToHbase.class);

job.setMapperClass(ReadFromHdfsToHbase_Mapper.class);

// Set up the reducer, which writes to the HBase table

TableMapReduceUtil.initTableReducerJob(TABLE_NAME,

ReadFromHdfsToHbase_Reducer.class,

job,

null,

null,

null,

null,

false);

// Set the input format used to read the data

job.setInputFormatClass(TextInputFormat.class);

// Specify the output key/value types of the mapper and the reducer

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(NullWritable.class);

job.setOutputKeyClass(NullWritable.class);

job.setOutputValueClass(Put.class);

// Specify the input path

FileInputFormat.setInputPaths(job, new Path("/aaa/input1/"));

boolean waitForCompletion = job.waitForCompletion(true);

return waitForCompletion?0:1;

}

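}

For the configuration files, it is a good idea to create a source folder named config to hold hdfs-site.xml and core-site.xml.

Because the cluster is already set up for HA (high availability), the loaded configuration files let the client recognize that myha01 stands for two NameNodes, and the active one is found automatically to run the job.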
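As a rough sketch of what the loaded files have to provide for that resolution to work, the following plain-Java helper lists the client-side HA properties for a nameservice. The property names are the standard HDFS HA keys; the class name HaClientSettings, the NameNode IDs nn1 and nn2, and the host addresses in the usage example are assumptions, since the post does not show the actual hdfs-site.xml.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Collects the client-side settings an HA nameservice normally needs (sketch only). */
final class HaClientSettings {

    /** nn1/nn2 are hypothetical NameNode IDs; the addresses are supplied by the caller. */
    static Map<String, String> forNameservice(String ns, String nn1Address, String nn2Address) {
        Map<String, String> p = new LinkedHashMap<>();
        // Logical URI: the client talks to the nameservice, not to a single host
        p.put("fs.defaultFS", "hdfs://" + ns + "/");
        p.put("dfs.nameservices", ns);
        // The two NameNodes that stand behind the logical name
        p.put("dfs.ha.namenodes." + ns, "nn1,nn2");
        p.put("dfs.namenode.rpc-address." + ns + ".nn1", nn1Address);
        p.put("dfs.namenode.rpc-address." + ns + ".nn2", nn2Address);
        // Class the client uses to find out which NameNode is currently active
        p.put("dfs.client.failover.proxy.provider." + ns,
                "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider");
        return p;
    }

    public static void main(String[] args) {
        // Host names and port below are placeholders, not values from the post
        Map<String, String> settings =
                forNameservice("myha01", "namenode-a.example:8020", "namenode-b.example:8020");
        for (Map.Entry<String, String> e : settings.entrySet()) {
            System.out.println(e.getKey() + " = " + e.getValue());
        }
    }
}
```

In the job above these values come from config/core-site.xml and config/hdfs-site.xml via conf.addResource, so nothing like this helper is needed there; it only shows which keys make the name myha01 resolvable.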

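public static class ReadFromHdfsToHbase_Mapper extends Mapper<LongWritable, Text, Text, NullWritable>{

// Emit the whole input line as the key; the reducer does the parsing

@Override

protected void map(LongWritable key, Text value, Context context)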

throws IOException, InterruptedException {

context.write(value, NullWritable.get());

}

}
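The mapper above passes each input line through unchanged as the key, with a NullWritable value. A consequence worth spelling out is that the shuffle sorts those keys and calls reduce() once per distinct line, so identical lines collapse into a single reduce call. The following plain-Java simulation illustrates that grouping; it is not Hadoop code, ShuffleSketch and its methods are hypothetical names, and String ordering stands in for the byte ordering of Text (the two agree for ASCII data like this).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeMap;

/** Plain-Java stand-in for how map output keys reach the reducer. */
final class ShuffleSketch {

    /** Groups keys like the shuffle does: sorted, one entry per distinct key, with its value count. */
    static TreeMap<String, Integer> groupByKey(List<String> mapOutputKeys) {
        TreeMap<String, Integer> grouped = new TreeMap<>();
        for (String key : mapOutputKeys) {
            grouped.merge(key, 1, Integer::sum);
        }
        return grouped;
    }

    /** The keys reduce() would be called with, in call order. */
    static List<String> reduceKeys(List<String> mapOutputKeys) {
        return new ArrayList<>(groupByKey(mapOutputKeys).keySet());
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "english,liuyifei,85,41,75,21,85,96,14",
                "computer,xuzheng,54,52,86,91,42",
                "english,liuyifei,85,41,75,21,85,96,14"); // duplicate line
        // Two reduce calls: the computer line first, then the english line once
        for (String key : reduceKeys(lines)) {
            System.out.println("reduce(" + key + ")");
        }
    }
}
```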

/**

* Example line: english,huanglei,85,75,85,99,66,88,75,91

* rowkey: english---huanglei, family: chengji, qualifiers: name, score

* */

public static class ReadFromHdfsToHbase_Reducer extends TableReducer<Text, NullWritable, NullWritable>{

@Override

protected void reduce(Text key, Iterable<NullWritable> value,Context context)

throws IOException, InterruptedException {

String line=key.toString();

String[] strs=line.split(",");

String rowkey=strs[0]+"---"+strs[1];

String family="chengji";

String qualify1=strs[1];

String qualify2=strs[2];

Put put=new Put(rowkey.getBytes());// create the Put for this row key

put.addColumn(family.getBytes(), "name".getBytes(), qualify1.getBytes());

put.addColumn(family.getBytes(), "score".getBytes(), qualify2.getBytes());

/**

* Output format

* english---huanglei name:huanglei

* english---huanglei score:85

* */

ImmutableBytesWritable rowkeystr=new ImmutableBytesWritable(rowkey.getBytes());

context.write(NullWritable.get(), put);

}

}
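Note that the reducer above stores only strs[2], that is, the first score on each line. If every score should be kept, one possible variation is to give each exam its own qualifier. The sketch below works out the row key and the qualifier-to-value pairs in plain Java; the class name ScoreCells and the qualifier names score1, score2, ... are hypothetical, not something the original job uses.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Derives the row key and the cells for one input line (sketch, no HBase dependency). */
final class ScoreCells {

    /** Same row key scheme as the reducer: course---name. */
    static String rowKey(String line) {
        String[] strs = line.split(",");
        return strs[0] + "---" + strs[1];
    }

    /** Qualifier -> value for the chengji family: name, then score1..scoreN in input order. */
    static Map<String, String> cells(String line) {
        String[] strs = line.split(",");
        Map<String, String> cells = new LinkedHashMap<>();
        cells.put("name", strs[1]);
        for (int i = 2; i < strs.length; i++) {
            cells.put("score" + (i - 1), strs[i]);
        }
        return cells;
    }

    public static void main(String[] args) {
        String line = "computer,huangbo,85,42,96,38";
        // prints: computer---huangbo {name=huangbo, score1=85, score2=42, score3=96, score4=38}
        System.out.println(rowKey(line) + " " + cells(line));
    }
}
```

Inside the reducer, each entry of that map would turn into one put.addColumn(family.getBytes(), qualifier.getBytes(), value.getBytes()) call on the same Put.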

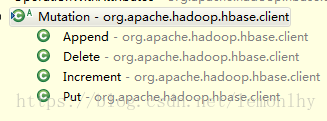

Mutation has the following four implementation classes, which correspond to HBase write operations such as adding and deleting data