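Big Data (Fully Distributed) Configuration in Detail

Linux big data

Hadoop fully distributed

Fully distributed

Hadoop's main strength is distributed cluster computing, so production environments are built in the last of its deployment modes: fully distributed mode.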

Technical preparation

System planning

Build

Test

Go live

System planning

| Host | Role | Software |
|---|---|---|
| 192.168.6.10 master (nn01) | NameNode SecondaryNameNode ResourceManager | HDFS YARN |
| 192.168.6.11 node1 | DataNode NodeManager | HDFS YARN |
| 192.168.6.12 node2 | DataNode NodeManager | HDFS YARN |
| 192.168.6.13 node3 | DataNode NodeManager | HDFS YARN |

[root@rootroom9pc01 ~]# cd /etc/libvirt/qemu/

[root@rootroom9pc01 qemu]# sed 's/demo/node10/' demo.xml > /etc/libvirt/qemu/node10.xml

[root@rootroom9pc01 qemu]# sed 's/demo/node11/' demo.xml > /etc/libvirt/qemu/node11.xml

[root@rootroom9pc01 qemu]# sed 's/demo/node12/' demo.xml > /etc/libvirt/qemu/node12.xml

[root@rootroom9pc01 qemu]# sed 's/demo/node13/' demo.xml > /etc/libvirt/qemu/node13.xml

[root@rootroom9pc01 qemu]# cd /var/lib/libvirt/images/

[root@rootroom9pc01 images]# qemu-img create -b node.qcow2 -f qcow2 node10.img 20G

[root@rootroom9pc01 images]# qemu-img create -b node.qcow2 -f qcow2 node11.img 20G

[root@rootroom9pc01 images]# qemu-img create -b node.qcow2 -f qcow2 node12.img 20G

[root@rootroom9pc01 images]# qemu-img create -b node.qcow2 -f qcow2 node13.img 20G

[root@rootroom9pc01 images]# cd /etc/libvirt/qemu/

[root@rootroom9pc01 qemu]# virsh define node10.xml

[root@rootroom9pc01 qemu]# virsh define node11.xml

[root@rootroom9pc01 qemu]# virsh define node12.xml

[root@rootroom9pc01 qemu]# virsh define node13.xml
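
The four `sed` / `qemu-img` / `virsh define` sequences above differ only in the node name, so they can be collapsed into a loop. The following is a minimal sketch under that assumption; `DRY_RUN`, `run`, and `provision_node` are illustrative names introduced here, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
# Sketch: print (or run) the per-VM provisioning commands from the transcript.
# DRY_RUN, run and provision_node are hypothetical helper names.
DRY_RUN=${DRY_RUN:-1}   # 1 = only print the commands, 0 = execute them

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$1"
    else
        ( eval "$1" )   # subshell so that cd does not leak into the caller
    fi
}

provision_node() {
    local name=$1
    # Derive the domain XML from the demo template.
    run "cd /etc/libvirt/qemu && sed 's/demo/${name}/' demo.xml > ${name}.xml"
    # Create a 20G copy-on-write disk backed by node.qcow2.
    run "cd /var/lib/libvirt/images && qemu-img create -b node.qcow2 -f qcow2 ${name}.img 20G"
    # Register the new domain with libvirt.
    run "cd /etc/libvirt/qemu && virsh define ${name}.xml"
}

for n in 10 11 12 13; do
    provision_node "node${n}"
done
```

Reviewing the printed commands before setting `DRY_RUN=0` is a cheap way to catch a wrong template or image name.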

[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

ONBOOT=yes

IPV6INIT=no

BOOTPROTO=static

TYPE=Ethernet

IPADDR="192.168.6.10"

NETMASK="255.255.255.0"

GATEWAY="192.168.6.254"

[root@localhost ~]# halt -p

[root@localhost ~]# ifconfig | head -3

eth0: flags=4163

inet 192.168.6.10 netmask 255.255.255.0 broadcast 192.168.6.255

[root@localhost ~]# hostnamectl set-hostname nn01

[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

ONBOOT=yes

IPV6INIT=no

BOOTPROTO=static

TYPE=Ethernet

IPADDR="192.168.6.11"

NETMASK="255.255.255.0"

GATEWAY="192.168.6.254"

[root@localhost ~]# halt -p

[root@localhost ~]# hostnamectl set-hostname node1

[root@node1 ~]# ifconfig | head -3

eth0: flags=4163

inet 192.168.6.11 netmask 255.255.255.0 broadcast 192.168.6.255

[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

ONBOOT=yes

IPV6INIT=no

BOOTPROTO=static

TYPE=Ethernet

IPADDR="192.168.6.12"

NETMASK="255.255.255.0"

GATEWAY="192.168.6.254"

[root@localhost ~]# halt -p

[root@localhost ~]# hostnamectl set-hostname node2

[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

ONBOOT=yes

IPV6INIT=no

BOOTPROTO=static

TYPE=Ethernet

IPADDR="192.168.6.13"

NETMASK="255.255.255.0"

GATEWAY="192.168.6.254"

[root@localhost ~]# hostnamectl set-hostname node3

[root@localhost ~]# halt -p
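
The four `ifcfg-eth0` files above are identical except for `IPADDR`. As a sketch, a small function can emit the file for any address; `gen_ifcfg` is a hypothetical helper name, and the netmask and gateway are simply the values used in the transcript.

```shell
# Sketch: emit the ifcfg-eth0 contents for a given static IP address.
# gen_ifcfg is an illustrative name; the fixed values come from the notes above.
gen_ifcfg() {
    local ip=$1
    cat <<EOF
DEVICE=eth0
ONBOOT=yes
IPV6INIT=no
BOOTPROTO=static
TYPE=Ethernet
IPADDR="${ip}"
NETMASK="255.255.255.0"
GATEWAY="192.168.6.254"
EOF
}

# Example: print the file for node1. On the VM itself the output would be
# redirected to /etc/sysconfig/network-scripts/ifcfg-eth0.
gen_ifcfg 192.168.6.11
```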

[root@nn01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.6.10 nn01 # namenode, secondarynamenode

192.168.6.11 node1 # datanode

192.168.6.12 node2 # datanode

192.168.6.13 node3 # datanode

[root@nn01 ~]# cat /etc/yum.repos.d/local.repo

[local_source]

name=CentOS Source

baseurl=ftp://192.168.6.254/centos7

enabled=1

gpgcheck=1

[root@nn01 hadoop]# pwd

/usr/local/hadoop/etc/hadoop

[root@nn01 ~]# vim /etc/ssh/ssh_config

Host *

GSSAPIAuthentication yes

StrictHostKeyChecking no

[root@node1 xx]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar wordcount oo xx

[root@node1 hadoop]# file share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar

share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar: Zip archive data, at least v1.0 to extract

[root@node1 hadoop]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar

[root@node1 hadoop]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar wordcount

Usage: wordcount <in> [<in>...] <out>

/local/hadoop/xx already exists
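
The "already exists" message appears because a MapReduce job refuses to write into an output directory that is already there. A minimal sketch of a wrapper that checks this before submitting the job follows; `run_wordcount` is a hypothetical helper, and it assumes local-filesystem paths relative to the Hadoop directory, as in the transcript above.

```shell
# Sketch: refuse to start wordcount when the output directory already exists.
# run_wordcount is an illustrative name; paths are relative to HADOOP_HOME.
HADOOP_HOME=${HADOOP_HOME:-/usr/local/hadoop}
EXAMPLES_JAR=share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar

run_wordcount() (
    # The function body is a subshell, so cd and exit stay local to it.
    cd "$HADOOP_HOME" || exit 1
    if [ -e "$2" ]; then
        echo "output directory $2 already exists; remove it or choose another name" >&2
        exit 1
    fi
    bin/hadoop jar "$EXAMPLES_JAR" wordcount "$1" "$2"
)

# Usage (input directory oo, new output directory xx2):
#   run_wordcount oo xx2
```

For paths on HDFS rather than the local disk, the same check could be done with `hdfs dfs -test -e` instead of `[ -e ... ]`.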

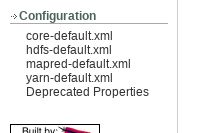

[root@nn01 hadoop]# cat core-site.xml

[root@nn01 hadoop]# cat hdfs-site.xml
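
The contents of the two files are not shown above. As a hedged illustration only, the sketch below writes a minimal `core-site.xml` and `hdfs-site.xml` of the kind commonly used for a layout like this one. Every value is an assumption rather than the author's setting: the port 9000, the `/var/hadoop` data directory, the replication factor of 2, and the helper names. `CONF_DIR` defaults to a temporary directory so that a real configuration is not overwritten.

```shell
# Sketch: write sample core-site.xml and hdfs-site.xml into CONF_DIR.
# All property values below are assumptions, not taken from the original notes.
CONF_DIR=${CONF_DIR:-$(mktemp -d)}

write_core_site() {
    cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://nn01:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/var/hadoop</value>
  </property>
</configuration>
EOF
}

write_hdfs_site() {
    cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>nn01:50070</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>nn01:50090</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
</configuration>
EOF
}

write_core_site
write_hdfs_site
echo "wrote sample files to $CONF_DIR"
```

To apply such files for real, `CONF_DIR` would be set to `/usr/local/hadoop/etc/hadoop` and the directory synced to every node.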

[root@nn01 hadoop]# cat hadoop-env.sh

export JAVA_HOME="/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.131-11.b12.el7.x86_64/jre/"

export HADOOP_CONF_DIR="/usr/local/hadoop/etc/hadoop"

[root@nn01 hadoop]# cat slaves

node1

node2

node3

[root@node1 ~]# yum -y install java-1.8.0-openjdk-devel

[root@node1 ~]# yum -y install rsync-3.0.9-18.el7.x86_64

[root@node2 ~]# yum -y install rsync-3.0.9-18.el7.x86_64

[root@node3 ~]# yum -y install rsync-3.0.9-18.el7.x86_64

[root@rootroom9pc01 ~]# scp -r '/root/桌面/hadoop-2.7.6.tar.gz' 192.168.6.10:/root

[root@nn01 ~]# tar zxf hadoop-2.7.6.tar.gz

[root@nn01 ~]# mv hadoop-2.7.6 /usr/local/hadoop

[root@nn01 ~]# yum -y install java-1.8.0-openjdk-devel

[root@nn01 .ssh]# ssh-keygen -b 2048 -t rsa -N '' -f key

[root@nn01 .ssh]# ssh-copy-id -i ./key.pub [email protected]

[root@nn01 .ssh]# mv key /root/.ssh/id_rsa
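
`start-dfs.sh` logs in over SSH to every host it starts a daemon on, including the NameNode host itself, so the public key needs to reach all four machines. The sketch below loops over them; the host list, the `PUBKEY` path, and the `copy_key` helper are assumptions based on the hostnames used in these notes. By default it only prints the commands.

```shell
# Sketch: install the public key on every cluster host.
# copy_key, DRY_RUN and PUBKEY are illustrative names.
DRY_RUN=${DRY_RUN:-1}                     # 1 = print only, 0 = execute
PUBKEY=${PUBKEY:-/root/.ssh/key.pub}      # public half of the key generated above

copy_key() {
    local host=$1
    if [ "$DRY_RUN" = 1 ]; then
        echo "ssh-copy-id -i $PUBKEY root@$host"
    else
        ssh-copy-id -i "$PUBKEY" "root@$host"
    fi
}

for h in nn01 node1 node2 node3; do
    copy_key "$h"
done
```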

[root@nn01 hadoop]# yum -y install rsync-3.0.9-18.el7.x86_64

[root@nn01 hadoop]# for i in node{1..3};do

> rsync -aSH --delete /usr/local/hadoop ${i}:/usr/local/ -e 'ssh' &

> done

[root@node1 ~]# ls /usr/local/hadoop

bin etc include lib libexec LICENSE.txt NOTICE.txt oo README.txt sbin share xx xx1

[root@nn01 bin]# chmod 755 rrr

[root@nn01 bin]# ls

container-executor hdfs mapred.cmd test-container-executor

hadoop hdfs.cmd rcc yarn

hadoop.cmd mapred rrr yarn.cmd

[root@nn01 bin]# ./rrr node{1..3}

[root@nn01 bin]# cat rrr

#!/bin/bash

for i in "$@";do

rsync -aSH --delete /usr/local/hadoop ${i}:/usr/local/ -e 'ssh' &

done

wait

./bin/hdfs namenode -format

./sbin/start-dfs.sh

ssh node1 jps

./bin/hdfs dfsadmin -report

Live datanodes(3)
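
The live DataNode count in the report can also be checked by a script instead of by eye. The following is a minimal sketch that reads the report from standard input; `live_datanodes` and `check_cluster` are hypothetical helper names, and the pattern accepts the line with or without a space before the parenthesis.

```shell
# Sketch: extract the live DataNode count from `hdfs dfsadmin -report` output.
# live_datanodes and check_cluster are illustrative names.
live_datanodes() {
    # Print the number inside the parentheses of the "Live datanodes" line.
    sed -n 's/^Live datanodes *(\([0-9][0-9]*\)).*/\1/p' | head -n 1
}

check_cluster() {
    # Succeed only when the reported count equals the expected count ($1).
    local expected=$1 live
    live=$(live_datanodes)
    [ "${live:-0}" -eq "$expected" ]
}

# Usage on the NameNode, from /usr/local/hadoop:
#   ./bin/hdfs dfsadmin -report | check_cluster 3 && echo "all DataNodes are up"
```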

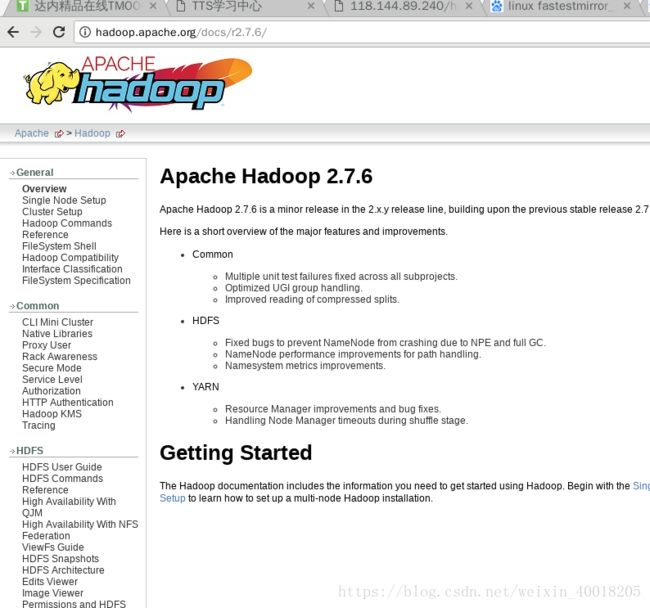

Official site and documentation: http://hadoop.apache.org/

Default value of hadoop.tmp.dir: /tmp/hadoop-${user.name}