7. What exactly does switch_to do?

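Lecture 6 (see: fork + execve: The Birth of a Process) covered how a process comes into being. But an operating system runs many processes: how does it switch between them, and what is going on under the hood? Let's go back to the source code and read it closely.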

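Operating system textbooks describe many process scheduling algorithms. From an implementation point of view, each of them merely selects a new process from the run queue; they differ only in the policy used to make that selection.

For understanding how an operating system actually works, the timing of scheduling and the mechanism of process switching matter more.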

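When scheduling happens:

1. During interrupt handling (including timer interrupts, I/O interrupts, system calls, and exceptions), schedule() is either called directly or called on return to user mode if the need_resched flag is set;

2. A kernel thread can call schedule() directly to switch processes, and it can also be scheduled during interrupt handling. In other words, a kernel thread is a special kind of process that can be scheduled both actively and passively;

3. A user-mode process cannot schedule itself actively. It can only be scheduled at some point after trapping into kernel mode, that is, during interrupt handling.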
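To make the passive path in items 1 and 3 concrete, here is a minimal user-space sketch in C. It is not kernel code: toy_task, toy_timer_tick(), toy_return_to_user() and toy_schedule() are hypothetical names invented for illustration, and the time-slice counter is an assumption. It only shows the idea that the interrupt handler marks the task with need_resched, while the actual call into the scheduler happens later, on the way back to user mode.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for a task; not the kernel's task_struct. */
struct toy_task {
    int pid;
    bool need_resched;    /* set when the task should give up the CPU */
    int times_scheduled;  /* how often toy_schedule() ran for this task */
};

/* Stand-in for schedule(): here it only clears the flag and counts the call. */
void toy_schedule(struct toy_task *t)
{
    assert(t != NULL);
    t->need_resched = false;
    t->times_scheduled++;
}

/* Timer interrupt handler: it does not switch tasks, it only marks the task
 * once the (assumed) time slice is used up. */
void toy_timer_tick(struct toy_task *t, int *slice_left)
{
    if (--(*slice_left) <= 0)
        t->need_resched = true;
}

/* Return path from an interrupt or system call to user mode:
 * this is the passive scheduling point. */
void toy_return_to_user(struct toy_task *t)
{
    if (t->need_resched)
        toy_schedule(t);
}
```

With a slice of 2, the first tick followed by toy_return_to_user() does nothing; after the second tick the flag is set and the return path calls toy_schedule() exactly once.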

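Process switching

To control process execution, the kernel must be able to suspend the process currently running on the CPU and resume a previously suspended process. This is called a process switch, task switch, or context switch.

Suspending the process running on the CPU is different from saving state on an interrupt: before and after an interrupt the CPU stays in the same process context and merely moves from user mode to kernel mode.

The process context contains all the information the process needs in order to run:

1. User address space: program code, data, user stack, and so on

2. Control information: process descriptor, kernel stack, and so on

3. Hardware context (note that an interrupt also has to save the hardware context, only by a different method)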
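A user-space analogy may help here. The sketch below uses the ucontext API (getcontext, makecontext, swapcontext), which saves one set of registers plus stack pointer and restores another, to bounce between two tasks that each own a separate stack. It is only an analogy and assumes a glibc-based Linux system: the kernel does not use ucontext, and names such as run_demo(), task_body() and trace are made up for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <ucontext.h>

#define TOY_STACK_SIZE (64 * 1024)

static ucontext_t main_ctx, task_ctx[2];
static char stacks[2][TOY_STACK_SIZE];  /* one private stack per task */
static char trace[16];                  /* records the order of execution */
static size_t trace_len;

static void record(char c)
{
    if (trace_len < sizeof(trace) - 1)
        trace[trace_len++] = c;
}

static void task_body(int id)
{
    assert(id == 0 || id == 1);
    record(id == 0 ? 'A' : 'B');
    /* Suspend this task (save registers and stack pointer into its own
     * slot) and resume the other one. */
    swapcontext(&task_ctx[id], &task_ctx[1 - id]);
    /* Execution continues here when the task is switched back in. */
    record(id == 0 ? 'a' : 'b');
}

const char *run_demo(void)
{
    trace_len = 0;
    for (int id = 0; id < 2; id++) {
        getcontext(&task_ctx[id]);
        task_ctx[id].uc_stack.ss_sp = stacks[id];
        task_ctx[id].uc_stack.ss_size = TOY_STACK_SIZE;
        task_ctx[id].uc_link = &main_ctx;  /* where to go when the task returns */
        makecontext(&task_ctx[id], (void (*)(void))task_body, 1, id);
    }
    swapcontext(&main_ctx, &task_ctx[0]);  /* task 0 runs, hands over to task 1, finishes */
    swapcontext(&main_ctx, &task_ctx[1]);  /* resume the still suspended task 1 */
    trace[trace_len] = '\0';
    return trace;
}
```

Calling run_demo() yields the trace "ABab": task 0 starts, is suspended in the middle of task_body(), and later resumes exactly where it left off, on its own stack.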

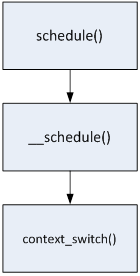

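The schedule() function selects a new process to run and calls context_switch() to switch contexts. context_switch() is an inline function that in turn invokes the switch_to macro to perform the critical part of the switch.

    next = pick_next_task(rq, prev); /* all scheduling policies are encapsulated in this function */
    context_switch(rq, prev, next);  /* process context switch */

switch_to works with the two task pointers prev and next: prev points to the current process and next points to the process being scheduled in.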

Starting from schedule(), let's walk step by step through what the kernel does to switch processes. schedule() is located in linux-3.18.6\kernel\sched\core.c.

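    asmlinkage __visible void __sched schedule(void)
    {
        struct task_struct *tsk = current;

        sched_submit_work(tsk);
        __schedule();
    }

__visible indicates that schedule() can be called from anywhere in the kernel (it maps to GCC's externally_visible attribute, which keeps the symbol from being hidden or discarded). schedule() ends by calling __schedule(), so let's step into it. __schedule() is also located in linux-3.18.6\kernel\sched\core.c.

After entering __schedule(), the call stack is: schedule() -> __schedule().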

    static void __sched __schedule(void)
    {
        struct task_struct *prev, *next;
        unsigned long *switch_count;
        struct rq *rq;
        int cpu;

    need_resched:
        preempt_disable();
        cpu = smp_processor_id();
        rq = cpu_rq(cpu);
        rcu_note_context_switch(cpu);
        prev = rq->curr;

        schedule_debug(prev);

        if (sched_feat(HRTICK))
            hrtick_clear(rq);

        /*
         * Make sure that signal_pending_state()->signal_pending() below
         * can't be reordered with __set_current_state(TASK_INTERRUPTIBLE)
         * done by the caller to avoid the race with signal_wake_up().
         */
        smp_mb__before_spinlock();
        raw_spin_lock_irq(&rq->lock);

        switch_count = &prev->nivcsw;
        if (prev->state && !(preempt_count() & PREEMPT_ACTIVE)) {
            if (unlikely(signal_pending_state(prev->state, prev))) {
                prev->state = TASK_RUNNING;
            } else {
                deactivate_task(rq, prev, DEQUEUE_SLEEP);
                prev->on_rq = 0;

                /*
                 * If a worker went to sleep, notify and ask workqueue
                 * whether it wants to wake up a task to maintain
                 * concurrency.
                 */
                if (prev->flags & PF_WQ_WORKER) {
                    struct task_struct *to_wakeup;

                    to_wakeup = wq_worker_sleeping(prev, cpu);
                    if (to_wakeup)
                        try_to_wake_up_local(to_wakeup);
                }
            }
            switch_count = &prev->nvcsw;
        }

        if (task_on_rq_queued(prev) || rq->skip_clock_update < 0)
            update_rq_clock(rq);

        next = pick_next_task(rq, prev);
        clear_tsk_need_resched(prev);
        clear_preempt_need_resched();
        rq->skip_clock_update = 0;

        if (likely(prev != next)) {
            rq->nr_switches++;
            rq->curr = next;
            ++*switch_count;

            context_switch(rq, prev, next); /* unlocks the rq */
            /*
             * The context switch have flipped the stack from under us
             * and restored the local variables which were saved when
             * this task called schedule() in the past. prev == current
             * is still correct, but it can be moved to another cpu/rq.
             */
            cpu = smp_processor_id();
            rq = cpu_rq(cpu);
        } else
            raw_spin_unlock_irq(&rq->lock);

        post_schedule(rq);

        sched_preempt_enable_no_resched();
        if (need_resched())
            goto need_resched;
    }

The key line in __schedule() is

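    next = pick_next_task(rq, prev);

pick_next_task() encapsulates Linux's scheduling policy. We won't go into which policy Linux uses here (interested readers can dig into pick_next_task() themselves); what matters is that Linux has now chosen the next process to run.

Once next has been obtained, the next job is to switch process contexts, which is done mainly by

    context_switch(rq, prev, next);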
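The division of labor described here, a policy function that picks next and a mechanism that switches to it, can be sketched in plain C. This is a toy model, not the kernel's implementation: toy_rq, toy_task and the round-robin policy in toy_pick_next_task() are assumptions made for illustration (the real pick_next_task() is considerably more involved), and the context switch itself is reduced to updating rq->curr.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_NR_TASKS 3

/* Hypothetical, minimal task and run queue. */
struct toy_task {
    int pid;
    int runnable;
};

struct toy_rq {
    struct toy_task *tasks[TOY_NR_TASKS];
    size_t curr;                /* index of the task currently running */
    unsigned long nr_switches;  /* counts actual switches, like rq->nr_switches */
};

/* Policy: round-robin. Returns the index of the first runnable task after
 * curr, wrapping around; falls back to curr if nothing else is runnable. */
size_t toy_pick_next_task(const struct toy_rq *rq)
{
    for (size_t step = 1; step <= TOY_NR_TASKS; step++) {
        size_t i = (rq->curr + step) % TOY_NR_TASKS;
        if (rq->tasks[i]->runnable)
            return i;
    }
    return rq->curr;
}

/* Mechanism: switch only if the policy picked a different task,
 * mirroring the "if (likely(prev != next))" test in __schedule(). */
void toy_schedule(struct toy_rq *rq)
{
    assert(rq != NULL);
    size_t prev = rq->curr;
    size_t next = toy_pick_next_task(rq);

    if (prev != next) {
        rq->nr_switches++;
        rq->curr = next;  /* the kernel would call context_switch() here */
    }
}
```

Swapping in a different policy only means replacing toy_pick_next_task(); toy_schedule() stays the same, which is the point the lecture makes about scheduling algorithms.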

Let's step into context_switch(rq, prev, next) and look at the context switch in detail. context_switch() is located in linux-3.18.6\kernel\sched\core.c.

Note that after entering context_switch(), the call stack is: schedule() -> __schedule() -> context_switch().

    static inline void
    context_switch(struct rq *rq, struct task_struct *prev,
                   struct task_struct *next)
    {
        struct mm_struct *mm, *oldmm;

        prepare_task_switch(rq, prev, next);

        mm = next->mm;
        oldmm = prev->active_mm;
        /*
         * For paravirt, this is coupled with an exit in switch_to to
         * combine the page table reload and the switch backend into
         * one hypercall.
         */
        arch_start_context_switch(prev);

        if (!mm) {
            next->active_mm = oldmm;
            atomic_inc(&oldmm->mm_count);
            enter_lazy_tlb(oldmm, next);
        } else
            switch_mm(oldmm, mm, next);

        if (!prev->mm) {
            prev->active_mm = NULL;
            rq->prev_mm = oldmm;
        }
        /*
         * Since the runqueue lock will be released by the next
         * task (which is an invalid locking op but in the case
         * of the scheduler it's an obvious special-case), so we
         * do an early lockdep release here:
         */
        spin_release(&rq->lock.dep_map, 1, _THIS_IP_);

        context_tracking_task_switch(prev, next);
        /* Here we just switch the register state and the stack. */
        switch_to(prev, next, prev);

        barrier();
        /*
         * this_rq must be evaluated again because prev may have moved
         * CPUs since it called schedule(), thus the 'rq' on its stack
         * frame will be invalid.
         */
        finish_task_switch(this_rq(), prev);
    }

The key line in context_switch() is

    switch_to(prev, next, prev);

switch_to() is a macro, not a function. Note the comment right above it, /* Here we just switch the register state and the stack. */: it switches the register state and switches from the kernel stack of prev to the kernel stack of next.
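Before switch_to() runs, the context_switch() listing above also decides which address space next will run on. The following toy model restates just that branch logic in ordinary C so it can be followed step by step. The types toy_mm, toy_task and toy_rq and the counter switch_mm_calls are hypothetical stand-ins. The reading that a task with mm == NULL is a kernel thread borrowing the previous task's active_mm is my understanding of the kernel rather than something spelled out in the listing.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for mm_struct, task_struct and rq. */
struct toy_mm {
    int mm_count;              /* reference count, like mm->mm_count */
};

struct toy_task {
    struct toy_mm *mm;         /* NULL for a kernel thread */
    struct toy_mm *active_mm;  /* address space actually in use */
};

struct toy_rq {
    struct toy_mm *prev_mm;    /* borrowed mm to be released after the switch */
    int switch_mm_calls;       /* counts how often a real switch_mm() would run */
};

void toy_switch_address_space(struct toy_rq *rq, struct toy_task *prev,
                              struct toy_task *next)
{
    struct toy_mm *mm = next->mm;
    struct toy_mm *oldmm = prev->active_mm;

    assert(oldmm != NULL);
    if (!mm) {
        /* next has no address space of its own: keep using prev's. */
        next->active_mm = oldmm;
        oldmm->mm_count++;      /* atomic_inc(&oldmm->mm_count) in the kernel */
    } else {
        rq->switch_mm_calls++;  /* stands in for switch_mm(oldmm, mm, next) */
    }

    if (!prev->mm) {
        /* prev was only borrowing oldmm: hand it back via the run queue. */
        prev->active_mm = NULL;
        rq->prev_mm = oldmm;
    }
}
```

Switching from a user task to a kernel thread and back again therefore triggers only one real address-space switch in this model, on the way back.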

Let's look at how switch_to() is implemented. It is located in linux-3.18.6\arch\x86\include\asm\switch_to.h. After entering switch_to(), our call stack is: schedule() -> __schedule() -> context_switch() -> switch_to(). (switch_to() is not really a function, but to show the execution path we'll put it on the call stack anyway.)

    /*
     * Saving eflags is important. It switches not only IOPL between tasks,
     * it also protects other tasks from NT leaking through sysenter etc.
     */
    #define switch_to(prev, next, last) \
    do { \
        /* \
         * Context-switching clobbers all registers, so we clobber \
         * them explicitly, via unused output variables. \
         * (EAX and EBP is not listed because EBP is saved/restored \
         * explicitly for wchan access and EAX is the return value of \
         * __switch_to()) \
         */ \
        unsigned long ebx, ecx, edx, esi, edi; \
        \
        asm volatile("pushfl\n\t"               /* save    flags */ \
                     "pushl %%ebp\n\t"          /* save    EBP   */ \
                     "movl %%esp,%[prev_sp]\n\t" /* save    ESP   */ \
                     "movl %[next_sp],%%esp\n\t" /* restore ESP   */ \
                     "movl $1f,%[prev_ip]\n\t"  /* save    EIP   */ \
                     "pushl %[next_ip]\n\t"     /* restore EIP   */ \
                     __switch_canary \
                     "jmp __switch_to\n"        /* regparm call  */ \
                     "1:\t" \
                     "popl %%ebp\n\t"           /* restore EBP   */ \
                     "popfl\n"                  /* restore flags */ \
                     \
                     /* output parameters */ \
                     : [prev_sp] "=m" (prev->thread.sp), \
                       [prev_ip] "=m" (prev->thread.ip), \
                       "=a" (last), \
                       \
                       /* clobbered output registers: */ \
                       "=b" (ebx), "=c" (ecx), "=d" (edx), \
                       "=S" (esi), "=D" (edi) \
                       \
                       __switch_canary_oparam \
                       \
                       /* input parameters: */ \
                     : [next_sp]  "m" (next->thread.sp), \
                       [next_ip]  "m" (next->thread.ip), \
                       \
                       /* regparm parameters for __switch_to(): */ \
                       [prev]     "a" (prev), \
                       [next]     "d" (next) \
                       \
                       __switch_canary_iparam \
                       \
                     : /* reloaded segment registers */ \
                       "memory"); \
    } while (0)

switch_to() is GCC inline assembly in AT&T syntax. It already carries quite a few comments; let me go through it line by line.

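1. "pushfl\n\t" pushes the flags of the current process (prev) onto its kernel stack.

2. "pushl %%ebp\n\t" pushes the current process's kernel stack frame base (the value of %ebp) onto its kernel stack.

3. "movl %%esp,%[prev_sp]\n\t": together with [prev_sp] "=m" (prev->thread.sp) under /* output parameters */, we can see that %[prev_sp] stands for prev->thread.sp. So this saves the top of the current process's kernel stack (the value of %esp) into prev->thread.sp.

4. "movl %[next_sp],%%esp\n\t": together with [next_sp] "m" (next->thread.sp) under /* input parameters: */, we can see that %[next_sp] stands for next->thread.sp. So this loads the saved top of next's kernel stack (next->thread.sp) into %esp.

5. Taken together, "movl %%esp,%[prev_sp]\n\t" and "movl %[next_sp],%%esp\n\t" complete the kernel stack switch. Every stack operation after "movl %[next_sp],%%esp\n\t" takes place on the kernel stack of next.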

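6. "movl $1f,%[prev_ip]\n\t" stores the address of the label "1:\t" in prev->thread.ip. The next time prev is switched back in by switch_to, it resumes at "1:\t". The two instructions that follow "1:\t", "popl %%ebp\n\t" and "popfl\n", restore prev's kernel stack frame base and flags.

7. "pushl %[next_ip]\n\t": together with [next_ip] "m" (next->thread.ip) under /* input parameters: */, we can see that %[next_ip] stands for next->thread.ip. So this pushes next's saved instruction pointer (the point where it will resume) onto the top of next's kernel stack.

8. "jmp __switch_to\n": __switch_to is a function, but it is entered with jmp rather than call. Its arguments are passed in registers (the regparm convention), as the /* regparm parameters for __switch_to(): */ comment points out:

        [prev] "a" (prev),
        [next] "d" (next)

"a" (the eax register) and "d" (the edx register) carry the two arguments. Because there is no call, the address of the instruction following the jump is not pushed onto next's kernel stack. That does not stop __switch_to from returning: its ret pops whatever is on top of next's kernel stack into the instruction pointer, and next starts executing from that address.

What value does the return pop? It is the next->thread.ip pushed in step 7. next is the process being switched in, so unless it has only just been created (a freshly forked process has thread.ip pointing at ret_from_fork instead), it must have been switched out at some earlier time. Back then it played the role of prev and executed

    "movl $1f,%[prev_ip]\n\t"

which stored the address of "1:\t" in its own thread.ip. The "pushl %[next_ip]\n\t" of the current switch then places that address on top of next's kernel stack.

So when __switch_to returns, it pops the address of "1:\t" into the instruction pointer, and next resumes execution at "1:\t".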
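The push-then-ret trick in steps 6 to 8 is easy to lose track of, so here is a small simulation in C, offered as a sketch rather than as how the kernel is written. The CPU is modeled as a struct with esp, ebp, eflags and eip fields, each task gets a small array as its kernel stack, and the body of __switch_to is reduced to its final ret. TOY_LABEL_1, TOY_NEW_TASK_ENTRY, toy_cpu, toy_task and the function names are all invented for this illustration.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_STACK_WORDS    16
#define TOY_LABEL_1        0x1000UL  /* stands for the address of label "1:" */
#define TOY_NEW_TASK_ENTRY 0x2000UL  /* hypothetical entry point of a new task */

struct toy_task {
    unsigned long kstack[TOY_STACK_WORDS];  /* toy kernel stack, grows downward */
    unsigned long *sp;                      /* models thread.sp */
    unsigned long ip;                       /* models thread.ip */
};

struct toy_cpu {
    unsigned long *esp;
    unsigned long ebp, eflags, eip;
};

static void push(struct toy_cpu *cpu, unsigned long v) { *--cpu->esp = v; }
static unsigned long pop(struct toy_cpu *cpu) { return *cpu->esp++; }

/* Everything up to and including the ret at the end of __switch_to. */
void toy_switch_to(struct toy_cpu *cpu, struct toy_task *prev,
                   struct toy_task *next)
{
    assert(prev != next);
    push(cpu, cpu->eflags);  /* pushfl                          */
    push(cpu, cpu->ebp);     /* pushl %ebp                      */
    prev->sp = cpu->esp;     /* movl %esp, prev->thread.sp      */
    cpu->esp = next->sp;     /* movl next->thread.sp, %esp      */
    prev->ip = TOY_LABEL_1;  /* movl $1f, prev->thread.ip       */
    push(cpu, next->ip);     /* pushl next->thread.ip           */
    cpu->eip = pop(cpu);     /* jmp __switch_to ... ret         */
}

/* The two instructions at label "1:", run by the task that was resumed. */
void toy_label_1(struct toy_cpu *cpu)
{
    cpu->ebp = pop(cpu);     /* popl %ebp */
    cpu->eflags = pop(cpu);  /* popfl     */
}
```

Switching A -> B -> A with this model leaves eip at TOY_LABEL_1, and running toy_label_1() afterwards restores the ebp and eflags values that A pushed before it was switched out.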

9. "popl %%ebp\n\t" /* restore EBP */ and "popfl\n" /* restore flags */ restore next's kernel stack frame base and flags. From here on, next can happily carry on running!

The gray area in switch_to:

    "movl %%esp,%[prev_sp]\n\t"
    "movl %[next_sp],%%esp\n\t"

These two instructions switch from prev's kernel stack to next's kernel stack, but the first instruction that belongs to next is not executed until "1:\t".

The stretch in between,

    "movl $1f,%[prev_ip]\n\t"  /* save    EIP   */
    "pushl %[next_ip]\n\t"     /* restore EIP   */
    __switch_canary
    "jmp __switch_to\n"        /* regparm call  */

already uses next's kernel stack but still counts as executing in prev.

Overall, the sequence

    "movl %%esp,%[prev_sp]\n\t"
    "movl %[next_sp],%%esp\n\t"
    "movl $1f,%[prev_ip]\n\t"  /* save    EIP   */
    "pushl %[next_ip]\n\t"     /* restore EIP   */
    __switch_canary
    "jmp __switch_to\n"        /* regparm call  */
    "1:\t"

is where the boundary between prev and next is blurry: it is hard to say which process's execution it belongs to.