CDH 6.3.1 sql-client: A Pitfall Log

System environment

CentOS 7.7.1908

CDH 6.3.1

Flink 1.9.0 (parcel FLINK-1.9.0-csa1.0.0.0-cdh6.3.0)

Goal

A simple idea, really: use sql-client to run a hello world.

Troubleshooting

[root@slave02 flink]# ./bin/sql-client.sh embedded -d conf/t.yaml -l opt/

Setting HADOOP_CONF_DIR=/etc/hadoop/conf because no HADOOP_CONF_DIR was set.

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Reading default environment from: file:/opt/cloudera/parcels/FLINK-1.9.0-csa1.0.0.0-cdh6.3.0/lib/flink/conf/t.yaml

No session environment specified.

Validating current environment...

Exception in thread "main" org.apache.flink.table.client.SqlClientException: The configured environment is invalid. Please check your environment files again.

at org.apache.flink.table.client.SqlClient.validateEnvironment(SqlClient.java:147)

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:99)

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:194)

Caused by: org.apache.flink.table.client.gateway.SqlExecutionException: Could not create execution context.

at org.apache.flink.table.client.gateway.local.LocalExecutor.getOrCreateExecutionContext(LocalExecutor.java:553)

at org.apache.flink.table.client.gateway.local.LocalExecutor.validateSession(LocalExecutor.java:373)

at org.apache.flink.table.client.SqlClient.validateEnvironment(SqlClient.java:144)

... 2 more

Caused by: org.apache.flink.table.api.NoMatchingTableFactoryException: Could not find a suitable table factory for 'org.apache.flink.table.factories.TableSourceFactory' in the classpath.

Reason: No context matches.

The following properties are requested:

connector.properties.0.key=zookeeper.connect

connector.properties.0.value=slave01.cdh:2181,slave02.cdh:2181,slave03.cdh:2181

connector.properties.1.key=bootstrap.servers

connector.properties.1.value=slave01.cdh:9092,slave02.cdh:9092,slave03.cdh:9092

connector.properties.2.key=group.id

connector.properties.2.value=test-consumer-group

connector.property-version=1

connector.startup-mode=earliest-offset

connector.topic=order_sql

connector.type=kafka

connector.version=2.2.1

format.property-version=1

format.schema=ROW(order_id LONG, shop_id VARCHAR, member_id LONG, trade_amt DOUBLE, pay_time TIMESTAMP)

format.type=json

schema.0.name=order_id

schema.0.type=LONG

schema.1.name=shop_id

schema.1.type=VARCHAR

schema.2.name=member_id

schema.2.type=LONG

schema.3.name=trade_amt

schema.3.type=DOUBLE

schema.4.name=payment_time

schema.4.rowtime.timestamps.from=pay_time

schema.4.rowtime.timestamps.type=from-field

schema.4.rowtime.watermarks.delay=60000

schema.4.rowtime.watermarks.type=periodic-bounded

schema.4.type=TIMESTAMP

update-mode=append

The following factories have been considered:

org.apache.flink.table.catalog.GenericInMemoryCatalogFactory

org.apache.flink.table.sources.CsvBatchTableSourceFactory

org.apache.flink.table.sources.CsvAppendTableSourceFactory

org.apache.flink.table.sinks.CsvBatchTableSinkFactory

org.apache.flink.table.sinks.CsvAppendTableSinkFactory

org.apache.flink.table.planner.StreamPlannerFactory

org.apache.flink.table.executor.StreamExecutorFactory

org.apache.flink.table.planner.delegation.BlinkPlannerFactory

org.apache.flink.table.planner.delegation.BlinkExecutorFactory

org.apache.flink.table.catalog.hive.factories.HiveCatalogFactory

org.apache.flink.formats.json.JsonRowFormatFactory

org.apache.flink.streaming.connectors.kafka.KafkaTableSourceSinkFactory

at org.apache.flink.table.factories.TableFactoryService.filterByContext(TableFactoryService.java:283)

at org.apache.flink.table.factories.TableFactoryService.filter(TableFactoryService.java:191)

at org.apache.flink.table.factories.TableFactoryService.findSingleInternal(TableFactoryService.java:144)

at org.apache.flink.table.factories.TableFactoryService.find(TableFactoryService.java:114)

at org.apache.flink.table.client.gateway.local.ExecutionContext.createTableSource(ExecutionContext.java:265)

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$new$1(ExecutionContext.java:144)

at java.util.LinkedHashMap.forEach(LinkedHashMap.java:684)

at org.apache.flink.table.client.gateway.local.ExecutionContext.<init>(ExecutionContext.java:142)

at org.apache.flink.table.client.gateway.local.LocalExecutor.getOrCreateExecutionContext(LocalExecutor.java:549)

... 4 more

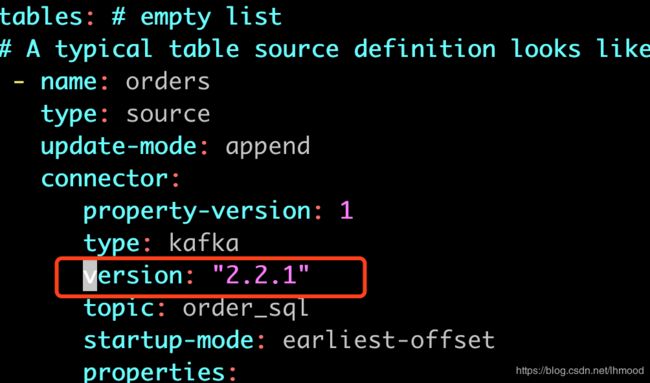

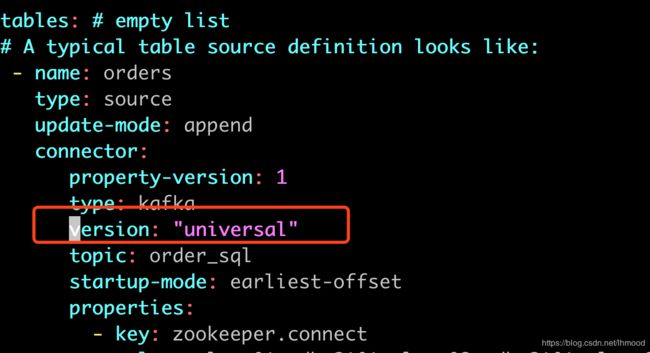
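The reason line "No context matches" means that none of the considered factories declares a required context that matches the requested properties. The only Kafka factory on the list, KafkaTableSourceSinkFactory, is the universal Kafka connector; as far as I know, in Flink 1.9 it requires `connector.version=universal`, so a value such as 2.2.1 (which looks like the Kafka broker version) cannot match. The Python sketch below is a simplified model of that matching step, not Flink's TableFactoryService code; the `KAFKA_UNIVERSAL_CONTEXT` dictionary is my reading of the Flink 1.9 source and should be treated as an assumption.

```python
# Simplified model of factory matching by "required context": a factory is a
# candidate only if every key it requires is present in the requested
# properties with exactly the same value. Illustration only, not Flink code.

# Assumed required context of the universal Kafka factory
# (org.apache.flink.streaming.connectors.kafka.KafkaTableSourceSinkFactory).
KAFKA_UNIVERSAL_CONTEXT = {
    "connector.type": "kafka",
    "connector.version": "universal",
    "connector.property-version": "1",
    "update-mode": "append",
}


def mismatched_keys(required, requested):
    """Return the required keys that are missing or have a different value."""
    return sorted(k for k, v in required.items() if requested.get(k) != v)


# Subset of the requested properties from the error message above.
requested = {
    "connector.type": "kafka",
    "connector.version": "2.2.1",
    "connector.property-version": "1",
    "update-mode": "append",
}
print(mismatched_keys(KAFKA_UNIVERSAL_CONTEXT, requested))  # ['connector.version']

requested["connector.version"] = "universal"
print(mismatched_keys(KAFKA_UNIVERSAL_CONTEXT, requested))  # []
```

The real service also filters by supported properties after this step; the sketch only covers the context check that produced "No context matches".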

Change the connector version (connector -> version) in the configuration file:

Before:

After:

With that, congratulations: you should now see the "squirrel" banner:

[root@slave02 flink]# ./bin/sql-client.sh embedded -d conf/t.yaml -l opt/

Setting HADOOP_CONF_DIR=/etc/hadoop/conf because no HADOOP_CONF_DIR was set.

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Reading default environment from: file:/opt/cloudera/parcels/FLINK-1.9.0-csa1.0.0.0-cdh6.3.0/lib/flink/conf/t.yaml

No session environment specified.

Validating current environment...done.

[ASCII-art squirrel logo with the "Flink SQL Client BETA" banner]

Welcome! Enter 'HELP;' to list all available commands. 'QUIT;' to exit.

Flink SQL>

But don't celebrate too early. Let's try a simple select statement:

Flink SQL> select 'hello world';

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: org.apache.flink.table.planner.delegation.ExecutorBase

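After searching through various sources, I found that org.apache.flink.table.planner.delegation.ExecutorBase is a class in the Blink planner package. I downloaded flink-table-planner-blink_2.11-1.9.0-csa1.0.0.0.jar and put it in /opt/cloudera/parcels/FLINK/lib/flink/opt (the directory passed to sql-client with -l opt/), but the problem persisted. My guess was that the planner in the configuration file also had to be switched to blink. Just do it. After the change, a new problem appeared: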

[root@slave02 flink]# ./bin/sql-client.sh embedded -d conf/t.yaml -l opt/

Setting HADOOP_CONF_DIR=/etc/hadoop/conf because no HADOOP_CONF_DIR was set.

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Reading default environment from: file:/opt/cloudera/parcels/FLINK-1.9.0-csa1.0.0.0-cdh6.3.0/lib/flink/conf/t.yaml

No session environment specified.

Validating current environment...

Exception in thread "main" org.apache.flink.table.client.SqlClientException: The configured environment is invalid. Please check your environment files again.

at org.apache.flink.table.client.SqlClient.validateEnvironment(SqlClient.java:147)

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:99)

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:194)

Caused by: org.apache.flink.table.client.gateway.SqlExecutionException: Could not create environment instance.

at org.apache.flink.table.client.gateway.local.ExecutionContext.createEnvironmentInstance(ExecutionContext.java:195)

at org.apache.flink.table.client.gateway.local.LocalExecutor.validateSession(LocalExecutor.java:373)

at org.apache.flink.table.client.SqlClient.validateEnvironment(SqlClient.java:144)

... 2 more

Caused by: org.apache.flink.table.api.TableException: Could not instantiate the executor. Make sure a planner module is on the classpath

at org.apache.flink.table.client.gateway.local.ExecutionContext.lookupExecutor(ExecutionContext.java:329)

at org.apache.flink.table.client.gateway.local.ExecutionContext.access$100(ExecutionContext.java:104)

at org.apache.flink.table.client.gateway.local.ExecutionContext$EnvironmentInstance.<init>(ExecutionContext.java:360)

at org.apache.flink.table.client.gateway.local.ExecutionContext$EnvironmentInstance.<init>(ExecutionContext.java:342)

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$createEnvironmentInstance$3(ExecutionContext.java:192)

at org.apache.flink.table.client.gateway.local.ExecutionContext.wrapClassLoader(ExecutionContext.java:216)

at org.apache.flink.table.client.gateway.local.ExecutionContext.createEnvironmentInstance(ExecutionContext.java:192)

... 4 more

Caused by: org.apache.flink.table.api.NoMatchingTableFactoryException: Could not find a suitable table factory for 'org.apache.flink.table.delegation.ExecutorFactory' in the classpath.

Reason: No factory supports the additional filters.

The following properties are requested:

class-name=org.apache.flink.table.planner.delegation.BlinkExecutorFactory

streaming-mode=true

The following factories have been considered:

org.apache.flink.table.executor.StreamExecutorFactory

at org.apache.flink.table.factories.ComponentFactoryService.find(ComponentFactoryService.java:71)

at org.apache.flink.table.client.gateway.local.ExecutionContext.lookupExecutor(ExecutionContext.java:320)

... 10 more

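The message "Could not find a suitable table factory for 'org.apache.flink.table.delegation.ExecutorFactory' in the classpath." means that sql-client did not find the Blink package: the only executor factory it considered was the old planner's org.apache.flink.table.executor.StreamExecutorFactory. Without thinking too much, I tried moving the Blink jar into lib/. Here is the result: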

[root@slave02 flink]# ./bin/sql-client.sh embedded -d conf/t.yaml -l opt/

Setting HADOOP_CONF_DIR=/etc/hadoop/conf because no HADOOP_CONF_DIR was set.

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Reading default environment from: file:/opt/cloudera/parcels/FLINK-1.9.0-csa1.0.0.0-cdh6.3.0/lib/flink/conf/t.yaml

No session environment specified.

Validating current environment...

Exception in thread "main" org.apache.flink.table.client.SqlClientException: The configured environment is invalid. Please check your environment files again.

at org.apache.flink.table.client.SqlClient.validateEnvironment(SqlClient.java:147)

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:99)

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:194)

Caused by: org.apache.flink.table.client.gateway.SqlExecutionException: Could not create environment instance.

at org.apache.flink.table.client.gateway.local.ExecutionContext.createEnvironmentInstance(ExecutionContext.java:195)

at org.apache.flink.table.client.gateway.local.LocalExecutor.validateSession(LocalExecutor.java:373)

at org.apache.flink.table.client.SqlClient.validateEnvironment(SqlClient.java:144)

... 2 more

Caused by: java.lang.NoClassDefFoundError: org/apache/flink/table/dataformat/GenericRow

at org.apache.flink.table.planner.codegen.ExpressionReducer.<init>(ExpressionReducer.scala:56)

at org.apache.flink.table.planner.delegation.PlannerContext.createFrameworkConfig(PlannerContext.java:113)

at org.apache.flink.table.planner.delegation.PlannerContext.<init>(PlannerContext.java:96)

at org.apache.flink.table.planner.delegation.PlannerBase.<init>(PlannerBase.scala:87)

at org.apache.flink.table.planner.delegation.StreamPlanner.<init>(StreamPlanner.scala:44)

at org.apache.flink.table.planner.delegation.BlinkPlannerFactory.create(BlinkPlannerFactory.java:50)

at org.apache.flink.table.client.gateway.local.ExecutionContext.createStreamTableEnvironment(ExecutionContext.java:303)

at org.apache.flink.table.client.gateway.local.ExecutionContext.access$200(ExecutionContext.java:104)

at org.apache.flink.table.client.gateway.local.ExecutionContext$EnvironmentInstance.<init>(ExecutionContext.java:361)

at org.apache.flink.table.client.gateway.local.ExecutionContext$EnvironmentInstance.<init>(ExecutionContext.java:342)

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$createEnvironmentInstance$3(ExecutionContext.java:192)

at org.apache.flink.table.client.gateway.local.ExecutionContext.wrapClassLoader(ExecutionContext.java:216)

at org.apache.flink.table.client.gateway.local.ExecutionContext.createEnvironmentInstance(ExecutionContext.java:192)

... 4 more

Caused by: java.lang.ClassNotFoundException: org.apache.flink.table.dataformat.GenericRow

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 17 more
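When a ClassNotFoundException such as the one for org.apache.flink.table.dataformat.GenericRow shows up, one way to check whether any jar already on disk provides the class is to look for its `.class` entry inside the jars directly, since a jar is a zip archive. A minimal Python sketch, assuming the jars sit in flat directories such as Flink's lib/ and opt/; `find_class` and the script name in the comment are hypothetical.

```python
import sys
import zipfile
from pathlib import Path


def class_to_entry(class_name):
    """org.foo.Bar -> org/foo/Bar.class, the path of the class inside a jar."""
    return class_name.replace(".", "/") + ".class"


def find_class(class_name, search_dirs):
    """Return the jars (directly) under search_dirs that contain class_name."""
    entry = class_to_entry(class_name)
    hits = []
    for directory in search_dirs:
        for jar in sorted(Path(directory).glob("*.jar")):
            try:
                with zipfile.ZipFile(jar) as zf:
                    if entry in zf.namelist():
                        hits.append(str(jar))
            except zipfile.BadZipFile:
                continue  # skip corrupt or non-zip files
    return hits


if __name__ == "__main__" and len(sys.argv) >= 3:
    # Hypothetical usage, run from the Flink home directory:
    #   python find_class.py org.apache.flink.table.dataformat.GenericRow lib opt
    for path in find_class(sys.argv[1], sys.argv[2:]):
        print(path)
```

An empty result means none of the scanned directories provides the class, so the missing jar has to come from elsewhere.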

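Searching further, I found that flink-table-runtime-blink_2.11-1.9.0-csa1.0.0.0.jar was missing.

After downloading it into the lib directory and running sql-client again, the little squirrel finally showed up again.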
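Since the Blink planner only started here once both the planner-blink and runtime-blink jars were in lib/, a small pre-flight check can catch a missing one before sql-client is launched. A Python sketch; the jar-name prefixes come from the two jars named above, and both the prefixes and the lib/ path are assumptions for this CSA parcel layout rather than an official requirement. `missing_blink_jars` is a hypothetical helper.

```python
from pathlib import Path

# Prefixes of the two jars this article ended up adding to lib/.
# Assumed requirement for this parcel, not an official Flink list.
BLINK_JAR_PREFIXES = (
    "flink-table-planner-blink_",
    "flink-table-runtime-blink_",
)


def missing_blink_jars(lib_dir, prefixes=BLINK_JAR_PREFIXES):
    """Return the prefixes for which no matching *.jar exists in lib_dir."""
    names = [p.name for p in Path(lib_dir).glob("*.jar")]
    return [pre for pre in prefixes if not any(n.startswith(pre) for n in names)]


if __name__ == "__main__":
    lib = Path("/opt/cloudera/parcels/FLINK/lib/flink/lib")  # assumed lib/ location
    if lib.is_dir():
        missing = missing_blink_jars(lib)
        print("missing:", missing if missing else "none")
```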

Now try the select:

org.apache.flink.util.ConfigurationException: Config parameter 'Key: 'jobmanager.rpc.address' , default: null (deprecated keys: [])' is missing (hostname/address of JobManager to connect to).

This requires adding the following settings to /opt/cloudera/parcels/FLINK/lib/flink/conf/flink-conf.yaml:

jobmanager.rpc.address: localhost

jobmanager.rpc.port: 6123
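Flink 1.9 does not read flink-conf.yaml with a full YAML parser; as far as I know, its GlobalConfiguration treats each line as a single `key: value` pair split on the first ": ", drops everything after `#`, and skips malformed lines. The Python sketch below models that behavior to check that the two keys above are really set; it is a simplified model rather than Flink's code, and the helper names are hypothetical.

```python
REQUIRED_KEYS = ("jobmanager.rpc.address", "jobmanager.rpc.port")


def parse_flink_conf(text):
    """Parse flink-conf.yaml content line by line as simple key/value pairs."""
    conf = {}
    for line in text.splitlines():
        content = line.split("#", 1)[0].strip()  # drop comments
        if not content:
            continue
        parts = content.split(": ", 1)  # split on the first ": " only
        if len(parts) != 2:
            continue  # malformed line, e.g. "key:value" without a space
        key, value = parts[0].strip(), parts[1].strip()
        if key and value:
            conf[key] = value
    return conf


def missing_keys(text, required=REQUIRED_KEYS):
    """Return the required keys that are absent from the parsed config."""
    conf = parse_flink_conf(text)
    return [key for key in required if key not in conf]


sample = """\
# jobmanager.rpc.address: commented-out lines are ignored
jobmanager.rpc.address: localhost
jobmanager.rpc.port: 6123
"""
print(missing_keys(sample))  # []
print(missing_keys("jobmanager.rpc.port: 6123"))  # ['jobmanager.rpc.address']
```

One practical consequence of this line-based format: a key written as `jobmanager.rpc.address:localhost` (no space after the colon) is silently ignored.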

Run the SQL again:

This time it reports connection refused.
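"Connection refused" means nothing accepted a TCP connection at the configured jobmanager.rpc.address and jobmanager.rpc.port, which usually points to no JobManager running there. A quick Python check, assuming the localhost:6123 values configured above; `jobmanager_reachable` is a hypothetical helper.

```python
import socket


def jobmanager_reachable(host="localhost", port=6123, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened.

    Connection refused, timeouts and name-resolution errors all raise
    OSError subclasses, which are reported as False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Values from the flink-conf.yaml settings above.
    print(jobmanager_reachable("localhost", 6123))
```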

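In the end it turned out that the JobManager had never been started. Having got this far, I went back to the official documentation and decided to try starting a yarn-session; it then complained that the YARN-related libraries could not be found, so I followed this fellow's article to work around it: https://www.tfzx.net/index.php/article/2763037.html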

To sum up, the correct way to start yarn-session.sh is:

export HADOOP_CLASSPATH=`hadoop classpath`

yarn-session.sh -d -s 2 -tm 800 -n 2
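The two shell lines above can also be driven from Python, for example from a deployment script. This sketch mirrors them: it sets HADOOP_CLASSPATH to the output of `hadoop classpath` and launches yarn-session.sh with the same flags (-d detaches; the -s, -tm and -n values are copied verbatim). It assumes `hadoop` and `yarn-session.sh` are on the PATH and only launches anything when both are found; the helper names are hypothetical.

```python
import os
import shutil
import subprocess


def yarn_session_env(base_env=None, hadoop_cmd="hadoop"):
    """Copy the environment and set HADOOP_CLASSPATH from `hadoop classpath`,
    the equivalent of: export HADOOP_CLASSPATH=`hadoop classpath`."""
    env = dict(os.environ if base_env is None else base_env)
    result = subprocess.run(
        [hadoop_cmd, "classpath"], check=True, capture_output=True, text=True
    )
    env["HADOOP_CLASSPATH"] = result.stdout.strip()
    return env


def yarn_session_cmd(slots=2, tm_memory=800, containers=2):
    """Build the command used above: yarn-session.sh -d -s 2 -tm 800 -n 2."""
    return ["yarn-session.sh", "-d", "-s", str(slots),
            "-tm", str(tm_memory), "-n", str(containers)]


if __name__ == "__main__" and shutil.which("hadoop") and shutil.which("yarn-session.sh"):
    subprocess.run(yarn_session_cmd(), env=yarn_session_env(), check=True)
```

Computing the classpath once and passing it through `env` keeps the parent process environment untouched, much like running the export in a subshell.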

Run sql-client.sh once more:

SQL Query Result (Table)

Table program finished. Page: Last of 1 Updated: 21:40:45.783

EXPR$0

hello world

Q Quit + Inc Refresh G Goto Page N Next Page O Open Row

That more or less wraps up the pitfalls; I will keep exploring from here.

References

1. https://www.tfzx.net/index.php/article/2763037.html

2. https://www.cnblogs.com/frankdeng/p/9400627.html

3. https://www.jianshu.com/p/c47e8f438291

4. https://www.jianshu.com/p/680970e7c2d9